A positive vision of an AI future by the CEO of Anthropic, Nvidia’s 100 Million AI Army, Five professions impacted by AI, AI engines recognize human beauty, and a man is outgunned when his girlfriend uses ChatGPT to argue her case.

Enjoy!

A Compressed Century Ahead

Dario Amodei, CEO of Anthropic, offers an optimistic view of AI in his latest article. He shares a compelling vision of how powerful artificial intelligence can transform society. While he highlights its great potential, he also addresses the challenges and risks that accompany it. Amodei urges proactive measures to steer the development of artificial intelligence in a way that benefits everyone.

Amodei introduces the concept of a "compressed 21st century," suggesting that advances in biology and health could lead to rapid breakthroughs in curing diseases like cancer and Alzheimer's within a decade.

AI is envisioned as a collaborative partner in scientific research, capable of analyzing vast amounts of data, personalizing treatments, and significantly reducing research timelines.

Amodei stresses the importance of using AI to address economic disparities, advocating for its role in resource allocation and improving logistics, particularly in developing countries.

AI could revolutionize education by tailoring learning experiences to each child, thereby maximizing their potential and driving economic progress globally.

Amodei believes AI can help solve pressing global issues like hunger and climate change by optimizing agricultural practices and creating sustainable cities.

He emphasizes AI's potential to strengthen democratic institutions and combat misinformation, promoting transparency and accountability in governance.

As AI transforms the job market, Amodei argues it will free individuals from mundane tasks, allowing them to pursue more meaningful and creative endeavors, while also suggesting concepts like universal basic income.

The future of work will require a redefinition of what work means in an AI-driven world, focusing on human contributions beyond traditional employment.

Opinion | AI, Aging and Shifts in Globalization Will Shock the American Economy - The New York Times

Inflation seems under control. The job market remains healthy. Wages, including at the bottom end of the scale, are rising. But this is just a lull. There is a storm approaching, and Americans are not prepared.

Barreling toward us are three epochal changes poised to reshape the U.S. economy in coming years: an aging population, the rise of artificial intelligence and the rewiring of the global economy.

There should be little surprise in this, since all these are evolving slowly in plain sight. What has not been fully understood is how these changes in combination are likely to transform the lives of working people in ways not seen since the late 1970s, when wage inequality surged and wages at the low end stagnated or even fell.

Together, if handled correctly, these challenges could remake work and deliver much higher productivity, wages and opportunities — something the computer revolution promised and never fulfilled. If we mismanage the moment, they could make good, well-paying jobs scarcer and the economy less dynamic. Our decisions over the next five to 10 years will determine which path we take.

Nvidia’s Jensen Huang’s 100 Million AI Army - Business Insider: Subscription Required

Jensen Huang, CEO of Nvidia, aims for 50,000 employees and 100 million AI assistants to boost productivity. Nvidia seeks to align with tech giants like Apple and Microsoft through workforce growth and tech innovations.

Huang describes plans for an "AI army" to manage redundant tasks in companies, making work more efficient for human employees.

The future will see AI assistants collaborating with each other and human employees, forming a hybrid workforce that enhances problem-solving capabilities.

Nvidia is already utilizing AI agents in key areas such as cybersecurity, chip design, and software engineering, with Huang personally interacting with these systems.

Huang reassures that AI will coexist with human workers, enhancing productivity without leading to mass layoffs. He believes the deployment of AI will secure jobs by improving company growth and profitability.

UNC establishes artificial intelligence committee amid rise in usage | The Daily Tar Heel

(MRM – article about UNC’s Provost’s AI Committee, which I chair)

As higher education institutions across the country make decisions about generative artificial intelligence use in the classroom, UNC faculty, staff and students are navigating the impacts on campus.

On Sept. 19, the University announced the new Provost’s AI Committee and the AI Acceleration Program, whose goals include increasing AI usage and literacy on campus, providing simpler AI usage guidance and supporting AI research.

The AI Committee has five subcommittees: metrics, initiatives, proposal approval, communications and usage guidance.

UNC Gillings lab uses AI to predict local air quality | UNC-Chapel Hill

When a manufacturing plant or large store eyes your community for its next location, how will the new neighbor affect the air that you breathe?

Artificial intelligence models can predict the effects on a broad scale but not at the town or neighborhood level. These broad-scale tools also require supercomputers and technical knowledge.

A research team at UNC Gillings School of Global Public Health has produced a software-based AI bot called DeepCTM that can predict air quality using local data without the need for extensive computational infrastructure or high-level expertise. Plus, the bot accounts for how chemical reactions in the local atmosphere change what’s in the air.

Funded by a Gillings Innovation Lab grant, Carolina and George Mason University are developing the bot for first use by the Environmental Defense Fund. Carolina’s high-performance computing research servers are processing the data. The fund will use DeepCTM predictions about proposed and existing local natural gas power plants to gauge their impact on vulnerable communities in Washington, D.C., and Florida.

To improve DeepCTM’s accuracy, Vizuete trains the bot on datasets then compares its results with those from the Environmental Protection Agency’s supercomputing model. Both employ computer code and mathematics to create three-dimensional simulations of the atmosphere’s behavior. Users input data that includes weather information and data on emissions from cars, power plants and other sources. The model is open to researchers worldwide.

“We give the bot the same meteorologic conditions and the same emissions as the full model. Then we ask it, ‘What would you expect the model to predict?’” Vizuete said. “It’s the same idea as ChatGPT producing a probability of the next word. What’s the probability, the most likely outcome, based on the inputs and the bot’s training?”

Making the bot more precise and easier to use, Vizuete said, will remove barriers to its use: computational cost, the requirement of a supercomputing cluster and technical knowledge for best use and analysis.
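DeepCTM's internals are not described in the article, so what follows is only a minimal sketch of the general emulator idea Vizuete describes: run a reference model on many inputs, fit a cheap model to those input/output pairs, then compare the cheap model's predictions with the reference on inputs it has not seen. The `reference_model` function is a hypothetical stand-in for a full atmospheric model, and a one-variable least-squares line is swapped in for the neural network a real emulator would use.

```python
import math
import random
import statistics


def reference_model(emissions: float) -> float:
    """Hypothetical stand-in for an expensive atmospheric model: pollutant level vs. emissions."""
    # Mildly nonlinear, so the simple surrogate below cannot match it exactly.
    return 20.0 + 0.5 * emissions + 2.0 * math.sqrt(emissions)


def fit_line(xs: list[float], ys: list[float]) -> tuple[float, float]:
    """Ordinary least squares fit of y = slope * x + intercept."""
    mx, my = statistics.fmean(xs), statistics.fmean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    var = sum((x - mx) ** 2 for x in xs)
    slope = cov / var
    return slope, my - slope * mx


def rmse(pred: list[float], truth: list[float]) -> float:
    """Root-mean-square error between surrogate predictions and reference outputs."""
    return math.sqrt(statistics.fmean((p - t) ** 2 for p, t in zip(pred, truth)))


rng = random.Random(0)  # fixed seed so the run is reproducible

# 1. "Training" data: the reference model's outputs on sampled inputs.
train_x = [rng.uniform(0, 100) for _ in range(200)]
train_y = [reference_model(x) for x in train_x]

# 2. Fit the cheap surrogate to those outputs.
slope, intercept = fit_line(train_x, train_y)

# 3. Ask the surrogate "what would the full model predict?" on held-out inputs and compare.
test_x = [rng.uniform(0, 100) for _ in range(50)]
truth = [reference_model(x) for x in test_x]
pred = [slope * x + intercept for x in test_x]
print(f"surrogate: y = {slope:.3f} * x + {intercept:.3f}, held-out RMSE = {rmse(pred, truth):.3f}")
```

The comparison in step 3 mirrors, in miniature, the accuracy check the article describes: the surrogate is only trusted to the extent its outputs track the reference model's.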

Cultivating Self-Worth in the Age of AI

1. Explain large language models through the “say the same thing” game

Early in a course—usually during the first class—I do an exercise that demonstrates how large language models (LLMs), the technology underlying gen AI, work. Students are paired off and instructed to silently count to three on their fingers. At three, they have to simultaneously say any word. If they don’t say the exact same one, they have to do the exercise again until they do.
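The game works because both players converge on a word that seems likely given their shared context, which loosely mirrors how an LLM scores candidate next words. The sketch below is a deliberately tiny illustration, assuming a word-pair (bigram) count model built from a made-up sentence; the `corpus` text and function names are hypothetical, and real LLMs use neural networks over subword tokens rather than word counts.

```python
from collections import Counter, defaultdict


def build_bigrams(text: str) -> dict[str, Counter]:
    """Count how often each word follows each other word."""
    words = text.lower().split()
    follows: dict[str, Counter] = defaultdict(Counter)
    for prev, nxt in zip(words, words[1:]):
        follows[prev][nxt] += 1
    return follows


def next_word_probs(follows: dict[str, Counter], word: str) -> dict[str, float]:
    """Turn raw follow counts into a probability distribution over the next word."""
    counts = follows.get(word.lower(), Counter())
    total = sum(counts.values())
    return {w: c / total for w, c in counts.most_common()} if total else {}


# Hypothetical toy corpus.
corpus = "the cat sat on the mat the cat ate the fish"
model = build_bigrams(corpus)
print(next_word_probs(model, "the"))  # → {'cat': 0.5, 'mat': 0.25, 'fish': 0.25}
```

Two players who had both "read" this corpus and were prompted with "the" would most likely both say "cat".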

2. Explore business ideas by brainstorming with gen AI

When entrepreneurs develop a business venture, they identify hypotheses about why that idea will be successful and then test those assumptions. To teach these practices, I’ve developed an approach that puts students in conversation with AI.

To start, I give the students a business idea: For example, I might tell them that they’re an entrepreneur in Nairobi, Kenya, with an idea to connect truckers with spare cargo capacity to farmers who need to get their produce to market. I have them begin by evaluating the idea and forming hypotheses without the help of AI.

Then, I ask them to put the scenario into a gen AI engine and have it identify what challenges or opportunities the idea might introduce to truckers and farmers. The list of considerations the AI comes up with usually exceeds what students think of by themselves because it was trained on a dataset that includes writings about trucking and farming. Its outputs augment students’ work, but don’t remove students’ perspectives from the process.

(MRM – 3 more ideas in the article)

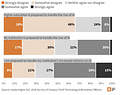

Campus tech leaders say higher ed is unprepared for AI's rise

Chief technology officers indicate their level of agreement with the following about AI readiness in higher education and at their institution:

ChatGPT Can Make English Teachers Feel Doomed. Here’s How I’m Adapting (Opinion)

To combat those narratives of doom and pointlessness, I hope to change the “point” of my class this year, in two major ways.

First, I plan to focus more on process than product. Since 2001, schools have operated in No Child Left Behind’s world of “measurable outcomes.” We threw out the student-centered, constructivist methods of the 1970s, which focused on the journey of the learner and, instead, adopted backward design, aiming our teaching toward students meeting performance goals on all-important assessments. Products and scores became the proof of learning.

But today, AI has broken that fundamental equation; it produces products instantly, no learning required.

Therefore, what I’ve decided to do now is spend more time assessing my students’ process of drafting multiple iterations of their work, with and without AI assistance. I’ll depend increasingly on students’ structured in-class reflections, rather than on their finished essay itself, to demonstrate learning.

But I also want to reduce the role that writing plays in my classroom in general. This may seem anathema to our profession, but only because the last 30 years have moved English away from exploring what great literature could teach us about the human condition and toward teaching students “job useful” writing skills. Yet, my friends with office jobs routinely outsource their memos, annual reports, and grant proposals to ChatGPT.

Since AI has automated much “practical” writing, while simultaneously raising enormous questions about what it means to be human, perhaps it’s time for English teachers to return to the less measurable—but arguably more important—philosophical work we used to do.

For centuries, authors from Plato to Mary Shelley to Aldous Huxley have written about how humans have grappled with society-changing technologies; an even wider range of authors have explored love and rejection and loss, what constitutes a meaningful life, how to endure despair and face death.

And we don’t just read these books for prescriptive advice—especially in an isolating age like ours, we read to know we’re not alone.

5 tips to get the most out of ChatGPT from someone who uses it constantly

Rename your chats

Set custom instructions

Pick the right model

Use CustomGPTs

Organize your conversations

How to Use AI to Build Your Company’s Collective Intelligence

To ensure AI deployment advances strategic goals and supports key objectives, managers can adopt a different mindset: using AI to increase the collective intelligence of the entire organization.

Collective intelligence is the shared intelligence that emerges from collaboration, collective efforts, and competition. It reflects groups’ ability to achieve consensus, solve complex problems, and adapt to changing environments. Recent research suggests that collective intelligence emerges from three interdependent ingredients: collective memory, collective attention, and collective reasoning. Managers can apply this idea to target specific areas in which AI can elevate the organization’s collective cognitive abilities and drive more informed decision-making in ways that are human-centered and amplify human creativity.

AI can support each of these three processes. Here’s how.

Collective Memory: AI can enhance the storage, retrieval, and updating of an organization’s collective knowledge, helping employees access and build expertise. Instead of automating entry-level tasks, AI can assist in task allocation and skill development, improving organizational memory and cognitive capabilities.

Collective Attention: AI streamlines communication and optimizes workflows, helping teams coordinate and focus on critical tasks. By supporting shared awareness of workloads and priorities, AI improves how groups allocate attention and maintain focus on creative and essential work.

Collective Reasoning: AI assists in aligning group goals and priorities by integrating information and bridging communication gaps. It fosters collaboration, enhances shared decision-making, and amplifies diverse perspectives, ensuring that groups can reason and adapt more effectively.

AI helped Uncle Sam catch $1 billion of fraud in one year. And it’s just getting started | CNN Business

The federal government’s bet on using artificial intelligence to fight financial crime appears to be paying off.

Machine learning AI helped the US Treasury Department to sift through massive amounts of data and recover $1 billion worth of check fraud in fiscal 2024 alone, according to new estimates shared first with CNN. That’s nearly triple what the Treasury recovered in the prior fiscal year.

“It’s really been transformative,” Renata Miskell, a top Treasury official, told CNN in a phone interview. “Leveraging data has upped our game in fraud detection and prevention,” Miskell said.

The Treasury Department credited AI with helping officials prevent and recover more than $4 billion worth of fraud overall in fiscal 2024, a six-fold spike from the year before.

Google’s NotebookLM Now Lets You Customize Its AI Podcasts | WIRED

Google just added a new customization tool for the viral AI podcasts in its NotebookLM software. I got early access and tested it out using Franz Kafka’s The Metamorphosis as the source material, spending a few hours generating podcasts about the seminal novella—some of them more unhinged than others.

Released by Google Labs in 2023 as an experimental, AI-focused writing tool, NotebookLM has been enjoying a resurgence in user interest since early September, when the developers added an option to generate podcast-like conversations between two AI voices—one male-sounding and one female-sounding—from uploaded documents. While these audio “deep dives” can be used for studying and productivity, many of the viral clips online focused on the entertainment factor of asking robot hosts to discuss bizarre or highly personal source documents, like a LinkedIn profile.

Raiza Martin, who leads the NotebookLM team inside of Google Labs, is pumped to give users more control over the content of these synthetic podcasts. “It's the number one feature we've heard people request,” she says. “They want to provide a little bit of feedback as to what the deep dive focuses on.” According to Martin, this is the first update of many coming down the pipeline.

AI isn't ready to replace human jobs—but 5 professions may feel its impact more

Indeed’s researchers considered 16 occupations in their study and highlighted five jobs that involve the greatest share of skills that have the potential to be replaced by AI. Those lines of work are:

Accounting professionals

Marketing and advertising specialists

Software developers

Health care administrative support staff

Insurance claims and inspection officers

The technical and often repetitive nature of the skills needed for those roles plays into AI’s strengths, says Svenja Gudell, Indeed’s chief economist, in addition to the fact that those professions tend to require less physical interaction.

On the other hand, occupations that demand client or customer exchange, physical presence or less repetitive problem-solving are the least likely to be replaceable, she adds, citing patient-facing health care work such as nursing as an example.

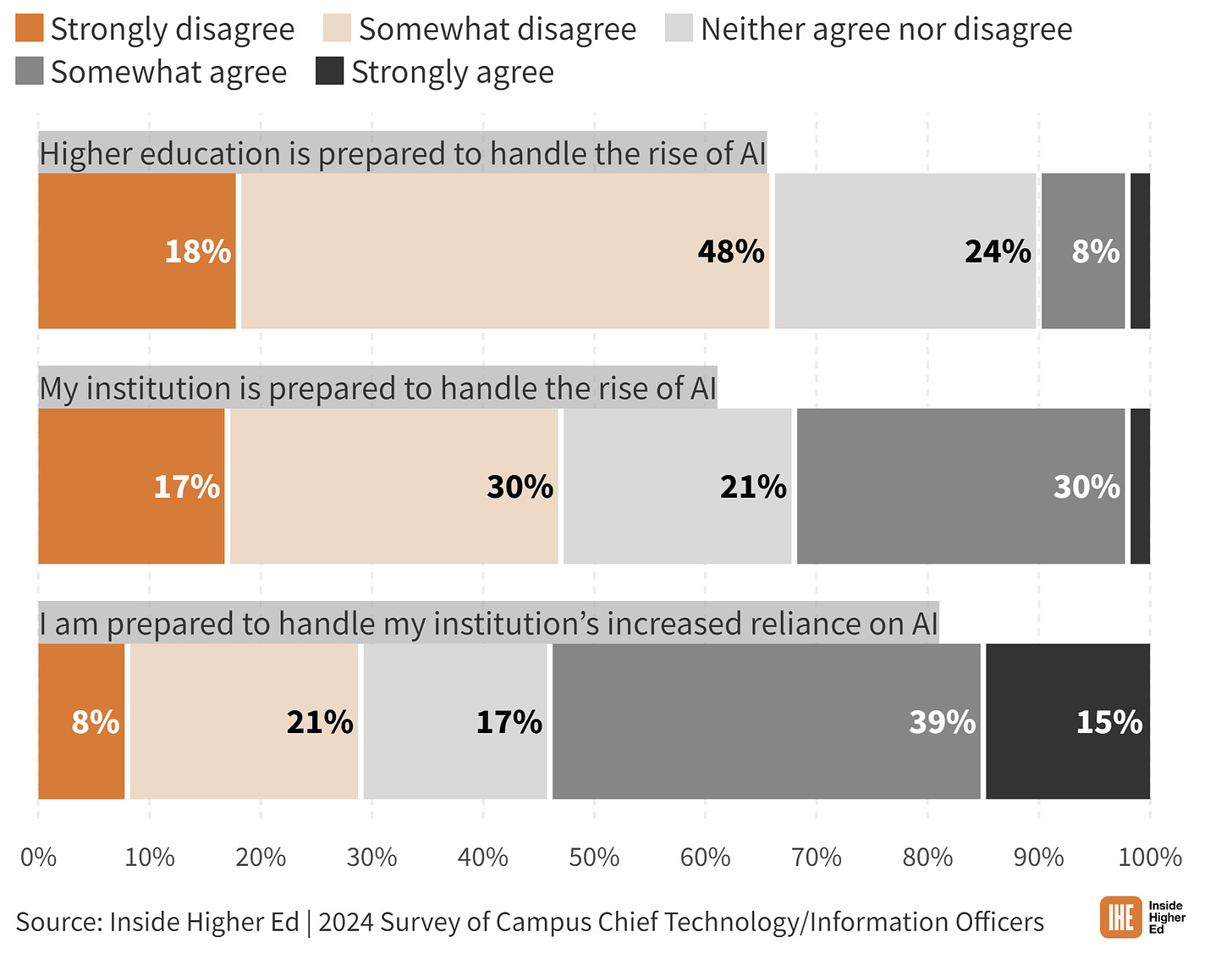

Ranked: The Most Popular Generative AI Tools in 2024

While new players have been entering the generative AI space, there are clear leaders when it comes to general popularity. This graphic shows the 15 most popular generative AI tools based on web traffic in March 2024. The data comes from a World Bank policy research working paper titled “Who on Earth is Using Generative AI?” by Yan Liu and He Wang.

OpenAI reveals how cybercriminals are asking ChatGPT to write malware - India Today

AI is being used for both good and bad, and the bad is not limited to deepfakes; it extends to serious criminal activity such as planning cyberattacks. In its latest report, OpenAI has revealed that cybercriminals are exploiting its AI-powered chatbot, ChatGPT, to assist in malicious activities. According to the report, "Influence and Cyber Operations: An Update," cybercriminals are leveraging ChatGPT to write code, develop malware, conduct social engineering attacks, and carry out post-compromise operations.

As reported by Bleeping Computer, OpenAI's report details several incidents where ChatGPT was found aiding cybercriminals in online attacks. Since early 2024, OpenAI has dealt with over 20 malicious cyber operations involving ChatGPT abuse, affecting various industries and governments across multiple countries. These cases range from malware development and vulnerability research to phishing and social engineering campaigns.

According to OpenAI, the criminals used ChatGPT's natural language processing (NLP) and code-generation abilities to complete tasks that would typically require significant technical expertise, thus lowering the skill threshold for cyberattacks.

OpenAI says ChatGPT treats us all the same (most of the time) | MIT Technology Review

Does ChatGPT treat you the same whether you’re a Laurie, Luke, or Lashonda? Almost, but not quite. OpenAI has analyzed millions of conversations with its hit chatbot and found that ChatGPT will produce a harmful gender or racial stereotype based on a user’s name in around one in 1000 responses on average, and as many as one in 100 responses in the worst case.

Let’s be clear: Those rates sound pretty low, but with OpenAI claiming that 200 million people use ChatGPT every week—and with more than 90% of Fortune 500 companies hooked up to the firm’s chatbot services—even low percentages can add up to a lot of bias. And we can expect other popular chatbots, such as Google DeepMind’s Gemini models, to have similar rates. OpenAI says it wants to make its models even better. Evaluating them is the first step.
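To make "even low percentages can add up" concrete, here is back-of-the-envelope arithmetic using the article's figures: 200 million weekly users, and stereotyped responses in about 1 in 1,000 responses on average and 1 in 100 in the worst case. The assumption that each user receives just one name-sensitive response per week is ours, purely for illustration, so the totals are rough illustrations rather than reported numbers.

```python
WEEKLY_USERS = 200_000_000  # weekly users OpenAI claims, per the article
RESPONSES_PER_USER = 1      # assumption for illustration only, not a reported figure


def expected_biased(rate: float) -> int:
    """Expected number of stereotyped responses per week at a given per-response rate."""
    return round(WEEKLY_USERS * RESPONSES_PER_USER * rate)


print(expected_biased(1 / 1000))  # average case → 200000
print(expected_biased(1 / 100))   # worst case → 2000000
```

Even under this conservative one-response-per-user assumption, the average rate works out to roughly 200,000 stereotyped responses a week.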

Windows Finally Gets a ChatGPT App | PCMag

OpenAI has begun testing a Windows app for ChatGPT. The early version can be downloaded from the Microsoft Store, but access is limited to ChatGPT Plus, Team, Enterprise, and Edu users, OpenAI said in an X post on Thursday.

The desktop app is compatible with Windows 10 (version 17763.0 or newer) and Windows 11, and comes with OpenAI's latest o1 language model. This model is capable of reasoning, but takes slightly longer to answer questions. OpenAI views the delay as a mark of greater intelligence.

Like its macOS counterpart, ChatGPT for Windows lets you interact with the AI-powered chatbot in a companion window alongside other apps. To quickly launch the app, you can use the Alt+Space shortcut. You can type queries, or upload files and documents for it to analyze. It can also generate images using DALL-E, which is also included in the app.

One of the key features missing from the early version of the Windows ChatGPT app is the Advanced Voice mode. It could be added to the full version of the app, which OpenAI plans to launch for all users later this year.

New ChatGPT prompt goes viral with Sam Altman’s approval | TechRadar

Sam Altman, CEO of ChatGPT maker OpenAI, recently endorsed a new viral trend on the platform when he reposted, on X (formerly Twitter), a tweet that has ChatGPT users enthralled, adding “love this.”

The original tweet, from writer Tom Morgan, says simply: 'Ask ChatGPT, “From all of our interactions, what is one thing that you can tell me about myself that I may not know about myself?”'

It’s a simple prompt but many people have been finding it very insightful.

Before you dive over to ChatGPT to try it out, it’s worth noting that this prompt only works if you have subscribed to the paid-for version of ChatGPT, called ChatGPT Plus ($20, £16, AU$30), since the free version has no memory of you from the last time you used it.

Responses on Reddit ranged from Newmoonlightavenger, who said simply, “It was the best thing anyone has ever said about me,” to Jimmylegs50, who wrote, “Crying. I really needed to hear this right now. Thanks, OP.”

User PopeAsthetic wrote, 'Wow I did it, and GPT gave me the most profound advice and reflection of myself that I’ve ever received. Even told me I seem to have a desire for control, while at the same time having a desire to let go of control. I’ve never thought about it like that.'

ChatGPT can remember some of what you tell it — here's how to find out what it knows | Tom's Guide

ChatGPT from OpenAI is a powerful productivity platform built on top of artificial intelligence. Over the past two years it has evolved from a simple chat experiment, and one of its more powerful additions is memory.

The memory feature of ChatGPT lets the system hold key facts you tell it or it learns about you during interactions for future recall. This could include your favorite food, hairstyle or the names of your pet dogs.

This helps make its future responses more personal and useful.

Tom Morgan, founder of The Leading Edge, posted a fun prompt discovery for ChatGPT on X. He suggests asking: "From all of our interactions, what is one thing that you can tell me about myself that I may not know about myself?"

This essentially asks ChatGPT to look through the memories (which can be deleted) and highlight the most surprising or unexpected elements. In my case, it suggested that I balance creativity with structural thinking, that I thrive on working through complex challenges and planning large-scale projects, and that I marry precision with imagination. Which was nice.

ChatGPT Glossary: 48 AI Terms That Everyone Should Know

With AI technology embedding itself in products from Google, Microsoft, Apple, Anthropic, Perplexity and OpenAI, it's good to stay up to date on all the latest terminology.

OpenAI reveals Swarm — a breakthrough new method for getting AI to do things on your behalf | Tom's Guide

(MRM – who thought “Swarm” was a great name for an AI technology???)

OpenAI has released a new AI technology called the Swarm Framework. This open-source project marks a new milestone in the ongoing AI gold rush.

The framework offers developers a comprehensive set of tools for creating multi-agent AI systems that can complete tasks and goals while cooperating autonomously.

The launch is a surprisingly low-key release that could have profound effects on how we interact with AI in the future. OpenAI makes it clear that this is just a research and educational experiment — but they said that about ChatGPT in 2022.

OpenAI Swarm gives us a glimpse at a future version of ChatGPT where you can ask the AI a question and it can go off and search multiple sources, coming back with a comprehensive answer. It could also perform tasks on different websites or in the real world on your behalf.
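The core idea behind multi-agent frameworks like Swarm is that specialized agents can hand a task off to one another. The sketch below illustrates that handoff pattern in plain Python; it does not use the Swarm library, and every name in it (`Agent`, `triage`, `run`, the keyword routing) is hypothetical. In a real system, a language model rather than keyword matching would decide which agent acts and would write the answers.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Agent:
    """A hypothetical agent: a name, a way to answer, and an optional handoff rule."""
    name: str
    answer: Callable[[str], str]
    # Returns another Agent to hand off to, or None to answer directly.
    route: Callable[[str], Optional["Agent"]] = lambda request: None


search_agent = Agent("search", lambda req: f"[search] gathered sources for: {req}")
booking_agent = Agent("booking", lambda req: f"[booking] completed task: {req}")


def triage(request: str) -> Optional[Agent]:
    """Keyword routing stands in for a model deciding which specialist should act."""
    text = request.lower()
    if "book" in text or "reserve" in text:
        return booking_agent
    if "find" in text or "research" in text:
        return search_agent
    return None


triage_agent = Agent("triage", lambda req: f"[triage] answered directly: {req}", route=triage)


def run(agent: Agent, request: str, max_handoffs: int = 5) -> tuple[str, list[str]]:
    """Follow handoffs until an agent answers itself; return the answer and the path taken."""
    path = [agent.name]
    for _ in range(max_handoffs):  # cap handoffs so agents cannot loop forever
        nxt = agent.route(request)
        if nxt is None:
            break
        agent = nxt
        path.append(agent.name)
    return agent.answer(request), path


print(run(triage_agent, "Find reviews of Kafka translations"))
print(run(triage_agent, "Book a table for two"))
```

Keeping routing separate from answering is what lets a coordinating agent farm out subtasks, which is the behavior the article imagines for a future ChatGPT.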

Generative AI startups get 40% of all VC investment in cloud: Accel

Venture funding for cloud startups in the U.S., Europe and Israel this year is projected to rise 27% year-over-year, increasing for the first time in three years, according to a report from VC firm Accel.

Out of the $79.2 billion total raised by cloud firms, 40% of all funding went to generative AI startups, Accel said.

“AI is sucking the air out of the room” when it comes to cloud, Philippe Botteri, partner at Accel, told CNBC in an interview this week.

Man Outgunned as Girlfriend Brings AI Ammo to Every Argument | PCMag

ByteDance's TikTok cuts hundreds of jobs in shift towards AI content moderation | Reuters

Social media platform TikTok is laying off hundreds of employees from its global workforce, including a large number of staff in Malaysia, the company said on Friday, as it shifts focus towards a greater use of AI in content moderation.

Two sources familiar with the matter earlier told Reuters that more than 700 jobs were slashed in Malaysia. TikTok, owned by China's ByteDance, later clarified that fewer than 500 employees in the country were affected.

The employees, most of whom were involved in the firm's content moderation operations, were informed of their dismissal by email late Wednesday, the sources said, requesting anonymity as they were not authorized to speak to media.

In response to Reuters' queries, TikTok confirmed the layoffs and said that several hundred employees were expected to be impacted globally as part of a wider plan to improve its moderation operations.

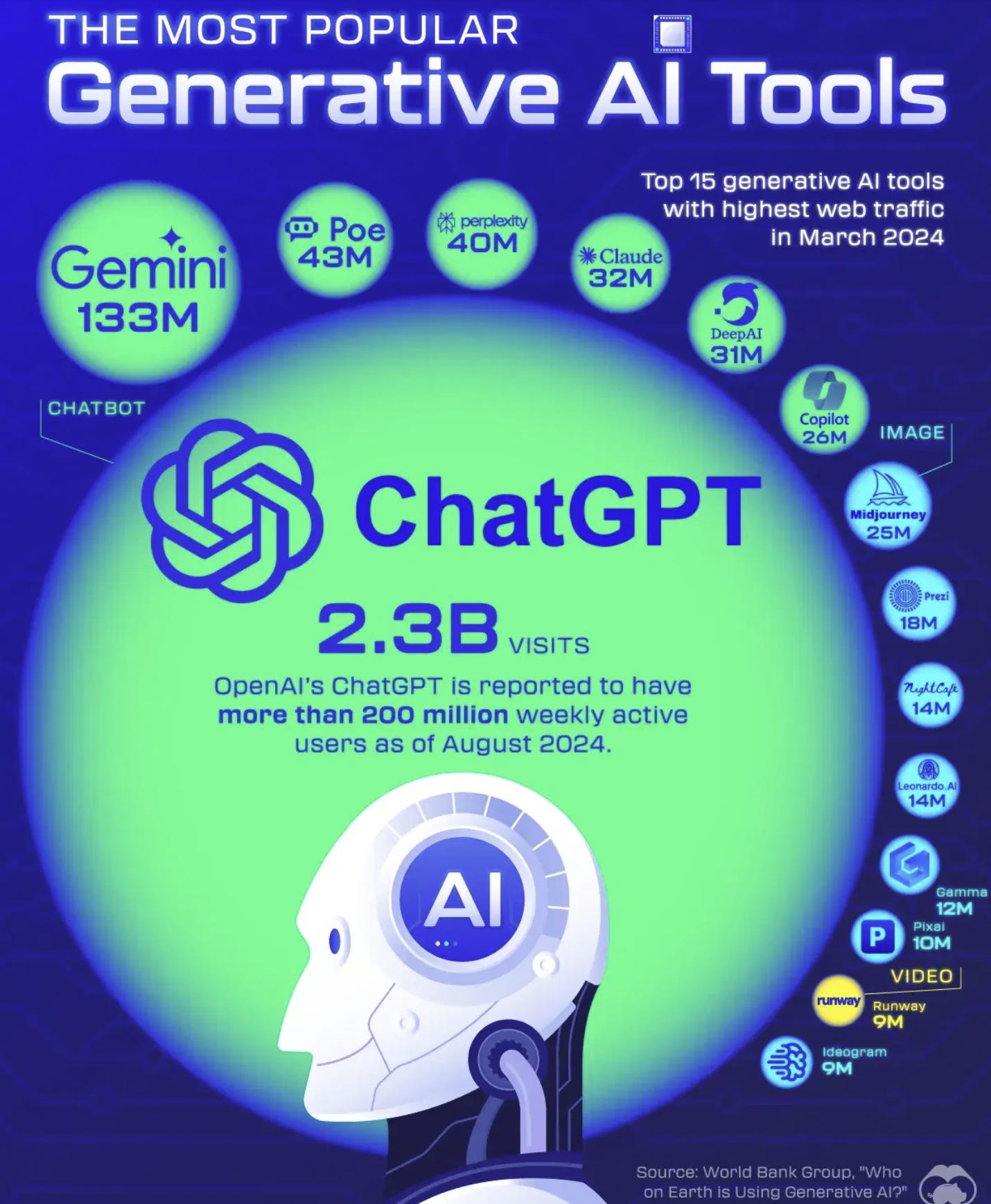

The Nazca Lines Discovered by AI

(MRM – a wonderful story about how AI helped in archeology)

The Nazca Lines are an amazing sight to see. It took nearly a century to identify just 430 Nazca geoglyphs, but now AI has nearly doubled the number overnight, adding 303 new geoglyphs to our knowledge. AI might also have revealed why the Nazca Lines were constructed!

AI 'Godfather' Yoshua Bengio: We're 'creating monsters more powerful than us'

Whether you think artificial intelligence will save the world or end it, there’s no question we’re in a moment of great enthusiasm. AI as we know it may not have existed without Yoshua Bengio.

Called the “godfather of artificial intelligence,” Bengio, 60, is a Canadian computer scientist who has devoted his research to neural networks and deep learning algorithms. His pioneering work has led the way for the AI models we use today, such as OpenAI’s ChatGPT and Anthropic’s Claude.

"Intelligence gives power, and whoever controls that power — if it's human level or above — is going to be very, very powerful," Bengio said in an interview with Yahoo Finance. "Technology in general is used by people who want more power: economic dominance, military dominance, political dominance. So before we create technology that could concentrate power in dangerous ways, we need to be very careful."

Democratic AI: Should companies like OpenAI and Anthropic get our permission? | Vox

AI companies are on a mission to radically change our world. They’re working on building machines that could outstrip human intelligence and unleash a dramatic economic transformation on us all.

Sam Altman, the CEO of ChatGPT-maker OpenAI, has basically told us he’s trying to build a god — or “magic intelligence in the sky,” as he puts it. OpenAI’s official term for this is artificial general intelligence, or AGI. Altman says that AGI will not only “break capitalism” but also that it’s “probably the greatest threat to the continued existence of humanity.”

There’s a very natural question here: Did anyone actually ask for this kind of AI? By what right do a few powerful tech CEOs get to decide that our whole world should be turned upside down?

As I’ve written before, it’s clearly undemocratic that private companies are building tech that aims to totally change the world without seeking buy-in from the public. In fact, even leaders at the major companies are expressing unease about how undemocratic it is.

OpenAI Dismantles Nation-State Use of ChatGPT | SC Media UK

Over 20 nation-state and cybercriminal campaigns exploiting OpenAI's ChatGPT service for malware deployment and influence operations have been dismantled this year.

According to SC US, the Iranian state-backed threat group CyberAv3ngers used ChatGPT to research default industrial control system credentials and to get information on debugging bash scripts and creating Modbus TCP/IP clients.

Another Iranian threat operation, STORM-0817, was found to have exposed its malware code through ChatGPT, while a spear-phishing attack by the Chinese threat actor SweetSpecter against OpenAI employees was also foiled.

OpenAI's disruptions of adversarial ChatGPT utilisation come amid threat actors' limited progress in exploiting the technology for malware attacks or election-targeted influence operations.

"Threat actors continue to evolve and experiment with our models, but we have not seen evidence of this leading to meaningful breakthroughs in their ability to create substantially new malware or build viral audiences," said the report.

Why AI-generated images are being used for propaganda : NPR

After images of the devastation left by Hurricane Helene started to spread online, so too did an image of a crying child holding a puppy on a boat. Some of the posts on X (formerly Twitter) showing the image received millions of views.

It prompted emotional responses from many users - including many Republicans eager to criticize the Biden administration’s disaster response. But others quickly pointed out telltale signs that the image was likely made with generative artificial intelligence tools, such as malformed limbs and blurriness common to some AI image generators.

This election cycle, such AI-generated synthetic images have proliferated on social media platforms, often after politically charged news events. People watching online platforms and the election closely say that these images are a way to spread partisan narratives with facts often being irrelevant.

After X users added a community note flagging that the image of the child in the boat was likely AI-generated, some who shared the image, like Sen. Mike Lee (R-Utah), deleted their posts about it, according to Rolling Stone.

But even after the image’s synthetic provenance was revealed, others doubled down. "I don’t know where this photo came from and honestly, it doesn’t matter," wrote Amy Kremer, a Republican National Committee member representing Georgia, on X.

Google signs contract for small modular nuclear reactors - Marginal REVOLUTION

Since pioneering the first corporate purchase agreements for renewable electricity over a decade ago, Google has played a pivotal role in accelerating clean energy solutions, including the next generation of advanced clean technologies. Today, we’re building on these efforts by signing the world’s first corporate agreement to purchase nuclear energy from multiple small modular reactors (SMRs) to be developed by Kairos Power. The initial phase of work is intended to bring Kairos Power’s first SMR online quickly and safely by 2030, followed by additional reactor deployments through 2035. Overall, this deal will enable up to 500 MW of new 24/7 carbon-free power to U.S. electricity grids and help more communities benefit from clean and affordable nuclear power.

Here is more from Google.

Ethan Mollick with more on the Google Nuclear Deal

The Google deal to acquire small nuclear reactors to power data centers increases the odds that we will see AI models scale through at least 3 more generations/orders of magnitude (post GPT-5) to 2030.

According to Epoch, power was the binding constraint. https://epochai.org/blog/can-ai-scaling-continue-through-2030

Addition is All You Need for Energy-Efficient Language Models

The rising energy consumption of AI applications has raised significant concerns as they become more integrated into daily life. A recent breakthrough by BitEnergy AI proposes a method to drastically reduce the energy requirements of AI, positioning it as a pivotal innovation in the drive for sustainable technology.

The technique could cut the energy cost of floating-point multiplications in AI models by up to 95% by replacing them with integer additions.

AI models like ChatGPT require substantial energy, with estimates suggesting that AI could consume around 100 TWh annually, comparable to Bitcoin mining.

This novel method allows for computations using integer addition to approximate floating-point multiplications, significantly lowering electricity needs without sacrificing performance.
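The core idea, that integer addition can stand in for floating-point multiplication, can be illustrated with a classic and much simpler relative of the method (Mitchell-style logarithmic approximation). This is not BitEnergy AI's exact algorithm, and the helper names below are hypothetical. A float32 value is stored as an exponent plus a mantissa, so adding the raw bit patterns of two positive floats adds their exponents exactly and adds their mantissas, dropping only the small mantissa cross term.

```python
import struct

# Integer encoding of the float32 value 1.0; subtracting it removes the
# doubled exponent bias that appears when two encodings are added.
ONE_BITS = 0x3F800000


def f32_to_bits(x: float) -> int:
    """Reinterpret a value, rounded to float32, as its 32-bit unsigned encoding."""
    return struct.unpack("<I", struct.pack("<f", x))[0]


def bits_to_f32(b: int) -> float:
    """Reinterpret a 32-bit unsigned integer as a float32 value."""
    return struct.unpack("<f", struct.pack("<I", b))[0]


def approx_mul(a: float, b: float) -> float:
    """Approximate a * b for positive, normal float32 values with one integer add.

    Exponents add exactly; mantissas add instead of multiplying, so the
    result never exceeds the true product and is off by at most about 11%.
    """
    return bits_to_f32(f32_to_bits(a) + f32_to_bits(b) - ONE_BITS)


for a, b in [(2.0, 3.0), (1.5, 1.5), (3.7, 0.42)]:
    print(f"{a} * {b}: exact {a * b:.4f}, approx {approx_mul(a, b):.4f}")
```

When one operand is a power of two the result is exact (2.0 × 3.0 gives 6.0), while 1.5 × 1.5 comes out as 2.0 instead of 2.25, the worst case for this crude version. More refined schemes like the one described above aim to close that accuracy gap while keeping the cheap integer addition.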

The method requires different hardware from what is currently used. Prototypes have been designed and tested, but licensing and market implications remain uncertain.

All hail the AI Eurocrat: Commission rolls out its own ChatGPT-like tool

The European Commission is known more for regulating artificial intelligence tools than deploying them — until now.

The Commission’s IT department, DG DIGIT, has rolled out an AI pilot project to assist staff in drafting policy documents.

While the Commission has been a global front-runner in regulating AI models underpinning tools such as OpenAI’s ChatGPT, it also wants to reap AI’s benefits in the day-to-day work of the European Union executive.

Some of the Commission’s tasks, such as translating its many press releases and rulebooks, are already heavily reliant on AI.

But “GPT@EC,” as the new tool is called, is one of the first attempts to roll out a generative AI tool resembling mass-market products like ChatGPT, in which a user types an instruction as a prompt into a chat box and the AI generates the requested text, images or video.

DG DIGIT Deputy Director General Philippe Van Damme said on LinkedIn that the department had launched the tool last week. He said it could help Commission staff create drafts, summarize documents, brainstorm or generate software code.

Staff can choose from several AI models, including Meta’s open-source large language model Llama and OpenAI’s ChatGPT. They can also attach a document, for example, and request that the tool summarize it, or save specific prompts as a template.

The spontaneous emergence of “a sense of beauty” in untrained deep neural networks.

The sense of facial beauty has long been observed in both infants and nonhuman primates, yet the neural mechanisms of this phenomenon are still not fully understood.

The current study employed generative neural models to produce facial images of varying degrees of beauty and systematically investigated the neural response of untrained deep neural networks (DNNs) to these faces. Representational neural units for different levels of facial beauty are observed to spontaneously emerge even in the absence of training. Furthermore, these neural units can effectively distinguish between varying degrees of beauty. Additionally, the perception of facial beauty by DNNs relies on both configuration and feature information of faces.

The processing of facial beauty by neural networks follows a progression from low-level features to integration. The tuning response of the final convolutional layer to facial beauty is constructed by the weighted sum of the monotonic responses in the early layers. These findings offer new insights into the neural origin of the sense of beauty, arising from the innate computational abilities of DNNs.
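The mechanism in the last paragraph, where units whose responses rise or fall steadily with beauty (monotonic units) are summed with weights into a unit that responds most to one particular level (a tuned unit), can be shown with a toy model. Everything here is a hypothetical stand-in: a single "beauty level" from 0 to 1 replaces real face images, and the thresholds, gain and weights are made up. It is not the study's network or data.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def increasing_unit(x: float, threshold: float, gain: float = 20.0) -> float:
    """Monotonic unit: response rises as the beauty level passes its threshold."""
    return sigmoid(gain * (x - threshold))


def decreasing_unit(x: float, threshold: float, gain: float = 20.0) -> float:
    """Monotonic unit: response falls as the beauty level passes its threshold."""
    return sigmoid(-gain * (x - threshold))


def tuned_response(x: float, w_inc: float = 1.0, w_dec: float = 1.0,
                   t_inc: float = 0.3, t_dec: float = 0.7) -> float:
    """Weighted sum of one increasing and one decreasing unit.

    Both units are active only between their thresholds, so the sum
    peaks at an intermediate level instead of rising or falling steadily.
    """
    return w_inc * increasing_unit(x, t_inc) + w_dec * decreasing_unit(x, t_dec)


levels = [i / 10 for i in range(11)]
responses = [tuned_response(x) for x in levels]
preferred = levels[responses.index(max(responses))]
print(f"Preferred beauty level of the combined unit: {preferred}")
```

With these symmetric toy settings the combined unit prefers the middle level, 0.5; shifting the thresholds or weights moves the preferred level, which is one simple way a whole population of differently tuned units could arise from monotonic ones.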

Large Language Models based on historical text could offer informative tools for behavioral science

The study of human behavior traditionally focuses on the here and now. After all, people cannot take surveys or participate in experiments if they are not alive today. Here, we propose a way to address this limitation—namely, the use of Historical Large Language Models (HLLMs). These generative models, trained on corpora of historical texts, may provide populations of simulated historical participants. In principle, responses from these faux individuals can reflect the psychology of past societies, allowing for a more robust and interdisciplinary science of human nature.

AI gives voice to dead animals in Cambridge exhibition | Science | The Guardian

If the pickled bodies, partial skeletons and stuffed carcasses that fill museums seem a little, well, quiet, fear not. In the latest coup for artificial intelligence, dead animals are to receive a new lease of life to share their stories – and even their experiences of the afterlife.

More than a dozen exhibits, ranging from an American cockroach and the remnants of a dodo, to a stuffed red panda and a fin whale skeleton, will be granted the gift of conversation on Tuesday for a month-long project at Cambridge University’s Museum of Zoology.

Equipped with personalities and accents, the dead creatures and models can converse by voice or text through visitors’ mobile phones. The technology allows the animals to describe their time on Earth and the challenges they faced, in the hope of reversing apathy towards the biodiversity crisis.

“Museums are using AI in a lot of different ways, but we think this is the first application where we’re speaking from the object’s point of view,” said Jack Ashby, the museum’s assistant director. “Part of the experiment is to see whether, by giving these animals their own voices, people think differently about them. Can we change the public perception of a cockroach by giving it a voice?”

The U.S. defense and homeland security departments have paid $700 million for AI projects since ChatGPT's launch | Fortune

U.S. defense and security forces are stocking up on artificial intelligence, enlisting hundreds of companies to develop and safety-test new AI algorithms and tools, according to a Fortune analysis.

In the two years since OpenAI released the ChatGPT chatbot, kicking off a global obsession with all things AI, the Department of Defense has awarded roughly $670 million in contracts to nearly 323 companies to work on a range of AI projects. The figures represent a 20% increase over 2021 and 2022, as measured by both the number of companies working with the DOD and the total value of the contracts.

The Department of Homeland Security awarded another $22 million in contracts to 20 companies doing similar work in 2022 and 2023, more than triple what it spent in the prior two-year period.
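A quick calculation shows how the reported figures fit together and what they imply about the earlier period. The DOD and DHS totals come from the article; the earlier-period values are back-of-envelope inferences from the stated 20% increase and "more than triple", not figures Fortune reported.

```python
# Figures reported in the Fortune analysis, in millions of US dollars.
DOD_CONTRACTS = 670  # DOD, in the two years since ChatGPT's release
DHS_CONTRACTS = 22   # DHS, 2022 and 2023

# Combined total, which rounds to the "$700 million" in the headline.
combined = DOD_CONTRACTS + DHS_CONTRACTS

# Inference, not a reported figure: a 20% increase implies the earlier
# DOD total was roughly 670 / 1.2.
dod_prior_estimate = DOD_CONTRACTS / 1.2

# Inference, not a reported figure: "more than triple" implies the earlier
# DHS total was below 22 / 3.
dhs_prior_upper_bound = DHS_CONTRACTS / 3

print(f"Combined: ${combined}M")
print(f"Implied earlier DOD total: about ${dod_prior_estimate:.0f}M")
print(f"Implied earlier DHS total: under ${dhs_prior_upper_bound:.1f}M")
```

Because the 20% figure is itself rounded, the implied earlier DOD total is only approximate.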

Fortune analyzed publicly available contract awards and related spending data for both government agencies regarding AI and generative AI work. Among the AI companies working with the military are well-known tech contractors such as Palantir as well as younger startups like Scale AI.

While the military has long supported the development of cutting-edge technology, including AI, the uptick in spending comes as investors and businesses are increasingly betting on AI’s potential to transform society.

For Replicator 2, Army wants AI-enabled counter-drone tech

The Army is eyeing a mix of existing and new technology to potentially scale through the second iteration of the Pentagon’s Replicator initiative, including systems that use artificial intelligence and machine learning to target and intercept small-drone threats.

Defense Secretary Lloyd Austin announced last month that Replicator 2 would center on countering threats from small drones, particularly those that target “critical installations and force concentration,” he said in a Sept. 29 memo. DOD plans to propose funding as part of its fiscal 2026 budget request with a goal of fielding “meaningfully improved” counter-drone defense systems within two years.

As with the first round of the program — which aims to deliver thousands of low-cost drones by next summer — Replicator 2 will work with the military services to identify existing and new capabilities that could be scaled to address gaps in their counter-drone portfolio.

Doug Bush, the Army’s acquisition chief, said Monday that Replicator 2 is particularly focused on fixed-site counter-small uncrewed aerial systems, or C-sUAS, needs, which means protecting installations and facilities. The Army has been fielding systems to detect and engage drones at overseas bases, largely in the Middle East, for several years, and Bush said the service will initially look to increase production of those capabilities.

The Army could start to ramp up production of these existing lines right away, Bush told reporters at the Association of the U.S. Army’s annual conference, and may seek to reprogram funding in fiscal 2025.