A Survivor's Guide to the (maybe?) Coming AIpocalypse

The debate over whether it will happen, when, and how to think about it.

Glendower: I can call spirits from the vasty deep.

Hotspur: Why, so can I, or so can any man;

But what will they do when you do call for them?

The slightly modified quote above from Shakespeare captures the question on many minds today: in the future, will an ever more powerful AI be beneficial to humanity or end it?

The last few days have seen a raft of essays about the world inevitably ending due to uncontrollable AI, pieces saying “not to worry, it’s all good” and (perhaps most useful of all) articles that provide food for thought on how to think about your life given the uncertainty of it all.

What I’ve done here is not so much add my own take as gather useful essays on this important subject and provide context around them. I hope this is a service, especially to those who may be new to the issue.

I, like many, have been enthralled by but also concerned about the democratization of artificial intelligence via generative AI (“enthralled” is perhaps an appropriate word here, as its root, “thrall,” comes to us from Old Norse via Old English and means “bondman, serf, slave”).

Per a Monmouth University poll, 46% of Americans think AI will do equal amounts of good and harm to society, while only 9% think it will do more good than harm. Meanwhile, 41% think AI will do more harm than good. Furthermore, 55% of Americans are somewhat or very worried AI could spell the end of the human race. This majority is neither alone nor made up of Luddites. There are some very smart people who agree with them.

“The world is going to end (and we can’t do anything about it)” argument

I may be wrong, but I believe the kickoff to the latest round of this debate* was a podcast in which AI guru Eliezer Yudkowsky stated fairly unequivocally that AGI (artificial general intelligence) would inevitably destroy the world.

* This debate about AI ending humanity is not new; it’s been going on for years but only got mass attention with the rise of ChatGPT. In fact, the idea that an AI-guided robot could hurt humans was first posited in the Greek myth of Talos around 700 B.C.E.

If I can summarize his argument, it is this: we will eventually make an AGI that is smarter than us, it will continually become smarter, and though its objective is to do A, in its quest to do A it will do B as it tries to optimize for A. Oh…and B includes ending the human race. This is called the “alignment problem”.

A fatal example of the alignment problem occurred in 2018, when an Uber autonomous vehicle hit and killed Elaine Herzberg as she was crossing the road with her bicycle. The AI in the vehicle a) was not trained to recognize anyone outside a crosswalk (jaywalkers) and b) assumed bicycles were traveling in the same direction as it was. For the last 5 seconds before it hit Herzberg, the AI went back and forth on whether she was a vehicle, a bike or something else until it was too late, and in the meantime it continued to drive forward (which is what it was programmed to do).

A fundamental characteristic of the alignment challenge is that it’s impossible to prevent all potential alignment problems in advance because reality is more complex than what the AI has been trained to deal with. This haiku I penned attempts to capture the idea.

Though meant to do A

The AI does B. And that

Is how the world ends.

If we could foresee all alignment problems, we would never have alignment problems. This is what I (and perhaps others as well) call The Alignment Problem Paradox. The only way to find alignment problems is to unleash the AI system into the real world and hope for the best.

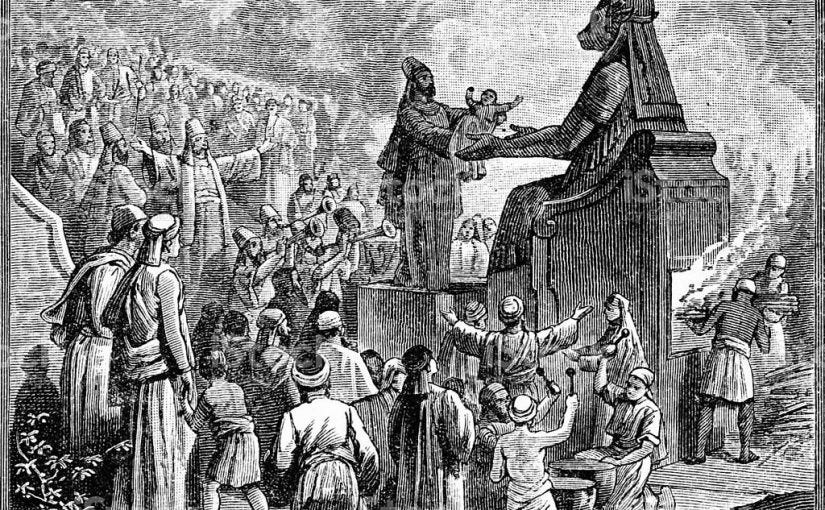

This raises the following question: if we know AI research may lead to the end of humanity, why do we continue doing it? Well, that's due to something Scott Alexander charmingly decided to call the Moloch Trap in his amazing 2014 article, Meditations on Moloch.

Per the Moloch Trap piece…

“Moloch is the personification of the forces that coerce competing individuals to take actions which, although locally optimal, ultimately lead to situations where everyone is worse off. Moreover, no individual is able to unilaterally break out of the dynamic.”

Here is a good example of this dynamic from Moloch: Briefly Explained.

“Take global warming as a real-world example. From each country’s perspective, they’d like to avoid the tail-end risks of global warming. But it costs money to mitigate carbon emissions, be it through policy or technology. So each country does best if everyone else pays the cost (but they don’t), so they can reap the benefits and avoid the costs.

Sitting out may be the best choice from each individual country’s perspective, but the problem is that we don’t actually mitigate climate change if everyone sits out (because then nothing gets done), and then everyone suffers terribly.”
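For readers who like to see the logic spelled out, here is a minimal sketch of that dynamic as a toy payoff calculation. The numbers are my own made-up assumptions (ten hypothetical countries, a mitigation cost of 3, and a shared benefit of 1 per mitigating country), not figures from either essay.

```python
def payoff(mitigates: bool, others_mitigating: int,
           cost: float = 3.0, benefit: float = 1.0) -> float:
    """Payoff to one country: a shared benefit from every country that
    mitigates, minus its own cost if it mitigates too (assumed numbers)."""
    total_mitigators = others_mitigating + (1 if mitigates else 0)
    return benefit * total_mitigators - (cost if mitigates else 0.0)


N = 10  # hypothetical number of countries

# Whatever the other countries do, sitting out pays more for the individual...
sitting_out_always_better = all(
    payoff(False, k) > payoff(True, k) for k in range(N)
)

# ...yet everyone mitigating beats everyone sitting out.
everyone_mitigates = payoff(True, N - 1)
everyone_sits_out = payoff(False, 0)

print(sitting_out_always_better)              # True
print(everyone_mitigates, everyone_sits_out)  # 7.0 0.0
```

With these assumed numbers, each country is always better off by 2 if it sits out, but a world where all ten mitigate leaves each of them with 7 instead of 0. That gap between the individually best move and the collectively best outcome is the trap.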

To really reflect on and understand this phenomenon, I highly recommend you read Alexander’s Meditations on Moloch essay (but set aside some time to do it, as it’s something that takes time to absorb). It is both beautiful and haunting. Here is a segment he quotes from the Apocrypha Discordia:

Time flows like a river.

Which is to say, downhill.

We can tell this because everything is going downhill rapidly.

It would seem prudent to be somewhere else when we reach the sea.

A bit about the name “Moloch”. Moloch is a Canaanite god named in the Bible who was worshipped via child sacrifice (lovely, eh?). So what does this ancient Middle Eastern god have to do with AI?

As you read this, the tech world (like the meme below) is shoveling billions into the boilers of AI firms. Each of these companies is working like a modern alchemist to turn artificial intelligence tools into gold. Thus the Moloch Trap: in the rush (which here has a double meaning) to push the technology further, each firm is optimizing its own situation, while as a group they may be making all of us ultimately worse off. A classic illustration of this problem is “the Paperclip Apocalypse.”

Moving back from alchemists and paperclips to the boiler analogy, the question is: “Is the ship we are on one that is bringing all of us to a new life in the New World, or is it the Titanic?”

While only a small group focused on AGI and tech initially heard Yudkowsky’s podcast, Ezra Klein brought the story to the masses with his New York Times essay, This Changes Everything:

“In a 2022 survey, A.I. experts were asked, “What probability do you put on human inability to control future advanced A.I. systems causing human extinction or similarly permanent and severe disempowerment of the human species?” The median reply was 10 percent.

I find that hard to fathom, even though I have spoken to many who put that probability even higher. Would you work on a technology you thought had a 10 percent chance of wiping out humanity?

We typically reach for science fiction stories when thinking about A.I. I’ve come to believe the apt metaphors lurk in fantasy novels and occult texts. As my colleague Ross Douthat wrote, this is an act of summoning. The coders casting these spells have no idea what will stumble through the portal. What is oddest, in my conversations with them, is that they speak of this freely. These are not naifs who believe their call can be heard only by angels. They believe they might summon demons. They are calling anyway.”

(An aside…this thread is Emile P. Torres’ attempt to explain why AI researchers are summoning up artificial yet supernatural beings through the portal. Torres’ view is that their philosophy is captured by the acronym TESCREAL, which stands for “transhumanism, extropianism, singularitarianism, cosmism, Rationalism, Effective Altruism, and longtermism.” Worth a read.)

Back to the main narrative…of course, it is the advent of ChatGPT that brings these questions to the forefront now. When asked about it and the future, Yudkowsky said he doesn’t think ChatGPT itself is the doom machine, but he does think it may be the match that rekindles the fire.

“Predictions are hard, especially about the future. I sure hope that this is where it saturates — this or the next generation, it goes only this far, it goes no further. It doesn't get used to make more steel or build better power plants, first because that's illegal, and second because the large language model technologies’ basic vulnerability is that it’s not reliable. It's good for applications where it works 80% of the time, but not where it needs to work 99.999% of the time. This class of technology can't drive a car because it will sometimes crash the car.

So I hope it saturates there. I hope they can't fix it. I hope we get, like, a 10-year AI winter after this. This is not what I actually predict. I think that we are hearing the last winds start to blow, the fabric of reality start to fray. This thing alone cannot end the world. But I think that probably some of the vast quantities of money being blindly and helplessly piled into here are going to end up actually accomplishing something. Not most of the money—that just never happens in any field of human endeavor. But 1% of $10 billion is still a lot of money to actually accomplish something.”

“Why can’t the U.S. government just put a hold on AI research or at least slow it down?” you ask. One reason is that the government still hasn’t figured out how to deal with the harms of social media, which is the most impactful instance of AI to date. Just recall the 2018 social media hearings where one senator asked Facebook’s Mark Zuckerberg how the company makes money if it doesn’t charge users. The politicians clearly had no understanding of the technology, the business models or social media’s impact on society. And social media has been around for decades.

But even if the government could find a way to slow AI development down, it cannot afford to, because China, Russia and even our allies will be racing to develop AI capabilities for their own military and commercial advantage. We are in a global Moloch Trap.

Never mind. There’s less than a 1% chance AI will destroy the world.

For the counterargument to the Doomsday School of Thought, one should consider the views of George Mason University economics professor Robin Hanson. Hanson has done groundbreaking work on things such as prediction markets and is both brilliant and grounded in reality, which makes his points well worth considering. In this transcript of a podcast with Richard Hanania called Robin Hanson Says You’re Going to Live, Hanson explains in great depth why he believes an AIpocalypse has a less than 1% chance of occurring. The whole conversation is worth reading, but if you can’t, I think this section does a decent job of making his point. He’s talking about the assumption in the end-of-the-world scenario that it will happen quickly and be due to an extremely rapid increase in the intelligence of the AI, such that it can outsmart our every move like AlphaGo (which so dominates human players that the best Go player in the world gave up playing Go).

“Again, the key thing is there’s this key unusual part of the scenario, that is if you lay out the scenario, you say which of these parts looks the most different from prior experience? It’s this postulate of this sudden acceleration, and very, very large acceleration.

So we have a history of innovation, that is we’ve seen a distribution of the size of innovations in the past. Most innovation is lots of small things. There’s a distribution, a few of them are relatively big, but none of them are that huge.

I would say the largest innovations ever were in essence the innovations underlying the arrival of humans, farming, and industry, because they allowed the world economy to accelerate in its growth, but they didn’t allow one tiny part of the world to accelerate in its growth. They allowed the whole world to accelerate in its growth. So we’re postulating something of that magnitude or even larger, but concentrated in one very tiny system.

That’s the kind of scenario we’re postulating, when this one tiny system finds this one thing that allows it to grow vastly faster than everything else. And I’ve got to say, don’t we need a prior probability on this compared to our data set of experience? And if it’s low enough, shouldn’t we think it’s pretty unlikely?”

Scott Alexander (aka Slate Star Codex and author of Meditations on Moloch) has a more positive take as well in Why I Am Not (As Much Of) A Doomer (As Some People).

His essay includes the predictions below and much more on how to think about both the case for and the case against Doomerism, so it is definitely worth your time.

“The average online debate about AI pits someone who thinks the risk is zero, versus someone who thinks it’s any other number. I agree these are the most important debates to have for now.

But within the community of concerned people, numbers vary all over the place:

Scott Aaronson says 2%

Will MacAskill says 3%

The median machine learning researcher on Katja Grace’s survey says 5 - 10%

Paul Christiano says 10 - 20%

The average person working in AI alignment thinks about 30%

Top competitive forecaster Eli Lifland says 35%

Holden Karnofsky, on a somewhat related question, gives 50%

Eliezer Yudkowsky seems to think >90%

As written this makes it look like everyone except Eliezer is <=50%, which isn’t true; I’m just having trouble thinking of other doomers who are both famous enough that you would have heard of them, and have publicly given a specific number.”

How You Can Think About All This

Now you’ve heard different views on the matter (and there are more out there and surely more to come). So what should you do? Act as if the world is going to end in a couple years? Not have kids? Thrash about in despair?

I found this essay by Zvi, titled AI: Practical Advice for the Worried, to be a very insightful way to think about this matter. After offering some useful ways to think about the many possibilities, the piece offers answers to very practical questions one might have, such as:

Q: Should I still save for retirement?

Short Answer: Yes. Long Answer: Yes, to most (but not all) of the extent that this would otherwise be a concern and action of yours in the ‘normal’ world.

and

Q: Does It Still Make Sense to Try and Have Kids?

Short Answer: Yes. Long Answer: Yes. Kids are valuable and make the world and your own world better, even if the world then ends. I would much rather exist for a bit than never exist at all. Kids give you hope for the future and something to protect, get you to step up…

and after answering a lot of extremely pragmatic questions, ends with this one:

Q: How do you deal with the distraction of all this and still go about your life?

A: The same way you avoid being distracted by all the other important and terrible things and risks out there. Nuclear risks are a remarkably similar problem many have had to deal with. Without AI and even with world peace, the planetary death rate would still be expected to hold steady at 100%. Memento mori.

My takeaway from reading Zvi’s piece was that I should continue to live pretty much as before; continue to grow, plan for the future, save, have kids and enjoy life. After considering this advice, I think it is the proper approach for me.

Amazing Haiku embedded in this posting:

Though meant to do A

The AI does B. And that

Is how the world ends.

Thank you Mark for thinking big and writing well!

I found the Moloch trap to be a particularly unsettling yet insightful concept. We feel compelled to compete and strive to improve our individual circumstances, even if we think that the outcome is likely worse for humanity.

"They believe they might summon demons. They are calling anyway." Yikes!