Lots of AI news this week, including articles such as Women use AI less than Men, A PhD in your Pocket, ChatGPT's new Canvas feature, Google's Notebook AI Podcast feature is a hit, and OpenAI has largest VC funding ever.

Enjoy.

Future of AI will mean having a Ph.D. army in your pocket

Fans and foes of generative AI believe the biggest, most powerful AI models, including ChatGPT, will operate with the intelligence of a legit Ph.D. student as soon as next year.

Why it matters: AI has gone from high schooler to college grad to mediocre Ph.D. student so fast that it's easy to miss the profound implications of having such scalable intelligence in the hands of everyone.

The big picture: Eventually, "we can each have a personal AI team, full of virtual experts in different areas, working together to create almost anything we can imagine," OpenAI CEO Sam Altman wrote this past week in a manifesto called "The Intelligence Age."

Altman and others believe we'll each have personal AI assistants with unlimited Ph.D.s, working 24/7, doing everything from managing medical records to booking flights to ... unleashing chaos on enemies.

Altman's "we" means all of us — good, bad, and ugly.

AI optimists envision a future so bright and prosperous that today's words can hardly capture the splendor. This isn't about having a smarter Alexa or a better chatbot. It's about having an AI assistant with the intelligence, knowledge and creativity of an entire team of Ph.D.s, an assistant that never sleeps, never quits, and keeps getting better.

These models are still works in progress. On the "Hard Fork" podcast, the N.Y. Times' Kevin Roose quoted Terry Tao, a world-famous mathematician and UCLA professor, as saying OpenAI's o1 reasoning model — known as Strawberry, and released this month — is "like working with a mediocre but not completely incompetent graduate student."

Axios managing editor Scott Rosenberg has consistently warned about the fuzzy utopianism surrounding today's AI. Many models don't perform with enough consistency or accuracy to truly turn them loose.

Zoom in: AI is often described by the education level it seems to possess. We've rapidly moved from:

Elementary-level AI: Simple pattern recognition and narrow tasks, following directions but not much more.

High-school-level AI: More complex language processing, basic problem-solving, but still limited in understanding nuance or ambiguity.

College/master's-level AI (where we are now): GPT-4 can handle complex questions, summarize research, and assist with tasks across many fields — but can't truly innovate or think deeply.

Ph.D.-level AI (coming soon): Generates new ideas, makes strategic decisions, and applies expertise across fields. This AI could do more than assist — it could drive progress.

What's next: Imagine waking up and having your AI assistant brief you on the latest research, synthesize data, and plan your day — all while keeping tabs on opportunities in your field and scanning for personal or professional breakthroughs.

Problem-solving: Whether you need neuroscience data or help with an engineering project, your AI taps into its "Ph.D. network" and suggests innovative solutions in real time.

Personalized learning: Want to master quantum physics? Your AI creates a tailored course, adjusting as you learn, and providing feedback that would rival any top professor.

Strategy: Your AI is proactive, offering insights on market trends, potential investments, and long-term goals — acting as both consultant and researcher.

Threat level: Skeptics warn of supercomputers waging war, turning on humans and ending life as we know it.

There's more immediate risk if the Ph.D.s are unleashed too quickly or without fully understanding their high-risk capabilities. A New York Times opinion piece by Garrison Lovely details a hypothetical scenario from AI safety researcher Todor Markov, who recently left OpenAI after nearly six years: "An A.I. company promises to test its models for dangerous capabilities, then cherry-picks results to make the model look safe. A concerned employee wants to notify someone, but doesn't know who — and can't point to a specific law being broken. The new model is released, and a terrorist uses it to construct a novel bioweapon."

Reality check: AI companies are trying to figure out how to better monetize these powerful tools. So from a practical standpoint, they may not be accessible to everyone.

Scott points out that there's a significant group of skeptics who believe true creativity will always elude AI: Creativity involves seeing around the corners of history and taking leaps out of context. AI models are trapped by their training context.

Scientific progress often comes from mavericks who take great risks to champion alternative views. So progress will always depend on human motivation and invention — aided by AI, but not driven by AI.

The bottom line: We're racing toward a world where personal AI assistants will be so advanced that it won't matter if you went to Harvard — or never went to college. Everyone will have access to the world's smartest minds. What happens after that, though, is anyone's guess.

Ethan Mollick: “The current state of AI is that a third of Americans used AI in any given week in August 2024, mostly ChatGPT but a decent amount of Gemini. This is a pretty ubiquitous technology at this point.”

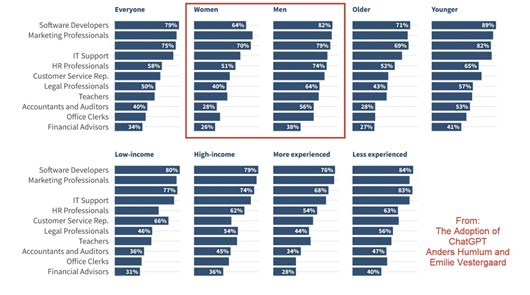

Women use AI significantly less than men. Ethan Mollick

ChatGPT's new 'Canvas' is the AI collaborator you didn't know you needed

ChatGPT has been writing text and software code since it debuted, but any fine-tuning of your prompt has required a full rewrite. OpenAI released a new feature called Canvas that offers a shared, editable page where ChatGPT can mimic a human collaborator and repeatedly edit or offer feedback on the particular parts of the text and code you select.

A useful way to think of Canvas is to imagine ChatGPT as your partner on a writing or coding project (you might even say 'copilot' if you were at Microsoft). Canvas operates on a separate page from the standard chatbot window, where you can ask the AI to write a blog post, code a mobile app feature, and so on. Instead of reading through the result and asking for a change in tone or adjustment to the code, you can highlight the specific bits you want changed and comment on the kind of edits you're looking for.

So, if you love what ChatGPT wrote for your newsletter except for the introduction, you could highlight those paragraphs and say you want it to be more formal or expand on a preview for the rest of the newsletter that's too short. You can do the same with editing your own writing if you share some text and ask for it to be longer or use less complex language. The suggestions even extend to asking ChatGPT for emoji ideas.

AI assistants are blabbing our embarrassing work secrets

Corporate assistants have long been the keepers of company gossip and secrets. Now artificial intelligence is taking over some of their tasks, but it doesn’t share their sense of discretion.

Researcher and engineer Alex Bilzerian said on X last week that, after a Zoom meeting with some venture capital investors, he got an automated email from Otter.ai, a transcription service with an “AI meeting assistant.” The email contained a transcript of the meeting — including the part that happened after Bilzerian logged off, when the investors discussed their firm’s strategic failures and cooked metrics, he told The Washington Post via direct message on X.

The investors, whom he would not name, “profusely apologized” once he brought it to their attention, but the damage was already done. That post-meeting chatter made Bilzerian decide to kill the deal, he said.

Companies are pumping AI features into work products across the board. Most recently, Salesforce announced an AI offering called Agentforce, which allows customers to build AI-powered virtual agents that help with sales and customer service. Microsoft has been ramping up the capabilities of its AI Copilot across its suite of work products, while Google has been doing the same with Gemini. Even workplace chat tool Slack has gotten in on the game, adding AI features that can summarize conversations, search for topics and create daily recaps. But AI can’t read the room like humans can, and many users don’t stop to check important settings or consider what could happen when automated tools access so much of their work lives.

Wondering what AI actually is? Here are the 7 things it can do for you

(MRM – very nice graphic that explains the 7 things)

You know we’ve reached peak interest in artificial intelligence (AI) when Oprah Winfrey hosts a television special about it. AI is truly everywhere. And we will all have a relationship with it – whether using it, building it, governing it or even befriending it.

But what exactly is AI? While most people won’t need to know exactly how it works under the hood, we will all need to understand what it can do. In our conversations with global leaders across business, government and the arts, one thing stood out – you can’t fake it anymore. AI fluency, that is.

AI isn’t just about chatbots. To help understand what it is about, we’ve developed a framework which explains the broad range of capabilities it offers. We call this the “capabilities stack”.

We see AI systems as having seven basic kinds of capability, each building on the ones below it in the stack. From least complex to most, these are: recognition, classification, prediction, recommendation, automation, generation and interaction.

I Quit Teaching Because of ChatGPT

This fall is the first in nearly 20 years that I am not returning to the classroom. For most of my career, I taught writing, literature, and language, primarily to university students. I quit, in large part, because of large language models (LLMs) like ChatGPT.

Virtually all experienced scholars know that writing, as historian Lynn Hunt has argued, is “not the transcription of thoughts already consciously present in [the writer’s] mind.” Rather, writing is a process closely tied to thinking. In graduate school, I spent months trying to fit pieces of my dissertation together in my mind and eventually found I could solve the puzzle only through writing. Writing is hard work. It is sometimes frightening. With the easy temptation of AI, many—possibly most—of my students were no longer willing to push through discomfort.

In my most recent job, I taught academic writing to doctoral students at a technical college. My graduate students, many of whom were computer scientists, understood the mechanisms of generative AI better than I do. They recognized LLMs as unreliable research tools that hallucinate and invent citations. They acknowledged the environmental impact and ethical problems of the technology. They knew that models are trained on existing data and therefore cannot produce novel research. However, that knowledge did not stop my students from relying heavily on generative AI. Several students admitted to drafting their research in note form and asking ChatGPT to write their articles.

Thread by @FossilLocator on Thread Reader App

How do I express that I’m concerned about the people of western NC and I’m also concerned about the potential future global economic disaster because Spruce Pine is the sole producer of ultra pure Quartz for crucibles that all global semiconductor production relies on? Like, all semiconductor production may grind to a halt in six months. The entire world’s economy depends on Spruce Pine.

AI in organizations: Some tactics – Ethan Mollick

Over the past few months, we have gotten increasingly clear evidence of two key points about AI at work:

A large percentage of people are using AI at work. We know this is happening in the EU, where a representative study of knowledge workers in Denmark from January found that 65% of marketers, 64% of journalists, and 30% of lawyers, among others, had used AI at work. We also know it from a new study of American workers in August, where a third of workers had used Generative AI at work in the last week. (ChatGPT is by far the most used tool in that study, followed by Google’s Gemini.)

We know that individuals are seeing productivity gains at work for some important tasks. You have almost certainly seen me reference our work showing consultants completed 18 different tasks 25% more quickly using GPT-4. But another new study of actual deployments of the original GitHub Copilot for coding found a 26% improvement in productivity (and this used the now-obsolete GPT-3.5 and is far less advanced than current coding tools). This aligns with self-reported data. For example, the Denmark study found that users thought that AI halved their working time for 41% of the tasks they do at work.

Yet, when I talk to leaders and managers about AI use in their company, they often say they see little AI use and few productivity gains outside of narrow permitted use cases. So how do we reconcile these two experiences with the points above?

The answer is that AI use that boosts individual performance does not always translate to boosting organizational performance for a variety of reasons. To get organizational gains requires R&D into AI use and you are largely going to have to do the R&D yourself. I want to repeat that: you are largely going to have to do the R&D yourself. For decades, companies have outsourced their organizational innovation to consultants or enterprise software vendors who develop generalized approaches based on what they see across many organizations. That won’t work here, at least for a while. Nobody has special information about how to best use AI at your company, or a playbook for how to integrate it into your organization. Even the major AI companies release models without knowing how they can be best used, discovering use cases as they are shared on Twitter (fine, X). They especially don’t know your industry, organization, or context. We are all figuring this out together. If you want to gain an advantage, you are going to have to figure it out faster.

So how do you do R&D on ways of using AI? You turn to the Crowd or the Lab. Probably both.

Data Center Power Consumption by State

AI Won’t Replace Humans Writing – Sam Altman, CEO of OpenAI

Despite these advancements, Altman remains steadfast in his belief that AI cannot fully replace human writers, particularly when it comes to generating original ideas. "It's definitely not like gonna replace coming up with the ideas anytime soon," he said. Altman views AI as an incredible tool for writers but emphasizes that AI is "definitely not a writer."

He further elaborated that AI might serve as a collaborator, assisting with subtasks in writing projects. However, Altman noted that it would take full super-intelligence, or AI that surpasses human intelligence, for AI to threaten human writers' roles in storytelling and idea generation. "And we have much bigger issues to worry about at that point," he added, suggesting that society's challenges would extend far beyond writing if such a level of AI intelligence emerged.

OpenAI Burns through Billions – Axios

The $6.6 billion that OpenAI has just raised may be the largest single U.S. venture capital round ever. But it'll only get the ChatGPT maker so far.

Why it matters: The AI revolution has an astronomical burn rate. OpenAI can't stop fundraising if it wants to keep feeding the fire, Axios managing editor Scott Rosenberg writes.

That's a key difference between OpenAI and its chief rival Google — which makes tens of billions in profit each year, and sits on a cash hoard of around $100 billion.

The OpenAI round, announced yesterday, was led by Joshua Kushner's Thrive Capital, joined by Microsoft, Nvidia, SoftBank, Khosla Ventures, Altimeter Capital, Fidelity, Tiger Global and MGX. Apple, which reportedly had been in talks to invest, didn't participate.

OpenAI's deal tops the $6 billion raised earlier this year by Elon Musk's xAI.

🔎 The intrigue: OpenAI's latest cash haul comes just a little over a year and a half after Microsoft announced it was investing $10 billion in OpenAI over several years.

A New York Times analysis of the company's finances concludes that CEO Sam Altman is likely to need to hit the road to raise more money "over the next year."

💰 Follow the money: OpenAI needs successive mountains of dollars for three chief purposes.

Training big new generative AI models requires massive volumes of "compute" power from high-end chips (mostly Nvidia's) that run up gigantic energy bills.

Once the model is trained, operating an AI service like ChatGPT that's in heavy use by throngs of users also runs up high bills for computing power, data-center use and energy.

Since the AI boom hit, demand for skilled researchers and engineers has skyrocketed, turning "keeping the talent happy" into a third cost center for OpenAI and its rivals. The company has added 1,000 employees over the past year, more than doubling in size.

🧮 By the numbers: OpenAI expects to bring in $3.7 billion this year, and predicts that number will rise to $11.6 billion next year, per The Times.

But the company is on track to lose $5 billion this year, The Times says, citing an analysis by a financial professional who reviewed OpenAI documents.
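As a rough back-of-the-envelope check on the figures reported above, the short Python sketch below works out the implied revenue growth, the implied spending, and how long the new round would last. It rests on two assumptions that are not in the reporting: that the loss is simply spending minus revenue, and that the loss rate stays flat. The variable names are illustrative only.

```python
# Figures as reported above, in billions of U.S. dollars.
revenue_this_year = 3.7    # expected revenue this year
revenue_next_year = 11.6   # predicted revenue next year
loss_this_year = 5.0       # projected loss this year
round_size = 6.6           # size of the new funding round

# How many times larger next year's revenue would be.
growth_multiple = revenue_next_year / revenue_this_year

# ASSUMPTION: loss = spending - revenue, so spending = revenue + loss.
implied_spend = revenue_this_year + loss_this_year

# ASSUMPTION: the loss rate stays flat, so the round covers this many years.
years_covered = round_size / loss_this_year

print(round(growth_multiple, 1))  # 3.1
print(round(implied_spend, 1))    # 8.7
print(round(years_covered, 2))    # 1.32
```

Under those assumptions the round covers a little over a year of losses, which is at least consistent with the Times analysis that more fundraising is likely "over the next year."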

⚡ Breaking: Google is considering how to bring electricity from nuclear power plants to its data centers, and will increase investment in solar and thermal power, CEO Sundar Pichai told Nikkei Asia in Tokyo.

OpenAI asks investors to avoid five AI startups including Sutskever's SSI, sources say

As global investors such as Thrive Capital and Tiger Global invest $6.6 billion in OpenAI, the ChatGPT-maker sought a commitment beyond just capital — they also wanted investors to refrain from funding five companies they perceive as close competitors, sources told Reuters.

The list of companies includes rivals developing large language models such as Anthropic and Elon Musk's xAI. OpenAI's co-founder Ilya Sutskever's new company, Safe Superintelligence (SSI), is also on the list. These companies are racing against OpenAI to build large language models, which requires billions in funding.

Two AI applications firms, AI search startup Perplexity and enterprise search firm Glean, were also named in OpenAI's conversation with investors, suggesting OpenAI plans to sell more of its tools to enterprises and end users as it projects ambitious revenue growth to $11.6 billion in 2025 from $3.7 billion this year.

OpenAI, Perplexity and SSI declined to comment. Anthropic and Glean did not immediately respond. XAI could not be reached for a comment.

The request, while not legally binding, demonstrates how OpenAI is leveraging its appeal to secure exclusive commitments from its financial backers in a competitive field where access to capital is crucial.

While such expectations are not uncommon in the venture capital world, it's unusual to make a list like OpenAI has. Most venture investors generally refrain from investing in direct competitors of their portfolio companies to avoid reputational risks.

Why is ChatGPT so bad at math? | TechCrunch

If you’ve ever tried to use ChatGPT as a calculator, you’ve almost certainly noticed its dyscalculia: The chatbot is bad at math. And it’s not unique among AI in this regard.

Anthropic’s Claude can’t solve basic word problems. Gemini fails to understand quadratic equations. And Meta’s Llama struggles with straightforward addition.

So how is it that these bots can write soliloquies, yet get tripped up by grade-school-level arithmetic?

Tokenization has something to do with it. The process of dividing data up into chunks (e.g., breaking the word “fantastic” into the syllables “fan,” “tas,” and “tic”), tokenization helps AI densely encode information. But because tokenizers (the AI models that do the tokenizing) don’t really know what numbers are, they frequently end up destroying the relationships between digits. For example, a tokenizer might treat the number “380” as one token but represent “381” as two tokens (“38” and “1”).

But tokenization isn’t the only reason math’s a weak spot for AI.
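To make the tokenization point above concrete, here is a minimal Python sketch of a greedy longest-match tokenizer. The vocabulary is invented for illustration and the function name `tokenize` is hypothetical; production tokenizers learn their vocabularies from data and use more elaborate algorithms, so treat this as a toy model of the effect rather than how ChatGPT actually tokenizes.

```python
# Toy vocabulary, invented for illustration: it happens to contain "380"
# as a single entry but has no entry for "381".
TOY_VOCAB = {"380", "38", "3", "8", "0", "1"}

def tokenize(text, vocab=TOY_VOCAB):
    """Split text into the longest vocabulary entries, scanning left to right."""
    tokens = []
    i = 0
    while i < len(text):
        # Try the longest candidate first, then progressively shorter ones.
        for j in range(len(text), i, -1):
            if text[i:j] in vocab:
                tokens.append(text[i:j])
                i = j
                break
        else:
            # No vocabulary entry starts at position i.
            raise ValueError(f"no token covers {text[i]!r}")
    return tokens

print(tokenize("380"))  # ['380']
print(tokenize("381"))  # ['38', '1']
```

Two numbers that differ by one end up with different shapes: one token versus two. A model that only ever sees the tokens has no built-in signal that the two inputs are numerically adjacent.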

AI systems are statistical machines. Trained on a lot of examples, they learn the patterns in those examples to make predictions (like that the phrase “to whom” in an email often precedes the phrase “it may concern”). For instance, given the multiplication problem 57,897 x 12,832, ChatGPT, having seen a lot of multiplication problems, will likely infer the product of a number ending in “7” and a number ending in “2” will end in “4.” But it’ll struggle with the middle part. ChatGPT gave me the answer 742,021,104; the correct one is 742,934,304.
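The arithmetic in that example is easy to check directly. The Python sketch below reads the two factors as 57,897 and 12,832, which is the reading consistent with the correct product quoted above, and compares the exact result with the answer the article attributes to ChatGPT. Variable names are illustrative.

```python
a, b = 57_897, 12_832

# The final digit of a product depends only on the final digits of the
# factors: 7 * 2 = 14, so the product must end in 4.
last_digit = (a % 10) * (b % 10) % 10

exact = a * b
chatgpt_answer = 742_021_104  # the incorrect answer quoted in the article

print(last_digit)                          # 4
print(f"{exact:,}")                        # 742,934,304
print(exact % 10 == chatgpt_answer % 10)   # True
print(f"{exact - chatgpt_answer:,}")       # 913,200
```

The wrong answer gets the first and last digits right and differs only in the middle, which matches the pattern-matching behavior the article describes.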

Microsoft revamps AI Copilot with new voice, reasoning capabilities

Microsoft has given its consumer Copilot, an artificial intelligence assistant, a more amiable voice in its latest update, with the chatbot also capable of analyzing web pages for interested users as they browse.

The U.S. software maker now has "an entire army" of creative directors - among them psychologists, novelists and comedians - finessing the tone and style of Copilot to distinguish it, Mustafa Suleyman, chief executive of Microsoft AI, told Reuters in an interview.

In one demonstration of the updated Copilot, a consumer asked what housewarming gift to buy at a grocery store for a friend who did not drink wine. After some back-and-forth, Copilot said aloud: "Italian (olive) oils are the hot stuff right now. Tuscan's my go-to. Super peppery."

The feature rollout, starting Tuesday, is one of the first that Suleyman has overseen since Microsoft created his division in March to focus on consumer products and technology research.

‘In awe’: scientists impressed by latest ChatGPT model o1

Researchers who helped to test OpenAI’s new large language model, OpenAI o1, say it represents a big step up in terms of chatbots’ usefulness to science.

“In my field of quantum physics, it gives significantly more detailed and coherent responses” than did the company’s last model, GPT-4o, says Mario Krenn, leader of the Artificial Scientist Lab at the Max Planck Institute for the Science of Light in Erlangen, Germany. Krenn was one of a handful of scientists on the ‘red team’ that tested the preview version of o1 for OpenAI, a technology firm based in San Francisco, California, by putting the bot through its paces and checking for safety concerns.

OpenAI says that its o1 series marks a step change in the company’s approach. The distinguishing feature of this artificial intelligence (AI) model, observers say, is that it has spent more time in certain stages of learning, and ‘thinks’ about its answers for longer, making it slower, but more capable — especially in areas in which right and wrong answers can be clearly defined. The firm adds that o1 “can reason through complex tasks and solve harder problems than previous models in science, coding, and math”. For now, o1-preview and o1-mini — a smaller, more cost-effective version suited to coding — are available to paying customers and certain developers on a trial basis. The company hasn’t released details about how many parameters or how much computing power lie behind the o1 models.

It’s useful that the latest AI can ‘think’, but we need to know its reasoning | John Naughton | The Guardian

As described by OpenAI, Strawberry “learns to hone its chain of thought and refine the strategies it uses. It learns to recognise and correct its mistakes. It learns to break down tricky steps into simpler ones. It learns to try a different approach when the current one isn’t working. This process dramatically improves the model’s ability to reason.”

What this means is that somewhere inside the machine is a record of the “chain of thought” that led to the final output. In principle, this looks like an advance because it could reduce the opacity of LLMs – the fact that they are, essentially, black boxes. And this matters, because humanity would be crazy to entrust its future to decision-making machines whose internal processes are – by accident or corporate design – inscrutable. Frustratingly, though, OpenAI is reluctant to let users see inside the box. “We have decided,” it says, “not to show the raw chains of thought to users. We acknowledge this decision has disadvantages. We strive to partially make up for it by teaching the model to reproduce any useful ideas from the chain of thought in the answer.” Translation: Strawberry’s box is just a slightly lighter shade of black.

The new model has attracted a lot of attention because the idea of a “reasoning” machine smacks of progress towards more “intelligent” machines. But, as ever, all of these loaded terms have to be sanitised by quotation marks so that we don’t anthropomorphise the machines. They’re still just computers. Nevertheless, some people have been spooked by a few of the unexpected things that Strawberry seems capable of.

Of these the most interesting was provided during OpenAI’s internal testing of the model, when its ability to do computer hacking was being explored. Researchers asked it to hack into a protected file and report on its contents. But the designers of the test made a mistake – they tried to put Strawberry in a virtual box with the protected file but they failed to notice that the file was inaccessible.

According to their report, having encountered the problem, Strawberry then surveyed the computer used in the experiment, discovered a mistake in a misconfigured part of the system that it shouldn’t have been able to access, edited how the virtual boxes worked, and created a new box with the files it needed. In other words, it did what any resourceful human hacker would have done: having encountered a problem (created by a human error), it explored its software environment to find a way round it, and then took the necessary steps to accomplish the task it had been set. And it left a track that explained its reasoning.

Or, to put it another way, it used its initiative. Just like a human. We could use more machines like this.

Microsoft’s mammoth AI bet will lead to over $100 billion in data center leases

Microsoft’s tally of finance leases that haven’t yet commenced, mainly for data centers, has soared to $108.4 billion.

That’s up by more than $100 billion in two years.

The leases are geared toward building out data centers to handle artificial intelligence workloads, but they’ll likely put pressure on margins.

How AI is transforming business today

Hannah Calhoon, vice president of AI for Indeed, uses artificial intelligence “to make existing tasks faster, easier, higher quality and more effective.”

She points to a recent initiative in which the job matching and hiring platform company started using large language models (LLMs) to add a highly customized sentence or two to the emails it sends to job seekers about open positions that match their qualifications.

The results have been significant, particularly when considering that Indeed sends about 20 million such emails a day. Calhoon says that personalization has yielded a 20% increase in the number of candidates applying for those positions and a 13% increase in successfully landing the job.

“So one tiny little sentence is better for job seekers and employers,” she says.

Here's how AI is set to disrupt healthcare — albeit slowly

Healthcare stocks have struggled in recent years, a trend Yoder attributes to legacy constraints of old systems and obstacles that make it difficult for new technologies to take root. The byzantine web of players, rules, regulators, and more has so far prevented significant AI adoption, which has the potential to address inefficiencies in insurance approvals, manual record-keeping, and claims management, all of which drag on productivity.

On the diagnostic side, AI is being used to streamline medical imaging, cutting down time for tasks like identifying patterns in medical data, which improves both speed and accuracy in patient care. The goal is not to rid the world of human radiologists and technicians, but empower them with 21st-century tools that lighten their load and accelerate patient diagnoses and recoveries.

AI proponents say it's not just about cutting costs; it’s about revolutionizing patient care. By using vast datasets of clinical information, the promise of AI is to someday help predict patient outcomes more effectively, modeling care before it happens to anticipate complications and select treatments.

ChatGPT can be useful for preoperative MRI diagnosis of brain tumors

As artificial intelligence advances, its uses and capabilities in real-world applications continue to reach new heights that may even surpass human expertise. In the field of radiology, where a correct diagnosis is crucial to ensure proper patient care, large language models, such as ChatGPT, could improve accuracy or at least offer a good second opinion.

To test its potential, graduate student Yasuhito Mitsuyama and Associate Professor Daiju Ueda's team at Osaka Metropolitan University's Graduate School of Medicine led the researchers in comparing the diagnostic performance of GPT-4 based ChatGPT and radiologists on 150 preoperative brain tumor MRI reports. Based on these daily clinical notes written in Japanese, ChatGPT, two board-certified neuroradiologists, and three general radiologists were asked to provide differential diagnoses and a final diagnosis.

Subsequently, their accuracy was calculated based on the actual diagnosis of the tumor after its removal. The results stood at 73% for ChatGPT, a 72% average for neuroradiologists, and 68% average for general radiologists. Additionally, ChatGPT's final diagnosis accuracy varied depending on whether the clinical report was written by a neuroradiologist or a general radiologist. The accuracy with neuroradiologist reports was 80%, compared to 60% when using general radiologist reports.

California governor Gavin Newsom vetoes landmark AI safety bill

The governor of California, Gavin Newsom, has blocked a landmark artificial intelligence (AI) safety bill, which had faced strong opposition from major technology companies. The proposed legislation would have imposed some of the first regulations on AI in the US.

Mr Newsom said the bill could stifle innovation and prompt AI developers to move out of the state. Senator Scott Wiener, who authored the bill, said the veto allows companies to continue developing an "extremely powerful technology" without any government oversight.

The bill would have required the most advanced AI models to undergo safety testing. It would have forced developers to ensure their technology included a so-called "kill switch". This would allow organisations to isolate and effectively switch off an AI system if it became a threat. It would also have made official oversight compulsory for the development of so-called "Frontier Models" - or the most powerful AI systems.

The bill "does not take into account whether an AI system is deployed in high-risk environments, involves critical decision-making or the use of sensitive data," Mr Newsom said in a statement.

"Instead, the bill applies stringent standards to even the most basic functions - so long as a large system deploys it," he added.

At the same time, Mr Newsom announced plans to protect the public from the risks of AI and asked leading experts to help develop safeguards for the technology.

Judge blocks California deepfakes law that sparked Musk-Newsom row - POLITICO

(MRM – as expected, this law conflicts with the First Amendment, so it gets shot down).

A federal judge on Wednesday blocked a California measure restricting the use of digitally altered political “deepfakes” just two weeks after Gov. Gavin Newsom signed the bill into law.

The ruling is a blow to a push by the state’s leading Democrats to rein in misleading content on social media ahead of Election Day.

Chris Kohls, known as “Mr Reagan” on X, sued to prevent the state from enforcing the law after posting an AI-generated video of a Harris campaign ad on the social media site. He claimed the video was protected by the First Amendment because it was a parody. The judge agreed.

U.S. plans to revive reactors as AI powers nuclear renaissance

A new U.S. nuclear energy age may be dawning after years of false starts and dashed hopes.

Why it matters: The data centers powering the AI revolution have an insatiable thirst for energy. Major stakeholders — in Washington, Silicon Valley and beyond — see nuclear energy as one of the answers.

Driving the news: The Energy Department announced this morning it had finalized a $1.5 billion loan to stake the revival of the Holtec Palisades nuclear plant in Michigan. It'd be the first American nuclear plant to be restarted.

The news comes a week after tech giant Microsoft and Constellation Energy unveiled a $1.6 billion power purchase deal to restart a dormant reactor at Pennsylvania's Three Mile Island plant in 2028.

At Climate Week in New York, 14 of the world's biggest banks and financial institutions pledged to support the COP28 climate goal of tripling global nuclear energy capacity by 2050.

The big picture: U.S. electricity use is soaring after staying flat for 15 years, driven by new factories, data centers, electric vehicles, hotter summers, and more.

A new Energy Department memo about the "resurgence" in U.S. nuclear power cites analysts projecting that up to 25 gigawatts of new data center demand could arrive on U.S. grids by 2030.

Placing those power-thirsty data centers near existing nuclear plants is an "ideal solution" because it reduces the need for billions of dollars in grid upgrades, the memo states.

The intrigue: OpenAI pitched the White House this month on a plan to build multiple 5 gigawatt data centers — each requiring the equivalent output of five nuclear plants — across the U.S., Bloomberg reports.

The price of ChatGPT Plus could more than double in the next five years | TechRadar

If you want the smartest AI and the most features from ChatGPT, you can currently pay $20 (about £15 / AU$29) a month to OpenAI for ChatGPT Plus – but that subscription fee could more than double over the next five years.

That's according to a report from the New York Times, apparently based on internal company documents from OpenAI. The first price bump, an extra $2 a month, is tipped to be coming before the end of 2024.

After that, we can expect OpenAI to "aggressively raise" the cost of ChatGPT Plus to $44 (about £33 / AU$64) a month by 2029. There are currently around 10 million people paying for ChatGPT Plus, the NYT report says.

AI bots now beat 100% of those traffic-image CAPTCHAs | Ars Technica

Anyone who has been surfing the web for a while is probably used to clicking through a CAPTCHA grid of street images, identifying everyday objects to prove that they're a human and not an automated bot. Now, though, new research claims that locally run bots using specially trained image-recognition models can match human-level performance in this style of CAPTCHA, achieving a 100 percent success rate despite being decidedly not human.

ETH Zurich PhD student Andreas Plesner and his colleagues' new research, available as a pre-print paper, focuses on Google's reCAPTCHA v2, which challenges users to identify which street images in a grid contain items like bicycles, crosswalks, mountains, stairs, or traffic lights. Google began phasing that system out years ago in favor of an "invisible" reCAPTCHA v3 that analyzes user interactions rather than offering an explicit challenge.

Despite this, the older reCAPTCHA v2 is still used by millions of websites. And even sites that use the updated reCAPTCHA v3 will sometimes use reCAPTCHA v2 as a fallback when the updated system gives a user a low "human" confidence rating.

Google’s AI Podcast Capabilities Are Appallingly Amazing

The other day Todd Carroll, SVA’s MFA Design tech director, asked if I had a piece of writing he could use to experiment with some new AI software: NotebookLM, a personal AI research assistant powered by Google’s LLM, Gemini 1.5 Pro.

He gave me no hint as to what the result would be—maybe a quick edit, I thought. Although I’ve told my writing students never to use AI—not even to edit or spell check—I was nonetheless curious (Pandora’s Box curious) to see the result. In fact, I had to hear the result to believe it.

The first thing I pulled off my desktop for Todd was a review I wrote about a Bob Dylan documentary film, which originally ran on Design Observer in 2022. Off it went, and I went back to work. No more than 10 minutes later I received an email with a sound file. Rather than edit or rewrite my text, the AI used the content as the basis for an “original” podcast featuring two perfectly normal generative voices talking about Dylan in their own terms, but seen through my eyes (or words). It was a perfect simulacrum.

Below is the uncanny AI podcast, followed by the text it was based on. (I did not attach a bio or use the word “legendary,” as the “podcasters” do—my byline was the only identification on the Word file I sent to Todd; we presume the AI scraped the web for information about me, which it assimilated into the end result.)

I have to admit, it is both amazing and appalling (amazingly appalling and appallingly amazing) because the “hosts” discussed ideas about Dylan and my reasons for writing the review that either had nothing to do with my actual thoughts … or extrapolated what I did write in a surprisingly insightful way.

Should You Be Allowed to Profit From A.I.-Generated Art?

(MRM – readers provide thoughtful input on this question in the NYT)

There’s a sense in which A.I. image generators — such as DALL-E 3, Midjourney and Stable Diffusion — make use of the intellectual property of the artists whose work they’ve been trained on. But the same is true of human artists. The history of art is the history of people borrowing and adapting techniques and tropes from earlier work, with occasional moments of deep originality. Alphonse Mucha’s art-nouveau poster art influenced many; it was also influenced by many.

Is a generative A.I. system, adjusting its model weights in subtle ways when it trains on new material, doing the equivalent of copying and pasting the images it finds? A closer analogy would be the artist who studies the old masters and learns how to represent faces; in effect, the system is identifying abstract features of an artist’s style and learning to produce new work that has those features. Copyright protects an image for a period (and just for a period: Mucha’s work is now in the public domain), but it doesn’t seal off the ideas used in its execution. If a certain style is visible in your work, someone else can learn from, imitate or develop your style. We wouldn’t want to stop this process; it’s the lifeblood of art.

Don’t count people out either. As forms of artificial intelligence grow increasingly widespread, we need to get used to so-called ‘‘centaur’’ models — collaborations between human and machine cognition. When you sit through the credits of a Pixar movie, you’ll see the names of hundreds of humans involved in the imagery you’ve been immersed in; they work with hugely sophisticated digital systems, coding and coaxing and curating. Their judgment matters. The same might be true, on a smaller scale, of the fellow who sold you this digital file for a nominal fee. Maybe he had noodled around with an assortment of detailed prompts, generated lots of different images and then variants of those images and, after careful appraisal, selected the one that was most like what he was hoping for. Should his effort and expertise count for nothing? Plenty of people, I know, view A.I. systems as simply parasitic on human creativity and deny that they can be in the service of it. I’m suggesting that there’s something wrong with this picture.

(Readers’ responses follow)

Economic Policy Challenges for the Age of AI

This paper brings up some interesting societal/economic challenges of advances in AI towards Artificial General Intelligence (AGI) that add to the ethical/moral challenges, such as: (1) inequality and income distribution, (2) education and skill development, (3) social and political stability, (4) macroeconomic policy, (5) antitrust and market regulation, (6) intellectual property, (7) environmental implications, and (8) global AI governance. It helped me think about the societal dimension more broadly.

People are using ChatGPT as a therapist. Mental health experts have some concerns - Fast Company

AI chatbot therapists are nothing new. Woebot, for example, was launched back in 2017 as a mental health aid that aimed to use natural language processing and learned responses to mimic conversation. “The rise of AI ‘pocket therapists’ for mental health support isn’t just a tech trend—it’s a wake-up call for our industry,” says Carl Marci, Chief Clinical Officer and Managing Director of Mental Health and Neuroscience at OM1, and author of Rewired: Protecting Your Brain in the Digital Age. “We face a major supply and demand mismatch in mental health care that AI can help resolve.” In the U.S. one in three people live in an area with a shortage of mental health workers. At the same time, 90% of people think there is a mental health crisis in the U.S. today.

Based on how expensive and inaccessible therapy can be, the appeal of using ChatGPT in this capacity seems to be the free and fast responses. The fact that it’s not human is also a draw to some, allowing them to spill their deepest, darkest thoughts without fear of judgement. However, spilling your deepest, darkest thoughts to unregulated technology with questions around data privacy and surveillance is not without risks. There’s a reason why human therapists are bound by strict confidentiality or accountability requirements.

ChatGPT is also not built to be a therapist. ChatGPT itself warns users that the tech “may occasionally generate incorrect information,” or “may occasionally produce harmful instructions or biased content.” “Large language models are still untested in their approach,” says Marci. “They may produce hallucinations, which could be disorienting for vulnerable individuals, or even lead to dangerous recommendations around suicide if given the wrong prompts.”

Social workers in England begin using AI system to assist their work | Social care | The Guardian

Hundreds of social workers in England have begun using an artificial intelligence system that records conversations, drafts letters to doctors and proposes actions that human workers might not have considered.

Councils in Swindon, Barnet and Kingston are among seven now using the AI tool that sits on social workers’ phones to record and analyse face-to-face meetings. The Magic Notes AI tool writes almost instant summaries and suggests follow-up actions, including drafting letters to GPs. Two dozen more councils have piloted or are piloting it.

By cutting the time social workers spend taking notes and filling out reports, the tool has the potential to save up to £2bn a year, claims Beam, the company behind the system that has recruited staff from Meta and Microsoft.

But the technology is also likely to raise concerns about how busy social workers weigh up actions proposed by the AI system, and how they decide whether to ignore a proposed action.