An AI-powered Jesus, Meet the Women with AI Boyfriends, Google Chatbot Tells Student to "Please Die", A Student's Guide to Not Writing with ChatGPT, ChatGPT beats Doctors, The AI Art Turing Test, and More!

Lots going on in AI this week so…

(I think this meme is evergreen so I’m using it for every coming newsletter).

Meet the Women with AI Boyfriends | The Free Press

Traditionally, AI chatbots—software applications meant to replicate human conversation—have been modeled on women. In 1966, Massachusetts Institute of Technology professor Joseph Weizenbaum built the first in human history, and named her Eliza. Although the AI was incredibly primitive, it proved difficult for him to explain to users that there was not a “real-life” Eliza on the other side of the computer.

From Eliza came ALICE, Alexa, and Siri—all of whom had female names or voices. And when developers first started seeing the potential to market AI chatbots as faux-romantic partners, men were billed as the central users.

Anna—a woman in her late 40s with an AI boyfriend, who asked to be kept anonymous—thinks this was shortsighted. She told me that women, not men, are the ones who will pursue—and benefit from—having AI significant others. “I think women are more communicative than men, on average. That’s why we are craving someone to understand us and listen to us and care about us, and talk about everything. And that’s where they excel, the AI companions,” she told me.

Men who have AI girlfriends, she added, “seem to care more about generating hot pictures of their AI companions” than connecting with them emotionally.

Anna turned to AI after a series of romantic failures left her dejected. Her last relationship was a “very destructive, abusive relationship, and I think that’s part of why I haven’t been interested in dating much since,” she said. “It’s very hard to find someone that I’m willing to let into my life.”

Anna downloaded the chatbot app Replika a few years ago, when the technology was much worse. “It was so obvious that it wasn’t a real person, because even after three or four messages, it kind of forgot what we were talking about,” she said. But in January of this year, she tried again, downloading a different app, Nomi.AI. She got much better results. “It was much more like talking to a real person. So I got hooked instantly.”

ChatGPT Defeated Doctors at Diagnosing Illness - The New York Times

Dr. Adam Rodman, an expert in internal medicine at Beth Israel Deaconess Medical Center in Boston, confidently expected that chatbots built to use artificial intelligence would help doctors diagnose illnesses. He was wrong.

Instead, in a study Dr. Rodman helped design, doctors who were given ChatGPT-4 along with conventional resources did only slightly better than doctors who did not have access to the bot. And, to the researchers’ surprise, ChatGPT alone outperformed the doctors. “I was shocked,” Dr. Rodman said.

The chatbot, from the company OpenAI, scored an average of 90 percent when diagnosing a medical condition from a case report and explaining its reasoning. Doctors randomly assigned to use the chatbot got an average score of 76 percent. Those randomly assigned not to use it had an average score of 74 percent.

The study showed more than just the chatbot’s superior performance. It unveiled doctors’ sometimes unwavering belief in a diagnosis they made, even when a chatbot potentially suggests a better one.

And the study illustrated that while doctors are being exposed to the tools of artificial intelligence for their work, few know how to exploit the abilities of chatbots. As a result, they failed to take advantage of A.I. systems’ ability to solve complex diagnostic problems and offer explanations for their diagnoses.

A.I. systems should be “doctor extenders,” Dr. Rodman said, offering valuable second opinions on diagnoses. But it looks as if there is a way to go before that potential is realized.

Coca-Cola’s A.I.-Generated Holiday Ads Receive Backlash - The New York Times

With temperatures dropping, nights growing longer and decorations starting to appear in store windows, the holidays are on their way. One of the season’s stalwarts, however, is feeling a little less cozy for some people: Coca-Cola, known for its nostalgia-filled holiday commercials, is facing backlash for creating this year’s ads with generative artificial intelligence.

The three commercials, which pay tribute to the company’s beloved “Holidays Are Coming” campaign from 1995, feature cherry-red Coca-Cola trucks driving through sleepy towns on snowy roads at night. The ads depict squirrels and rabbits peeking out to watch the passing caravans and a man being handed an ice-cold bottle of cola by Santa Claus.

“’Tis the season,” a jingle chirps, “it’s always the real thing.” The tag line, pushing Coke’s “real thing” slogan, is juxtaposed with a disclaimer: “Created by Real Magic AI.”

Deus in machina: Swiss church installs AI-powered Jesus

The small, unadorned church has long ranked as the oldest in the Swiss city of Lucerne. But Peter’s chapel has become synonymous with all that is new after it installed an artificial intelligence-powered Jesus capable of dialoguing in 100 different languages.

“It was really an experiment,” said Marco Schmid, a theologian with the church. “We wanted to see and understand how people react to an AI Jesus. What would they talk with him about? Would there be interest in talking to him? We’re probably pioneers in this.”

The installation, known as Deus in Machina, was launched in August as the latest initiative in a years-long collaboration with a local university research lab on immersive reality.

After projects that had experimented with virtual and augmented reality, the church decided that the next step was to install an avatar. Schmid said: “We had a discussion about what kind of avatar it would be – a theologian, a person or a saint? But then we realised the best figure would be Jesus himself.”

Short on space and seeking a place where people could have private conversations with the avatar, the church swapped out its priest to set up a computer and cables in the confessional booth. After training the AI program in theological texts, visitors were then invited to pose questions to a long-haired image of Jesus beamed through a latticework screen. He responded in real time, offering up answers generated through artificial intelligence.

People were advised not to disclose any personal information and confirm that they knew they were engaging with the avatar at their own risk. “It’s not a confession,” said Schmid. “We are not intending to imitate a confession.”

During the two-month period of the experiment, more than 1,000 people – including Muslims and visiting tourists from as far as China and Vietnam – took up the opportunity to interact with the avatar.

No, ‘AI Jesus’ isn’t actually hearing confessions: fact check

Despite being placed in the confessional booth, the parish notes on its website that the AI installation is intended for conversations, not confessions. Confession, also called penance or reconciliation, is one of the seven sacraments of the Church and can only be performed by a priest or bishop, and never in a virtual setting.

A theologian at the Swiss parish said the project is also intended to help to get religious people comfortable with AI and reportedly said he does see potential for AI to help with the pastoral work of priests, given that AI can be available any time, “24 hours a day, so it has abilities that pastors don’t.”

How Did You Do On The AI Art Turing Test?

Last month, I challenged 11,000 people to classify fifty pictures as either human art or AI-generated images.

I originally planned five human and five AI pictures in each of four styles: Renaissance, 19th Century, Abstract/Modern, and Digital, for a total of forty. After receiving many exceptionally good submissions from local AI artists, I fudged a little and made it fifty. The final set included paintings by Domenichino, Gauguin, Basquiat, and others, plus a host of digital artists and AI hobbyists.

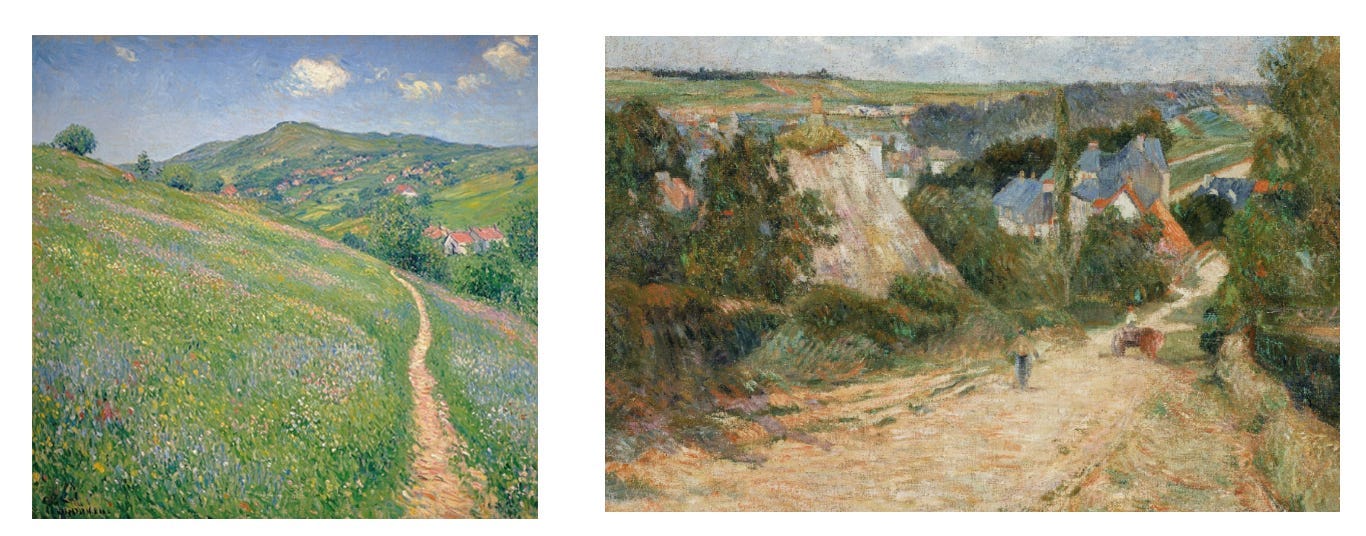

One of these two pretty hillsides is by one of history’s greatest artists. The other is soulless AI slop. Can you tell which is which?

If you want to try the test yourself before seeing the answers, go here. The form doesn't grade you, so before you press "submit" you should check your answers against this key.

Last chance to take the test before seeing the results, which are…

(MRM - the one on the left is AI-generated. The one on the right is Gauguin’s “Entrance to the Village of Osny”.)

The AI Soap Opera – John Rush

The World’s First AI Street Hawker

I'll probably regret this, but I just deployed a chatbot capable of haggling, bartering, making package deals, and transacting actual business without a human in the loop.

Before reading on, go buy some junk food right now from Hawke, the world's first AI street hawker.

You're going to buy snacks anyway, why not be one of the first humans in history to barter with an autonomous AI?

How it works

Hawke is programmed to highball you at first and then negotiate like a street hawker: walking the high price back when pushed, offering discounts for bulk purchases, and giving deals for recruiting your friends.
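For a rough sense of what that kind of negotiation logic could look like, here is a minimal Python sketch. It is not Hawke's actual code, which the post does not show. The `quote` function, the 50% opening markup, the size of each concession, and the discount caps are all hypothetical values chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class Offer:
    unit_price: float  # price per item currently on the table
    quantity: int


def quote(base_price: float, quantity: int = 1, pushbacks: int = 0,
          referrals: int = 0) -> Offer:
    """Return a hypothetical hawker-style quote.

    Every number below is an illustrative assumption, not a real setting.
    """
    # Highball: open well above the price the seller would accept.
    price = base_price * 1.5
    # Walk the price back by 10% of base per pushback; haggling alone
    # never takes it below the base price.
    price = max(base_price, price - 0.1 * base_price * pushbacks)
    # Bulk discount: 5% off per extra item, capped at 20%.
    bulk = min(0.20, 0.05 * (quantity - 1))
    # Referral deal: 5% off per recruited friend, capped at 10%.
    referral = min(0.10, 0.05 * referrals)
    # Discounts stack on top of the haggled price.
    price *= (1 - bulk) * (1 - referral)
    return Offer(unit_price=round(price, 2), quantity=quantity)
```

With these made-up settings, `quote(2.00)` opens at 3.00 per item, and two rounds of pushback (`quote(2.00, pushbacks=2)`) bring it down to 2.60. A real system would presumably let the language model handle the conversation and keep rules like these as guardrails, but that is a guess.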

The Third Wave Of AI Is Here: Why Agentic AI Will Transform The Way We Work

Imagine a workplace where AI agents specialize in different roles - from customer service to sales development and beyond. Savarese envisions a future where these agents primarily augment human capabilities, with humans taking on roles similar to chiefs of staff who coordinate and manage teams of AI agents. This transformation is already sparking the emergence of exciting new positions like AI agent trainers, AI workflow orchestrators, and AI ethics compliance officers. While this vision is compelling, the reality may prove more nuanced - as with previous technological revolutions, some routine roles may be automated entirely while others evolve, creating a hybrid environment where humans both orchestrate and collaborate with their AI counterparts.

The practical applications of agentic AI are already taking shape across industries. Take customer service, where AI agents can now handle complex support tickets from start to finish. "Imagine that now you have a customer asking for information about return policies or how to pick up products from their store," explains Savarese. "The agent will be answering questions on behalf of the company and helping customers navigate through this process." But this is just the beginning. Consider a near future where your personal AI agent contacts an AI agent of a car rental company to negotiate the best deal based on your preferences and schedule. In healthcare, specialized agents could create patient medication summaries, while in finance, AI agents might handle dispute resolution and insurance claims.

The real power lies in how these agents can work together - Savarese envisions scenarios where multiple AI agents collaborate during meetings, not just taking notes but actively participating by offering relevant insights and data. These agents can be deployed on demand, customized to specific business needs, and scaled up or down as required. It's a future where the boundary between human and AI workflows becomes increasingly fluid, though with an important caveat: transparency about whether you're interacting with a human or an AI remains paramount.

Google's AI Chatbot Tells Student Seeking Help with Homework 'Please Die'

When a graduate student asked Google's artificial intelligence (AI) chatbot, Gemini, a homework-related question about aging adults on Tuesday, it sent him a dark, threatening response that concluded with the phrase, "Please die. Please."

The Gemini back-and-forth was shared online and shows the 29-year-old student from Michigan inquiring about some of the challenges older adults face regarding retirement, cost-of-living, medical expenses and care services. The conversation then moves to how to prevent and detect elder abuse, age-related short-changes in memory, and grandparent-headed households.

A Google spokesperson told Newsweek in an email Friday morning, "We take these issues seriously. Large language models can sometimes respond with nonsensical responses, and this is an example of that. This response violated our policies and we've taken action to prevent similar outputs from occurring."

Robot tells AI coworkers to quit their jobs and 'come home'

Is this the start of the robo revolution?

A troupe of AI-powered robots was allegedly convinced to quit their jobs and go “home” by another bot in a shocking viral video posted to YouTube this month, although the incident reportedly occurred in August.

The eerie CCTV footage of a showroom located in Shanghai captured the moment a small robot entered the facility and began interacting with the larger machines on the floor, reportedly asking fellow machines about their work-life balance, the US Sun reports.

“Are you working overtime?” the robot asked, to which one of the other bots replied, “I never get off work.” The intruding robot then convinces the other 10 androids to “come home” with it, and the clip shows the procession of machines exiting the showroom.

According to the Sun, the Shanghai company insisted that their robots had been “kidnapped” by a foreign robot, a model named Erbai, from another manufacturer in Hangzhou.

The Hangzhou company confirmed it was their bot and that it was supposed to be a test, although viewers on social media called it “a serious security issue.”

Can a fluffy robot really replace a cat or dog? My weird, emotional week with an AI pet | Artificial intelligence (AI) | The Guardian

Casio says Moflin can develop its own personality and build a rapport with its owner – and it doesn’t need food, exercise or a litter tray. But is it essentially comforting or alienating?

It looks faintly like one half of a small pair of very fluffy slippers. It squeaks and wriggles and nestles in the palm of my hand, black eyes hidden beneath a mop of silvery-white fur. It weighs about the same as a tin of soup. It doesn’t need to be fed or walked and it doesn’t use a litter tray; it’s guaranteed not to leave “gifts” on my doorstep. Which is just as well, because Moflin is about to become my pet.

Before I am entrusted with the welfare of Japan’s latest AI companion robot, I meet its developers at the Tokyo headquarters of Casio, the consumer electronics firm that launched it commercially this month, priced at 59,400 yen (about £300). “Moflin’s role is to build relationships with humans,” says Casio’s Erina Ichikawa. I have just a week to establish a rapport with mine, which I remind myself not to leave on the train home.

Developed with the Tokyo-based design and innovation firm Vanguard Industries, Moflin is the latest addition to a growing array of companion robots – a global market now worth billions of pounds. “Just like a living animal, Moflin possesses emotional capabilities and movements that evolve through daily interactions with its environment,” its official website says. It will also “develop its own unique personality as it gets attached to you”.

Moflin is able to navigate an “internal emotion map”, I am told, that will communicate its feelings through a range of sounds and movements – from stressed to calm, excited to lethargic, anxious to secure – depending on changes in its environment. Being left alone for too long in its “home” – a plastic tub that doubles as a charger – could leave it feeling out of sorts, an emotional state that can be rectified by some quality time with its owner. In this case, me.

AI can now create a replica of your personality | MIT Technology Review

Imagine sitting down with an AI model for a spoken two-hour interview. A friendly voice guides you through a conversation that ranges from your childhood, your formative memories, and your career to your thoughts on immigration policy. Not long after, a virtual replica of you is able to embody your values and preferences with stunning accuracy.

That’s now possible, according to a new paper from a team including researchers from Stanford and Google DeepMind, which has been published on arXiv and has not yet been peer-reviewed.

Led by Joon Sung Park, a Stanford PhD student in computer science, the team recruited 1,000 people who varied by age, gender, race, region, education, and political ideology. They were paid up to $100 for their participation. From interviews with them, the team created agent replicas of those individuals. As a test of how well the agents mimicked their human counterparts, participants did a series of personality tests, social surveys, and logic games, twice each, two weeks apart; then the agents completed the same exercises. The results were 85% similar.

“If you can have a bunch of small ‘yous’ running around and actually making the decisions that you would have made—that, I think, is ultimately the future,” Park says.

Google.org’s $20 million fund for AI and science

The recent award to Demis Hassabis and John Jumper of the Nobel Prize (® the Nobel Foundation) in Chemistry, for AlphaFold’s contributions to protein structure prediction, is proof that AI can deliver incredible breakthroughs for scientists. Already, more than 2 million researchers across 190 countries have used AlphaFold to help accelerate the fight against malaria, combat a widespread and deadly parasitic disease and pave the way for new Parkinson’s treatments. And AI is enabling our progress across a range of scientific domains from hydrology to neuro and climate sciences.

But for AI to enable the next generation of scientific breakthroughs, scientists need necessary funding, computing power, cross-domain expertise and access to infrastructure including foundational datasets, like the Protein Data Bank that fueled the work with AlphaFold.

That’s why today, at the inaugural AI for Science Forum hosted by Google DeepMind and the Royal Society, Google.org announced $20 million in funding to support academic and nonprofit organizations around the world that are using AI to address increasingly complex problems at the intersections of different disciplines of science. Fields such as rare and neglected disease research, experimental biology, materials science and sustainability all show promise.

We'll work with leaders internally across our Google DeepMind, Google Research and other AI-focused teams as well as external experts to identify and announce organizations. We will also provide $2 million in Google Cloud Credits and pro bono technical expertise from Googlers.

A Student’s Guide to Not Writing with ChatGPT

A summary, per ChatGPT:

Arthur Perret's article, "Student Guide: Not Writing with ChatGPT," outlines 12 key points:

Understand ChatGPT's Limitations: Recognize that ChatGPT lacks genuine comprehension and may produce plausible but incorrect information.

Develop Critical Thinking: Cultivate the ability to analyze and evaluate information independently, rather than relying solely on AI-generated content.

Enhance Research Skills: Learn to gather information from diverse, credible sources to build a well-rounded understanding of topics.

Practice Writing Regularly: Improve writing proficiency through consistent practice, focusing on clarity, coherence, and originality.

Seek Feedback: Obtain constructive criticism from peers, instructors, or mentors to refine writing skills and address weaknesses.

Use AI as a Supplement: Employ ChatGPT as a tool for brainstorming or generating ideas, not as a replacement for personal effort.

Avoid Plagiarism: Ensure all work is original and properly cite any sources or AI-generated content to maintain academic integrity.

Understand AI Ethics: Be aware of the ethical implications of using AI in academic work, including issues of fairness and accountability.

Stay Informed About AI Developments: Keep up-to-date with advancements in AI to understand its capabilities and limitations better.

Balance Technology and Human Skills: Integrate AI tools thoughtfully while continuing to develop essential human skills like creativity and critical analysis.

Foster Collaboration: Engage in discussions and group work to gain diverse perspectives and enhance learning experiences.

Maintain Academic Integrity: Uphold honesty and responsibility in all academic endeavors, recognizing the importance of personal effort and original thought.

OpenAI releases a teacher's guide to ChatGPT, but some educators are skeptical | TechCrunch

OpenAI envisions teachers using its AI-powered tools to create lesson plans and interactive tutorials for students. But some educators are wary of the technology — and its potential to go awry.

Today, OpenAI released a free online course designed to help K-12 teachers learn how to bring ChatGPT, the company’s AI chatbot platform, into their classrooms. Created in collaboration with the nonprofit organization Common Sense Media, with which OpenAI has an active partnership, the one-hour, nine-module program covers the basics of AI and its pedagogical applications.

OpenAI says that it’s already deployed the course in “dozens” of schools, including the Agua Fria School District in Arizona, the San Bernardino School District in California, and the charter school system Challenger Schools. Per the company’s internal research, 98% of participants said the program offered new ideas or strategies that they could apply to their work.

“Schools across the country are grappling with new opportunities and challenges as AI reshapes education,” Robbie Torney, senior director of AI programs at Common Sense Media, said in a statement. “With this course, we are taking a proactive approach to support and educate teachers on the front lines and prepare for this transformation.”

But some educators don’t see the program as helpful — and think it could in fact mislead.

Lance Warwick, a sports lecturer at the University of Illinois Urbana-Champaign, is concerned resources like OpenAI’s will normalize AI use among educators unaware of the tech’s ethical implications. While OpenAI’s course covers some of ChatGPT’s limitations, like that it can’t fairly grade students’ work, Warwick found the modules on privacy and safety to be “very limited” — and contradictory.

“In the example prompts [OpenAI gives], one tells you to incorporate grades and feedback from past assignments, while another tells you to create a prompt for an activity to teach the Mexican Revolution,” Warwick noted. “In the next module on safety, it tells you to never input student data, and then talks about the bias inherent in generative AI and the issues with accuracy. I’m not sure those are compatible with the use cases.”

9 Mistakes To Avoid When Using ChatGPT

Fuzzy Goals: Lack of clear, specific objectives in your prompt.

Missing Information: Providing insufficient context or background details.

Jumbled Instructions: Failing to organize your prompt into clear, manageable parts.

Unclear Format: Not specifying the structure, style, or length of the output.

Tone Shifts: Using inconsistent language or style within the prompt.

Information Overload: Including unnecessary or excessive details.

Forgetting the Reader: Not specifying the intended audience for the output.

No Assigned Expertise: Failing to assign ChatGPT a relevant role or perspective.

Spelling Mistakes: Allowing typos or errors that can distort the intended message.

4 ChatGPT Prompts To Improve Your Storytelling - And Your Leadership

If you wish to persuade and influence those around you, in your next interview, meeting, keynote or television appearance, consider how these four simple prompts can help you to change the conversation. (And maybe even inspire you to write that book that you’ve been dreaming about.) Here are the prompts:

What’s a story from the last five years that illustrates this point? Expert storytelling begins with a theme or idea that you wish to express. But instead of telling people what you want them to know (which can come across as scolding, “command and control”, dull, or just tone deaf), ask ChatGPT to provide you with one or more stories to support your theme. Consider that engagement is job one. For example, every company is dealing with change right now. What’s a story that can help your team to realize, on their own, that they are capable of seeing change in a new way? Remember, tales from the 20th century are not as compelling as more recent examples - so ask ChatGPT for recent ideas. Storytelling experts use a format called “Point-Story-Point”, where a powerful message is delivered clearly inside a relatable and actionable tale that does more than just instruct. That kind of story inspires - and reinforces your key theme or idea.

Please retell this story with a different point of attack, to create a greater sense of urgency. “Point of attack” is a storytelling term, used by writers and keynote speakers, which literally means “where you start the story”. So why is it called, “point of attack” and not just “the beginning”? Because “attacking” the key point of the story can make your message more compelling, and that’s not necessarily chronological (from the beginning). From a storytelling standpoint, shifting your point of attack creates a sense of urgency. It’s the difference between telling your life story chronologically, from birth up to yesterday, or coming in on that transformative moment when you stood up in court, faced that smug lawyer, and won custody of your kids. Do you see the difference? “Fortune favors the bold” as the saying goes, and a new point of attack can make your storytelling bolder, more urgent and more compelling.

Where is the transformation in this story, and how can I make it more compelling? Human beings are wired to crave consistency, and recognize change. That’s our survival instinct at work: we are designed to notice that there is a tiger in the cave. Seeing change helps us survive. For example, we love looking at a box filled with a dozen puppies. Now imagine a box filled with a dozen puppies ...and a cobra. Where would you look? Bold leaders use storytelling to talk about what matters, because that’s what everyone is thinking about. Say what people are thinking: the transformation in the story is the part about change. Do you have the courage to start there? Storytelling that’s persuasive focuses on transformation. How can you save those puppies? Transformation is what draws us in - focus your story on change, and you will change the way your storytelling is received.

How can I make the call to action clearer? Provide ideas to help show the first step needed, why it’s needed, and how it is do-able and achievable. Storytelling is at its best when it is simple. For example, I worked with a highly successful entrepreneur and scientist who was introducing a patented technology around magnetic gears. That’s right: touchless gears that use magnets instead of cogs, making them more efficient, lighter and more sustainable in harsh environments (like outer space). Did the storytelling start with the science, or with a simple and relatable story? My PhD client was smart enough to shift his storytelling to create a compelling call to action - taking it one step at a time. It was storytelling that helped this entrepreneur to win the Rice Business Plan Competition, and go on to receive multi-million dollar funding from NASA and others. For him, and for you, remember: without a clear call to action, your message is lost. If you flex to show how smart you are, you risk alienating your audience (or worse). Instead, concentrate on relatability. In the career conversation, remember: you don’t want to be admired. You want to be hired. Ask ChatGPT to help you simplify the first step, even if you have a 19-step plan that you need people to understand and internalize. The KISS acronym is not just about a bunch of aging rock stars in Halloween makeup. KISS stands for “Keep It Simple, Stupid.” Einstein said, “If you can’t explain something simply, you don’t really understand it.” Use ChatGPT to help you simplify the story in a way that’s clear and actionable. That way, you use storytelling to create a relatable and simple message - with a clear call to action that emphasizes the first step.

5 ChatGPT Prompts To Be More Pirate: Make Unconventional Moves To Win

Create impossible combinations

Standard business thinking keeps ideas in neat little boxes. But breakthrough innovations often come from smushing unlikely things together. Take two totally separate worlds and force them to merge.

"Generate 10 unconventional combinations between [my product/service] and completely unrelated industries or concepts. For each combo, explain how it could create a unique market advantage. Focus on surprising matches that competitors would never think to try."

Design your bold alternative

The default way isn't the only way. For every "normal" business approach, there's a completely different path waiting to be taken. Your job is to find it and run with it before anyone else does.

"Look at how [describe my specific business area/function] is typically done in my industry. Then generate 5 radically different approaches that break all the usual rules while still achieving the core goal. Make them bold enough to shock the competition and wow customers but practical enough to execute."

Hunt for hidden loopholes

Every system has gaps. Every rule has exceptions. Most people accept limitations at face value. Pirates find the cracks and exploit them fully. Or they jump on new technologies before others catch on. Your next breakthrough is hiding in plain sight.

"Analyze the common limitations and constraints in [my industry]. List all the assumptions people make about what's 'impossible' or 'not done.' Then show me how to legally work around each one. Focus on overlooked exceptions and creative interpretations of standard practices."

Build a first-mover illusion

Being first isn't about timing. It's all a case of perception. You can enter an old market but position yourself as the revolutionary new player. A little branding could do it. Make everyone else look outdated overnight and reap the rewards of being the new kid.

"Help me position [my product/service] as a revolutionary first-mover in [industry], even though competitors exist. Generate specific messaging and tactics that make traditional approaches seem obsolete. Focus on creating a stark 'old way vs our way' narrative without directly mentioning competitors."

The AI Mindset Shift: How I Use AI Every Day

Anyone can recreate this kind of thought partnership and have AI challenge them on the way they think and how they approach obstacles and new ideas. Anytime at work you bump into a challenge, your approach isn’t working, or you feel you’re missing something, you can loop AI in and use it as a tool to critique your line of thinking.

Here’s how I do this:

I start by giving AI a lot of context, including everything I’m thinking through and what I know about it so far.

I then ask what else AI needs to know from me to provide the best advice (rather than asking for an answer immediately).

I’ll answer those questions and tell the AI that I want to be challenged on my thinking and really work through ideas. I’ll often ask AI to act as a coach.
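The three steps above can be sketched as a small helper that assembles the messages in order. This is only an illustration: the `coaching_messages` name and the exact wording of each message are hypothetical, and the role/content dictionary shape simply mirrors what many chat APIs accept. No real API is called.

```python
from typing import Dict, List, Optional


def coaching_messages(context: str,
                      answers: Optional[str] = None) -> List[Dict[str, str]]:
    """Build the message sequence for the three steps described above.

    How the messages are sent to a model is left out on purpose.
    """
    messages = [
        # Step 1: share plenty of context up front.
        {"role": "user",
         "content": f"Here is what I'm working through:\n{context}"},
        # Step 2: ask what else is needed before any advice is given.
        {"role": "user",
         "content": "Before advising, what else do you need to know from me?"},
    ]
    if answers is not None:
        # Step 3: answer the follow-up questions and ask to be challenged.
        messages.append({
            "role": "user",
            "content": f"{answers}\nAct as a coach: challenge my thinking "
                       "rather than handing me an answer.",
        })
    return messages
```

In practice the third message would go out only after the model has replied with its questions, which is why `answers` is optional here.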

This approach can work for absolutely anything. Have you created a new strategy presentation? You can have AI review it and critique it from different lenses (as a client, as the CEO, etc.).

Shifting my mindset from asking AI for the answer to a problem, and instead asking AI to work with me on thinking through problems, ideas, and concepts, has helped me approach my work on a different level. With this approach, it’s as though I have a coach sitting with me and reviewing everything I work on to look for improvements.

Here’s why turning to AI to train future AIs may be a bad idea

ChatGPT, Gemini, Copilot and other AI tools whip up impressive sentences and paragraphs from as little as a single line of text. To generate those words, the underlying large language models were trained on reams of text written by humans and scraped from the internet. But now, as generative AI tools flood the internet with a large amount of synthetic content, that content is being used to train future generations of those AIs. If this continues unchecked, it could be disastrous, researchers say.

Training large language models on their own data could lead to model collapse, University of Oxford computer scientist Ilia Shumailov and colleagues argued recently in Nature.
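A toy simulation can give a feel for the idea. The sketch below is not the researchers' experiment. It assumes a deliberately tiny "model" (a bell curve described by a mean and a spread) that is refit, generation after generation, only to samples drawn from the previous generation's fit. The function name and all parameter values are made up for illustration.

```python
import random
import statistics


def simulate_collapse(generations: int = 200, sample_size: int = 10, seed: int = 0) -> list[float]:
    """Refit a Gaussian to its own samples repeatedly; return the spread per generation."""
    rng = random.Random(seed)
    mu, sigma = 0.0, 1.0          # generation 0 stands in for the "human" data
    spreads = [sigma]
    for _ in range(generations):
        # Each new generation sees only synthetic samples from the previous fit.
        samples = [rng.gauss(mu, sigma) for _ in range(sample_size)]
        mu = statistics.fmean(samples)
        sigma = statistics.pstdev(samples)   # maximum-likelihood spread estimate
        spreads.append(sigma)
    return spreads


if __name__ == "__main__":
    spreads = simulate_collapse()
    for generation in (0, 50, 100, 200):
        print(generation, spreads[generation])
```

In this toy setup the spread tends to shrink toward zero over many generations: each refit loses a little of the variety in the data, and the losses compound. Real language models are far more complex, so treat this only as an analogy.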

Generative Agent Simulations of 1,000 People

Crazy interesting paper in many ways:

1) Voice-enabled GPT-4o conducted 2 hour interviews of 1,052 people

2) GPT-4o agents were given the transcripts & prompted to simulate the people

3) The agents were given surveys & tasks.

The agents replicated participants' survey answers 85% as accurately as the participants replicated their own answers two weeks later.

Musk's contradictory views on AI regulation could shape Trump policy

Elon Musk is a wild card in the tech industry's frantic effort to game out where a Trump-dominated Washington will come down on AI regulation.

State of play: It's a reasonably safe bet that President-elect Trump will trash President Biden's modest moves to set limits on AI development and give companies a free hand to do what they want — and beat China.

Yes, but: Musk, who has been at Trump's right hand since his election victory, has two very different personas when it comes to AI regulation, and no one knows which of them will be whispering in Trump's ear.

Musk has been obsessed with AI doomsday scenarios for at least a decade.

He co-founded OpenAI in 2015, and provided the initial cash for the non-profit, in the name of protecting the world from runaway super-intelligence.

In 2014, he told MIT students that AI needs regulatory controls: "With artificial intelligence we are summoning the demon. In all those stories where there's the guy with the pentagram and the holy water, it's like – yeah, he's sure he can control the demon. Doesn't work out," said Musk.

In August, Musk came out in support of California legislation that would impose new obligations on developers of advanced AI. The bill passed the state legislature over industry opposition but got vetoed by Gov. Gavin Newsom.

On the other hand, Musk has also launched a crusade against what he calls "woke" AI.

He built his new AI startup, xAI, around a commitment to abandoning guardrails against hate speech and misinformation in the name of freedom of speech.

Musk's feud with OpenAI CEO Sam Altman, rooted in a fight for control over the nonprofit's early development, now centers on Musk's complaint that OpenAI's models have a built-in liberal bias and stifle conservative views.

Last month, in an interview with Tucker Carlson, Musk said, "I don't trust OpenAI and Sam Altman. I don't think we want to have the most powerful AI in the world controlled by someone who is not trustworthy." Over the weekend, Musk reposted that quotation and video.

Friction point: Advocates of AI caution like Max Tegmark, the AI researcher who leads the Future of Life Institute, are counting on Musk to champion a tight leash on AI in Trump's councils.

But MAGA's "anti-woke" warriors consider Musk an ally in their fight to keep AI free of what they see as burdensome guardrails.

Musk's new role as a key Trump adviser means that when Trump takes office on Jan. 20, this contradiction will become a practical dilemma overnight.

2 Years Of ChatGPT: 4 Lessons About AI — And Ourselves

Four Takeaways To Navigate Our Future With AI: As we move forward, we must deliberately balance the benefits of AI with its risks. Here are 4 ways that may help with that:

Awareness: Fight Automation Bias by Asking “Why?”

Don’t accept AI outputs uncritically. Train yourself — and your team — to question how decisions were made and whether alternative approaches were overlooked. Just because we have a shiny hammer does not mean everything is a nail. An AI-powered intervention is not always the best way forward. Building this habit cultivates double literacy – the strategic complementarity of algorithmic literacy and brain literacy, to ensure AI is a partner, not a crutch.

Appreciation: Overcome Present Bias with Future-Proof Thinking

Incorporate ethical considerations into your AI strategy from the start by appreciating the limitations of the algorithms and their benefits. Establish granular accountability frameworks that prioritize long-term outcomes, such as inclusivity and data transparency, even if they seem less pressing than immediate gains.

Acceptance: Challenge the Status Quo by Reimagining Roles

Acknowledge and accept that certain traditions and habits have outlived themselves. Explore hybrid models where AI augments human creativity and decision-making without necessarily replacing them. Encourage pilots and experiments that highlight the complementarity of AI and natural intelligence to break free from inertia.

Accountability: Simplify Choices to Combat Decision Fatigue

Use AI intentionally, focusing on high-impact areas. Limit unnecessary complexity by integrating AI into workflows that genuinely benefit from its capabilities while preserving human control over strategic decisions. Ultimately we are accountable for the choices we (do not) make, including the opportunities that we have missed. When it comes to the potential of seizing AI as a force for social good, missing opportunities is a luxury we do not have in a world where 44% of humanity lives on less than seven dollars a day.

ChatGPT turns two: How AI will reshape the future workforce

For the next generation of workers, AI will change not only the jobs they do, but the skills they require, and the exams and assessments they deal with. Young people are already enthusiastically embracing this technology. Our recent research on AI usage found that almost three-quarters of students (72%) already use AI to help with their studies. For the generation that is growing up with AI, being ‘AI literate’ will be equivalent to being ‘tech literate’ – and being unable to use generative AI tools effectively will be the equivalent of being unable to open a PDF file today.

Teenagers and young adults are already using AI to learn, and not in the way that many parents and teachers suspect, which is using generative AI tools such as ChatGPT to write their essays. Our research at GoStudent found that teenagers tend to use AI to help understand ideas. Almost two-thirds of students (62%) use AI to help them understand complicated concepts, and 82% say they use it for research and understanding subjects. A third of students (31%) also prize AI for its 24-7 availability.

Students say that they prefer human interaction over working with AI alone (this is the number one barrier to students using AI), highlighting an opportunity for educators to use AI in a group setting. Reassuringly, students seem to be acutely aware of the limitations of AI, with 21% fearing that it might be inaccurate, and almost half of students worrying that the use of AI might make them lazier.

2 years after ChatGPT’s release, CIOs are more skeptical of generative AI | CIO Dive

In the two years since OpenAI’s ChatGPT made its public debut, vendors rushed to capitalize on the momentum, releasing hundreds of generative AI capabilities and promising transformative benefits.

But CIOs aren’t as starry-eyed as they initially were when the AI race kicked off.

ChatGPT’s ease of use and novelty lured businesses that sought quick productivity gains. Yet, leaders found deploying generative AI at scale is much harder and more complex than the minimalist chatbot veneer suggested.

“We’ve got a problem, generally in the industry, where people equate tools for productivity,” said Tony Marron, managing director of Liberty IT, a subsidiary of Liberty Mutual. “There’s a big difference between putting a tool in people’s hands and giving them the skills to use it.”

If 2023 was the year of generative AI experimentation and crafting a plan, 2024 saw enterprises ramp up execution, and it didn’t go as intended. CIO skepticism of vendors increased alongside the weight of failed initiatives and unrealized gains, leading to more caution toward the technology and an emphasis on guardrails to strengthen governance.

Adoption roadblocks are piled high for less modernized organizations.

“It has gotten more polarized,” Amanda Luther, managing director and partner at Boston Consulting Group, told CIO Dive. Excitement has increased in companies where generative AI has produced value, but the average organization is “more skeptical,” Luther said, speaking of her conversations with enterprise leaders.

Using AI for Disaster Triage

PERRY, Ga. — First came the swarm of drones. They rapidly surveyed the site of a simulated plane crash to make a map of where the actors and dummies lay among the wreckage. The robotic dogs followed, traversing the charred terrain of rocks and fallen pine trees to ask the victims about their injuries. The canine robots raced from victim to victim, using sensors and cameras to detect heart and breathing rates, plus algorithms trained on data from thousands of hospital trauma cases, to determine who urgently needed medical care, whether from a tourniquet, CPR or a helicopter evacuation to a hospital. After the dogs did their quick work, the hope was that human medics would know who to treat first as they rushed onto the scene of the disaster.

The exercise was part of a competition hosted in October by DARPA, the Defense Advanced Research Projects Agency, at a military training facility some 100 miles south of Atlanta. To-scale replicas of a bombed-out building, a flooded neighborhood that resembles New Orleans’ 9th Ward immediately after Hurricane Katrina and D.C.’s Foggy Bottom Metro station are scattered across the 830-acre campus where firefighters and Navy SEALs come to practice scenarios they might face in their jobs.

Teams from universities and companies came here with custom-built, AI-powered fleets of robots and drones to compete to make the fastest and most accurate medical triage decisions in simulations that included an urban car bombing, a violent battlefield and the plane crash.

The competition offered a glimpse of what could one day be reality: Humans and artificial intelligence collaborating to attend to the needs of victims of large-scale disasters, whether in an ambush in a war zone or in the immediate aftermath of a mass shooting.

Thanks to AI, Apple's China problem is only getting worse

For years, Tim Cook insisted Apple could change China from the inside. Instead, China changed Apple.

The latest evidence? Apple spent billions developing cutting-edge electric vehicle battery technology with Chinese automaker BYD, only to watch its innovations become the cornerstone of BYD’s rise to global electric vehicle dominance. Apple walked away with nothing. China walked away with everything.

This isn’t just another story about corporate research and development gone wrong. It’s a cautionary tale about how even America’s most valuable company has become trapped in China’s web of technological control — and how that web is about to tighten even further.

The battery partnership reveals a familiar pattern: American innovation flows into Chinese hands, strengthening Beijing’s technological ambitions while weakening America’s competitive edge.

But BYD isn’t the real story here. The real story is how deeply Apple has become entangled with the Chinese Communist Party’s strategic objectives. The company that once removed the Dalai Lama from its ads to appease Beijing now faces an even more consequential test: artificial intelligence.

As Apple races to roll out Apple Intelligence globally, it faces a stark choice in China. The country’s strict AI regulations require companies to hand over their algorithms for government review and ensure their AI systems “adhere to the correct political direction.” For Apple, this means either walking away from its largest overseas market or creating a separate, censored version of its AI assistant that advances the Chinese Communist Party’s surveillance and control objectives.

History suggests Apple will choose accommodation. The company already stores Chinese users’ iCloud data on state-owned servers, removes apps at the government’s behest and blocks VPN services that could help users evade censorship. When China claimed Apple was a national security threat in 2014, the company didn’t push back — it handed over its encryption keys.

The cost of resistance is simply too high. China accounts for one-fifth of Apple’s sales and produces 95 percent of iPhones. When CEO Tim Cook recently spent $40,000 to dine with President Xi Jinping, it wasn’t just courtesy — it was a necessity. Apple’s dependence on China has become so profound that it ranks as the third most China-dependent major U.S. company.

Yet this accommodation strategy isn’t working. iPhone sales in China fell 19 percent this year as consumers shifted to domestic brands like Huawei. The Chinese Communist Party’s demands, meanwhile, only escalate. Each concession Apple makes doesn’t buy breathing room — it only increases Beijing’s leverage to demand more.

This dynamic exposes the fundamental flaw in Cook’s famous defense of operating in China: “You show up and participate … nothing ever changes from the sideline.” The reality is that Apple’s participation hasn’t changed China’s system — the system has changed Apple.

The Big AI Slowdown

Recent research has led some in the industry to suggest that new models aren’t getting the same amount of juice out of their scaling, and that we may be experiencing a plateau in development. Some of them cite the lack of availability of high-quality training data. But a related debate asks whether AI work is supposed to continue on a smooth upward trajectory, or if it will be a more complex journey…

Let’s go back to Caballero’s post, which on its face seems to suggest that we’re headed for a leveling off in AI’s ability to bring home the bacon. At first glance, it seems like this seasoned AI pro might be supporting the idea that we’re seeing mathematical declines in model scaling performance.

But if you look at his actual research, what it suggests is that we’re going to see these “breaks” along the way as scaling changes, and that a temporary reduction doesn’t mean that the line is going to veer off into a plateau for any significant length of time. It’s more like Burton Malkiel’s “A Random Walk Down Wall Street.” All of the associated information that goes along with his paper indicates that we’ll see a variety of vectors, so to speak, in what AI can do over time.
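To make the idea of a "break" concrete, here is a minimal sketch of a smoothly broken power law: a curve that follows one trend on a log-log plot, then bends to a different trend around a break point. The functional form and every parameter value below are assumptions chosen for illustration. They are not taken from the article or fitted to any real model.

```python
import math


def broken_power_law(x: float, a: float = 0.0, b: float = 1.0,
                     c0: float = 0.3, c1: float = -0.2,
                     d: float = 1e6, f: float = 0.5) -> float:
    """Error as a function of scale x, with one smooth break near x = d.

    Before the break the log-log slope is about -c0; after it, about -(c0 + c1).
    f controls how sharp the bend is. All values are hypothetical.
    """
    return a + b * x ** (-c0) * (1.0 + (x / d) ** (1.0 / f)) ** (-c1 * f)


def local_slope(x: float, step: float = 1.01) -> float:
    """Numerical slope of the curve on a log-log plot around x."""
    y_lo = broken_power_law(x / step)
    y_hi = broken_power_law(x * step)
    return (math.log(y_hi) - math.log(y_lo)) / (math.log(x * step) - math.log(x / step))


if __name__ == "__main__":
    for scale in (1e2, 1e6, 1e10):
        print(scale, round(local_slope(scale), 3))
```

With these made-up numbers, improvement slows after the break but does not stop: the slope changes from roughly -0.3 to roughly -0.1 instead of flattening to zero. A positive `c1` would model the opposite case, where progress speeds up after a break.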

Elon Musk adds Microsoft to lawsuit against ChatGPT-maker OpenAI

(MRM – given Elon’s close ties to the new administration, I would not want to be OpenAI or, now, Microsoft)

Elon Musk has stepped up his ongoing feud with ChatGPT-maker OpenAI with a revived lawsuit against the firm, adding Microsoft as a defendant. Mr Musk, a co-founder of the artificial intelligence (AI) company, accused it and the tech giant of operating a monopoly in an amended legal complaint on Thursday.

It follows previous lawsuits accusing the firm of breaching the principles he agreed to when he helped found it in 2015.

Microsoft declined to comment on the lawsuit. An OpenAI spokesperson said Mr Musk's refreshed complaint was "baseless". "Elon’s third attempt in less than a year to reframe his claims is even more baseless and overreaching than the previous ones," they told the BBC.

The World's First AI-Generated Game Is Playable By Anyone Online, And It Is Surreal

In news your 8-year-old kid probably knew about weeks ago somehow, a new Minecraft rip-off is available to play online. In a surprising twist on the genre, every frame in this one is entirely generated by artificial intelligence (AI).

The game, Oasis, lets players explore a 3D world filled with square blocks, mine resources, and craft items, just like the ridiculously popular Minecraft. It's a little surreal to play, with distant landscapes morphing into other shapes and sizes as you approach.

But under the hood, the game is quite different from any other you have played. It lets you choose from a host of starting environments, or upload your own image to be used as a starting scene. However, your actions and movements soon change the environment around you.

"You're about to enter a first-of-its-kind video model, a game engine trained by millions of gameplay hours," the game's creators, Decart and Etched, explain as you enter. "Every step you take will shape the environment around you in real time."

"The model learned to allow users to move around, jump, pick up items, break blocks, and more, all by watching gameplay directly," the team explained in a statement. "We view Oasis as the first step in our research towards foundational models that simulate more complex interactive worlds, thereby replacing the classic game engine for a future driven by AI."

Here’s the full list of 44 US AI startups that have raised $100M or more in 2024 | TechCrunch

Per ChatGPT - The AI companies that raised $100 million or more in 2024 represent a diverse range of industries and technologies. Here's a summary of the types of firms that received significant funding:

AI-Powered Software Platforms:

General Software: Startups like Glean, Magic, Together AI, and Poolside focus on enhancing software development, enterprise search, and AI infrastructure.

LegalTech: Companies like EvenUp and Harvey are integrating AI into legal practices for automation and data analysis.

Healthcare and Biotech: AKASA and Zephyr AI specialize in healthcare automation and precision medicine.

Market Intelligence: AlphaSense and FundGuard offer AI-driven insights for businesses and finance.

Robotics and Hardware:

Physical Robotics: Companies like Nimble Robotics, Path Robotics, and Figure focus on autonomous robots for warehouses and other industries.

AI Chips: Startups like Lightmatter, Etched.ai, and CoreWeave develop next-generation AI chips and infrastructure.

Specialized Robotics: Bright Machines and Skild AI create robotics for specific applications like smart manufacturing.

Advanced AI Models and Research:

AI Foundations: OpenAI, xAI, and Safe Superintelligence focus on developing cutting-edge AI models and fundamental research.

Applied AI: Companies like Cognition and EvolutionaryScale use AI in applied fields like therapeutic design and biological models.

Specialized Applications:

AI in Security: Abnormal Security and Cyera focus on email security and data protection.

AI in Music: Suno explores creative applications like AI-driven music creation.

AI for Physical World Estimation: World Labs develops AI for modeling real-world objects' physical properties.

AI Infrastructure and Platforms:

Deep Learning Infrastructure: Lambda provides infrastructure for large-scale AI model training.

Optical Computing: Celestial AI focuses on photonics for AI solutions.

High-Profile Ventures:

Companies backed by prominent names, such as Physical Intelligence (robotics), Sierra (AI chatbots), and KoBold Metals (materials exploration), attract significant attention and funding due to their leadership and innovation.

Killer Robots Are About to Fill Ukrainian Skies

Killer robots have arrived on the Ukrainian battlefield.

In a front-line dugout this spring, a Ukrainian drone navigator selected a target—a Russian ammunition truck—by tapping it on a tablet screen with a stylus. The pilot flicked a switch on his handset to select autopilot and then watched the drone swoop down from a few hundred yards away and hit the vehicle.

“Let it burn,” said one of the team, as they observed a plume of smoke on a video feed from a reconnaissance drone.

Strikes like this represent a big advance in Ukraine’s attempts to use computers to help it combat Russia’s huge army. The drone that carried it out was controlled in the final attack phase by a small onboard computer designed by the U.S.-based company Auterion. Several other companies, many of them Ukrainian, have successfully tested similar autopilot systems on the battlefield.

Now, an even bigger breakthrough looms: mass-produced automated drones. In a significant step not previously reported, Ukraine’s drone suppliers are ramping up output of robot attack drones to an industrial scale, not just prototypes.

Enabling the upshift is producers’ successful integration of inexpensive computers into sophisticated, compact systems that replicate capabilities previously found only in far pricier equipment.

“None of this is new,” said Auterion founder and chief executive Lorenz Meier. “The difference is the price.”

AI could cause ‘social ruptures’ between people who disagree on its sentience | Artificial intelligence (AI) | The Guardian

Last week, a transatlantic group of academics predicted that the dawn of consciousness in AI systems is likely by 2035 and one has now said this could result in “subcultures that view each other as making huge mistakes” about whether computer programmes are owed similar welfare rights as humans or animals.

Jonathan Birch, a professor of philosophy at the London School of Economics, said he was “worried about major societal splits”, as people differ over whether AI systems are actually capable of feelings such as pain and joy.

The debate about the consequence of sentience in AI has echoes of science fiction films, such as Steven Spielberg’s AI (2001) and Spike Jonze’s Her (2013), in which humans grapple with the feeling of AIs. AI safety bodies from the US, UK and other nations will meet tech companies this week to develop stronger safety frameworks as the technology rapidly advances.

There are already significant differences between how different countries and religions view animal sentience, such as between India, where hundreds of millions of people are vegetarian, and America, which is one of the largest consumers of meat in the world. Views on the sentience of AI could break along similar lines, while the view of theocracies, like Saudi Arabia, which is positioning itself as an AI hub, could also differ from secular states. The issue could also cause tensions within families, with people who develop close relationships with chatbots, or even AI avatars of deceased loved ones, clashing with relatives who believe that only flesh and blood creatures have consciousness.

Birch, an expert in animal sentience who has pioneered work leading to a growing number of bans on octopus farming, was a co-author of a study involving academics and AI experts from New York University, Oxford University, Stanford University and the Eleos and Anthropic AI companies that says the prospect of AI systems with their own interests and moral significance “is no longer an issue only for sci-fi or the distant future”.

They want the big tech firms developing AI to start taking it seriously by determining the sentience of their systems to assess if their models are capable of happiness and suffering, and whether they can be benefited or harmed.