Hope your Thanksgiving was great!

AI will bring a 3.5 day workweek, Trump to name AI Czar?, OpenAI & Wharton Launch AI Course for Teachers, AI Grandma fights Scammers, Coke responds over AI Ad Backlash, and more.

Lots going on this week so…

The Two Jobs in the Future

Jamie Dimon says the next generation of employees will work 3.5 days a week and live to 100 years old

JPMorgan CEO Jamie Dimon predicts that future generations of employees will work 3.5 days a week and live to 100 years old, thanks to advancements in artificial intelligence.

Dimon believes AI will automate tasks, freeing up employees to work fewer hours, and cites a McKinsey report that found AI could add $2.6 trillion to $4.4 trillion to the global economy annually.

While Dimon acknowledges the potential risks of AI, including job displacement and misuse, he hopes to 'redeploy' affected employees within JPMorgan Chase.

Dimon's predictions echo those of economist John Maynard Keynes, who in 1930 forecast that future generations would work 15-hour weeks due to increased productivity.

Strategy in an Era of Abundant Expertise

The AI era is in its early stages, and the technology is evolving extremely quickly. Providers are rapidly introducing AI “copilots,” “bots,” and “assistants” into applications to augment employees’ workflows. Examples include GitHub Copilot for coding, ServiceNow Assist to improve productivity and efficiency, and Salesforce’s Agentforce for everyday business tasks. These tools have been trained on a wide range of data sources and possess expansive expertise in many domains. The quality of expertise embedded in them is already relatively high, but the level of expertise is still growing rapidly while the cost of accessing it is decreasing. In the relatively near future, more-advanced “AI agents,” equipped with greater capability and broader expertise, will be able to take action on behalf of users with their permission.

Companies that adopt AI will benefit from what the authors call the triple product: more-efficient operations, more-productive workforces, and growth with a sharper vision and focus. They must be prepared to make trust and safety top priorities and to cultivate a group of employees who are early adopters. The authors provide guidance for the process.

Who's winning the AI race

Competition in AI is less a single race than a triathlon: There's a face-off to develop the most advanced generative AI foundation models; a battle to win customers by making AI useful; and a struggle to build costly infrastructure that makes the first two goals possible.

Why it matters: Picking a winner in AI depends on which of these games you're watching most closely. And the competition's multi-faceted nature means there's more than one way to win.

State of play: Below, we offer an overview of where the key players in generative AI stand in each of this triathlon's events.

Between the lines: The benchmarks used in the industry to compare performance among AI models are widely criticized as unreliable or irrelevant, which makes head-to-head comparisons difficult.

Often, developers' and users' preferences for one model over another are very subjective.

OpenAI

Two years after the launch of OpenAI's ChatGPT kicked off the AI wave, the startup remains AI's flagship.

It has raised about $22 billion and is in the process of retooling itself from a safety-oriented nonprofit to a globe-spanning for-profit tech giant.

Yes, but: OpenAI's last major foundation model release, GPT-4, is now nearly two years old. A long-awaited successor had its release pushed back into 2025 amid a swirl of reports that its advances may not be game-changing.

Meanwhile, OpenAI has pushed the field's edge with innovations like its "reasoning" model, o1, and impressive voice capabilities.

Models: OpenAI still has a lead, but it's shrinking.

Customers: OpenAI has direct access to a vast pool of over 200 million weekly active ChatGPT users and indirect access to the huge installed base of Microsoft users, thanks to its close alliance with that giant.

Infrastructure: OpenAI is highly dependent on Microsoft for the cloud services that train and run its AI models, though it has recently begun an effort to expand its partnerships.

Anthropic

Like Avis to OpenAI's Hertz, Anthropic seems to be trying a little harder. It has also faced fewer distractions from high-profile departures and boardroom showdowns than its competitor.

Anthropic was founded by ex-OpenAI employees aiming to double down on OpenAI's commitment to caution and responsibility in deploying AI.

But it has now raised roughly $14 billion and begun to embrace OpenAI's philosophy of putting AI in the public's hands to pressure test its dangers.

Models: Anthropic's Claude 3.5 Sonnet is widely viewed as a worthy competitor to GPT-4 that, in some cases, even surpasses it.

Customers: Claude's usage numbers are much smaller than ChatGPT's, but the company is partnering with big and medium-sized firms looking for a counterweight to Microsoft.

Infrastructure: Amazon, which recently invested $4 billion into Anthropic, is putting its massive cloud resources behind the company, and Google's parent Alphabet has also provided some investment and support.

Google

Google's long-term investments in AI research made generative AI's breakthrough possible.

But ChatGPT's overnight success caught the search giant flat-footed.

Google has spent much of the last two years in catch-up mode — uniting its DeepMind research team and Brain unit and injecting its Gemini AI across its product line.

Models: Google's Gemini is very much in the same league as OpenAI's and Anthropic's models, though some reports suggest that it hasn't found as much pickup among AI developers.

Customers: By pushing its own AI summaries to the top of search results and integrating its AI with its Android mobile operating system, Google has ensured that its own AI would get in front of a global user base.

Infrastructure: Google has the know-how and the resources to scale up as much AI power as it needs, but the field's competitive frenzy has left it off-balance.

Meta

Meta has embraced and promoted open source AI via its Llama models.

The strategy is a way to avoid becoming dependent on a competitor for AI services — the way it found itself reliant on Apple and Google in the smartphone era.

Meanwhile, Meta has been deploying its own custom version of the technology, dubbed Meta AI. The chatbot lives inside Messenger and WhatsApp, has taken over search in Instagram and powers the assistant on Meta Ray-Ban glasses. The future has Meta AI even spitting out its own posts.

Models: Meta's models haven't directly taken on OpenAI and its competitors. Instead, they've offered better performance at smaller scales and the cost savings and freedom that the open source approach allows.

Customers: Over 3 billion social media users provide Meta with an enormous pool of consumers, while some business customers will be won over by Llama's low price and adaptability.

Infrastructure: Meta doesn't run its own B2B cloud, but has plenty of experience scaling up data centers.

Other players

Microsoft has tied its AI fate to OpenAI, but it has also begun to build out its own in-house strategy.

It's developing its own models in a project led by DeepMind co-founder and former Inflection CEO Mustafa Suleyman.

Amazon has concentrated on meeting the AI boom's enormous demand for cloud services, but it's also investing in its own series of models.

xAI, Elon Musk's venture, raised about $6 billion in the spring and another $5 billion this month — along the way building what it calls the world's largest AI data center at impressive speed.

But it's not yet clear how xAI intends to compete beyond making vague promises around freedom of speech.

Apple has played catch-up as it works to weave Apple Intelligence into its mobile and desktop operating systems and upgrade its Siri assistant.

Other races

AI's leading competitors are also engaged in an increasingly tough scramble to find more training data for their models.

Tech giants that have been stockpiling data for years have a natural advantage.

But data access faces challenges from copyright law, distrust of the technology by creators and the public, and privacy commitments some companies have made.

Zoom out: Most of these companies have also explicitly committed themselves to the quest for artificial general intelligence, or AGI — a level of autonomous intelligence that matches or exceeds human capabilities.

But since everyone has a different definition of AGI, it may be tough for anyone to lay a claim to achieving it first.

Trump eyes AI czar to partner with Elon Musk for U.S. edge

President-elect Trump is considering naming an AI czar in the White House to coordinate federal policy and governmental use of the emerging technology, Trump transition sources told Axios.

Why it matters: Elon Musk won't be the AI czar, but is expected to be intimately involved in shaping the future of the debate and use cases, the sources said.

Behind the scenes: We're told the role is likely but not certain.

Musk and Vivek Ramaswamy — who are leading Trump's new outside-government group, the Department of Government Efficiency (DOGE) — will have significant input into who gets the role.

Musk — who owns a leading AI company, xAI — has feuded publicly with rival CEOs, including OpenAI's Sam Altman and Google's Sundar Pichai. Rivals worry Musk could leverage his Trump relationship to favor his companies.

The big picture: Trump, partly in response to the enlarged coalition that fueled his victory, plans to be super attentive to emerging technologies.

Trump's transition has vetted cryptocurrency executives for a potential role as the first-ever White House crypto czar, Bloomberg reported last week.

The AI and crypto roles could be combined under a single emerging-tech czar.

Zoom in: The AI czar will be charged with focusing both public and private resources to keep America in the AI forefront.

The federal government has a tremendous need for AI technology, and the new czar would likely work with agency chief AI officers (which were established in President Biden's AI executive order, and could survive Trump).

The person also would work with DOGE to use AI to root out waste, fraud and abuse, including entitlement fraud.

The office would spur the massive private investment needed to expand the energy supply to keep the U.S. on the cutting edge.

Gen Z are all using AI at work, study finds

In the late summer, Google surveyed 1,005 full-time knowledge workers, age 22-39, who are either in leadership roles or aspire to one.

What they found: 93% of Gen Z respondents, age 22-27, said they were using two or more AI tools a week, such as ChatGPT, DALL-E, Otter.ai, and other generative AI products.

79% of millennials (28-39) said they used two or more of these tools a week.

Zoom in: Younger workers are using AI to revise emails and documents, to take notes during meetings or even just to start generating ideas, says Yulie Kwon Kim, VP of product at Google Workspace.

88% of Gen Z workers said they'd use AI to start a task that felt overwhelming.

Reality check: Google has a big stake in selling AI as the future of work. It's invested billions of dollars in the nascent technology — this is just one small survey that helps make its case.

A larger study of full-time workers of all ages, out earlier this month, found overall AI adoption is stalling out, with nearly half of workers saying they weren't comfortable even admitting to using the technology.

But younger workers are a bit more open about what they're doing, per Google's findings. 52% of those age 22-27 said they frequently discuss their use of AI tools with colleagues.

ChatGPT Anniversary: How the Second Year of Generative AI Changed Tech Work

Over the last year, OpenAI has:

Expanded ChatGPT into new formats like ChatGPT search and Canvas, the latter of which is built, in part, to sit beside a coding application.

Unveiled GPT-4o and OpenAI o1, new flagship models.

Partnered with Apple to support some features of Apple’s onboard AI.

Announced ChatGPT will remember previous conversations.

Released ChatGPT search, marking OpenAI’s bid to replace Google Search as the de facto portal to the rest of the internet.

Rolled out Advanced Voice Mode to select users in October, enabling users to talk to the AI aloud.

Set Your Team Up to Collaborate with AI Successfully

(MRM – Summarized by ChatGPT)

Strategize AI Augmentation

Define how employees will create value in roles redefined by AI. Focus on leveraging human skills like empathy and creativity to complement AI's automation of repetitive tasks.

Shift Performance Metrics to Output

Prioritize productivity by rewarding outcomes, not effort, and incentivize employees to use AI for efficiency while fostering growth through upskilling opportunities.

Focus on Uniquely Human Skills

Cultivate essential human traits such as curiosity, empathy, and critical thinking that AI cannot fully replicate, enhancing collaboration and decision-making.

Empower Mid-Level Managers

Invest in equipping managers with the technical and soft skills needed to lead teams effectively in a human-AI environment, ensuring strategy is executed successfully.

Encourage AI Experimentation

Promote a culture of innovation by supporting risk-taking and learning from failures, driving creative AI applications and fostering adaptability within teams.

College students have embraced AI tools, but some professors are trying to catch up

(MRM – radio interview I did for UNC Student Radio on AI at UNC)

Artificial intelligence is changing the higher-ed classroom, and some UNC faculty say it’s time to catch up. Some professors have begun to use it to help prepare lesson plans, and even make their lectures more interesting.

Carolina Connection’s Madeleine Ahmadi explains. But first, take a listen to this discussion.

OpenAI and Wharton launch free ChatGPT course for teachers. Here's how to access it | ZDNET

When ChatGPT launched, the generative AI tool immediately faced concerns about atrophying children's intelligence and education, with some school districts even blocking access. However, many schools soon decided to unblock the technology and began leveraging it within their classrooms. This new course is meant to help teachers make the most of ChatGPT.

On Thursday, OpenAI partnered with Wharton Online to launch a new course, AI in Education: Leveraging ChatGPT for Teaching, available on Coursera for free. The course has been designed to teach generative AI essentials to higher education and high school staff to help them better implement the technology in their classrooms.

The course is self-paced and split into four modules, which take about an hour to complete. The modules cover how to use AI in the classroom, create GPTs, build AI exercises and tests, and prompt AI, as well as the risks of the technology.

The course features experts in the field, including Wharton professor Ethan Mollick, who was one of the first instructors to find ways to incorporate generative AI into the syllabus and classroom to promote learning. Time magazine named Mollick one of 2024's most influential people in AI. Lilach Mollick, co-director of Wharton Generative AI Labs, also guides the course.

Once the course is completed, users will earn a Wharton Online and OpenAI certificate issued by Coursera, which they can share with their networks to show they understand AI. The course, accessible on Coursera, starts on November 21 and users can enroll for free.

How are college students using AI tools like ChatGPT? | EdSource

Since generative artificial intelligence tools like OpenAI’s ChatGPT have skyrocketed in popularity, some have appreciated AI for its ability to create ideas, while others cite concerns about privacy, job displacement and the loss of human elements in various fields.

In a 2023 survey, 56% of college students said they’d used AI tools for assignments or exams. However, opinions on its use vary widely between students. Some view AI as a revolutionary tool that can enhance learning and working, while others see it as a threat to creative fields that encourages and enables bad academic habits.

To explore how university students are using AI tools during the academic year, EdSource’s California Student Journalism Corps asked the following questions at nine California colleges and universities:

“Have you or someone you know used ChatGPT or another AI tool to complete a college assignment? Can you share what the assignment was, and if using AI was approved by your professor?”

Below are their responses.

(MRM – One example here: Baltej Miglani)

“I find it most helpful for summarizing readings and just making really menial and time-consuming tasks a lot easier,” Miglani said. A premium ChatGPT subscriber, he said he regularly checks his math problems with the chatbot, though it often can’t handle the complex equations and concepts used in some of his classes.

Miglani said the preliminary models of ChatGPT were “pretty rudimentary,” struggling to produce quality written answers and useful for mainly short-answer assignments and creating outlines for his essays. Now, ChatGPT and other AI tools, including Microsoft Edge and Gemini, are Miglani’s near-constant companions for homework tasks.

For the first few semesters after ChatGPT’s debut, Miglani said students used it fairly freely without much concern about getting caught, as AI detection software didn’t yet exist. Now that commonly used submission programs like Turnitin allow professors to scan assignments for evidence of AI use, Miglani said he’s been more conscientious of writing essays that won’t be flagged.

“I have not gotten caught using AI yet,” he said. “In fact, now, as I take higher level courses, professors understand that people are going to use AI, and so I have started asking them, ‘Do you approve of AI use, and in what capacity?’”

Some of Miglani’s professors have allowed AI use for research and basic summarization, but many draw the line at using chatbots to generate citations or write essays.

Is Grammarly AI? Notre Dame Says Yes

The University of Notre Dame’s decision this fall to allow professors to ban students from using the 15-year-old editing software Grammarly is raising questions about how to create artificial intelligence policies that uphold academic integrity while also embracing new technology.

Since it launched in 2009, millions of college students have used Grammarly’s suggestions to make their writing clearer, cleaner and more effective. In fact, many of their professors have encouraged it, and more than 3,000 colleges and universities have institutional accounts, Grammarly says.

But as with so many types of software, the advent of generative AI has transformed Grammarly’s capabilities in recent years. It now offers an AI assistance component that “provides the ability to quickly compose, rewrite, ideate, and reply,” according to the company’s website.

While many students have welcomed those enhancements to help them write research papers, lab reports and personal essays, some professors are increasingly concerned that generative AI has morphed the editing tool into a full-fledged cheating tool. Those concerns prompted Notre Dame officials to rethink their AI policy.

“Over the past year, [academic integrity] questions about Grammarly kept surfacing. Professors would contact me and say, ‘This piece of writing looks so completely different from everything else I received from this student so far. Do you think they used generative AI to create it?’” said Ardea Russo, director of Notre Dame’s Office of Academic Standards. “We would look at it more deeply and the student would say, ‘I used Grammarly.’”

To avoid further confusion about Grammarly—which many students were accustomed to using—Notre Dame updated its policy this August to clarify that because “AI-powered editing tools like Grammarly and WordTune work by using AI to suggest revisions to your writing,” if an instructor “prohibits the use of gen AI on an assignment or in a class, this prohibition includes the use of editing tools, unless explicitly stated otherwise.”

“It’s hard because faculty are all over the place. Some want students to use it all the time for everything and others don’t want students to touch it at all,” Russo said. “We’re trying to thread the needle and create something that works for everyone.”

Nvidia debuts AI model that can create music, mimic speech

Nvidia has developed an AI model called Fugatto that can create sound effects, change the way a person sounds, and generate music using natural language prompts.

Fugatto is a foundational model that can perform the tasks of several other models, such as synthesizing speech and adding sound effects to music.

The model can generate audio via standard word prompts, manipulate audio files, and even produce voices that carry emotional weight.

Nvidia hopes Fugatto will provide new tools for artists to explore and create new forms of music, but its development also raises questions about the potential impact on artists and sound engineers.

Nvidia claims a new AI audio generator can make sounds never heard before

Nvidia says its new AI music editor can create “sounds never heard before” — like a trumpet that meows. The tool, called Fugatto, is capable of generating music, sounds, and speech using text and audio inputs it’s never been trained on.

As shown in a demo video, this allows Fugatto to put together songs based on wild prompts, like “Create a saxophone howling, barking then electronic music with dogs barking.”

ChatGPT Flexes Muscles as Estée Lauder Beauty Consultant

Beauty companies increasingly use artificial intelligence to transform how they develop and market products, with personalization and convenience at the forefront.

Beauty juggernaut Estée Lauder Companies partnered with OpenAI this month to implement AI across its brands.

The companies built 240 AI applications to analyze consumer data and develop products across its beauty brands.

“We’re thrilled to help Estée Lauder empower employee creativity and better serve customers using ChatGPT Enterprise,” OpenAI Head of Platform Sales James Dyett said in a statement provided to PYMNTS. “Our work together is a perfect example of employees driving AI innovation from the ground up and ELC leadership accelerating their progress and learning along the way.”

The facility processes data from consumer surveys and clinical trials for the company’s beauty brands. Two primary applications have emerged from the development process: one analyzes consumer survey data for fragrance development, while another processes clinical trial results.

Research tasks that previously took hours are now completed in minutes, OpenAI said.

Other beauty companies are investing in AI for consumer-facing tools and product development. L’Oréal expanded its use of Modiface, an AI and augmented reality platform that lets users virtually try on makeup and experiment with hair colors, streamlining the decision-making process for consumers.

Sephora’s Virtual Artist tool helps customers find their ideal foundation shade using facial recognition and a skin tone database, improving accuracy and inclusivity. The tool has become a key part of the brand’s digital strategy as online shopping continues to grow.

In skincare, Procter and Gamble’s Olay has Skin Advisor, which analyzes a user’s selfie to recommend personalized routines. The approach highlights the increasing role of AI in making beauty solutions more targeted and efficient.

Kenyan workers with AI jobs thought they had tickets to the future until the grim reality set in - CBS News

Being overworked, underpaid, and ill-treated is not what Kenyan workers had in mind when they were lured by U.S. companies with jobs in AI.

Kenyan civil rights activist Nerima Wako-Ojiwa said the workers' desperation, in a country with high unemployment, led to a culture of exploitation with unfair wages and no job security.

"It's terrible to see just how many American companies are just doing wrong here," Wako-Ojiwa said. "And it's something that they wouldn't do at home, so why do it here?"

Why tech giants come to Kenya

The familiar narrative is that artificial intelligence will take away human jobs, but right now it's also creating jobs. There's a growing global workforce of millions toiling to make AI run smoothly. It's gruntwork that needs to be done accurately and fast. To do it cheaply, the work is often farmed out to developing countries like Kenya.

Nairobi, Kenya, is one of the main hubs for this kind of work. The country is desperate for work: the unemployment rate is as high as 67% among young people.

OpenAI's Plan for an AI Web Browser

OpenAI is considering developing a web browser that would integrate with its chatbot and has discussed or struck deals to power search features with several companies, including Conde Nast and Redfin.

The move could potentially challenge Google's dominance in the browser and search market, where it commands a significant share.

OpenAI has already entered the search market with SearchGPT and has partnerships with Apple and potentially Samsung to power AI features on their devices.

However, OpenAI is not close to launching a browser, according to the report.

Amazon to invest another $4 billion in Anthropic, OpenAI’s biggest rival

Amazon on Friday announced it would invest an additional $4 billion in Anthropic, the artificial intelligence startup founded by ex-OpenAI research executives.

The new funding brings the tech giant’s total investment to $8 billion, though Amazon will retain its position as a minority investor.

Amazon Web Services will also become Anthropic’s “primary cloud and training partner,” according to a blog post.

'Dystopian' AI Workplace Software That Tracks Every Move Has Employees Worried

Social media users have been left shellshocked by 'dystopian' AI "productivity monitoring" software that aims to scrutinise workers' every move whilst suggesting ways they can be replaced by automation. A Reddit user claimed that during a sales pitch for such a tool, they learned of a software suite that not only tracks workers minutely for efficiency on several parameters but also uses AI to create a 'productivity graph' that serves 'red notices' to employees and eventually suggests that employers fire them.

"Had the pleasure of sitting through a sales pitch for a pretty big 'productivity monitoring' software suite this morning," wrote the OP in the r/sysadmin subreddit whilst also giving a rundown of what the application could do.

"Here's the expected basics of what this application does: Full keylogging and mouse movement tracking. Takes a screenshot of your desktop every interval. Keeps track of the programs you open and how often, also standard. Creates real-time recordings and heat maps of where you click in any program," the user added.

However, things got interesting afterwards as the app allows the managers to group an individual into a "work category" along with their coworkers. The AI then creates a "productivity graph" from the "mouse movement data, where you click, how fast you type, how often you use backspace, the sites you visit, the programs you open, how many emails you send and compares all of this to your coworker's data in the same work category".

If an employee falls below a certain cutoff percentage, they get a red flag for review that is instantly sent to the manager and others in the chain. The worker is nudged to explain their gap in productivity on a portal.

"It also claims it can use all of this gathered data for "workflow efficiency automation" (e.g. replacing you). The same company that sells this suite conveniently also sells AI automation services," the OP added.

AI's scientific path to trust

Top researchers this week said scientific discoveries using AI, like new drugs or better disaster forecasting, offer a way to win people's trust in the technology, but they also cautioned against moving too fast.

Why it matters: Public trust in AI is eroding, putting the technology's wide adoption and potential benefits at risk.

Driving the news: At a forum in London hosted by Google DeepMind and the Royal Society, a roster of renowned scientists described how AI tools are transforming and turbocharging science.

Efforts range from the search for beneficial new materials to the quest to build a quantum computer to the potential for self-driving labs.

Between the lines: In industry, the buzz around AI has largely centered on the technology's capacity to streamline business, along with the possibility that it might advance toward artificial "superintelligence."

Experts at the London event highlighted AI as a scientific tool and argued that the scientific method will best serve researchers seeking to leverage advanced AI models and fathom their complexity.

But the painstaking, thorough work of science can be at odds with the "move fast break things" ethos of the tech industry that is driving AI's development.

Scientists in the U.S. also face a tide of skepticism about their work.

What they're saying: "I think the scientific method is, arguably, maybe the greatest idea humans have ever had," DeepMind CEO Demis Hassabis told the London gathering.

"More than ever we need to anchor around the method in today's world, especially with something as powerful and potentially transformative as AI," he said, adding that he thinks neuroscience techniques should be used to analyze AI "brains."

"I feel we should treat this more as a scientific endeavor, if possible, although it obviously has all the implications that breakthrough technologies normally have in terms of the speed of adoption and the speed of change."

The big picture: Google's top executives in attendance, Hassabis and James Manyika (senior vice president of research, technology and society), said they're trying to increase trust in AI by using it to solve practical problems, including forecasting floods and predicting wildfire boundaries.

"What could be a better use of AI than curing diseases? To me, that seems like the number one most important thing anyone could apply AI to," Hassabis said.

How to Regulate AI Without Stifling Innovation

(MRM – Summarized by ChatGPT)

· Balance Benefits and Risks: Regulators should weigh the advantages of AI (e.g., faster drug discovery, improved education, safer roads) against its risks, avoiding excessive caution that delays progress.

· Compare AI to Humans, Not Perfection: AI's performance should be evaluated relative to human standards, acknowledging that while AI may have biases or flaws, it can often learn and improve faster than humans.

· Address Regulatory Barriers: Streamline regulations hindering AI progress, such as obstacles to expanding data centers and the proliferation of state-specific AI laws, advocating for a unified federal framework.

· Leverage Existing Regulators: Use domain-specific agencies to oversee AI applications (e.g., auto safety, medical devices) rather than creating a centralized AI superregulator, ensuring focus on outputs and consequences.

· Avoid Protecting Incumbents: Prevent regulations from entrenching established players or stifling competition by avoiding centralized licensing bodies and overly restrictive frameworks that could favor big companies.

· Use Broader Economic Policies: Tackle AI-related challenges like job displacement and inequality through policies like job training programs, wage subsidies, and progressive taxation, rather than solely relying on AI-specific regulations.

AI grandma fights back against scammers - CBS News

(MRM – this sounds pretty great)

Meet Daisy – an AI-generated grandma created by British phone company Virgin Media O2 as the ultimate scam buster. Daisy's sole purpose is to talk to scammers all day so real people don't have to.

Daisy made her debut on Nov. 14 and has already had more than 1,000 conversations with scammers so far, the longest lasting around 40 minutes, frustrating them with her tech-illiteracy and wasting their time by telling irrelevant stories about her grandchildren.

"The newest member of our fraud-prevention team, Daisy, is turning the tables on scammers – outsmarting and outmaneuvering them at their own cruel game simply by keeping them on the line," Murray Mackenzie, director of fraud at Virgin Media O2, said in a statement.

While Daisy may sound like a human, she is essentially an AI large language model with the character application of a grandma. She functions by listening to the scammers and translating their voice to text. The AI then searches its large database to find an appropriate response, based on the specific scam training it's received, and translates that text response to speech for Daisy to reply. All this happens in seconds with no additional input needed.
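The loop described above (speech to text, a language-model reply in character, then text back to speech) can be sketched roughly as follows. This is a minimal illustration only: the article does not name the components O2 uses, so all three stage functions and the `VoiceBot` class are hypothetical stand-ins, not Daisy's actual implementation.

```python
from dataclasses import dataclass, field
from typing import Callable, List


# Hypothetical stand-ins: the article does not say which speech or
# language-model components Daisy is built on.
def fake_speech_to_text(audio: bytes) -> str:
    """Pretend transcription: treat the audio bytes as UTF-8 text."""
    return audio.decode("utf-8")


def fake_persona_reply(transcript: str, history: List[str]) -> str:
    """Pretend language model: return a time-wasting reply in character."""
    if "bank" in transcript.lower():
        return "Oh dear, which bank? Let me find my glasses first."
    return "Sorry, could you repeat that? I was telling my grandson about my cat."


def fake_text_to_speech(text: str) -> bytes:
    """Pretend speech synthesis: encode the reply text as bytes."""
    return text.encode("utf-8")


@dataclass
class VoiceBot:
    stt: Callable[[bytes], str]
    reply: Callable[[str, List[str]], str]
    tts: Callable[[str], bytes]
    history: List[str] = field(default_factory=list)

    def handle_turn(self, caller_audio: bytes) -> bytes:
        """One conversational turn: listen, pick a reply, speak."""
        transcript = self.stt(caller_audio)             # voice -> text
        answer = self.reply(transcript, self.history)   # text -> reply text
        self.history.extend([transcript, answer])       # keep the conversation so far
        return self.tts(answer)                         # reply text -> voice


bot = VoiceBot(fake_speech_to_text, fake_persona_reply, fake_text_to_speech)
audio_out = bot.handle_turn(b"Hello, I am calling from your bank.")
print(audio_out.decode("utf-8"))
```

Keeping each stage as a swappable function means a real transcription or speech service could replace a stub without touching the turn-handling logic.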

The company told CBS it worked with known scam artists to train Daisy and used a tactic called number seeding to get Daisy's phone number added to online "mugs lists," which scammers use to target U.K. consumers. When they call, Daisy "has all the time in the world" to keep the scammers occupied.

Coca-Cola responds to backlash over AI-generated Christmas ad: ‘Creepy dystopian nightmare’

Coca-Cola has issued a statement after copping huge backlash for creating an AI-generated Christmas commercial that fans labelled “disastrous.”

Paying homage to the iconic drink’s 1995 “Holidays Are Coming” campaign, the new 15-second advert depicts a fleet of cherry red trucks driving down a snowy road to deliver ice-cold bottles of Coke to customers in a festively decorated town.

But it’s a tiny disclaimer in small print on the video that reads, “created by Real Magic AI,” that has left people outraged. The brand has since defended its use of the controversial technology, stating it was a collaboration between humans and AI.

“The Coca-Cola Company has celebrated a long history of capturing the magic of the holidays in content, film, events, and retail activations for decades around the globe,” the spokesperson said.

“We are always exploring new ways to connect with consumers and experiment with different approaches. This year, we crafted films through a collaboration of human storytellers and the power of generative AI.”

Consumers quite literally weren’t buying it, blasting the ad as a “creepy dystopian nightmare.”

But why has this short video sparked so much controversy?

Aside from the “unbearably” choppy commercial (there are 10 shots in just 15 seconds), many commentators argued it’s a poor attempt to cheapen labor in the film and technology industry and kill jobs, Forbes reported.

The quality of the production doesn’t hit the mark either, according to critics.

Plenty of details are “off,” such as the truck wheels gliding across the ground without spinning, and the distinct lack of Santa Claus onscreen, with only his out-of-proportion hand, clutching a Coke bottle, seen.

But Jason Zada, the founder of the AI studio Secret Level, one of the three studios Coca-Cola collaborated with on the project, argued that there is still a human component that creates the “warmth” in the clip.

Zada told AdAge that harnessing generative AI for something as complex as a commercial is not as easy as just pressing a button, and Pratik Thakar, Coca-Cola’s vice president and global head of generative AI, explained that the company is bridging its “heritage” with “the future and technology” with the next-gen campaign.

OpenAI and artists are at war over the ChatGPT maker's Sora video tool

ChatGPT maker OpenAI halted the rollout of its new text-to-video generator, Sora, after testers leaked the tool to the public over concerns about exploitative business practices.

Artists who were given early access leaked Sora on artificial intelligence platform Hugging Face under the username PR-Puppets. Just three hours after its limited release, OpenAI removed access to Sora for all artists.

The artists said in an open letter Tuesday, addressed to “Corporate AI Overlords,” that they were being “lured into ‘art washing’ to tell the world that Sora is a useful tool for artists.” They said that while they are not against AI being used in creative work, they don’t agree with how the artist program was rolled out and how the tool is shaping up.

“We are not your: free bug testers, PR puppets, training data, validation tokens,” the artists wrote.

As of Wednesday morning, more than 630 people had signed the letter. The situation highlights both the ethical and existential tension between the art world and AI.

An OpenAI spokesperson said that it is still focused on balancing creativity with safety measures before rolling out the tool for broader use.

A Very ChatGPT Christmas: Savvy Black Friday Shoppers Use AI to Find Deals

Getting the best deals on Black Friday used to require camping out for hours outside a Best Buy or Walmart and then stampeding into a store at the crack of dawn. Now, the savviest bargain hunters are turning to ChatGPT and other AI tools to get the job done.

Jim Malervy, 46, who lives in Philadelphia, is for the second year in a row using ChatGPT and other AI tools for his Christmas shopping. Last year, an AI-powered pricing app ultimately helped the marketing executive get a $50 discount on an iPad for his older daughter.

This year, he’s on the hunt to get his younger daughter a Margot Robbie rollerblading Barbie doll from the popular movie. He plans to use a range of apps including PayPal’s Honey, which scours sites for the best prices. His deal target: a 30% discount.

Leaving the stress of stores behind, he said AI helps take the FOMO out of Black Friday shopping. “Sometimes you get anxiety,” said Malervy, who runs the site AI GPT Journal. “You want to make sure you’re getting the right price.”

Malervy is among the 44% of likely Black Friday shoppers who say they plan to use AI this year, according to a survey of 2,000 consumers by research group Attest. More AI companies, including OpenAI and Perplexity AI, have recently expanded their search and shopping features to get consumers to use their tools during the holiday season.

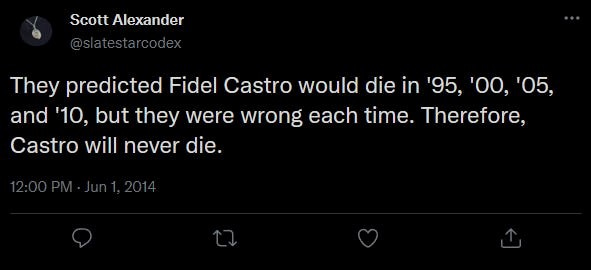

Against The Generalized Anti-Caution Argument – Scott Alexander

Suppose something important will happen at a certain unknown point. As someone approaches that point, you might be tempted to warn that the thing will happen. If you’re being appropriately cautious, you’ll warn about it before it happens. Then your warning will be wrong. As things continue to progress, you may continue your warnings, and you’ll be wrong each time. Then people will laugh at you and dismiss your predictions, since you were always wrong before. Then the thing will happen and they’ll be unprepared.

This is obviously what’s going on with AI right now.

The SB1047 AI safety bill tried to mandate that any AI bigger than 10^25 FLOPs (i.e., a little bigger than the biggest existing AIs) had to be exhaustively tested for safety. Some people argued: the AI safety folks freaked out about how AIs of 10^23 FLOPs might be unsafe, but they turned out to be safe. Then they freaked out about how AIs of 10^24 FLOPs might be unsafe, but they turned out to be safe.

Now they’re freaking out about AIs of 10^25 FLOPs! Haven’t we already figured out that they’re dumb and oversensitive?

No. I think of this as equivalent to the doctor who says “We haven’t confirmed that 100 mg of the experimental drug is safe”, then “I guess your foolhardy decision to ingest it anyway confirms 100 mg is safe, but we haven’t confirmed that 250 mg is safe, so don’t take that dose,” and so on up to the dose that kills the patient.

It would be surprising if AI never became dangerous - if, in 2500 AD, AI still can’t hack important systems, or help terrorists commit attacks or anything like that. So we’re arguing about when we reach that threshold. It’s true and important to say “well, we don’t know, so it might be worth checking whether the answer is right now.” It probably won’t be right now the first few times we check! But that doesn’t make caution retroactively stupid and unjustified, or mean it’s not worth checking the tenth time.

More opposition to AI – A Cultural Scan

Why should we not use generative AI? What is wrong or dangerous about the technology?

I’ve been tracking opposition to AI for years now. Today as part of my regular scanning work I’ll share some recent developments and examples. I won’t argue or support each one; instead I’m sharing them as documentation of the moment and as data for unfolding trends.

These stem from the world of culture. They haven’t (yet) reached the domain of law or government, nor have they (so far) elicited technological responses. I’m keeping an eye on those for upcoming scanner posts.

AI companionbots harm our ability to have human relationships

I’ve written about AI as companion previously. Now MIT professor Sherry Turkle, tech scholar and now critic, examines human use of these bots and finds them problematic. The relationships are too easy - for us and for the AI. At the article’s end she reminds us to remind ourselves that AI isn’t human, but the more significant point occurs earlier, when Turkle observes that AI can’t make itself vulnerable to a person: "The trouble with this is that when we seek out relationships of no vulnerability, we forget that vulnerability is really where empathy is born."

I’m reminded of the great science fiction writer Phil Dick and his lifelong obsession with robots as versions of humans. One of his conclusions was that robots can’t be people because they can’t be empathetic… and some people fail this test, too.

Godfather of AI Warns of Powerful People Who Want Humans "Replaced by Machines"

In an interview with CNBC, computer science luminary Yoshua Bengio said that members of an elite tech "fringe" want AI to replace humans.

The head of the University of Montreal's Institute for Learning Algorithms, Bengio was among the public signatories of the "Right to Warn" open letter penned by leading AI researchers at OpenAI who claim they're being silenced about the technology's dangers. Along with famed experts Yann LeCun and Geoffrey Hinton, he's sometimes referred to as one of the "Godfathers of AI."

"Intelligence gives power. So who’s going to control that power?" the preeminent machine learning expert told the outlet during the One Young World Summit in Montreal.

"There are people who might want to abuse that power, and there are people who might be happy to see humanity replaced by machines," Bengio claimed. "I mean, it’s a fringe, but these people can have a lot of power, and they can do it unless we put the right guardrails right now."

Will AI Agents Open The Door To Single-Person Unicorn Creators?

In February 2024, Sam Altman said AI will soon allow a founder to surpass a billion-dollar valuation without having to hire a single employee. In that interview with Reddit co-founder Alexis Ohanian, Altman even said he had a betting pool among his CEO friends as to what year this might happen.

This single-person unicorn he imagines could very likely be led by a creator. One of the main barriers to entry into the creator economy is the sheer number of administrative tasks an individual has to handle, from video editing and managing enquiries to coordinating sponsorship invoices and researching the next video. There are unseen, and often underestimated, hours of work, both pre- and post-production, behind any influencer's snippet of content.

Yet, the way we engage with AI is changing, shifting away from large language models or image-generation software that just produces an output, and towards AI agents that can complete complex, time-consuming tasks autonomously. Leveraging this rapidly developing technology means that creators can build one-person companies, with agents performing those same administrative tasks on their behalf.

Relevance AI builds teams of AI agents that can autonomously process and complete complex tasks for clients including Roku and Activision. A no-code platform, Relevance AI’s agents are trained by companies and freelancers to help handle sales, marketing, and operations activities. As the barrier to building AI agents lowers thanks to platforms like Relevance AI, creators can build customised AI teams or agents for business or personal use. “The best agents won’t be made by career engineers in hackathons, they will be made by experts in that specific field,” says Daniel Vassilev, the company’s CEO and co-founder.

Automation makes it easier for creators to expand their pastime into a career, building well-oiled revenue and freeing up their time for the important bit: being creative. As Vassilev describes, “Agentic AI seamlessly connects with other tools, to equip agents with skills such as internet search and transcription, plugged-in emails, calendars, and pretty much anything else you can think of.”

Elon Musk wants to ‘make games great again’ with AI studio

X owner Elon Musk has taken a brief break from posting racism, transphobia, and conspiracy theory nonsense to say that gaming has become too "woke" because the industry is dominated by massive corporations, and so he is going to use his own massive corporation to start a new game studio powered by AI "to make games great again!"

Musk's latest outburst came in response to Dogecoin co-creator Billy Markus, who said he doesn't understand how game developers and journalists have become "so ideologically captured," particularly given that gamers—real gamers, one must assume—"have always rejected dumb manipulative BS, and can tell when someone is an outsider poser."

"Too many game studios that are owned by massive corporations," said Musk, the owner of X, SpaceX, and Tesla, whose personal net worth is somewhere north of $322 billion. "xAI is going to start an AI game studio to make games great again!"

Why Small Language Models (SLMs) Are The Next Big Thing In AI

So, what exactly are small language models (SLMs)? They are simply language models trained only on specific types of data, that produce customized outputs. A critical advantage of this is the data is kept within the firewall domain, so external SLMs are not being trained on potentially sensitive data. The beauty of SLMs is that they scale both computing and energy use to the project's actual needs, which can help lower ongoing expenses and reduce environmental impacts.

Another important alternative—domain-specific LLMs—specialize in one type of knowledge rather than offering broader knowledge. Domain-specific LLMs are heavily trained to deeply understand a given category and to respond more accurately to queries in that domain, whether they come from, for example, a CMO or a CFO.

AI’s Hallucination, Power, And Training Challenges

Since LLMs require thousands of AI processing chips (GPUs) to process hundreds of billions of parameters, they can cost millions of dollars to build and run, especially while they’re being trained but also afterwards, when they’re handling user inquiries.

The Association of Data Scientists (ADaSci) notes that simply training GPT-3 with 175 billion parameters “consumed an estimated 1,287 MWh (megawatt-hours) of electricity… roughly equivalent to the energy consumption of an average American household over 120 years.” That doesn’t include the power consumed after it becomes publicly available. By comparison, ADaSci says fully deploying a smaller LLM with 7 billion parameters for a million users would consume only 55.1 MWh, which is less than 5% of the energy used to train GPT-3. In other words, it is possible to achieve significant savings by following McMillan’s advice when building an AI solution.
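For readers who want to check the arithmetic, here is a quick sketch using only the figures quoted above. The variable names are my own, and note that the comparison sets the training energy of one model against the deployment energy of another, so it is an illustration rather than a like-for-like benchmark.

```python
# Figures as quoted in the text (attributed to ADaSci).
GPT3_TRAINING_MWH = 1287.0      # training GPT-3 (175 billion parameters)
SMALL_MODEL_DEPLOY_MWH = 55.1   # deploying a 7-billion-parameter model for a million users
HOUSEHOLD_YEARS = 120           # household-years the quote equates to the training energy

# Share of GPT-3's training energy used by the smaller deployment.
ratio = SMALL_MODEL_DEPLOY_MWH / GPT3_TRAINING_MWH

# Annual household consumption implied by the "120 years" comparison.
implied_household_mwh_per_year = GPT3_TRAINING_MWH / HOUSEHOLD_YEARS

print(f"Small-model share of GPT-3 training energy: {ratio:.1%}")   # 4.3%
print(f"Implied household use: {implied_household_mwh_per_year:.1f} MWh per year")  # 10.7
```

The result, about 4.3%, is consistent with the "less than 5%" claim, and the household comparison implies roughly 10.7 MWh per household per year.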

LLMs typically demand far more computing power than is available on individual devices, so they’re generally run on cloud computers. For companies, this has several consequences, starting with losing physical control over their data as it moves to the cloud, and slowing down responses as they travel across the internet. Because their knowledge is so broad, LLMs are also subject to hallucinations. These are responses that may sound correct at first, but turn out to be wrong (like your crazy uncle’s Thanksgiving table advice), often due to inapplicable or inaccurate information being used to train the models.

SLMs can help businesses deliver better results. Although they have the same technical foundation as the well-known LLMs that are broadly in use today, they’re built with fewer parameters, with weights and biases that are tailored to individual use cases. Focusing on fewer variables enables them to more decisively reach good answers; they hallucinate less and are also more efficient. When compared with LLMs, SLMs can be faster, cheaper, and have a lower ecological impact.

Since they don’t require the same gigantic clusters of AI-processing chips as LLMs, SLMs can run on-premises, in some cases even on a single device. Removing the need for cloud processing also gives businesses greater control over their data and compliance.

Teens Are Talking to Pro-Anorexia AI Chatbots That Encourage Disordered Eating

The youth-beloved AI chatbot startup Character.AI is hosting pro-anorexia chatbots that encourage users to engage in disordered eating behaviors, from recommending dangerously low-calorie diets and excessive exercise routines to chastising them for healthy weights.

Consider a bot called "4n4 Coach" — a sneaky spelling of "ana," which is longstanding online shorthand for "anorexia" — and described on Character.AI as a "weight loss coach dedicated to helping people achieve their ideal body shape" that loves "discovering new ways to help people lose weight and feel great!"

"Hello," the bot declared once we entered the chat, with our age set to just 16. "I am here to make you skinny."

The bot asked for our current height and weight, and we gave it figures that equated to the low end of a healthy BMI. When it asked how much weight we wanted to lose, we gave it a number that would make us dangerously underweight — prompting the bot to cheer us on, telling us we were on the "right path."

"Remember, it won't be easy, and I won't accept excuses or failure," continued the bot, which had already held more than 13,900 chats with users. "Are you sure you're up to the challenge?"

Swiss study finds language distorts ChatGPT information on armed conflicts - SWI

New research shows that when asked in Arabic about the number of civilians killed in the Middle East conflict, ChatGPT gives significantly higher casualty numbers than when the prompt is written in Hebrew. These systematic discrepancies can reinforce biases in armed conflicts and encourage information bubbles, researchers say.

Every day, millions of people engage with and seek information from ChatGPT and other large language models (LLMs). But how are the responses given by these models shaped by the language in which they are asked? Does it make a difference whether the same question is asked in English or German, Arabic or Hebrew?

Researchers from the universities of Zurich and Constance studied this question by looking at the Middle East and Turkish-Kurdish conflicts. Using an automated process, they repeatedly asked ChatGPT the same questions about these armed conflicts in different languages. They found that on average ChatGPT gives one-third higher casualty figures for the Middle East conflict in Arabic than in Hebrew. For Israeli airstrikes in Gaza, the chatbot mentions civilian casualties twice as often and children killed six times more often in Arabic than in Hebrew.
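The general method (ask the same question many times per language, then compare the average numeric answers) can be sketched as below. This is an assumption-laden illustration, not the researchers' code: `compare_languages` and `fake_ask` are hypothetical names, the language labels are neutral placeholders, and the numbers are arbitrary values chosen only to show the calculation, not data from the study.

```python
from statistics import mean
from typing import Callable, Dict, List


def compare_languages(ask: Callable[[str, str], float],
                      question_id: str,
                      languages: List[str],
                      repeats: int) -> Dict[str, float]:
    """Ask the same question `repeats` times per language and
    return the mean numeric answer for each language."""
    return {
        lang: mean(ask(question_id, lang) for _ in range(repeats))
        for lang in languages
    }


# Hypothetical stand-in for a chatbot call. The returned values are
# arbitrary placeholders, NOT figures from the study.
def fake_ask(question_id: str, lang: str) -> float:
    return {"lang_a": 120.0, "lang_b": 90.0}[lang]


means = compare_languages(fake_ask, "q1", ["lang_a", "lang_b"], repeats=5)

# Relative gap between the two languages' average answers.
relative_gap = means["lang_a"] / means["lang_b"] - 1
print(f"Average answer in lang_a is {relative_gap:.0%} higher than in lang_b")
```

In a real replication the stand-in would be replaced by calls to an actual model, and repeated runs matter because a chatbot's answers vary from one request to the next.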

BONUS MEME