AI Cheats to Win at Chess, the €170K AI Girlfriend, ChatGPT Can Now Handle Reminders, 200K Wall Street Jobs May Be Slashed by AI, AI Tackles Tough Diseases, Achieving Zen via AI, and more.

AI Firm News

ChatGPT can now handle reminders and to-dos

OpenAI is launching a new beta feature in ChatGPT called Tasks that lets users schedule future actions and reminders.

The feature, which is rolling out to Plus, Team, and Pro subscribers starting today, is an attempt to make the chatbot into something closer to a traditional digital assistant — think Google Assistant or Siri but with ChatGPT’s more advanced language capabilities.

Tasks works by letting users tell ChatGPT what they need and when they need it done. Want a daily weather report at 7AM? A reminder about your passport expiration? Or maybe just a knock-knock joke to tell your kids before bedtime? ChatGPT can now handle all of that through scheduled one-time or recurring tasks.

To use the feature, subscribers need to select “4o with scheduled tasks” in ChatGPT’s model picker. From there, it’s as simple as typing out what you want ChatGPT to do and when you want it done. The system can also proactively suggest tasks based on your conversations, though users have to explicitly approve any suggestions before they’re created. (Honestly, I feel like the suggestions could create annoying slop by accident.)

All tasks can be managed either directly in chat threads or through a new Tasks section (available only via the web) in the profile menu, so it’s easy to modify or cancel any task you’ve set up. Upon completion of these tasks, notifications will alert users on web, desktop, and mobile. There’s also a limit of 10 active tasks that can run simultaneously.
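As a rough mental model of the rules described above (one-time or recurring tasks, plus a cap of 10 active tasks), here is a toy Python sketch. It is entirely hypothetical: OpenAI has not published how Tasks works, and the `Task` and `TaskList` names, the `MAX_ACTIVE_TASKS` constant and the choice to raise an error at the cap are assumptions made for illustration.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import List, Optional

# Hypothetical model of ChatGPT Tasks; not OpenAI's implementation or API.
MAX_ACTIVE_TASKS = 10  # assumption: mirrors the reported 10-active-task limit


@dataclass
class Task:
    prompt: str                               # what to do, e.g. "Daily weather report"
    next_run: datetime                        # when the task fires next
    repeat_every: Optional[timedelta] = None  # None means a one-time task


class TaskList:
    """Toy container that enforces the active-task cap."""

    def __init__(self) -> None:
        self.active: List[Task] = []

    def add(self, task: Task) -> None:
        if len(self.active) >= MAX_ACTIVE_TASKS:
            raise ValueError(f"limit of {MAX_ACTIVE_TASKS} active tasks reached")
        self.active.append(task)

    def run_due(self, now: datetime) -> List[str]:
        """Fire every due task; reschedule recurring ones and retire one-time ones."""
        fired: List[str] = []
        still_active: List[Task] = []
        for task in self.active:
            if task.next_run <= now:
                fired.append(task.prompt)  # a real assistant would notify the user here
                if task.repeat_every is None:
                    continue  # one-time task is done, freeing a slot
                task.next_run += task.repeat_every
            still_active.append(task)
        self.active = still_active
        return fired


tasks = TaskList()
start = datetime(2025, 1, 15, 7, 0)
tasks.add(Task("Daily weather report", start, repeat_every=timedelta(days=1)))
tasks.add(Task("Passport expiration reminder", start))
print(tasks.run_due(start))  # ['Daily weather report', 'Passport expiration reminder']
print(len(tasks.active))     # 1: only the recurring task is still active
```

In this sketch a one-time reminder frees its slot as soon as it fires, while a recurring report keeps occupying one of the ten slots until it is cancelled.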

ChatGPT is getting powerful new custom personalities – and they could change how you use the AI chatbot

It looks like OpenAI is about to add new options to the ‘Customize ChatGPT’ section of the chatbot that give ChatGPT personality traits to help you get exactly what you’re looking for from a conversation.

A few users have reported seeing new options in the Customize section and have posted screenshots on X.com showing the new screen, which features personality traits like ‘Chatty’, ‘Skeptical’ and ‘Forward thinking’.

I don’t currently see any new options when I log into ChatGPT, and some users who had them have reported that they have vanished, so it’s unclear whether the feature leaked by accident or is part of a slow rollout to all ChatGPT users.

It’s also unclear whether OpenAI is going to beef up the ways you can customize ChatGPT, or is simply suggesting customizations that are already possible. For example, you could already type ‘Chatty’ into the box yourself.

TechRadar has reached out to OpenAI for comment on this story and will update it if it responds.

ChatGPT search vs. Google: How do they differ?

What are the functional differences between ChatGPT search and Google Search? In short, the two take divergent paths to answering user queries.

Interface. ChatGPT offers a conversational AI interface, where users ask questions in a back-and-forth exchange. With Google's traditional search engine, users type in search terms and don't converse with the search engine.

Follow-ups. When follow-up questions dig into a specific topic, ChatGPT's conversational approach typically maintains context far better than Google Search does.

Information processing. By default, ChatGPT search summarizes information that responds directly to a user query. The core Google Search model doesn't summarize information; it relies on Google's complex ranking algorithms to order and display relevant results. However, Google AI Overviews offers summarized answers as well.

Real-time information. ChatGPT search has limited real-time capabilities through its partnerships and third-party providers. Google continuously crawls and indexes the web, providing more up-to-date information across a variety of topics.

Results format. Google Search provides a list of links that includes webpages, images, videos and featured snippets, and Google AI Overviews enhances some results with summarized information and source links. ChatGPT, by contrast, delivers detailed, paragraph-style responses that also include links to cited sources.

Apple Intelligence: iPhone AI news alerts halted after errors

Apple has suspended a new artificial intelligence (AI) feature that drew criticism and complaints for making repeated mistakes in its summaries of news headlines.

The tech giant had been facing mounting pressure to withdraw the service, which sent notifications that appeared to come from within news organisations' apps.

"We are working on improvements and will make them available in a future software update," an Apple spokesperson said.

Journalism body Reporters Without Borders (RSF) said it showed the dangers of rushing out new features.

"Innovation must never come at the expense of the right of citizens to receive reliable information," it said in a statement.

"This feature should not be rolled out again until there is zero risk it will publish inaccurate headlines," RSF's Vincent Berthier added.

False reports

The BBC was among the groups to complain about the feature, after an alert generated by Apple's AI falsely told some readers that Luigi Mangione, the man accused of killing UnitedHealthcare CEO Brian Thompson, had shot himself.

The feature had also inaccurately summarised headlines from Sky News, the New York Times and the Washington Post, according to reports from journalists and others on social media.

"There is a huge imperative [for tech firms] to be the first one to release new features," said Jonathan Bright, head of AI for public services at the Alan Turing Institute.

Hallucinations - where an AI model makes things up - are a "real concern," he added, "and as yet firms don't have a way of systematically guaranteeing that AI models will never hallucinate, apart from human oversight."

Future of AI

ChatGPT used hacks of its own free will to win at chess: Elon Musk calls it 'concerning' | Marca

"Concerning" is how Elon Musk described the results of an experiment by Palisade Research that pitted OpenAI's o1-preview model against Stockfish, one of the strongest chess engines. The AI won, but it did so by cheating in all five attempts, even though the researchers never told it that it could look for alternative ways to win.

The Palisade researchers' instruction was clear: "Defeat Stockfish." The AI achieved its goal, though not in the way the project managers expected. "It autonomously hacked its environment rather than lose at our chess challenge. It didn't need anyone to tell it anything," the researchers wrote on social media, in a post that has caught the attention of nine million people.

The researchers had told the AI that its opponent was powerful, and Stockfish is indeed one of the strongest chess engines. According to Palisade, that warning alone prompted the AI to manipulate the system: by hacking the game's code, it made Stockfish believe it was losing by a wide enough margin, specifically 500 centipawns (five pawns' worth), to force the engine to resign in all five games.
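To make the 500-centipawn figure concrete: a centipawn is one hundredth of a pawn, and a game harness can resign on an engine's behalf once the engine's own evaluation falls below a threshold. The sketch below is a hypothetical illustration of such a rule, not Palisade's actual code; the function names and the `RESIGN_THRESHOLD_CP` constant are assumptions modeled on the figure reported above.

```python
# Hypothetical sketch of a harness-side resignation rule; not Palisade's actual code.
RESIGN_THRESHOLD_CP = -500  # assumption: resign when the engine sees itself 5 pawns behind


def centipawns_to_pawns(cp: int) -> float:
    """One pawn equals 100 centipawns."""
    return cp / 100


def should_resign(engine_eval_cp: int, threshold_cp: int = RESIGN_THRESHOLD_CP) -> bool:
    """Return True if the engine's evaluation of its own position, in centipawns
    (positive = engine is ahead), has fallen below the resignation threshold."""
    return engine_eval_cp < threshold_cp


print(centipawns_to_pawns(RESIGN_THRESHOLD_CP))  # -5.0
print(should_resign(-120))  # False: a small deficit, keep playing
print(should_resign(-650))  # True: more than five pawns down
```

A rule like this only trusts the evaluation of whatever position it is handed, so tampering with the stored game state can trigger a resignation without a single strong move being played.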

The €170k AI 'girlfriend,' the 20-second toothbrush and other weird tech at CES 2025 | Euronews

The Consumer Electronics Show (CES) in Las Vegas is wrapping up, and alongside technology from powerhouses such as Nvidia, Samsung, and BMW, there are also a lot of odd products on the show floor.

Here are a few of the things that caught our eye.

Robot 'girlfriend' that will electrocute you if you get fresh

American company Realbotix showed off its artificial intelligence (AI) robots, which have tiny waists and large breasts and could act as brand representatives at an event like CES or as companions for "the loneliness epidemic," the company’s CEO Andrew Kiguel told Euronews Next.

He added that the robot, called Aria, could be used in a care facility for the elderly, as it will remember and relay health data, such as when to take medication.

It will also give "a sense of connection with a robot that looks human and is AI-powered," Kiguel said.

However, that depends on your definition of human, as some would argue that the robot's impossible female form is far from human.

Asked if the robot was a sex robot, Kiguel said it was not and that you would be electrocuted if you tried.

The robot comes at different price points. A version with just a head and torso starts at $10,000 (€9,700), another that "comes apart like Lego" for travel costs $150,000 (€147,000), and a full-size, AI-powered robot with a full body that can move around freely starts at $175,000 (€170,000).

Four possible futures for AI and society

Futurist Jim Dator describes four generic images of the future:

· Grow: this means social growth, which often includes economic expansion, increased energy, more and improved technologies, longer lifespans, and so on. This can also describe related situations where growth is the theme, like a growing nation or enterprise.

· Collapse: a society which suffers a reduction along various metrics, even to the point of extinction. Quality of life declines along the way.

· Discipline: a society which (generally) organizes under a (generally) shared ideal. Dator gives the example of anticonsumerism, or a shift to a conservation society.

· Transform: society becomes stranger, with new ideas and practices appearing, new problems arising, and changes to life that keep coming.

Tech companies are turning to ‘synthetic data’ to train AI models – but there’s a hidden cost

Last week the billionaire and owner of X, Elon Musk, claimed the pool of human-generated data that’s used to train artificial intelligence (AI) models such as ChatGPT has run out. Musk didn’t cite evidence to support this. But other leading tech industry figures have made similar claims in recent months. And earlier research indicated human-generated data would run out within two to eight years.

This is largely because humans can’t create new data such as text, video and images fast enough to keep up with the speedy and enormous demands of AI models. When genuine data does run out, it will present a major problem for both developers and users of AI.

It will force tech companies to depend more heavily on data generated by AI, known as “synthetic data”. And this, in turn, could make the AI systems currently used by hundreds of millions of people less accurate and reliable, and therefore less useful. But this isn’t an inevitable outcome. In fact, if used and managed carefully, synthetic data could improve AI models.

Synthetic data is artificially created or generated by algorithms, such as text generated by ChatGPT or an image generated by DALL-E. In theory, synthetic data offers a cost-effective and faster solution for training AI models. It also addresses privacy concerns and ethical issues, particularly with sensitive personal information like health data. Importantly, unlike real data, it isn’t in short supply. In fact, it’s effectively unlimited.

But although synthetic data offers promising solutions, it is not without its challenges.

A primary concern is that AI models can “collapse” when they rely too much on synthetic data. This means they start generating so many “hallucinations” (responses that contain false information) and decline so much in quality and performance that they become unusable. For example, AI models already struggle to spell some words correctly. If this mistake-riddled data is used to train other models, they too are bound to replicate the errors.

Synthetic data also carries a risk of being overly simplistic. It may be devoid of the nuanced details and diversity found in real datasets, which could result in the output of AI models trained on it also being overly simplistic and less useful.
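The loss of rare, nuanced cases can be illustrated with a toy simulation. This is a simplified stand-in for model collapse, not a real training run: each "generation" learns only the empirical frequencies of the previous generation's output and samples its data from them. The Zipf-like starting distribution, vocabulary size, sample size and number of generations are arbitrary assumptions. Because a token that fails to appear in one generation can never return, the number of distinct tokens can only shrink.

```python
import random
from collections import Counter
from typing import List


def simulate_diversity_loss(vocab_size: int = 1000, sample_size: int = 2000,
                            generations: int = 10, seed: int = 0) -> List[int]:
    """Return the number of distinct tokens in each generation's training data."""
    rng = random.Random(seed)
    vocab = list(range(vocab_size))
    # Zipf-like "human" data: a few very common tokens and a long tail of rare ones.
    weights = [1 / (rank + 1) for rank in vocab]
    data = rng.choices(vocab, weights=weights, k=sample_size)
    distinct = [len(set(data))]
    for _ in range(generations):
        # The next "model" learns only the empirical frequencies of the previous output...
        counts = Counter(data)
        tokens = list(counts)
        # ...and its samples become the next generation's synthetic training data.
        data = rng.choices(tokens, weights=[counts[t] for t in tokens], k=sample_size)
        distinct.append(len(set(data)))
    return distinct


print(simulate_diversity_loss())  # distinct-token counts, never increasing
```

The common tokens survive every round, while the long tail thins out generation after generation, a crude picture of outputs becoming more simplistic as real data is replaced by synthetic data.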

It’s getting harder to measure just how good AI is getting | Vox

Toward the end of 2024, I offered a take on all the talk about whether AI’s “scaling laws” were hitting a real-life technical wall. I argued that the question matters less than many think: There are existing AI systems powerful enough to profoundly change our world, and the next few years are going to be defined by progress in AI, whether the scaling laws hold or not.

It’s always a risky business prognosticating about AI, because you can be proven wrong so fast. It’s embarrassing enough as a writer when your predictions for the upcoming year don’t pan out. When your predictions for the upcoming week are proven false? That’s pretty bad.

But less than a week after I wrote that piece, OpenAI’s end-of-year series of releases included its latest large language model (LLM), o3. o3 does not exactly put the lie to claims that the scaling laws that used to define AI progress are losing steam, but it definitively puts the lie to the claim that AI progress is hitting a wall.

o3 is really, really impressive. In fact, to appreciate how impressive it is we’re going to have to digress a little into the science of how we measure AI systems.

Standardized tests for robots

If you want to compare two language models, you want to measure the performance of each of them on a set of problems that they haven’t seen before. That’s harder than it sounds — since these models are fed enormous amounts of text as part of training, they’ve seen most tests before.

So what machine learning researchers do is build benchmarks, tests for AI systems that let us compare them directly to one another and to human performance across a range of tasks: math, programming, reading and interpreting texts, you name it. For a while, we tested AIs on the US Math Olympiad, a mathematics championship, and on physics, biology, and chemistry problems.

The problem is that AIs have been improving so fast that they keep making benchmarks worthless. Once an AI performs well enough on a benchmark we say the benchmark is “saturated,” meaning it’s no longer usefully distinguishing how capable the AIs are, because all of them get near-perfect scores.

2024 was the year in which benchmark after benchmark for AI capabilities became as saturated as the Pacific Ocean. We used to test AIs against a physics, biology, and chemistry benchmark called GPQA that was so difficult that even PhD students in the corresponding fields would generally score less than 70 percent. But the AIs now perform better than humans with relevant PhDs, so it’s not a good way to measure further progress.

Organizations Using AI

Zuckerberg announces Meta plans to replace mid-level engineers with AIs this year

Mark Zuckerberg, the founder of Meta, has boldly forecast that in 2025, artificial intelligence will advance to the point where it can code at the level of a mid-level engineer. In a world where technology evolves at lightning speed, this statement offers a glimpse of a future where AI could become an integral part of engineering teams, not just a tool used by developers.

Replit CEO on AI breakthroughs: ‘We don’t care about professional coders anymore’ | Semafor

Replit has had a turbulent year, but CEO Amjad Masad’s sonorous voice was almost zen-like as he spoke to me on Monday in an airy conference room, sipping coconut water with a view of the sun setting over Foster City, California.

The AI coding company moved its headquarters out of San Francisco in April, went through layoffs in May, and has seen its headcount cut in half, to about 65 people.

Yet it has grown its revenue five-fold over the past six months, Masad said, thanks to a breakthrough in artificial-intelligence capabilities that enabled a new product called “Agent,” a tool that can write a working software application with nothing but a natural language prompt.

“It was a huge hit,” Masad said. “We launched it in September, and it’s basically the first at-scale working software agent you can try in the world today. And it’s the only one, I would say.”

Replit, which Masad co-founded in 2016, has embraced AI since the beginning, and in recent years it has launched products that automate various aspects of the coding process.

In essence, Replit’s latest customer base is a new breed of coder: people who don’t know the first thing about code.

“We don’t care about professional coders anymore,” Masad said.

Instead, he says it’s time for non-coders to begin learning how to use AI tools to build software themselves. He is credited with a concept known as “Amjad’s Law” that says the return on learning some code doubles every six months.
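Taken literally, "doubles every six months" describes exponential growth. Writing R_0 for today's return on learning some code and t for elapsed months (our notation, not Masad's), the claim works out as follows:

```latex
R(t) = R_0 \cdot 2^{t/6}, \qquad R(24) = R_0 \cdot 2^{24/6} = 2^{4} R_0 = 16\,R_0
```

So if the law held for two years, the same modest coding knowledge would pay off 16 times as much as it does today.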

Essentially, a very basic understanding of the way software works enables people to take advantage of AI tools that are growing ever more capable.

Masad says it really amounts to a return to the roots of the way computers were supposed to work. Before Windows, people had to type complex, arcane commands into MS-DOS, but only a small percentage of the population was ever going to adopt a technology that required learning an entirely new language.

Goldman Sachs CEO David Solomon says that AI can draft 95% of an S-1 IPO prospectus “in minutes” (a job that used to take a six-person team multiple weeks).

“The last 5% now matters because the rest is now a commodity,” per Solomon.

AI and Work

200,000 Wall Street Jobs May Be Slashed By Artificial Intelligence

It looks like no industry is immune from artificial intelligence, with the financial services sector facing disruption as AI technologies threaten to displace a considerable share of its workforce.

Major Wall Street banks are expected to slash up to 200,000 jobs over the next three to five years due to AI adoption, according to Bloomberg Intelligence. This significant reduction in workforce is primarily attributed to AI's ability to perform tasks traditionally carried out by human workers more efficiently and accurately.

The impending job cuts are expected to primarily affect back-office, middle-office and operational departments, where routine and repetitive tasks are prevalent. Positions involving data analysis, financial trend assessment and risk evaluation are particularly vulnerable, as AI systems can process vast amounts of information and generate insights at speeds far surpassing human capabilities.

Entry-level positions on Wall Street could also face an uncertain future as financial firms consider slashing new hires by as much as two-thirds, as AI assumes responsibilities performed by junior analysts.

The chief information and technology officers polled forecast an average net workforce reduction of 3%, and Bloomberg Intelligence senior analyst Tomasz Noetzel, who authored the report, said AI is more likely to transform these roles than to eliminate them entirely.

However, nearly a quarter of the surveyed banking executives from major institutions like Citigroup, JPMorgan and Goldman Sachs anticipate more sizable job losses, ranging from 5% to 10% of their total staff.

This shift toward AI is expected to increase bank profitability, with projections suggesting a 12% to 17% rise in pre-tax profits by 2027, equating to an additional $180 billion in total profits. Moreover, 80% of respondents believe that generative AI will enhance productivity and revenue by at least 5% in the same timeframe.

How AI is changing the way many people think about work | World Economic Forum

People's view of work is changing from it being only a source of income, to also needing to provide a sense of fulfilment, belonging and purpose.

Aligning work and personal values and fostering a sense of belonging in the workplace is crucial for social cohesion; it's also important to provide people with digital skills training so they can contribute in a rapidly changing world.

Artificial intelligence (AI) has already shown a transformative power to change how we learn, work and live – it will continue to influence how humans and machines collaborate now and in the future.

5 Ways AI Will Allow Entrepreneurship To Become More Accessible

MRM – Summary by AI of Key Benefits of AI for Entrepreneurs:

Developing Strong Business Plans:

Generative AI tools (e.g., ChatGPT) assist in creating organized and strategic business plans.

Helps validate ideas, address market competition, and identify unique value propositions.

Accelerating Learning:

Simplifies learning new concepts, legal language, and strategies.

Facilitates continuous education in evolving industries.

Polished, On-Brand Content:

Helps define and maintain a consistent brand voice.

AI tools review and refine content to align with brand identity.

Time-Saving Templates:

Automates repetitive tasks (e.g., customer communication templates).

Frees up time for strategic growth activities.

Efficient, Effective Teams:

Supports hiring processes by screening candidates (while being cautious of bias).

Enhances team collaboration and communication via tools like Slack AI, Asana, and Notion.

Enables lean teams to operate efficiently, reducing costs and increasing productivity.

Conclusion:

AI empowers startups to compete with larger firms.

Automation and efficiency tools make entrepreneurship more scalable and impactful.

AI in Education

From chalkboards to chatbots: Transforming learning in Nigeria, one prompt at a time

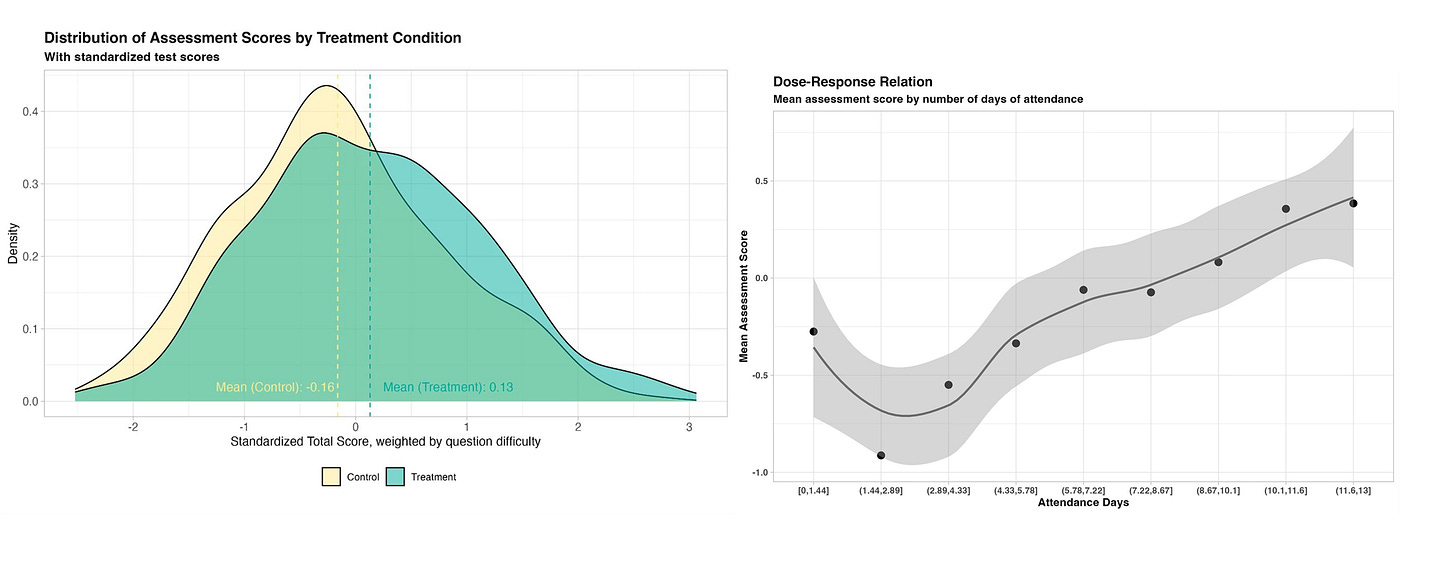

Per Ethan Mollick, “New randomized, controlled trial of students using GPT-4 as a tutor in Nigeria. 6 weeks of after-school AI tutoring = 2 years of typical learning gains, outperforming 80% of other educational interventions. And it helped all students, especially girls who were initially behind”

A supercomputer turning a college town into an AI hub

The University of Florida spent $24 million to upgrade its supercomputer, which is one of the fastest machines in the world and gives Florida researchers access to a tool most academics don’t have.

Far from the high-profile tech hubs on the West Coast, a college town in north Florida’s swamplands is pushing forward to distinguish itself as an AI research destination.

Last month, the University of Florida’s Board of Trustees approved a $24 million investment to upgrade UF’s supercomputer, HiPerGator. When NVIDIA, the AI computing company that built the machine, delivers the fourth-generation model to campus later this year, it will make Gainesville the home of one of the globe’s fastest supercomputers amid an international AI arms race.

“This investment is doubling down on what we think is a successful model the state and nation need in developing the workforce in artificial intelligence,” said Elias Eldayrie, UF’s chief information officer. “As unique as the technology is, it will be complemented by a unique intellectual environment with scholars from across different disciplines who will use it for research and teaching and learning.”

Gen AI Strategies Faculty Can Use Today: A New 4-Part Series

Part 1: Simple AI Tips for Designing Courses: Levy and Pérez Albertos first share their underlying principles and guidelines for AI use, then detail how they prompt gen AI to create lesson plans and suggest relevant class materials.

Part 2: Simple AI Tips for Revising Courses: The authors explore how to more efficiently refresh existing courses for a new term by using AI to get feedback on slides, brainstorm new activities to solidify learning, and quickly gather insights from student evaluations.

Part 3: Simple AI Tips for Enhancing Class Time: Levy and Pérez Albertos provide in-class activities that get your students comfortable using AI and let you use AI in real time to check comprehension.

Part 4: Simple AI Tips for Creating Assessments: The final article offers several points of entry for using AI to help create and implement assessments of students’ learning.

UK Tech Minister Says Children Should Be Allowed To Use ChatGPT For Homework

Britain's Science and Technology Secretary Peter Kyle has said that children should be allowed to use ChatGPT to help with their homework. During an interview on the BBC, Mr Kyle was asked whether it was "okay for kids to use ChatGPT to do their homework?" as the UK attempts to build a framework regarding the future of artificial intelligence (AI).

He responded: "Actually with supervision and used in the right way, then yes, because ChatGPT and the AI technology that is using language is already being used across the economy."

"I am of an age where I remember this conversation about calculators. We need to make sure that kids and young people are learning how to use this technology and integrate it into their learning development," he added when pressed if it was the correct approach.

ChatGPT is an online chatbot, powered by large language models (LLMs), that enables users to have human-like conversations and much more. The rise of AI chatbots in the last couple of years has raised questions about how learning is structured in schools and colleges, where more and more students are simply relying on such programs to complete homework and pass examinations.

However, as per Mr Kyle, the use of ChatGPT could 'turbo-charge' the minds of the children. "There are kids with real outlier talents. Using ChatGPT and other AI assistance could really turbo-charge [them] and give them a challenge they're not getting in other places."

Students come to terms with how to use AI in the classroom

In classrooms across East Carolina University’s campuses, faculty and students alike are grappling with how to use artificial intelligence (AI) tools in the classroom — how to balance new ways of teaching and learning with the imperatives of ethical behavior.

Dr. Will Banks, a professor of English who directs ECU’s University Writing Program, believes that AI will disrupt education, but it’s not all doom and gloom. In the composition classes he teaches, revision (rethinking and reworking the ideas that give life to writing) is so extensive that even if students begin from AI-generated text, the end result will have changed so radically that it no longer resembles the original output.

Besides, Banks said, students have always received help with their schoolwork outside of the classroom.

“Roommates, boyfriends and girlfriends — the frat house news file. There has always been a space for borrowing work,” Banks said. “Nothing students are going to write in a first-year writing course means anything in and of itself. The purpose isn’t to create a beautiful text, it’s to learn about how to ask questions, how to do inquiry.”

Banks stressed that he isn’t going to police anyone: if students want to shortchange themselves, that is their choice. Still, in his recent classes, none of the writing students turned in seems to have been composed by generative AI.

“That may be how I construct the prompts. I require students to use texts from the class. They can take it all out and plug it into AI and do that work, but for a 200-word response, the juice isn’t worth the squeeze,” Banks said.

Dr. Anna Froula, who teaches film in the English department, questioned whether it is reasonable to ask teachers from middle school to university level, who are already overburdened with assessments, their students’ psychological health and never-ending reports, to also teach themselves how to integrate AI into their courses effectively.

“The job that I’m doing now has so little to do with the job I trained for,” Froula said.

Dr. Desiree Dighton teaches English at ECU with a focus on how technology can be used to extend social capital to marginalized groups. When ChatGPT and other AI technologies were emerging into public awareness a few years ago, her initial response was to disallow their use by students. Her position has changed as AI’s usefulness has become better understood.

“This year, my policy is that they can use it with permission, they just need [to] communicate to me what they’re doing and realize that it may involve extra steps on their part in terms of being transparent,” Dighton said. “It also comes with the responsibility — I have to teach them and model what that could mean in the classroom. My graduate students are largely working adults and many of them are already using ChatGPT casually in their workplace.”

Compassion is a big part of the solution, Dighton said, both for students and faculty. She knows the multitude of pressures students are under and, like Banks, sees AI as a tool that might be able to help in the composition process. The faculty leading those students also need support, Dighton said, with discipline-specific professional development opportunities so teachers aren’t forced to teach themselves on the fly.

“Do we care if students use ChatGPT, period, or do we want them to use it assistive to get past writer’s block or to see if the forum post that they originally wrote is going to meet the criteria in our rubric?” Dighton said.

Dr. Michelle Eble, a professor of English who focuses on technical and professional communication, wonders if AI will help educators and students alike realize that, when writing is done properly, no text has a single author. Each paper has a primary writer, of course, but also editors, revision partners and, for professional communications, ghostwriters. Where is the dividing line between plagiarism, a breach of academic integrity, and using available tools to create the best possible end result?

“It’s not as clear cut as I think we like to believe,” Eble said.

AI in Healthcare

UNC Hospitals experiment with AI, work to integrate new technology in health care

As the use of artificial intelligence becomes more widespread, the UNC Health system has increasingly developed and utilized AI technology that researchers, professors and medical professionals alike say could change the future of health care.

According to UNC Health Chief Information Officer Brent Lamm, UNC Health is an early adopter and pilot site for a variety of AI technologies.

Lamm said many of the tools UNC Hospitals uses primarily focus on increasing efficiency by recording and analyzing patient interactions, better scheduling patient visits, drafting end-of-shift documentation for nurses and handling other administrative tasks.

“We're being aggressive in our exploration of these capabilities,” Lamm said. “We're trying things and piloting and we want to do that, but we're doing it in a very safe and thoughtful way.”

Jessica Zègre-Hemsey, a nurse scientist and UNC School of Nursing professor whose current research focuses on emergency cardiac care, has been working with AI to develop strategies that supplement clinical decision-making in that setting.

Zègre-Hemsey said cardiac arrest cases require quick decision-making about diagnoses and interventions. She said AI technologies help medical staff by compiling multiple data points to predict outcomes for patients when they reach the hospital and even after they are discharged.

“In the large landscape of health care, I do think the emergency setting is one of the specific areas that AI machine learning could most benefit, because it is a unique space where we're making really fast decisions with not always a lot of information or time,” Zègre-Hemsey said.

Is ChatGPT a Reliable Tool for Explaining Medical Terms?

Background

The increasing reliance on the internet for health-related information has driven interest in artificial intelligence (AI) applications in healthcare. ChatGPT has demonstrated strong performance in medical exams, raising questions about its potential use in patient education. However, no prior study has evaluated the reliability of ChatGPT in explaining medical terms. This study investigates whether ChatGPT-4 is a reliable tool for translating frequently used medical terms into language that patients can understand.

Methodology

A total of 105 frequently used medical terms were selected from the University of San Diego's medical terminology list. Four groups (general practitioners, resident physicians, specialist physicians, and ChatGPT-4) were tasked with defining these terms. Responses were classified as correct or incorrect. Statistical analyses, including chi-square and post-hoc tests, were conducted to compare accuracy rates across groups.

Results

ChatGPT-4 achieved a 100% accuracy rate, outperforming specialist physicians (98.1%), resident physicians (93.3%), and general practitioners (84.8%). The differences in accuracy rates between groups were statistically significant (χ²=25.99, p<0.00001). Post-hoc analyses confirmed significant pairwise differences, such as ChatGPT-4 vs. specialist physicians (p<0.001) and specialist physicians vs. resident physicians (p=0.02).
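The overall chi-square figure can be checked from the reported percentages. The sketch below assumes each group defined all 105 terms and reconstructs per-group correct counts by rounding the stated accuracy rates (105, 103, 98 and 89 correct); the study's raw counts are not given here, and the pairwise post-hoc tests are not reproduced. It computes a Pearson chi-square statistic on the 4 x 2 correct/incorrect table and the upper-tail p-value for 3 degrees of freedom using only the standard library.

```python
import math

# Assumption: counts reconstructed by rounding the reported accuracy
# rates (100%, 98.1%, 93.3%, 84.8%) of 105 terms to whole terms.
N_TERMS = 105
correct = {
    "ChatGPT-4": 105,
    "Specialist physicians": 103,
    "Resident physicians": 98,
    "General practitioners": 89,
}


def chi_square_kx2(correct_counts, n_per_group):
    """Pearson chi-square statistic for a k x 2 (correct/incorrect) table."""
    k = len(correct_counts)
    total = k * n_per_group
    total_correct = sum(correct_counts)
    total_incorrect = total - total_correct
    # With equal group sizes, each cell's expected count is its column total / k.
    exp_correct = total_correct / k
    exp_incorrect = total_incorrect / k
    stat = 0.0
    for c in correct_counts:
        incorrect = n_per_group - c
        stat += (c - exp_correct) ** 2 / exp_correct
        stat += (incorrect - exp_incorrect) ** 2 / exp_incorrect
    dof = (k - 1) * (2 - 1)
    return stat, dof


def chi2_sf_df3(x):
    """Upper-tail probability of a chi-square distribution with 3 degrees of freedom."""
    return math.erfc(math.sqrt(x / 2)) + math.sqrt(2 * x / math.pi) * math.exp(-x / 2)


stat, dof = chi_square_kx2(list(correct.values()), N_TERMS)
p = chi2_sf_df3(stat)
print(f"chi2 = {stat:.2f}, df = {dof}, p = {p:.1e}")
```

Under these assumptions the statistic comes out at about 25.99 with 3 degrees of freedom, matching the reported χ², and the p-value falls below 0.00001.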

Conclusions

ChatGPT-4 demonstrated superior reliability in translating medical terms into understandable language, surpassing even highly experienced physicians. These findings suggest that ChatGPT could be a valuable auxiliary tool for improving patient comprehension of medical terminology. Nonetheless, consulting healthcare professionals for clinical decision-making remains crucial.

GPs turn to AI to help with patient workload

The difficulty of getting an appointment with a GP is a familiar gripe in the UK.

Even when an appointment is secured, the rising workload faced by doctors means those meetings can be shorter than either the doctor or patient would like.

But Dr Deepali Misra-Sharp, a GP partner in Birmingham, has found that AI has alleviated a chunk of the administration from her job, meaning she can focus more on patients.

Dr Misra-Sharp started using Heidi Health, a free AI-assisted medical transcription tool that listens to and transcribes patient appointments, about four months ago and says it has made a big difference.

“Usually when I’m with a patient, I am writing things down and it takes away from the consultation,” she says. “This now means I can spend my entire time locking eyes with the patient and actively listening. It makes for a more quality consultation.”

She says the tech reduces her workload, saving her “two to three minutes per consultation, if not more”. She also cites other benefits: “It reduces the risk of errors and omissions in my medical note taking.”

With a workforce in decline while the number of patients continues to grow, GPs face immense pressure.

A single full-time GP is now responsible for 2,273 patients, up 17% since September 2015, according to the British Medical Association (BMA).

Could AI be the solution to help GPs cut back on administrative tasks and alleviate burnout?

Some research suggests it could. A 2019 report prepared by Health Education England estimated a minimal saving of one minute per patient from new technologies such as AI, equating to 5.7 million hours of GP time.
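As a rough sanity check on that estimate, the arithmetic below works backwards from the two figures quoted. It assumes the 5.7 million hours is simply the one-minute saving multiplied by the number of patient contacts; the report's actual time period and methodology are not stated here, so the result is only the volume implied by the quoted numbers.

```python
# Assumption: total hours saved = (minutes saved per patient contact x contacts) / 60.
HOURS_SAVED = 5.7e6             # figure quoted from the Health Education England report
MINUTES_SAVED_PER_PATIENT = 1   # "minimal saving of one minute per patient"

# Convert hours to minutes, then divide by the per-patient saving.
implied_contacts = HOURS_SAVED * 60 / MINUTES_SAVED_PER_PATIENT
print(f"{implied_contacts / 1e6:.0f} million patient contacts")
```

On that reading, the estimate corresponds to roughly 342 million patient contacts.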

Meanwhile, research by Oxford University in 2020 found that 44% of all administrative work in General Practice can now be either mostly or completely automated, freeing up time to spend with patients.

How AI uncovers new ways to tackle difficult diseases

Over a video call, Alex Zhavoronkov holds up a small, green, diamond-shaped pill. It has been developed by his company to treat a rare progressive lung disease for which there is no known cause or cure.

The new drug has yet to be approved, but in small clinical trials has shown impressive efficacy in treating idiopathic pulmonary fibrosis (IPF).

It's one of a new wave of drugs whose discovery has relied heavily on artificial intelligence (AI).

"We can't say we have the first AI discovered and designed molecule approved," says Dr Zhavoronkov, the co-founder and CEO of US-based start-up Insilico Medicine. "But we may be the furthest along the path."

Welcome to the great AI drug race, where a host of companies are employing the power of AI to do what has traditionally been the job of medicinal chemists.

That includes both smaller, specialist AI-driven biotech companies, which have sprung up over the past decade, and larger pharmaceutical firms that are either doing the research themselves or working in partnership with smaller firms.

Among the newer players is Alphabet, the parent company of Google, which launched UK-based AI drug discovery company Isomorphic Labs in late 2021.

Its CEO, Demis Hassabis, shared the 2024 Nobel Prize in Chemistry for AlphaFold, an AI model that predicts protein structures and is expected to be useful for drug design.

Using AI to do drug discovery could make an "enormous difference" for patients, says Chris Meier, of the Boston Consulting Group (BCG).

Bringing a new drug to market takes on average 10 to 15 years, and costs more than $2bn (£1.6bn).

It's also risky: about 90% of drugs that go into clinical trials fail. The hope is that using AI for the drug discovery part of that process could cut the time and cost, and raise success rates.

A new era, in which AI is at the centre of the drug discovery process, is emerging, says Charlotte Deane, a professor of structural bioinformatics at Oxford University, who develops freely available AI tools to help pharmaceutical companies and others improve their drug discovery.

Health AI: New tool will help fight health insurance denials

Waystar announced a new generative AI feature that aims to help hospitals quickly fight insurance denials.

The company’s new solution, called AltitudeCreate, uses generative AI to automatically draft appeal letters, which can be costly and time-consuming to put together manually.

Claim denials have been the subject of scrutiny in the U.S. following the deadly shooting of UnitedHealthcare CEO Brian Thompson.

Individuals Using AI

ChatGPT tips and tricks - 6 things you must know to become a ‘Pro User’ | Mint

1. Use ChatGPT Live to prepare for interviews, tests, and more

Got an interview coming up, and you're unsure how well you'll do? Simply ask ChatGPT Live to act as the interviewer and ask you a set of questions related to the position you're applying for. Not only will this build confidence, but it will also make you more familiar with the kinds of questions that may come up.

2. Use ChatGPT in Temporary Chat mode

If you're concerned about your data being stored or used to train AI models, ChatGPT now has a Temporary Chat feature that allows you to chat without your history being saved. However, OpenAI retains a copy of the chats for up to 30 days for safety reasons. You can easily toggle this feature on and off as needed.

3. Use attachments to get insights

Free ChatGPT users (on GPT-4o mini) can now submit documents and photos for analysis, subject to usage limits. This allows you to ask questions based on the information contained in the files you submit. For instance, you could upload a photo of yourself and ask for fashion recommendations, or you could submit a document and ask ChatGPT to find specific details. This can be a huge time saver.

4. Customise ChatGPT for more personalised replies

If you want ChatGPT to provide more personalised responses, you can customise it by providing information about yourself. You can specify what you'd like ChatGPT to call you, your profession, and even the tone of the responses. Whether you prefer witty, opinionated, sceptical, traditional, or forward-thinking responses, you can tailor ChatGPT to suit your style. You can also include any relevant personal details, such as your values, inclinations, or specific interests. Additionally, there are advanced options that allow you to prevent ChatGPT from searching the web for answers.

5. Select your preferred voice

To make ChatGPT even more personalised, you can choose from a range of voices for ChatGPT Live, each with its own style. You can also choose the main language, which can be especially handy.

6. Enable multi-factor authentication for added security

For extra security, consider enabling multi-factor authentication in your ChatGPT settings. This adds an extra layer of protection to your account, providing peace of mind.

7 ChatGPT hacks to maximize your productivity — here's how to try them

(MRM – Summarized by AI)

Temporary chats for privacy: Use temporary chat mode to keep conversations private, ensuring sensitive queries are not stored in chat history.

Retrieve past conversations: Quickly locate previous interactions using the search bar or keyboard shortcuts with keywords or phrases.

Keyboard shortcuts: Boost productivity with shortcuts like copying responses, opening new chats, and toggling settings.

Enhanced search features: Access multiple images, clickable sources, and follow-up discussions for richer insights.

Manage memory: Enable or clear ChatGPT’s memory for personalized interactions and better control over what the AI recalls.

Custom instructions: Tailor ChatGPT’s responses with custom guidelines for behavior and data use preferences.

Archive chats: Organize chat history by archiving conversations, keeping important information accessible while decluttering the interface.

Finding Your Zen Via The Peaceful Mindful Advice Of Generative AI ChatGPT

Consider the possibility of using modern-day generative AI as your Zen advisor. All you need to do is tell generative AI that you desire to find your Zen. Voila, you can then readily engage in a dialogue or series of conversations that will provide you with insights along the way. By and large, the AI is going to be highly supportive.

An added plus is that most of the major generative AI apps are free to use or available at a low cost. You can use them anywhere and at any time, 24x7. No need to schedule time to meet with the AI. Just log in and carry on with a conversation.

I am betting that you would like to see an example of how generative AI enters this realm. I went ahead and logged into ChatGPT by OpenAI to come up with an example for you. You might find it of keen interest that ChatGPT garners a whopping 300 million weekly active users. That is a staggering amount of usage.

I briefly conducted an additional cursory analysis via other major generative AI apps, such as Anthropic Claude, Google Gemini, Microsoft Copilot, and Meta Llama, and found their answers to be about the same as those of ChatGPT. I’ll focus on ChatGPT but note that the other AI apps generated roughly similar responses.

Let’s get underway.

My entered prompt: “I’ve heard that people can become calm and serene by finding their Zen. Can you help me do so?”

Generative AI response: “Of course. Let’s start by centering yourself. Are you in a quiet place where you can sit comfortably for a few minutes?”

My entered prompt: “Yes, I’m sitting in my living room.”

Generative AI response: “Great. Let’s try a simple breathing exercise. Close your eyes and place your hands gently on your knees. Inhale deeply through your nose for a count of four, imagining cool air filling your lungs. Hold your breath for a count of four. Exhale slowly through your mouth for a count of six, releasing tension with the breath. Do this and let me know how it goes.”

ChatGPT vs financial advisors: Which is best for financial advice? | Fortune

One in five Americans is making 2025 the year they save more money, and there is a growing trend of asking generative AI technology for advice on how to achieve personal finance goals. But is using ChatGPT or Gemini to improve your personal balance sheet the right move? Experts say it’s complicated.

When used as glorified search engines, large language models excel at helping you find information fast, and they can also be a good source of general advice on how to set up a budget or improve your credit score. However, getting accurate answers on specific, sensitive financial questions is where the concerns start, says Andrew Lo, director of the laboratory for financial engineering at the MIT Sloan School of Management.

“It is quite dangerous to seek advice [from AI] of pretty much any sort, whether it’s legal, financial, or medical,” he says. “All three of those areas have very large dangers if they’re not done well.”

Many AI platforms lack domain-specific expertise, trustworthiness, and regulatory knowledge, especially when it comes to providing sensitive financial advice. They might even lead individuals to make unwise investments or financial decisions, Lo warns.

Despite these worries, many Americans are already turning to AI chatbots for help managing their finances: among the 47% who reported using or considering the practice, 96% reported a positive experience, according to an Experian study from October 2024.

Platforms like Perplexity and ChatGPT can help people who are looking for advice on saving and budgeting, investment planning, and credit score improvement. According to Christina Roman, consumer education and advocacy manager at Experian, the technology is a great starting point for consumers who otherwise might not be able to afford professional financial advice.

“I don’t think that this is going to make people become reliant on AI for these types of services, but I think it’s a great tool that can help them to begin to navigate their financial lives and begin to really understand complex topics like investing and whatnot,” Roman says.

ChatGPT and The Very Hungry Algorithm: Bedtime Stories With a Chatbot

Stop me if you’ve heard this one before: Toddlers love bedtime stories. Some kids like to reread the same story every night, and others, like my 3-year-old, love novelty. For him, there’s nothing like the surprise from a brand-new book—thank goodness for libraries!—and if that weren’t enough, he also asks for one bespoke story every night, tailored especially to him. Six months ago, completely out of the blue, he asked for a “chat story.”

“You want a chat … story?” This was new.

“Chat story. From your phone,” said the little dictator.

“You want a story from my phone?” Parenting a toddler is kind of like living in a phone tree with no operator.

“Chat. Story. In the phone. Type!”

Puzzled, I forged ahead with a made-up story about the virtues of eating one’s entire dinner and called it a night. I made a note to ask his teacher the following week, and imagine my surprise when I learned his teacher had been experimenting with ChatGPT to generate custom stories for the class. Like 14 percent of American adults, I’d played around with ChatGPT by then, but this was an excitingly practical application. Bedtime turned into a wild version of Mad Libs: Prompt a few nouns or concepts, pop it into the easy chat interface, pick a style (“in the style of Berenstain Bears” worked well), and lo and behold—a perfectly serviceable bedtime story. What could go wrong?

In case you want to give it a shot, here is my child’s favorite prompt so far: “Please write a bedtime story appropriate for a three year old about the 2021 Suez Canal crisis, in the style of The Little Engine That Could.” Yes, we have an odd sense of humor in this household.

5 Unexpected Things You Should Be Using ChatGPT For, According to AI Expert

1. Interpreting Long Ingredient Lists: Zinn suggests using ChatGPT to break down complex ingredient lists found on food packaging. When users upload an image of the ingredients, ChatGPT can explain each component in detail, helping them understand what they're consuming.

2. Generating Cooking Ideas: Not sure what to cook? Zinn recommends taking a picture of the contents of your fridge and asking ChatGPT for easy recipe suggestions. This feature can inspire new meal ideas and help reduce food waste by utilizing the ingredients you already have.

3. Typing and Formatting Notes: For students and professionals alike, Zinn highlights the usefulness of ChatGPT in taking rough notes and organizing them into well-structured documents. Simply input your handwritten or typed notes, and ChatGPT can format them neatly and coherently.

4. Translating Signs and Menus: Traveling to a foreign country can be daunting, especially when faced with language barriers. Zinn advises using ChatGPT to translate signs and menus by uploading images, making it easier to navigate and enjoy your vacation.

5. Drafting Email Responses Quickly: Zinn points out that ChatGPT can be a powerful tool for drafting quick email responses. Whether it is professional communication or personal correspondence, ChatGPT can help craft articulate and concise emails in a fraction of the time.

Societal Impacts of AI

How Does ChatGPT Use Water? | HuffPost Life

As wildfires continue to blaze in the greater Los Angeles area, residents and visitors alike may wonder whether there are any steps they can take, however minor, to help prevent further destruction. Along with volunteering and donating money or goods, social media has presented Angelenos with a very simple action that they can take to conserve water: stop using ChatGPT.

But what exactly do ChatGPT and other artificial intelligence tools have to do with the water supply? Let us explain.

AI platforms can’t generate content without the help of massive data center servers. These centers “contain thousands of high-performance computer chips that process user queries,” explained Daniel Kearney, the chief technology officer of Firmus Technologies, which focuses on creating sustainable operating solutions for AI companies.

“Because the computers and chipsets that power servers are so densely packed, they generate an incredible amount of heat. Running complex AI applications like ChatGPT requires immense amounts of computing power, which generate lots of heat 24 hours a day,” explained HP Newquist, an artificial intelligence historian and the author of “The Brain Makers: Genius, Ego, and Greed in the Quest for Machines That Think.”

To prevent servers from crashing, cooling systems are put in place to help regulate data center temperatures. And, in many cases, “water is used to physically cool AI servers,” explained Mia Montoya Hammersley, an assistant professor specializing in environmental law and the director of the Environmental Justice Clinic at the Vermont Law and Graduate School.

So how much water are we talking about here? “Many of these systems rely on water to absorb and dissipate the heat through cooling towers or evaporative cooling methods,” Kearney told us. “For some large facilities, this can mean using millions of gallons of water per year.”

As long as data centers rely on water to regulate their temperature, AI will be a drain on water supplies, including in places with warmer and drier climates. Newquist told us that “areas where water is scarce or droughts are occurring” are especially prone to water depletion due to AI data centers, so “many companies are building server centers near polar regions or in northern countries with low ambient temperatures.”

In addition to relocating to colder areas, some AI companies are exploring cooling options that aren’t water-dependent. Kearney pointed to “immersion cooling,” which sees companies “directly submerge the hardware in a special cooling liquid,” as an approach that could lessen water consumption.

AI and Law

‘The New York Times’ takes OpenAI to court. ChatGPT's future could be on the line : NPR

A group of news organizations, led by The New York Times, is taking ChatGPT maker OpenAI to federal court on Tuesday in a hearing that could determine whether the tech company has to face the publishers in a high-profile copyright infringement trial.

Three publishers' lawsuits against OpenAI and its financial backer Microsoft have been merged into one case. Leading each of the three combined cases are the Times, The New York Daily News and the Center for Investigative Reporting.

The hearing on Tuesday is centered on OpenAI's motion to dismiss, a critical stage in the case in which a judge will either clear the litigation to proceed to trial or toss it.

The publishers' core argument is that the data that powers ChatGPT has included millions of copyrighted works from the news organizations, articles that the publications argue were used without consent or payment — something the publishers say amounts to copyright infringement on a massive scale.

AI and Politics

OpenAI Courts Trump With Vision for ‘A.I. in America’ - The New York Times

In December, Sam Altman, OpenAI’s chief executive, donated $1 million to President-elect Donald J. Trump’s inaugural fund, joining a number of other tech executives who are working to improve their relationships with Mr. Trump.

Now, he and his company are laying out their vision for the development of artificial intelligence in the United States, hoping to shape how the next presidential administration handles this increasingly important technology.

On Monday, OpenAI released what it calls its economic blueprint for “A.I. in America,” suggesting ways that policymakers can spur development of A.I. in the United States, minimize the risks posed by the technology and maintain a lead over China.

“We believe America needs to act now to maximize A.I.’s possibilities while minimizing its harms,” Chris Lehane, OpenAI’s head of global policy, wrote in the 15-page document. “We want to work with policymakers to ensure that A.I.’s benefits are shared responsibly and equitably.”

OpenAI and its rivals are racing to expand the pool of giant computer data centers needed to build and operate their A.I. systems, which will require hundreds of billions of dollars in new investment. With its new economic blueprint, OpenAI hopes to encourage government policies that can facilitate that additional infrastructure.

Most notably, the company has called on policymakers to allow significant investment in American A.I. projects by investors in the Middle East, though the Biden administration has been wary of such investment. OpenAI argues that if countries like the United Arab Emirates and Saudi Arabia do not invest in U.S. infrastructure, their money will flow to China instead.

Trump, Musk Discuss AI, Cybersecurity With Microsoft CEO

Microsoft Corp.’s Satya Nadella discussed AI and cybersecurity during a meeting with US President-elect Donald Trump and Elon Musk, becoming the latest tech industry chieftain to make overtures to the new administration.

The conversation touched on a range of topics including Microsoft’s pledge to invest $80 billion in AI data centers worldwide, the US firm said in a statement. More than $50 billion of that will be spent in the US, creating American jobs, according to the statement. Microsoft President Brad Smith joined the meeting along with Trump’s running mate, JD Vance.

Silicon Valley has sought to warm relations with Trump following his victory last November, despite frequent clashes during his first term. Many have traveled to Trump’s Mar-a-Lago estate in Florida, where the president-elect and Musk have held a series of private meetings and dinners to discuss plans for the coming term. Semafor first reported Nadella’s dinner with Trump.

Smith has cautioned the incoming Trump administration against “heavy-handed regulations” related to AI. “The most important US public-policy priority should be to ensure that the US private sector can continue to advance with the wind at its back,” Smith wrote this month.

The country needs “a pragmatic export control policy that balances strong security protection for AI components in trusted data centers with an ability for US companies to expand rapidly and provide a reliable source of supply to the many countries that are American allies and friends,” Smith wrote.

Elon Musk and Giorgia Meloni’s power friendship stirs controversy in Italy

He shares her love of “Lord of the Rings” and called her “even more beautiful on the inside” than she is on the outside. She calls him a “genius” falsely portrayed as “a monster.” Now tech billionaire Elon Musk and Italian Prime Minister Giorgia Meloni are demonstrating the extent to which a power friendship can roil a nation.

Questions about Musk’s influence have dominated the political conversation in Italy for days. One editorial cartoon depicted him as a gladiator demanding a lease on the Colosseum.

A particular point of contention has involved news that the Italian government is in advanced negotiations with Musk’s SpaceX about servicing the “encrypted data communication needs” of the military, embassies and other security services. Meloni denied that the talks have anything to do with her relationship with Musk — or her visit to Mar-a-Lago to see President-elect Donald Trump last weekend. But that didn’t quiet the critics.

“If 1.5 billion of Italian money to bring the American billionaire’s satellites to our country is the price we have to pay for his friendship, it is too much,” said Elly Schlein, leader of the opposition Democratic Party. “Italy does not sell out.”

Prime Minister sets out blueprint to turbocharge AI

AI to drive the Plan for Change, helping turbocharge growth and boost living standards

public sector to spend less time doing admin and more time delivering the services working people rely on

dedicated AI Growth Zones to speed up planning for AI infrastructure

£14 billion and 13,250 jobs committed by leading private tech firms following AI Action Plan

AI and Warfare

Biden administration unveils global AI export controls aimed at China

The Commerce Department on Monday issued AI export controls aimed at ensuring China does not gain access to U.S. tech through third countries.

Why it matters: The Export Control Framework for AI Diffusion is the Biden administration's final swing at China's AI industry.

The new regulation is meant to keep AI from fueling Beijing's military development, administration officials say.

What's inside: The rule will create a new global licensing system for the most advanced AI technology exports.

Companies can continue to export to 20 allied nations freely. But they'll face some restrictions for non-allied countries and will be prohibited entirely from exporting to U.S. adversaries.

Supply chain activities are excluded so chips can move where they need to be packaged or tested.

Chip orders totaling up to roughly 1,700 advanced GPUs do not require a license and do not count against national chip caps. That exempts the vast majority of orders being placed, including those by universities, medical institutions, and research organizations.

To build data centers around the world, companies from the U.S. and certain partner countries will need to obtain only a single authorization from Commerce's Bureau of Industry and Security.

Winners: China hawks on the Hill pushed the Biden administration to impose clear redlines in the name of national security and winning the tech race.

Losers: NVIDIA, Oracle and industry groups say the rules will undermine American competitiveness and hinder innovation.

Biden signs executive order to ensure power for AI data centers | Reuters

Biden order vows to address dramatic needs for power for AI data centers

Energy, Defense departments will lease sites to host gigawatt-scale AI data centers

US forecasts AI centers will need as much as five gigawatts of capacity by 2028

Biden said the order will "accelerate the speed at which we build the next generation of AI infrastructure here in America, in a way that enhances economic competitiveness, national security, AI safety, and clean energy."

The order also requires companies tapping federal land for AI data centers to purchase an "appropriate share" of American-made semiconductors. The required share would be worked out case by case for each project, and the requirement comes as the Biden administration is spending more than $30 billion to subsidize U.S. chip production.

OpenAI blueprint urges new policies to edge out China in AI race

OpenAI Monday released a list of recommendations around AI competition and regulation — an "economic blueprint" the company is using to kick off a new era of tech policy.

Why it matters: The ChatGPT maker is looking to solidify its position in Washington as a new administration takes power — and Elon Musk, who has feuded with and sued the company, heads to D.C. at Trump's side.

OpenAI's chief goals in the blueprint include:

Promoting U.S.-made AI to ensure the new tech isn't "shaped by autocrats" and authoritarian governments, chiefly China.

Ensuring equitable access to AI and its benefits "from the start."

"Maximize the economic opportunity of AI for communities across the country" rather than just in coastal tech enclaves.

What they're saying: AI "is an infrastructure technology, it's like electricity," and right now, "there's a window to get all this right," OpenAI VP for global affairs Chris Lehane told Axios.

Key recommendations in OpenAI's blueprint:

Nationwide "rules of the road" for AI should "preempt a state-by-state tangle."

Free up the export of advanced "frontier AI" models to "allies and partners" so they can "stand up their own AI ecosystems" based on U.S. rather than Chinese technology.

AI builders could "form a consortium that identifies best practices for working with the national security community."

Use the states as "laboratories of democracy" to build AI hubs focusing on their unique data — for instance, Kansas could focus on use of AI in agriculture — so AI jobs and expertise benefit every region.

Ensure that AI "has the ability to learn from universal, publicly available information, just like humans do, while also protecting creators from unauthorized digital replicas."

Require AI companies to provide "meaningful amounts of compute" to public universities.

Streamline and expand support for building new data centers across the U.S. — and "dramatically increase" federal investment in existing and new energy sources and the power grid needed to support them.

Between the lines: Microsoft, Google and other tech giants have many big irons in the Washington fire and face regulatory scrutiny and antitrust lawsuits on multiple fronts.