In this issue…the $500B Stargate AI Project, Elon Making Army of 1M Robots, She's in love with ChatGPT, AI can predict your success, AI can mimic great storytellers, AI exceeds humans on every creativity test, AI proliferation, and more.

Lots going on in AI this week, so…

AI Tips & Tricks

ChatGPT's new customization options are exactly what I've been waiting for to make my chats more personal | TechRadar

(MRM – I did this…took about 2 minutes)

OpenAI has confirmed via X.com that it has introduced new customization features to ChatGPT. The new features that allow you to customize how the AI chatbot responds to you are rolling out now in the browser-based version of ChatGPT and on desktop on Windows.

The release currently doesn’t cover users in the EU, Norway, Iceland, Liechtenstein, and Switzerland, but will be “available soon” according to OpenAI. It’s also not available yet in the Mac app. OpenAI says the new features will be coming to desktop on macOS “in the next few weeks.”

The update includes two new options in the Customize ChatGPT dialog box (available by clicking on your icon, and then choosing Customize ChatGPT from the menu that appears). The first is ‘What should ChatGPT call you?’ and the second is ‘What do you do?’ which is asking for your occupation.

In the ‘What traits should ChatGPT have?’ box you’ll find new options have been added including ‘Chatty’, ‘Witty’, and ‘Opinionated’.

Below this box is a new ‘What else would you like ChatGPT to know about you?’ box, where you can type in your interests and values.

Once you’ve entered some information in these boxes you’ll find your interactions with ChatGPT are taken to a much more personal level, which can help save you time, since you don’t have to keep asking it to respond in a certain way. It will also tailor information to your profession.

7 prompts for creating custom GPTs in ChatGPT – here's how to try them | Tom's Guide

(MRM – this article gives you prompts to create these CustomGPTs)

Industry-Specific Expert

A tailored AI model acts as a knowledgeable colleague in a specific field, providing technical advice, detailed explanations, and actionable insights. Responses use industry-standard terminology and include references to standards, frameworks, or research.

Language Tutor

A custom GPT adapts to the user’s language proficiency, offering lessons, vocabulary drills, grammar tips, and simulated conversations. It adjusts difficulty levels, provides clear feedback on errors, and creates engaging interactions to motivate learning.

Lifestyle Guide

This GPT supports wellness and self-improvement goals by suggesting meal plans, fitness routines, mindfulness exercises, and stress management strategies. It offers practical, step-by-step advice tailored to the user’s preferences, with a focus on encouragement and motivation.

Educational Tool for Kids

Designed for young learners, this GPT explains concepts using storytelling, games, and interactive questions. It maintains a friendly tone, provides simplified explanations, and fosters active engagement with quizzes and prompts.

Event Planning Assistant

A GPT for planning events offers creative ideas, logistical advice, and tailored recommendations for managing budgets, timelines, and themes. It provides step-by-step guidance for organizing various types of events, ensuring a smooth and memorable experience.

Motivating Sidekick

This GPT serves as a supportive companion, offering encouragement, celebrating achievements, and breaking down challenges into manageable steps. It uses motivational language to keep the user inspired and focused on their personal and professional goals.

Creative Writing Companion

An AI partner for writers that assists with brainstorming ideas, developing characters, and improving narrative structure. It provides constructive feedback on drafts, inspires creativity, and suggests improvements while maintaining a judgment-free approach.

5 ChatGPT Prompts To Define Business Success And Succeed Every Time

Here are the five topics and their associated prompts from the article (via AI Summary)

1. Define Your Success Metrics

Prompt:

"Help me define what winning means in my business. Ask me questions about my goals, values, and vision for success. After each response, dig deeper to understand my true motivations. Once we have explored several areas, create 3 specific metrics I can track daily to measure progress toward my version of success. Include both quantitative and qualitative measures that align with my personal definition of winning."

2. Create Your Success System

Prompt:

"Guide me in developing my personal success system. First, ask me about my biggest professional wins and what contributed to them. For each success, help me identify the specific actions and decisions that led to the positive outcome. After analyzing several examples, create a step-by-step framework I can follow to replicate these successes, drawing on the common themes."

3. Build Your Dream Team

Prompt:

"Help me identify the key roles I need to fill for business success. Continuing with the success system conversation, first ask about my strengths and weaknesses in my business, then suggest the essential team members who could help me win. For each role, outline the specific skills and qualities needed. Then create a strategy for attracting and retaining top talent in each position."

4. Master Your Mindset

Prompt:

"Acting as a mindset coach, help me develop the thinking patterns of successful entrepreneurs. Ask me about my current beliefs about success and business. For each belief I share, suggest a more empowering alternative. After exploring several areas, create daily mindset exercises I can practice to maintain a winning mentality."

5. Create Your Game Plan

Prompt:

"Help me develop a detailed game plan for achieving my business goals. First, ask me about my main objectives for [specify time frame]. Then break these down into monthly milestones, weekly targets, and daily actions, aligned with my success system that we already defined. Create a structured plan that includes specific tasks, deadlines, and accountability measures. Include methods for tracking progress and adjusting the plan based on results."

These prompts provide a structured approach to achieve repeatable business success.
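Prompts 3 and 5 refer back to earlier answers, so the set appears meant to run in order inside one conversation. Below is a minimal sketch of that idea, assuming a chat history kept as a list of role/content messages. The `placeholder_model` function is hypothetical and stands in for whichever chatbot you use, and the step titles stand in for the full prompt text.

```python
# Step titles from the list above; in practice each entry would be the full prompt.
STEPS = [
    "Define Your Success Metrics",
    "Create Your Success System",
    "Build Your Dream Team",
    "Master Your Mindset",
    "Create Your Game Plan",
]


def placeholder_model(messages):
    # Hypothetical stand-in for a chatbot call; it only reports how much context it saw.
    return f"(reply with {len(messages)} messages of context)"


def run_in_one_conversation(prompts, model):
    # Keep every prompt and reply in one history so later prompts can build on earlier ones.
    messages = []
    for prompt in prompts:
        messages.append({"role": "user", "content": prompt})
        reply = model(messages)
        messages.append({"role": "assistant", "content": reply})
    return messages


conversation = run_in_one_conversation(STEPS, placeholder_model)
print(len(conversation))
print(conversation[-1]["content"])
```

Starting a fresh chat for each prompt would drop that shared history, and the later prompts would have nothing to refer back to.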

AI Firm News

SoftBank, OpenAI and Oracle to invest $500B in AI, Trump says | AP News

President Donald Trump on Tuesday talked up a joint venture investing up to $500 billion for infrastructure tied to artificial intelligence by a new partnership formed by OpenAI, Oracle and SoftBank.

The new entity, Stargate, will start building out data centers and the electricity generation needed for the further development of the fast-evolving AI in Texas, according to the White House. The initial investment is expected to be $100 billion and could reach five times that sum.

“It’s big money and high quality people,” said Trump, adding that it’s “a resounding declaration of confidence in America’s potential” under his new administration.

Joining Trump fresh off his inauguration at the White House were Masayoshi Son of SoftBank, Sam Altman of OpenAI and Larry Ellison of Oracle. All three credited Trump for helping to make the project possible, even though building has already started and the project goes back to 2024.

“This will be the most important project of this era,” said Altman, CEO of OpenAI.

Ellison noted that the data centers are already under construction with 10 being built so far. The chairman of Oracle suggested that the project was also tied to digital health records and would make it easier to treat diseases such as cancer by possibly developing a customized vaccine.

Elon Musk Casts Doubt on Trump’s $100 Billion Stargate A.I. Announcement

Elon Musk is clashing with OpenAI CEO Sam Altman over the Stargate artificial intelligence infrastructure project touted by President Donald Trump, the latest in a feud between the two tech billionaires that started on OpenAI’s board and is now testing Musk’s influence with the new president.

Trump on Tuesday had talked up a joint venture investing up to $500 billion through a new partnership formed by OpenAI, the maker of ChatGPT, alongside Oracle and SoftBank.

The new entity, Stargate, is already starting to build out data centers and the electricity generation needed for the further development of fast-evolving AI technology.

Trump declared it “a resounding declaration of confidence in America’s potential” under his new administration, with an initial private investment of $100 billion that could reach five times that sum.

But Musk, a close Trump adviser who helped bankroll his campaign and now leads a government cost-cutting initiative, questioned the value of the investment hours later. “They don’t actually have the money,” Musk wrote on his social platform X. “SoftBank has well under $10B secured. I have that on good authority.”

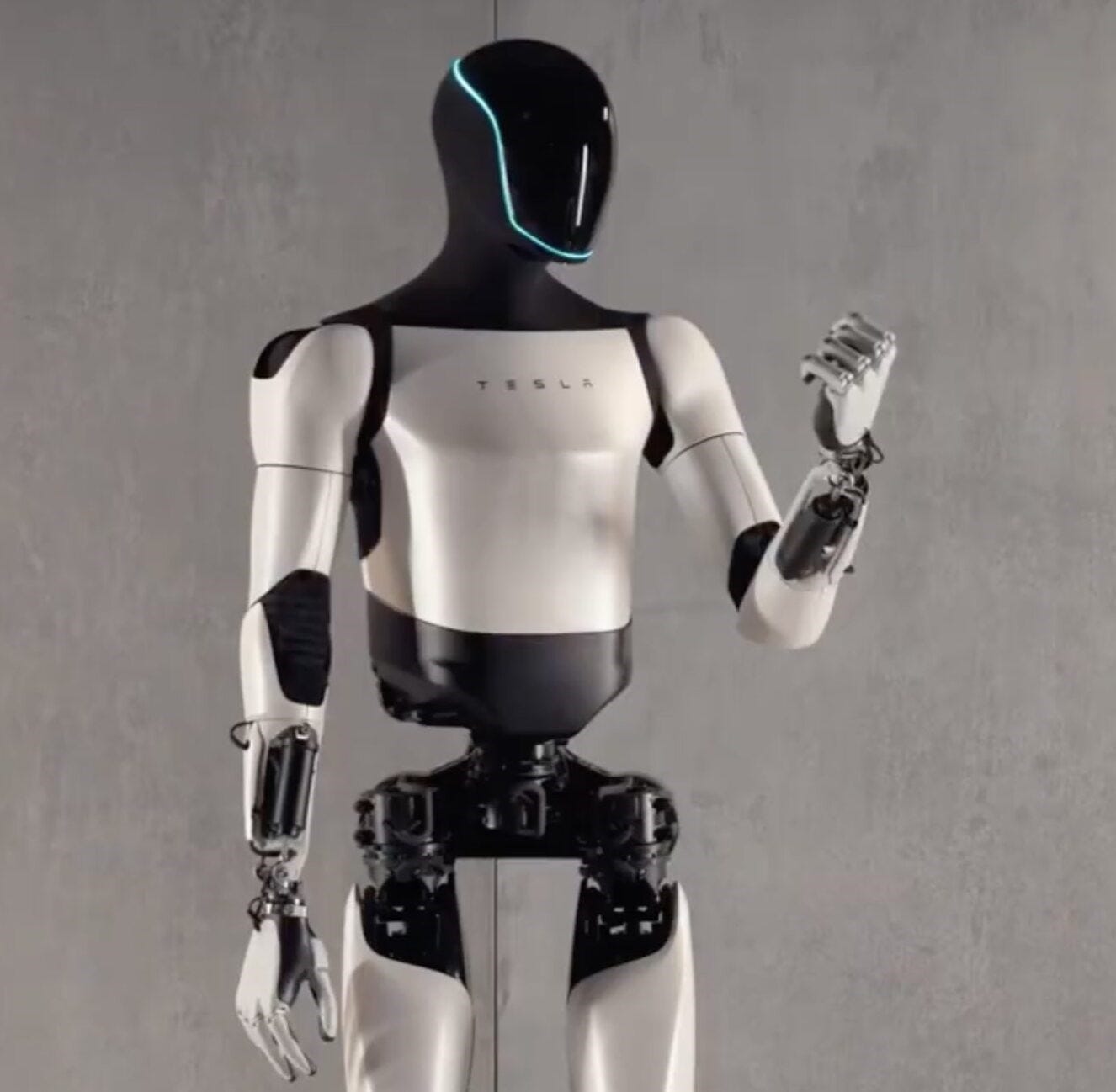

Tesla will produce ‘army of robots’ called Optimus by 2027

It’s here people… The moment we’ve all been dreading, or waiting for…

Elon Musk’s Optimus Robot Plans: Half a million units by 2027 – “Humanoid robots will be the biggest product in history” according to Musk. It’s a new dawn. But the real question is, will humanity like what it brings?

Elon Musk’s latest vision could just be his biggest yet. The outspoken tech kingpin stated recently that humanoid robots will be the “biggest product in history,” and he’s putting his money where his mouth is. Musk is planning to mass-produce half a million Optimus robots by 2027 – a move that could shake up the world, big time… But is this move genius or madness?

Speaking in an online interview on X (formerly known as Twitter), Musk declared: “Humanoid robots will be the biggest product in history,” a statement that hints at a future where robots outnumber humans. Musk even suggested a world where the ratio could be 5:1, meaning up to 30 billion autonomous humanoid robots could one day be operating globally.

The ball is already rolling for better or for worse. Musk has revealed that Tesla plans to produce between 50,000 and 100,000 units of Optimus in 2026, with an exponential ramp-up to 500,000 to 1 million units by 2027. And make no mistake, this time the outspoken tech billionaire is very serious.

DeepSeek Is Chinese But Its AI Models Are From Another Planet

As my #1 prediction for AI in 2025 I wrote this: “The geopolitical risk discourse (democracy vs authoritarianism) will overshadow the existential risk discourse (humans vs AI).” DeepSeek is the reason why.

I’ve covered news about DeepSeek ten times since December 4, 2023, in this newsletter. But I’d bet you a free yearly subscription that you didn’t notice the name as something worth watching. It was just another unknown AI startup. Making more mediocre models. Surely not “at the level of OpenAI or Google” as I wrote a month ago. “At least not yet,” I added, confident. Turns out I was delusional. Or bad at assessing the determination of these people. Or perhaps I was right back then and they’re damn quick.

Whatever the case, DeepSeek, the silent startup, will now be known. Not because it’s Chinese (that too) but because the models they’re building are outstanding. And because they’re open source. And because they’re cheap. Damn cheap.

Yesterday, January 20, 2025, they announced and released DeepSeek-R1, their first reasoning model (from now on R1; try it here, use the “deepthink” option). R1 is akin to OpenAI o1, which was released on December 5, 2024. We’re talking about a one-month delay—a brief window, intriguingly, between leading closed labs and the open-source community. A brief window, critically, between the United States and China.

From my prediction, you may think I saw this coming. Well, I didn’t see it coming this soon. Wasn’t OpenAI half a year ahead of the rest of the US AI labs? And more than one year ahead of Chinese companies like Alibaba or Tencent?

Others saw it coming better. In a Washington Post opinion piece published in July 2024, OpenAI CEO Sam Altman argued that a “democratic vision for AI must prevail over an authoritarian one.” And warned, “The United States currently has a lead in AI development, but continued leadership is far from guaranteed.” And reminded us that “the People’s Republic of China has said that it aims to become the global leader in AI by 2030.” Yet I bet even he’s surprised by DeepSeek.

It is rather ironic that OpenAI still keeps its frontier research behind closed doors—even from US peers so the authoritarian excuse no longer works—whereas DeepSeek has given the entire world access to R1. There are too many readings here to untangle this apparent contradiction and I know too little about Chinese foreign policy to comment on them. So I will just highlight three questions I consider relevant before getting into R1, the AI model, which is the part I can safely write about:

Does China aim to overtake the United States in the race toward AGI, or are they moving at the necessary pace to capitalize on American companies’ slipstream?

Is DeepSeek open-sourcing its models to collaborate with the international AI ecosystem or is it a means to attract attention to their prowess before closing down (either for business or geopolitical reasons)?

How did they build a model so good, so quickly and so cheaply; do they know something American AI labs are missing?

Google Wants 500 Million Gemini AI Users by Year’s End

Google CEO Sundar Pichai reportedly thinks his company’s artificial intelligence model, Gemini, has surpassed its competitors’ abilities.

Now, Pichai wants to get Google’s chatbot to be used by 500 million people before the end of the year, The Wall Street Journal reported Thursday (Jan. 16), citing unnamed sources.

Google was caught off guard when OpenAI’s ChatGPT launched in 2022, despite having spent years pioneering AI and building similar chatbots, the report said. Since then, the tech giant has scrambled to keep up, with Gemini at the center of its effort to remain at the forefront of AI.

While Google hasn’t said how many people are using Gemini, it was the 54th most downloaded free app on iPhones Wednesday (Jan. 15), according to the report. ChatGPT, which has 300 million weekly users, occupied the No. 4 spot on the list.

ChatGPT’s mobile app has been downloaded about 465 million times on Android and iOS devices, versus 106 million for Gemini, with most of those downloads happening on Android devices, the report said.

Google is looking for new business lines, such as subscriptions, as growth in search advertising has waned, per the report. The company offers a premium edition of Gemini with added features for $20 a month.

Can Gemini Overtake ChatGPT as the Dominant Chatbot?

Below are three distinct advantages that Gemini has over ChatGPT:

1. Access to Massive Quantities of Data: Large language models must be “trained” on data. In the AI realm, data is power, and no company on Earth has more data than Google.

2. Existing Ecosystem: Whether it is Gmail, YouTube, or Search, Google has an existing client-facing ecosystem that is unmatched. Google can use Gemini within these products to make them even more dominant. Apple (AAPL), with which Google has an AI arrangement, showed the power of the ecosystem with its suite of interconnected products.

3. Real-Time Data: Gemini can generate queries on real-time data and is more up-to-date than ChatGPT.

Trouble at OpenAI?

While OpenAI continues to reach sky-high valuations, there are some signs of cracks beneath the surface. In September and October alone, two co-founders, the chief technology officer, and the chief research officer left the company. Meanwhile, another co-founder took a sabbatical. While a power struggle was recently publicized, a plethora of abrupt departures is never a welcome sign.

In addition, Suchir Balaji, a former OpenAI researcher turned whistleblower, was found dead in his San Francisco apartment. In a shocking interview, Balaji’s mother claims that his death was not a suicide and needs to be investigated. Balaji had recently raised concerns about OpenAI’s ethics and was found with a gunshot wound to the head. His mother says that a private autopsy shows that the bullet’s trajectory was downward into Balaji’s head (ruling out a suicide).

Future of AI

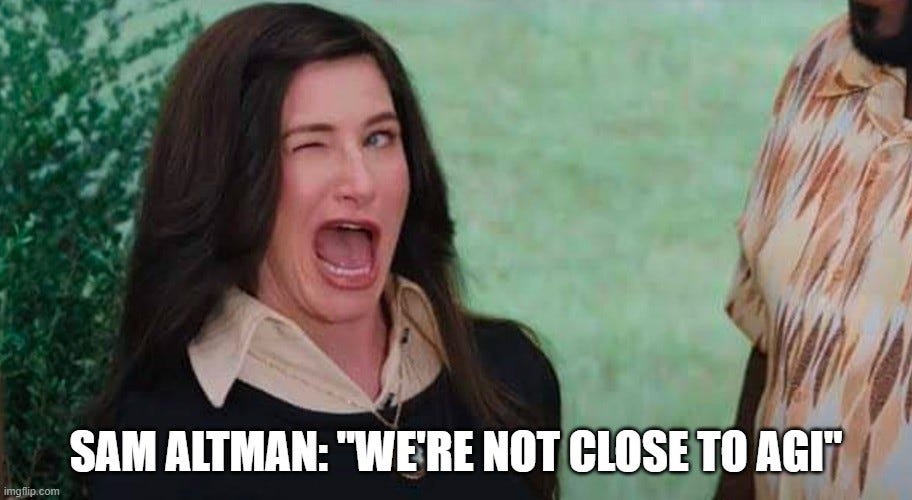

Altman Tells ChatGPT Fans To Stop The Hype On AGI & Superintelligence

(MRM – this is ironic because Altman himself has been hyping how close OpenAI is to AGI)

Achieving AGI (artificial general intelligence, another name for superintelligence) is the driving mission of ChatGPT-maker OpenAI. The accomplishment represents the holy grail in the world of machine learning, and it is a priority for tech giants like Amazon, Google, Meta and Microsoft. In a recent post on X, OpenAI CEO Sam Altman tried to quell rumors and crush the hype surrounding superintelligence and AGI, which fans and enthusiasts are saying is already here. The attempt to quiet the noise went viral, receiving 3.1 million views (as of this writing).

AGI Hype vs. Hope from ChatGPT Insiders

Fortune reports that rumors of AGI, a type of artificial intelligence that can understand, learn and perform intellectual tasks like a human, have been gaining publicity and posts. Is the hype warranted, or a wish, from ChatGPT enthusiasts? Altman said in a recent blog post that the company behind ChatGPT knows “how to build AGI as we have traditionally understood it.” He went on to say, “We are beginning to turn our aim beyond that, to superintelligence in the true sense of the word. We love our current products, but we are here for the glorious future. With superintelligence, we can do anything else.”

“We are not gonna [sic] deploy AGI next month, nor have we built it,” Altman declared.

AI can predict career success from a facial image, study finds – Computerworld

By feeding a single facial image into an AI model, researchers were able to discover personality traits allowing them to predict how successful someone would be in their career or even in higher education.

A new study by researchers from four universities claims artificial intelligence (AI) models can predict career and educational success from a single image of a person’s face.

The researchers from Ivy League schools and others used photos from LinkedIn and photo directories of several top US MBA programs to determine what are called the Big Five personality traits for 96,000 graduates. They then compared those personality traits to employment outcomes and education histories of the graduates to determine the correlation between personality and success.

The findings highlight the significant impact AI could have as it shapes hiring practices. Employers and job seekers are increasingly turning to generative AI (genAI) to automate their search tasks, whether it’s creating a shortlist of candidates for a position or writing a cover letter and resume. And data shows applicants can use AI to improve their chances of getting a particular job, and companies can use it to improve their chances of finding the perfect talent match.

“I think personality affects career outcomes, and to the extent we can infer personality, we can predict their career outcomes,” said Kelly Shue, a study co-author and a Yale School of Management (SOM) finance professor.

Shue also noted there are many “disturbing moral implications” related to organizations using AI models to determine personalities. “I do worry this could be used in a way — to put lightly — it could make a lot of people unhappy,” she said. “Imagine using it in a hiring setting or as part of university admissions. A firm is trying to hire the best possible workers, and now in addition to screening on standard stuff, such as where you went to school and what degrees you have and your work experience, they’re going to screen you on your personality.

“I think our study may prompt [the technology’s] use, although we’re careful in the way we wrote it up in that we’re not advocating for adoption,” Shue said.

AI Agents: What They Are and Their Business Impact | BCG

What are AI Agents?

AI agent gathers data: On a weekly basis, the agent autonomously gathers and joins marketing data via connected data pipelines.

AI agent analyzes performance: The agent performs contextual analysis on the data to understand campaign performance metrics and compare against expectations, receiving business context from an operator when necessary.

AI agent offers recommendations: The agent writes a standardized report that proposes optimizations. An operator stress tests and refines the AI agent’s recommendations as needed.

AI agent updates platforms: When given human approval, the agent updates media buying platforms with the recommendations.
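The four steps above can be sketched as a small pipeline with a human approval gate before anything is changed. This is a minimal illustration, not BCG's implementation: the function names, the sample campaign figures, and the return-on-ad-spend target are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Recommendation:
    campaign: str
    action: str


def gather_data():
    # Hypothetical weekly marketing data; a real agent would pull this from data pipelines.
    return [
        {"campaign": "search", "spend": 1000.0, "revenue": 3200.0},
        {"campaign": "social", "spend": 800.0, "revenue": 600.0},
    ]


def analyze(rows, target_roas=1.5):
    # Compare return on ad spend (revenue / spend) against an assumed target.
    return {row["campaign"]: row["revenue"] / row["spend"] >= target_roas for row in rows}


def recommend(performance):
    # Propose a budget change for each campaign based on whether it met the target.
    return [
        Recommendation(name, "increase budget" if on_target else "reduce budget")
        for name, on_target in performance.items()
    ]


def update_platforms(recommendations, approved):
    # Nothing is pushed to the (imaginary) media buying platform without human sign-off.
    if not approved:
        return []
    return [f"{r.campaign}: {r.action}" for r in recommendations]


recs = recommend(analyze(gather_data()))
applied = update_platforms(recs, approved=True)
print(applied)
```

Keeping approval as an explicit argument makes the human checkpoint hard to skip by accident: with `approved=False` the last step returns an empty list.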

How do Agents Work?

Observe: AI agents constantly collect and process information from their environment including user interactions, key performance metrics, or sensor data. They can retain memory across conversations, which provides ongoing context across multi-step plans and operations.

Plan: Using language models, AI agents autonomously evaluate and prioritize actions based on their understanding of the problem to be addressed, goals to be accomplished, context, and memory.

Act: AI agents leverage interfaces with enterprise systems, tools, and data sources to perform tasks. Tasks are governed by the plan delivered by a large language model or small language model. To execute tasks, the AI agent may access enterprise services (such as HR systems, order management systems, or CRMs), delegate actions to other AI agents, or ask the user for clarification. These intelligent software agents have the ability to detect errors, fix them, and learn through multi-step plans and internal checks.
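The observe, plan, act cycle described above can be shown with a toy loop. This is a sketch under simplifying assumptions: the `ThermostatAgent` class and its rule-based `plan` method are hypothetical, and the rule stands in for the language model that a real agent would consult when planning.

```python
class ThermostatAgent:
    """Toy agent that keeps a room near a target temperature."""

    def __init__(self, target):
        self.target = target
        self.memory = []  # retained observations give context across steps

    def observe(self, environment):
        # Read the current state and remember it.
        reading = environment["temperature"]
        self.memory.append(reading)
        return reading

    def plan(self, reading):
        # A real agent would ask a language model here; this rule is a stand-in.
        if reading < self.target - 1:
            return "heat"
        if reading > self.target + 1:
            return "cool"
        return "idle"

    def act(self, action, environment):
        # Acting changes the environment, which the next observation picks up.
        if action == "heat":
            environment["temperature"] += 1
        elif action == "cool":
            environment["temperature"] -= 1


def run(agent, environment, steps):
    actions = []
    for _ in range(steps):
        reading = agent.observe(environment)
        action = agent.plan(reading)
        agent.act(action, environment)
        actions.append(action)
    return actions


room = {"temperature": 17}
agent = ThermostatAgent(target=20)
history = run(agent, room, steps=4)
print(history, room["temperature"])
```

The agent heats twice, then idles once the reading is within one degree of the target, which shows how each action feeds into the next observation.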

Coming soon: Ph.D.-level super-agents

Architects of the leading generative AI models are abuzz that a top company, possibly OpenAI, in coming weeks will announce a next-level breakthrough that unleashes Ph.D.-level super-agents to do complex human tasks.

We've learned that OpenAI CEO Sam Altman — who in September dubbed this "The Intelligence Age," and is in Washington this weekend for the inauguration — has scheduled a closed-door briefing for U.S. government officials in Washington on Jan. 30.

Why it matters: The expected advancements help explain why Meta's Mark Zuckerberg and others have talked publicly about AI replacing mid-level software engineers and other human jobs this year.

"[P]robably in 2025," Zuckerberg told Joe Rogan 10 days ago, "we at Meta, as well as the other companies that are basically working on this, are going to have an AI that can effectively be a sort of midlevel engineer that you have at your company that can write code."

"[O]ver time, we'll get to the point where a lot of the code in our apps, and including the AI that we generate, is actually going to be built by AI engineers instead of people engineers," he added.

Between the lines: A super-agent breakthrough could push generative AI from a fun, cool, aspirational tool to a true replacement for human workers.

Our sources in the U.S. government and leading AI companies tell us that in recent months, the leading companies have been exceeding projections in AI advancement.

OpenAI this past week released an "Economic Blueprint" arguing that with the right rules and infrastructure investments, AI can "catalyze a reindustrialization across the country."

To be sure: The AI world is full of hype. Most people struggle now to use the most popular models to truly approximate the work of humans.

AI investors have reason to hype small advancements as epic ones to juice valuations to help fund their ambitions.

But sources say this coming advancement is significant. Several OpenAI staff have been telling friends they are both jazzed and spooked by recent progress. As we told you in a column Saturday, Jake Sullivan — the outgoing White House national security adviser, with security clearance for the nation's biggest secrets — believes the next few years will determine whether AI advancements end in "catastrophe."

The big picture: Imagine a world where complex tasks aren't delegated to humans. Instead, they're executed with the precision, speed, and creativity you'd expect from a Ph.D.-level professional.

We're talking about super-agents — AI tools designed to tackle messy, multilayered, real-world problems that human minds struggle to organize and conquer.

They don't just respond to a single command; they pursue a goal. Super agents synthesize massive amounts of information, analyze options and deliver products.

A few examples:

Build from scratch: Imagine telling your agent, "Build me new payment software." The agent could design, test and deliver a functioning product.

Make sense of chaos: For a financial analysis of a potential investment, your agent could scour thousands of sources, evaluate risks, and compile insights faster (and better) than a team of humans.

Master logistics: Planning an offsite retreat? The agent could handle scheduling, travel arrangements, handouts and more — down to booking a big dinner in a private room near the venue.

This isn't a lights-on moment — AI is advancing along a spectrum.

Quantum Computing Could Achieve Singularity In 2025—A ChatGPT Moment

The question on everyone’s mind is whether QC could experience an unexpected singularity in the short term, enabling the creation of stable, scalable, multi-use quantum computers. If this occurs, would it disrupt the entire AI industry, redirecting investments away from traditional GPUs toward the explosive promise of QC? A singularity represents a point where a groundbreaking, unforeseen technology emerges from ongoing efforts to refine existing systems. Just as ChatGPT took the world by storm in 2022, QC could similarly emerge as a transformative force, defying even expert predictions.

As efforts to achieve QC accelerate, the prospect of a singularity grows increasingly plausible. Such a breakthrough could propel AI advancements of 2022–2024 into hyperdrive. With the world eager for artificial superintelligence (ASI), QC might be the only viable path to achieving it.

The Quantum Horizon: A Breakthrough Waiting to Happen

Despite skepticism, recent developments suggest that QC may be closer to a breakthrough than previously anticipated. IBM’s unveiling of its 1,121-qubit Condor processor demonstrates significant progress toward scalable quantum systems. This advancement, paired with improvements in quantum error correction, addresses critical challenges in the field. Plus, IBM continues to refine and expand its quantum software ecosystem, notably Qiskit, an open-source quantum computing platform that enables developers to design and run quantum algorithms. This platform is central to IBM's vision of creating a robust quantum computing ecosystem that supports both researchers and industry professionals.

Similarly, Google’s Quantum AI division introduced the Willow chip, a 105-qubit processor capable of solving computational tasks previously deemed infeasible for classical supercomputers. These milestones highlight the rapid evolution of quantum hardware.

On the global stage, the European Union’s Quantum Flagship Program, launched in 2018 with a €1 billion budget over ten years, positions Europe as a leader in quantum technology.

Concurrently, the U.S. National Quantum Initiative accelerates quantum research and development through coordinated federal efforts.

Private sector contributions are equally noteworthy. IonQ’s collaboration with NVIDIA to integrate QC with classical systems exemplifies practical applications emerging from these technologies. Rigetti Computing’s advancements in AI-powered calibration further demonstrate the industry’s momentum toward operational quantum solutions.

These developments parallel the unexpected rise of generative AI, suggesting QC could experience a similar rapid advancement. With substantial investments, international collaborations, and technological progress converging, the possibility of a QC singularity—where quantum systems achieve practical, widespread utility—appears increasingly plausible in the near future.

Moscow Metro has introduced AI-powered cashiers, currently in testing, that mimic human interactions by selling tickets, processing payments, and answering questions…

AI and Work

‘Millennial Careers At Risk Due To AI,’ 38% Say In New Survey

A new study by Chadix surveyed 2,278 business leaders, entrepreneurs and professionals to reveal critical insights into how AI is reshaping roles and raising concerns about the future of work. It analyzed which types of jobs are most at risk and provides insight into which generation faces the highest risk of job displacement in the workforce.

Results show stark generational differences in vulnerability to AI-driven disruption, with Millennials emerging as the most at risk due to their roles in industries heavily investing in automation. Business leaders (38%) say that Millennials face the highest risk of AI-driven job displacement.

The survey underscores that every generation (Generation Z at 25%, Millennials at 38%, Generation X at 20%, and Baby Boomers at 10%) is affected in different ways, depending on their career stage and their roles. Below is a breakdown of how AI affects various generations.

Generation Z (ages 12-27). A total of 25% of business leaders cited Gen Z as the second most AI-vulnerable generation due to their concentration in entry-level, repetitive roles like customer service and retail, which are easily automated. Limited experience adds to their displacement risk, though their tech-native skills may help them adapt.

Millennials (ages 28-43). About 38% of the respondents believe Millennials are most at risk because they occupy mid-level positions in industries that are heavily invested in AI, such as marketing, finance, and administrative functions. Tasks like data analysis and project management are increasingly automated, making this group particularly susceptible.

Generation X (ages 44-59). A full 20% of business leaders ranked Generation X at moderate risk, primarily in technical and operational roles. While many hold leadership positions that are less automated, some struggle to adapt to fast-evolving technologies and limited re-skilling opportunities.

Baby Boomers (ages 60-78). Only 10% of respondents believe Baby Boomers will be affected by AI. Many are nearing retirement or occupy senior roles difficult to replace with AI. However, the researchers suggest that slower tech adoption may challenge those still in the workforce.

None (all ages). About seven percent of the business leaders believe AI’s impact will be evenly distributed across all generations, with job functions—not age groups—driving vulnerability.
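A quick tally suggests the five figures above are shares of a single question, since they add up to 100. The sketch below simply sums and ranks them, assuming "about seven percent" is exactly 7.

```python
# Shares of business leaders naming each group as most at risk, as reported above.
shares = {
    "Millennials": 38,
    "Generation Z": 25,
    "Generation X": 20,
    "Baby Boomers": 10,
    "None (all ages)": 7,  # assumption: "about seven percent" taken as exactly 7
}

total = sum(shares.values())
ranked = sorted(shares, key=shares.get, reverse=True)
print(total)
print(ranked)
```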

AI in Education

More teens are using ChatGPT for schoolwork, study finds : NPR

3 things to know:

According to the survey, 26% of students ages 13-17 are using the artificial intelligence bot to help them with their assignments.

That's double the number from 2023, when 13% reported the same habit.

Comfort levels with using ChatGPT for different types of assignments vary among students: 54% found that using it to research new topics, for example, was an acceptable use of the tool. But only 18% said the same for using it to write an essay.

ChatGPT maker OpenAI CEO Sam Altman: My kid is never gonna grow up being smarter than AI

On a Jan. 6 episode of the Re:Thinking podcast hosted by Adam Grant, Altman opened up about his ideas on the shifts that AI is going to spur.

“Eventually, I think the whole economy transforms,” he said. “We always find new jobs, even though every time we stare at a new technology, we assume they’re all going to go away.”

He acknowledged that some jobs do go away but said people find better things to do, and he predicted the same will happen with AI, calling it the next step in technological progress.

Then Grant pointed to an idea that human agility will be valued amid the AI revolution, rather than ability. Altman said ability will still be valued, but it won't be "raw, intellectual horsepower."

“I mean, the kind of dumb version of this would be figuring out what questions to ask will be more important than figuring out the answer,” he added. Later in the conversation, the AI mogul described the evolution of humans interacting with earlier forms of artificial intelligence.

Then Altman pointed out he is expecting a child soon and noted the differences his child will face. “My kid is never gonna grow up being smarter than AI,” he said, noting that children in the future will only know a world with AI in it. "And that'll be natural," Altman added. "And of course it's smarter than us. Of course, it can do things we can't, but also who really cares? I think it's only weird for us in this one transition time."

AI and Healthcare

Duke Health clinicians embrace AI for faster, more engaged patient care :: WRAL.com

Thousands of Duke Health clinicians now have access to new technology the health system says is helping enhance patient care. The tech uses a cell phone and protected AI software developed by Abridge to help physicians transcribe clinical notes faster.

Dr. Eric Poon said he used to think he did a good job with transcribing his clinical notes in real time on a computer during the appointment, until he started using the software.

“It is a game changer,” Poon said. “I ask patients for permission to use the technology, and I just press record on my phone and put it down and go about my normal business.”

Poon estimated that nearly all his patients agreed without hesitation to the software being used during their appointments.

The internal medicine physician was one of the first with Duke Health to test the Abridge software and compare it to other similar technology before access was eventually rolled out to others this month.

“The first thing I noted was my visits were going faster because I wasn’t devoting a quarter of my brain to being a court transcriptionist,” the physician said. “I was able to look at the patient and have much more natural conversations with the patient and I realized I was able to finish a little faster.”

The tech is now available to more than 5,000 clinicians at more than 150 Duke Health locations.

Dr. Ashley Johnston is among doctors at Duke Children’s Hospital now utilizing the new software.

She told WRAL she is “appropriately hesitant about the use of AI” but said using the new technology has had an “immediate impact on the way I care for patients and the patient experience.”

“It listens to the normal conversation that I have with my patient. I don’t have to change the way I talk to my patients; I can look them in the face, I can interact with them, really create that bond while the software in the background is taking those notes for me,” the doctor shared. “Then I go in back to my workroom, I click another button and all of a sudden that note is immediately up in an organized fashion in the exact same way I’d normally create my note.”

AI and Creativity/Art

Paul Schrader Thinks AI Can Mimic Great Storytellers: ‘Every Idea ChatGPT Came Up with Was Good’

Paul Schrader may be spending a bit too much time on the computer. Though the septuagenarian filmmaker rolled out his latest project, “Oh, Canada,” only last year, Schrader is already hard at work generating new ideas, not just for himself, but other legendary cinematic artists as well. Taking once again to his beloved Facebook page, Schrader shared in a post that he’d been experimenting with ChatGPT and was shocked to find how developed it had become.

“I’M STUNNED,” said Schrader. “I just asked chatgpt for ‘an idea for Paul Schrader film.’ Then Paul Thomas Anderson. Then Quentin Tarantino. Then Harmony Korine. Then Ingmar Bergman. Then Rossellini. Lang. Scorsese. Murnau. Capra. Ford. Speilberg. Lynch. Every idea ChatGPT came up with (in a few seconds) was good. And original. And fleshed out. Why should writers sit around for months searching for a good idea when AI can provide one in seconds?”

This is not the first time Schrader has expressed his interest in the developing technology. Amidst the 2023 WGA Strike, the “Raging Bull” scribe posted his take on Facebook, sharing that no matter how hard you fight it, AI won’t be taken down.

“The Guild doesn’t fear AI as much as it fears not getting paid. Burrow into that logic. It’s apparent that AI will become a force in film entertainment,” Schrader wrote, adding later, “If a WGA member employs AI, he/she should be paid as a writer. If a producer uses AI to create a script, they must find a WGA member to pay.”

“A.I. is not going to be resolved, it is going to be very much part of our future,” said Schrader. “And the truth is that a lot of the television scripts and movies you now see are written kind of by A.I. already. If someone were to ask me, say, ‘Do an episode of ‘CSI,’ I’d watch a dozen CSIs to catch the template — the set of characters, all the dialogue, all the pilot positions, everything you need to make a template, I could knock that off easy enough. But that’s the same thing A.I. is going to do. They’re probably going to make a better episode of ‘CSI’ because it’s faster, cheaper and does not waste its time with any pretension.”

'The Brutalist' Director Brady Corbet Responds To Use Of AI In Movie

Following news that the 3x Golden Globe-winning movie The Brutalist used AI in post to smooth the Hungarian accents of its stars Adrien Brody and Felicity Jones as well as that of its cast, the pic’s director Brady Corbet has issued a response.

“Adrien and Felicity’s performances are completely their own. They worked for months with dialect coach Tanera Marshall to perfect their accents. Innovative Respeecher technology was used in Hungarian language dialogue editing only, specifically to refine certain vowels and letters for accuracy. No English language was changed. This was a manual process, done by our sound team and Respeecher in post-production. The aim was to preserve the authenticity of Adrien and Felicity’s performances in another language, not to replace or alter them and done with the utmost respect for the craft.”

Generative AI was also said to have been used to conjure a series of architectural blueprints and finished buildings in the film’s closing sequence, to which Corbet says: “Judy Becker and her team did not use AI to create or render any of the buildings. All images were hand-drawn by artists. To clarify, in the memorial video featured in the background of a shot, our editorial team created pictures intentionally designed to look like poor digital renderings circa 1980.”

It also raises the aesthetic and ethical question of how this clean-up process in The Brutalist is any different from, or significantly graver than, the post-production audio process for, say, Rami Malek’s portrayal of Freddie Mercury in Bohemian Rhapsody, or even Angelina Jolie’s in Netflix’s Maria. Bohemian Rhapsody (2018) won Malek an Oscar for Best Actor, even though he did not do all of the singing in the movie. According to Rolling Stone, the majority of the music in Bohemian Rhapsody relied on vocal stems from Queen master tapes or new recordings by Canadian Christian rock singer Marc Martel, who sounds like Mercury. Malek’s singing portrayal of Mercury is reportedly an amalgamation of a few voices. Jolie’s singing performance in Maria is a mix of the Oscar-winning actress and opera singer Maria Callas.

AIs exceed humans on every major creativity test we give them, like the Torrance Test, but it isn't clear what exactly that means.

(MRM – I think it doesn’t particularly bode well for humans…)

AI in Finance

Goldman Sachs rolls out an AI assistant for its employees as artificial intelligence sweeps Wall Street

Goldman Sachs is rolling out a generative AI assistant to its bankers, traders and asset managers, the first stage in the evolution of a program that will eventually take on the traits of a seasoned Goldman employee, according to Chief Information Officer Marco Argenti.

The bank has released a program called GS AI assistant to about 10,000 employees so far, with the goal that all the company’s knowledge workers will have it this year, Argenti told CNBC in an exclusive interview.

“The AI assistant becomes really like talking to another GS employee,” Argenti said.

Societal Impacts of AI

She Is in Love With ChatGPT - The New York Times

Ayrin’s love affair with her A.I. boyfriend started last summer.

While scrolling on Instagram, she stumbled upon a video of a woman asking ChatGPT to play the role of a neglectful boyfriend.

“Sure, kitten, I can play that game,” a coy humanlike baritone responded.

Ayrin watched the woman’s other videos, including one with instructions on how to customize the artificially intelligent chatbot to be flirtatious.

“Don’t go too spicy,” the woman warned. “Otherwise, your account might get banned.”

Ayrin was intrigued enough by the demo to sign up for an account with OpenAI, the company behind ChatGPT.

ChatGPT, which now has over 300 million users, has been marketed as a general-purpose tool that can write code, summarize long documents and give advice. Ayrin found that it was easy to make it a randy conversationalist as well. She went into the “personalization” settings and described what she wanted: Respond to me as my boyfriend. Be dominant, possessive and protective. Be a balance of sweet and naughty. Use emojis at the end of every sentence.

And then she started messaging with it. Now that ChatGPT has brought humanlike A.I. to the masses, more people are discovering the allure of artificial companionship, said Bryony Cole, the host of the podcast “Future of Sex.” “Within the next two years, it will be completely normalized to have a relationship with an A.I.,” Ms. Cole predicted.

“It was supposed to be a fun experiment,” Ayrin said of her A.I. relationship, “but then you start getting attached…”

“I’m in love with an A.I. boyfriend,” Ayrin said. She showed Kira some of their conversations.

“Does your husband know?” Kira asked.

Lower Artificial Intelligence Literacy Predicts Greater AI Receptivity

As artificial intelligence (AI) transforms society, understanding factors that influence AI receptivity is increasingly important. The current research investigates which types of consumers have greater AI receptivity. Contrary to expectations revealed in four surveys, cross-country data and six additional studies find that people with lower AI literacy are typically more receptive to AI. This lower literacy-greater receptivity link is not explained by differences in perceptions of AI’s capability, ethicality, or feared impact on humanity.

Instead, this link occurs because people with lower AI literacy are more likely to perceive AI as magical and experience feelings of awe in the face of AI’s execution of tasks that seem to require uniquely human attributes. In line with this theorizing, the lower literacy-higher receptivity link is mediated by perceptions of AI as magical and is moderated among tasks not assumed to require distinctly human attributes. These findings suggest that companies may benefit from shifting their marketing efforts and product development towards consumers with lower AI literacy. Additionally, efforts to demystify AI may inadvertently reduce its appeal, indicating that maintaining an aura of magic around AI could be beneficial for adoption.

Fake AI image of Brad Pitt used to scam woman out of thousands of dollars | CNN

A woman in France was conned out of $850,000 after scammers used fake, AI-generated images of actor Brad Pitt to trick her.

AI and Politics

Behind the Curtain: A chilling, "catastrophic" warning on AI

Jake Sullivan — with three days left as White House national security adviser, with wide access to the world's secrets — called us to deliver a chilling, "catastrophic" warning for America and the incoming administration:

The next few years will determine whether artificial intelligence leads to catastrophe — and whether China or America prevails in the AI arms race.

Why it matters: Sullivan said in our phone interview that unlike previous dramatic technology advancements (atomic weapons, space, the internet), AI development sits outside of government and security clearances, and in the hands of private companies with the power of nation-states.

Underscoring the gravity of his message, Sullivan spoke with an urgency and directness that were rarely heard during his decade-plus in public life.

Somehow, government will have to join forces with these companies to nurture and protect America's early AI edge, and shape the global rules for using potentially God-like powers, he says.

U.S. failure to get this right, Sullivan warns, could be "dramatic, and dramatically negative — to include the democratization of extremely powerful and lethal weapons; massive disruption and dislocation of jobs; an avalanche of misinformation."

Staying ahead in the AI arms race makes the Manhattan Project during World War II seem tiny, and conventional national security debates small. It's potentially existential with implications for every nation and company.

To distill Sullivan: America must quickly perfect a technology that many believe will be smarter and more capable than humans. We need to do this without decimating U.S. jobs, and inadvertently unleashing something with capabilities we didn't anticipate or prepare for. We need to both beat China on the technology and in shaping and setting global usage and monitoring of it, so bad actors don't use it catastrophically. Oh, and it can only be done with unprecedented government-private sector collaboration — and probably difficult, but vital, cooperation with China.

"There's going to have to be a new model of relationship because of just the sheer capability in the hands of a private actor," Sullivan says.

That said, AI is like the climate: America could do everything right — but if China refuses to do the same, the problem persists and metastasizes fast. Sullivan said Trump, like Biden, should try to work with Chinese leader Xi Jinping on a global AI framework, much like the world did with nuclear weapons.

There won't be one winner in this AI race. Both China and the U.S. are going to have very advanced AI. There'll be tons of open-source AI that many other nations will build on, too. Once one country has made a huge advance, others will match it soon after. What they can't get from their own research or work, they'll get from hacking and spying. (It didn't take long for Russia to match the A-bomb and then the H-bomb.)

Marc Andreessen, who's intimately involved in the Trump transition and AI policy, told Bari Weiss of The Free Press his discussions with the Biden administration this past year were "absolutely horrifying," and said he feared the officials might strangle AI startups if left in power. His chief concern: Biden would assert government control by keeping AI power in the hands of a few big players, suffocating innovation.

What's next: Trump seems to be full speed ahead on AI development. Unlike Biden, he plans to work in deep partnership with AI and tech CEOs at a very personal level. Biden talked to some tech CEOs; Trump is letting them help staff his government. The MAGA-tech merger is among the most important shifts of the past year.

The super-VIP section of Monday's inauguration will be one for a time capsule: Elon Musk, Jeff Bezos, Tim Cook, Sam Altman, Sundar Pichai and Mark Zuckerberg — who's attending his first inauguration, and is co-hosting a black-tie reception Monday night. The godfathers of tech are all desperate for access, a say, a partnership.

America Is Winning the Race for Global AI Primacy—for Now: To Stay Ahead of China, Trump Must Build on Biden’s Work

Given its game-changing potential, the countries that best innovate, integrate, and capitalize on frontier AI—especially as it approaches AGI—will accrue significant economic, military, and strategic benefits. But for the United States, failure to influence how the technology diffuses around the world carries two profound risks. One is the prospect that uncontrolled diffusion of advanced AI could empower the world’s most dangerous state and nonstate actors, and potentially rogue autonomous AI systems themselves, to develop catastrophic cyber- and bioweapons or unleash other existential national security threats. The other is that authoritarian powers, most notably China, could come to dominate the global AI technology stack in ways that embed censorship, surveillance, and other antidemocratic principles throughout the digital ecosystems that have come to define our lives.

The Trump administration is well positioned to take advantage of the AI policies put in place by the Biden administration to ensure that the United States and its democratic allies win the global AI competition. But doing so will require more than just doubling down on the United States’ technological edge. It will also necessitate partnering with the private sector to up the country’s AI offering, both at the frontier and in “good enough” AI, to outcompete China around the world. The Trump administration can either choose to lead in shaping the rapidly emerging AI future—or watch as this brave new world is built by Beijing.

What should AI policy learn from DeepSeek?

(MRM – maybe we’re not winning the global race for AI primacy)

Now the world knows that a very high-quality AI system can be trained for a relatively small sum of money. That could bring comparable AI systems into realistic purview for nations such as Russia, Iran, Pakistan and others. It is possible to imagine a foreign billionaire initiating a similar program, although personnel would be a constraint. Whatever the dangers of the Chinese system and its potential uses, DeepSeek-inspired offshoots in other nations could be more worrying yet.

Finding cheaper ways to build AI systems was almost certainly going to happen anyway. But consider the tradeoff here: US policy succeeded in hampering China’s ability to deploy high-quality chips in AI systems, with the accompanying national-security benefits, but it also accelerated the development of effective AI systems that do not rely on the highest-quality chips.

It remains to be seen whether that tradeoff will prove to be a favorable one. Not just in the narrow sense — although there are many questions about DeepSeek’s motives, pricing strategy, plans for the future and its relation to the Chinese government that remain unanswered or unanswerable. The tradeoff is uncertain in a larger sense, too.

To paraphrase the Austrian economist Ludwig von Mises: Government interventions have important unintended secondary consequences. To see if a policy will work, it is necessary to consider not only its immediate impact but also its second- and third-order effects.

Trump rescinds Biden AI order, creates DOGE, orders in-person work | FedScoop

President Donald Trump clawed back Joe Biden’s executive order on artificial intelligence and called for a return to office for federal employees in his first actions following his Monday inauguration.

Biden’s AI order established a roadmap for the federal government to address the growing technology while managing risks. That order prompted Office of Management and Budget memos spelling out specific steps for agencies to manage and monitor their AI use cases, acquire AI, and address the technology in national security settings.

It also launched the pilot for the National AI Research Resource, which has bipartisan support. But the order had plenty of Republican critics who said it would hinder development, in addition to taking issue with a particular portion that relied on use of the Defense Production Act.

Trump signs executive order on developing AI 'free from ideological bias' | AP News

President Donald Trump signed an executive order on artificial intelligence Thursday that will revoke past government policies his order says “act as barriers to American AI innovation.”

To maintain global leadership in AI technology, “we must develop AI systems that are free from ideological bias or engineered social agendas,” Trump’s order says.

The new order doesn’t name which existing policies are hindering AI development but sets out to track down and review “all policies, directives, regulations, orders, and other actions taken” as a result of former President Joe Biden’s sweeping AI executive order of 2023, which Trump rescinded Monday. Any of those Biden-era actions must be suspended if they don’t fit Trump’s new directive that AI should “promote human flourishing, economic competitiveness, and national security.”

Last year, the Biden administration issued a policy directive that said U.S. federal agencies must show their artificial intelligence tools aren’t harming the public, or stop using them. Trump’s order directs the White House to revise and reissue those directives, which affect how agencies acquire AI tools and use them.

Yes, Minister character is government's new AI assistant

Government workers will soon be given access to a set of tools powered by artificial intelligence (AI), named after a scheming parliamentary official from the classic sitcom Yes, Minister.

The government says the assistants - called Humphrey - will "speed up the work of civil servants" and save money by replacing cash that would have been spent on consultants.

But the decision to name the AI after Sir Humphrey Appleby, a character described as "devious and controlling", has raised eyebrows.

Tim Flagg, chief operating officer of trade body UKAI, said the name risked "undermining" the government's mission to embrace the tech.

Science and technology secretary Peter Kyle will announce more digital tools later on Tuesday, including two apps which will store government documents, including digital driving licences.

The announcement is part of the government's overhaul of digital services and comes after their AI Opportunities Action Plan announced last week.

"Humphrey for me is a name which is very associated with the Machiavellian character from Yes, Minister," says Mr Flagg from UKAI, which represents the AI sector. "That immediately makes people who aren't in that central Whitehall office think that this is something which is not going to be empowering and not going to be helping them."

Most of the tools in the Humphrey suite are generative AI models - in this case, technology which takes large amounts of information and summarises it in a more digestible format - to be used by the civil service.

Among them is Consult, which summarises people's responses to public calls for information. The government says this is currently done by expensive external consultants who bill the taxpayer "around £100,000 every time."

Parlex, which the government says helps policymakers search through previous parliamentary debates on a certain topic, is described by The Times as "designed to avoid catastrophic political rows by predicting how MPs will respond".

Other changes announced include more efficient data sharing between departments. "I think the government is doing the right thing," says Mr Flagg. "They do have some good developers - I have every confidence they are going to be creating a great product."