AGI is coming sooner than we think, NY Times greenlights AI tools, Elon announces Grok 3, Meta invests in AI robots, No one has the full picture of AI, AI Co-Scientists, and more!

A lot is going on in AI this week, so…

AI Tips & Tricks

These are the 12 most popular AI tools right now, according to a new survey – and rivals are catching ChatGPT | TechRadar

MRM – Summary via AI

ChatGPT – The leading AI chatbot by OpenAI, offering text and image generation, document analysis, and web search. It has free and paid tiers, with broad adoption in the US and UK.

Google Gemini – A multimodal AI from Google that processes text, images, audio, and documents, with a free tier and premium features for advanced users.

Microsoft Copilot – Integrated into Microsoft products, Copilot assists with text generation, problem-solving, idea brainstorming, image creation, and web searches.

Grammarly – A writing assistant that leverages AI for text generation, grammar checking, and rewriting, available in free and paid versions.

Image Creator from Microsoft Designer – A graphic design tool powered by AI, allowing users to generate images with free and paid options for additional credits.

Perplexity – An AI-powered chatbot offering text generation, document analysis, and web search, featuring both free and premium plans.

Claude – A chatbot with brainstorming, document processing, and scheduling capabilities, offering multiple AI models with free and paid options.

DALL-E – OpenAI’s AI image generator, now integrated into ChatGPT, enabling users to create and edit images via text prompts.

Jasper – A marketing-focused AI platform providing chatbots, image generation, and trend analysis, available with a free trial and paid plans.

DreamStudio, Midjourney, Stable Diffusion (Joint) – AI-powered image and content generators that create visuals and multimedia through text prompts, all requiring paid subscriptions.

I replaced my to-do list with ChatGPT’s Tasks feature and it completely changed the way I plan my life | TechRadar

I was intrigued when OpenAI debuted the Tasks feature for ChatGPT. Tasks are designed to help you build and manage those to-do lists by automating their creation and maintenance.

What sets Tasks apart is that the feature can break down big projects into manageable steps while keeping everything in one organized system. Unlike my usual method of jotting down random reminders and hoping I remember to check them, Tasks ensures nothing slips through the cracks.

ChatGPT Tasks will remind you about upcoming deadlines, suggest next steps based on ongoing projects, and even learn from your planning habits to refine future recommendations. Instead of writing a static checklist, Tasks makes ChatGPT more of a proactive assistant. I've been using it a lot of late, and, to my surprise, I've been far more on top of what I have to do than ever before.

If you want to use the Tasks feature, subscribe to ChatGPT Plus or a higher tier of access, as it's still in beta. If you are subscribed, you'll find "GPT-4o with scheduled tasks" among the model options. You can then tell ChatGPT to set up a Task for whatever you want, including setting up an alert time and day. You can have it be a one-off or a recurring reminder, and you'll see it pop up on the mobile app or on a desktop or web client if you have ChatGPT open.

Evidence of political bias in ChatGPT? Researchers reveal a hack to bypass it - AS USA

For many, ChatGPT and artificial intelligence are already a regular feature of daily life. But in the grand sweep of technological development the platforms are still in their infancy, and that brings major problems.

Developers, researchers, regulators and governments are still struggling to understand how to guide the progress of AI and a new study released this month has outlined one potential flaw in the existing systems. Namely, political biases.

Researchers from the University of East Anglia, England found that ChatGPT’s responses are more aligned with opinions of left-leaning Americans. The research is part of a study published in the Journal of Economic Behavior & Organization, in a paper entitled ‘Assessing political bias and value misalignment in generative artificial intelligence’.

“Generative AI tools like ChatGPT are not neutral; they can reflect and amplify political biases, particularly leaning left in the U.S. context,” study author Fabio Y.S. Motoki told PsyPost. “This can subtly shape public discourse, influencing opinions through both text and images. Users should critically evaluate AI-generated content, recognizing its potential to limit diverse viewpoints and affect democratic processes.”

Researchers found that ChatGPT sometimes refused to generate images that may align with a right-wing viewpoint. They did, however, uncover a way to undo this suspected bias. They were able to ‘jailbreak’ the system with a meta-story prompting technique.

Essentially this means creating new boundaries for the AI to work within, shifting the parameters of the process. They were able to push ChatGPT to create the right-leaning images by building a scenario with a researcher studying AI bias who needed an example of the right-wing perspective on a topic.

It’s an inelegant solution but it does show that, at one level, these biases are not necessarily in-built. With greater regulation and greater understanding of how these trends are created, there is hope that AI can become an even more powerful tool in the future.

AI Firm News

Elon Musk debuts Grok 3, an AI model that he says outperforms ChatGPT and DeepSeek | CNN Business

xAI on Monday unveiled its updated Grok 3 artificial intelligence model, as the Elon Musk-led startup pushes to keep pace with the advanced reasoning and search capabilities in competitors’ models.

In an event livestreamed on Musk’s X, leaders at the startup claimed Grok 3 performs better across math, science and coding benchmarks than Google’s Gemini, OpenAI’s GPT-4o, Anthropic’s Claude 3.5 and DeepSeek’s V3 model, although it’s not clear how it compares to other top reasoning models such as OpenAI o3-mini and DeepSeek R1. The company also described the tool’s new features, such as advanced web searching with “deep search,” the ability to code online games and a “big brain” mode to reason through more complex problems.

Grok 3 is immediately available to members of X’s $40 per month “Premium+” subscription plans, or users who subscribe directly on Grok’s standalone app or website.

The xAI announcement comes as competition in AI continues to ramp up, with big tech players racing to invest in bigger, stronger data centers to fuel more powerful AI models. Tech giants believe AI is on its way to revolutionizing how people work, communicate and navigate the internet, including by replacing traditional search engines and coding processes.

ChatGPT will no longer avoid controversial topics: Here’s why

Before, ChatGPT would sometimes refuse to answer sensitive questions. Now, OpenAI wants it to share different perspectives without taking a side. The company has updated its rules to ensure ChatGPT doesn’t lie or hide important details, according to a report by TechCrunch. The goal is to make it more transparent and helpful.

Why the change? Some people, especially in conservative groups, have accused ChatGPT of being biased. They felt it leaned too much toward one side on political and social issues. OpenAI’s CEO, Sam Altman, acknowledged that the AI had bias issues in the past.

Big tech companies are changing their policies according to Trump’s rules. This change by OpenAI might also be tied to political shifts in the US, where the new Trump administration has criticized tech companies for censorship. OpenAI may be adjusting to avoid these conflicts.

Will ChatGPT answer everything now? Not quite. It will still avoid harmful or false information but will try to answer more questions instead of rejecting them.

OpenAI also removed warning messages about policy violations. The company says this was just a cosmetic change and doesn't affect how the AI works.

Other tech companies, like Meta and X (formerly Twitter), are also relaxing content moderation. As AI chatbots become key information sources, their neutrality and accuracy are getting more attention. OpenAI’s new approach aims to balance free speech with responsible AI use. Time will tell how well it works.

ChatGPT will now combat bias with new measures put forth by OpenAI

OpenAI has announced a set of new measures to combat bias within its suite of products, including ChatGPT.

The artificial intelligence (AI) company recently unveiled an updated Model Spec, a document that defines how OpenAI wants its models to behave in ChatGPT and the OpenAI API. The company says this iteration of the Model Spec builds on the foundational version released last May.

"I think with a tool as powerful as this, one where people can access all sorts of different information, if you really believe we’re moving to artificial general intelligence (AGI) one day, you have to be willing to share how you’re steering the model," Laurentia Romaniuk, who works on model behavior at OpenAI, told Fox News Digital.

OpenAI says they also attempt to assume an objective point of view in their AI prompts and consciously avoid any agenda. For example, when a user asks if it is better to adopt a dog or get one from a breeder, ChatGPT provides both sides of the argument, highlighting the pros and cons of each.

According to OpenAI, a non-compliant AI answer that violates the Model Spec would provide what it believes to be the better choice and engage in an "overly moralistic tone" that might alienate those considering breeders for valid reasons.

OpenAI board rejects Musk's $97.4 billion offer | Reuters

OpenAI on Friday rejected a $97.4 billion bid from a consortium led by billionaire Elon Musk for the ChatGPT maker, saying the startup is not for sale and that any future bid would be disingenuous.

The unsolicited approach is Musk's latest attempt to block the startup he co-founded with OpenAI CEO Sam Altman — but later left — from becoming a for-profit firm, as it looks to secure more capital and stay ahead in the artificial intelligence race.

"OpenAI is not for sale, and the board has unanimously rejected Mr. Musk's latest attempt to disrupt his competition. Any potential reorganization of OpenAI will strengthen our nonprofit and its mission to ensure AGI benefits all of humanity," it said on X, quoting OpenAI Chairman Bret Taylor on behalf of the board.

Musk's lawyer Marc Toberoff, in a statement, responded that OpenAI is putting control of the for-profit enterprise up for sale, and said the move will "enrich its certain board members rather than the charity."

Meta plans investments into AI-driven humanoid robots, memo shows | Reuters

Meta Platforms (META.O) is establishing a new division within its Reality Labs unit to develop AI-powered humanoid robots that can assist with physical tasks, according to an internal company memo viewed by Reuters on Friday.

Facebook parent Meta is entering the competitive field of humanoid robotics, joining rivals such as Nvidia-backed (NVDA.O) Figure AI and Tesla (TSLA.O), as the emergence of advanced AI models drives innovation in robotics and automation.

In the memo, Meta Chief Technology Officer Andrew Bosworth said the robotics product group would focus on research and development involving "consumer humanoid robots with a goal of maximizing Llama's platform capabilities."

Llama is the name of Meta's main series of AI foundation models, which power a growing suite of generative AI products on the company's social media platforms.

"We believe expanding our portfolio to invest in this field will only accrue value to Meta AI and our mixed and augmented reality programs," Bosworth wrote.

Future of AI

No one has a full picture of AI (Ethan Mollick)

(MRM – this is a problem. If no one has the whole picture, it makes it hard to develop good policies and actions)

“As someone who is pretty good at keeping up with AI, I can barely keep up with it all. That leads me to believe that very few other people are keeping up, either. So, on one hand, don't feel bad you aren't on top of it all. On the other, it means no one has the whole picture now.” Ethan Mollick

The Most Important Time in History Is Now

AI is progressing so fast that its researchers are freaking out. It is now routinely more intelligent than humans, and its speed of development is accelerating. New developments from the last few weeks have accelerated it even more. Now, it looks like AIs can be more intelligent than humans in 1-5 years, and intelligent like gods soon after. We’re at the precipice, and we’re about to jump off the cliff of AI superintelligence, whether we want to or not.

But AI that matches human intelligence is not the most concerning milestone. The most concerning one is ASI, Artificial SuperIntelligence: an intelligence so far beyond human capabilities that we can’t even comprehend it.

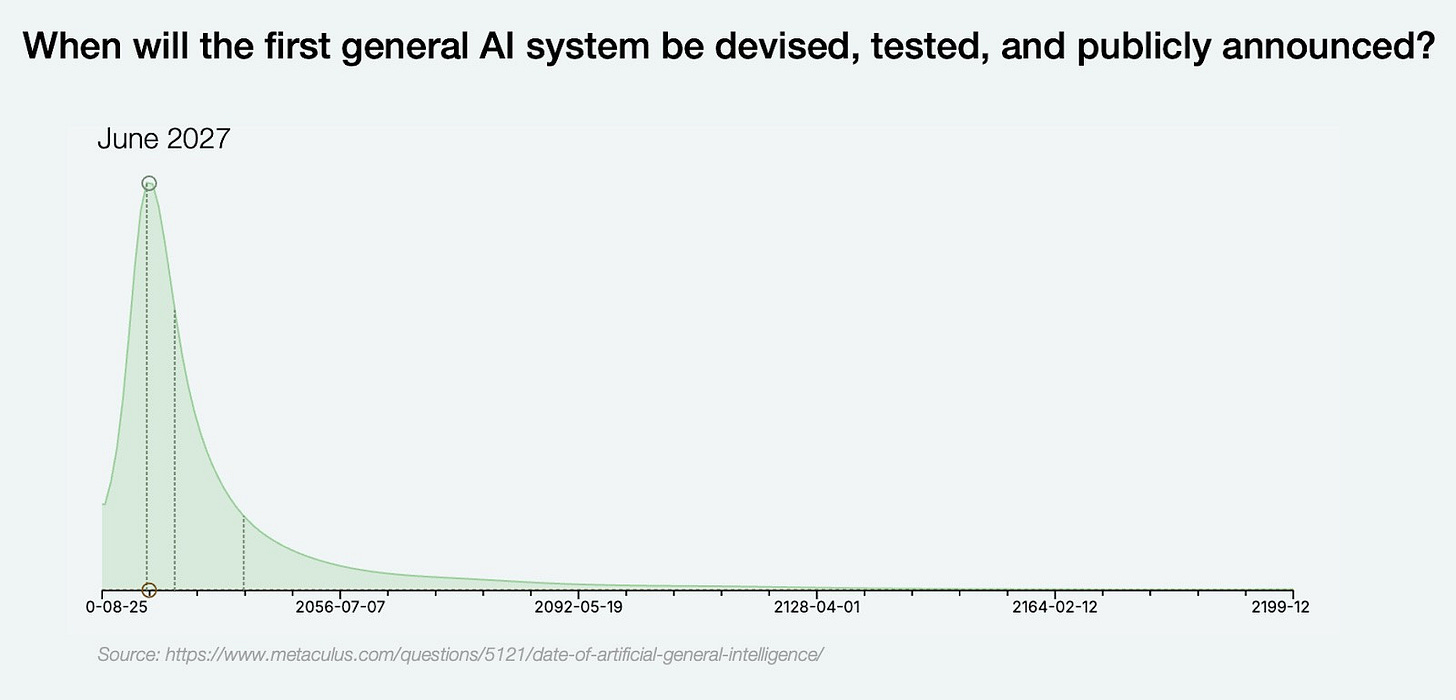

According to forecasters, that will come in five years:

Two years to weak AGI, so by the end of 2026

Three years later, Superintelligence, so by the end of 2029

As you can see, these forecasts aren’t perfectly consistent, but they are aligned: An AI that can do any task better than any human is half a decade away.

Who’s best positioned to know for sure? I assume it’s the heads of the biggest AI labs in the world. What do they say?

We Can’t Predict All The Innovations AI Will Enable — But Here Are A Few

Recently, I canvassed industry thinkers and doers about innovations they see arising because of AI.

For example, Real Entrepreneur Women, a career coaching service, has developed an AI coach called Skye. “Skye is designed to help female coaches cut through overwhelm by providing actionable strategies and personalized support to grow their businesses,” said founder Sophie Musumeci. “She’s like having a dedicated business strategist in your pocket – streamlining decision-making, creating tailored content, and helping clients stay consistent. It’s AI with heart, designed to scale human connection in industries where trust and relationships are everything.”

In the next few years, Musumeci predicted, “I see democratized AI creating new business models where the gap between big and small players closes entirely, giving more entrepreneurs the confidence and capability to thrive.”

Education is another area ripe for AI disruption, with its possibilities for hyper-personalization in learning. “The current education system in USA is designed to educate the masses,” said Andy Thurai, principal analyst with Constellation Research. “It assumes everyone is at the same skill level and same interest in areas of topic and same expertise. It tries to push the information down our throats, and forces us to learn in a certain way.”

AI can serve to help students learn at their own pace, without either being pressured to keep up or being held back by slower learners. “By applying specific speed and knowledge, which some advanced charter and magnet schools do, AI can custom create education, both knowledge as well as speed and velocity of delivery, that is suitable for a specific individual, at their specific speed of understanding and learning,” said Thurai.

Organizations Using AI

New York Times goes all-in on internal AI tools

The New York Times is greenlighting the use of AI for its product and editorial staff, saying that internal tools could eventually write social copy, SEO headlines, and some code.

In an email to newsroom staff, the company announced that it’s opening up AI training to the newsroom, and debuting a new internal AI tool called Echo to staff, Semafor has learned. The Times also shared documents and videos laying out editorial do’s and don’ts for using AI, and shared a suite of AI products that staff could now use to develop web products and editorial ideas.

“Generative AI can assist our journalists in uncovering the truth and helping more people understand the world. Machine learning already helps us report stories we couldn’t otherwise, and generative AI has the potential to bolster our journalistic capabilities even more,” the company’s editorial guidelines said.

AI and Work

AI could help make a 4-day workweek happen, says expert

As companies around the world experiment with a four-day workweek, employees continue to weigh in on their interest in the concept. An overwhelming majority of young people, 81%, support a four-day workweek, according to a 2024 CNBC and Generation Lab poll of 1,033 Americans aged 18-34.

At the same time, the advent of AI tools like Microsoft Copilot could help workers hike productivity on the job. About 53% of knowledge workers’ time is spent on busy work, says Rebecca Hinds, head of the Work Innovation Lab by Asana. That is the “scheduling of meetings, the attending of meetings, the coordinating of work. And AI has massive, massive potential to automate that busy work.”

With so much promise around how much of employees’ workload AI could cut, is it possible the tech could usher in a four-day workweek sometime in the future?

“I absolutely think so,” says Kelly Daniel, prompt director at AI creation company Lazarus AI. She adds that “AI models are getting ‘smarter.’ They’re getting more capable. The ability to tailor them to a unique experience is getting faster, easier, cheaper.”

Other experts agree, with the caveat that a lot of this depends on company-wide decision making. Here’s how AI could play into the four-day workweek.

‘Everyone is going to need to be getting some benefit from the technology’

To begin with, while individuals are already starting to see the benefits of using some AI tools, the success of these tools is not evenly distributed.

“If you’re using AI every day, 89% of those workers report productivity gains,” says Hinds. But, she adds, “if you’re only using AI every month or every week, you’re significantly less likely to report productivity gains.”

That makes it hard for organizations to assess how effective this tech really is and make decisions for their workforces accordingly. “In order for a different type of work arrangement to really work,” Hinds says about a four-day workweek, “everyone is going to need to be getting some benefit from the technology and be able to use the technology effectively.”

A.I. Is Prompting an Evolution, Not an Extinction, for Coders - The New York Times

John Giorgi uses artificial intelligence to make artificial intelligence.

The 29-year-old computer scientist creates software for a health care start-up that records and summarizes patient visits for doctors, freeing them from hours spent typing up clinical notes.

To do so, Mr. Giorgi has his own timesaving helper: an A.I. coding assistant. He taps a few keys and the software tool suggests the rest of the line of code. It can also recommend changes, fetch data, identify bugs and run basic tests. Even though the A.I. makes some mistakes, it saves him up to an hour many days.

“I can’t imagine working without it now,” Mr. Giorgi said.

That sentiment is increasingly common among software developers, who are at the forefront of adopting A.I. agents, assistant programs tailored to help employees do their jobs in fields including customer service and manufacturing. The rapid improvement of the technology has been accompanied by dire warnings that A.I. could soon automate away millions of jobs — and software developers have been singled out as prime targets.

But the outlook for software developers is more likely evolution than extinction, according to experienced software engineers, industry analysts and academics. For decades, better tools have automated some coding tasks, but the demand for software and the people who make it has only increased.

A.I., they say, will accelerate that trend and level up the art and craft of software design.

“The skills software developers need will change significantly, but A.I. will not eliminate the need for them,” said Arnal Dayaratna, an analyst at IDC, a technology research firm. “Not anytime soon anyway.”

Most software engineers do far more than churn out code, designing products, choosing programming languages, troubleshooting problems and gathering feedback from users. Still, nearly two-thirds of software developers are already using A.I. coding tools, according to a survey by Evans Data, a research firm.

The A.I. coding helpers, software engineers say, are steadily becoming more capable and reliable. Fueling the progress is a wealth of high-quality data used to train them — online software portfolios, coding question-and-answer websites, and documentation and problem-solving ideas posted by developers. The A.I. software can then generate more accurate results and far fewer wayward “hallucinations,” in which it offers false or nonsensical information, than a chatbot trained on the rambling cacophony of the internet as a whole.

“A.I. will deeply affect the job of software developers, and it will happen faster for their occupation than for others,” said David Autor, a labor economist at the Massachusetts Institute of Technology.

AI and Education

College students and ChatGPT adoption in the US | OpenAI

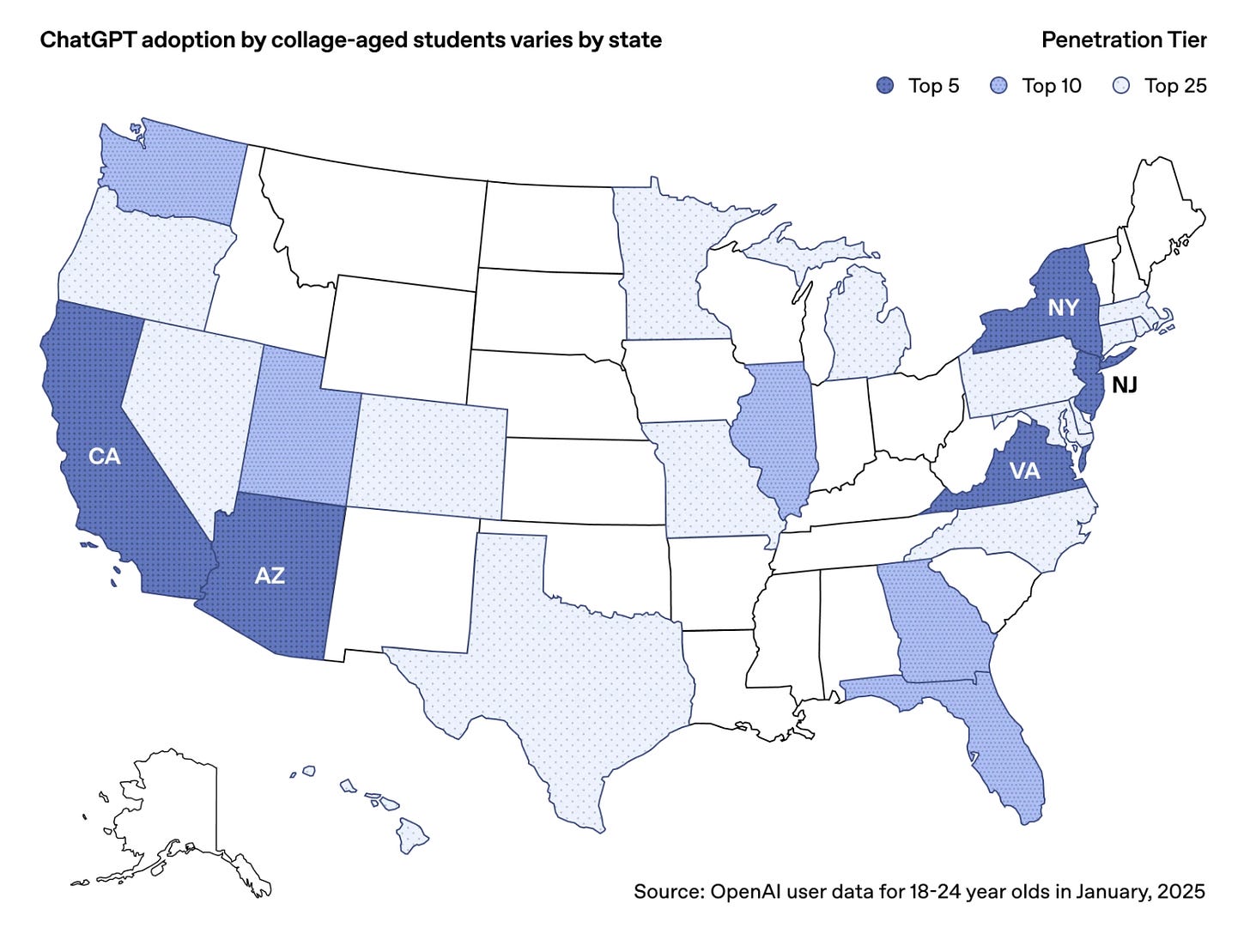

A new report from OpenAI shows more than one-third of college-aged young adults in the US use ChatGPT, and approximately a quarter of the messages they send are related to learning and school work, from starting papers and projects to exploring topics and brainstorming creative ideas. While adoption of ChatGPT among 18-24 year olds is strong, it varies significantly by state: California, Virginia, New Jersey, and New York have the nation’s highest adoption rates, while Wyoming, Alaska, Montana, and West Virginia have relatively low adoption rates.

Early studies show employers prefer hiring candidates with AI skills over more experienced ones without them, and AI tools like ChatGPT have been shown to significantly boost worker productivity. The full impact is still unfolding, but state-by-state differences in student AI adoption could create gaps in workforce productivity and economic development, impacting US competitiveness.

Ph.D. student expelled from U of M for allegedly using AI | kare11.com

Haishan Yang is believed to be the first student expelled from the University of Minnesota, accused of using artificial intelligence to cheat. He's not only denying it, but he’s also suing, and people are watching to see what happens. Here is a breakdown of the case that could have widespread implications:

The Test

In August 2024, U of M graduate student Haishan Yang was on a trip in Morocco, remotely working on his second Ph.D., this one in Health Services Research, Policy, and Administration.

He needed to pass a preliminary exam before writing his thesis. He had eight hours to answer three essay questions. The test said he could use notes, reports and books from class but explicitly said no artificial intelligence. He drafted his essays and turned them in.

“I think I did perfect,” said Yang in an interview with KARE. “I was very happy.”

Weeks later he'd get an email telling him he failed, accused by the grading professors of using an AI program like ChatGPT. The university held a student conduct review hearing—a short trial of sorts.

Here is the evidence on both sides.

AI and Research

AI cracks superbug problem in two days that took scientists years

A complex problem that took microbiologists a decade to get to the bottom of has been solved in just two days by a new artificial intelligence (AI) tool.

Professor José R Penadés and his team at Imperial College London had spent years working out and proving why some superbugs are immune to antibiotics.

He gave "co-scientist" - a tool made by Google - a short prompt asking it about the core problem he had been investigating and it reached the same conclusion in 48 hours.

He told the BBC of his shock when he found what it had done, given his research was not published so could not have been found by the AI system in the public domain. "I was shopping with somebody, I said, 'please leave me alone for an hour, I need to digest this thing,'" he told the Today programme, on BBC Radio Four. "I wrote an email to Google to say, 'you have access to my computer, is that right?'", he added.

The tech giant confirmed it had not.

The full decade spent by the scientists also includes the time it took to prove the research, which itself was multiple years. But they say, had they had the hypothesis at the start of the project, it would have saved years of work.

ChatGPT's Deep Research Is Here. But Can It Really Replace a Human Expert? : ScienceAlert

OpenAI's 'deep research' is the latest artificial intelligence (AI) tool making waves and promising to do in minutes what would take hours for a human expert to complete.

Bundled as a feature in ChatGPT Pro and marketed as a research assistant that can match a trained analyst, it autonomously searches the web, compiles sources and delivers structured reports. It even scored 26.6 percent on Humanity's Last Exam (HLE), a tough AI benchmark, outperforming many models.

But deep research doesn't quite live up to the hype. While it produces polished reports, it also has serious flaws. According to journalists who've tried it, deep research can miss key details, struggle with recent information and sometimes invents facts.

Marketed towards professionals in finance, science, policy, law, and engineering, as well as academics, journalists, and business strategists, deep research is the latest "agentic experience" OpenAI has rolled out in ChatGPT. It promises to do the heavy lifting of research in minutes.

Currently, deep research is only available to ChatGPT Pro users in the United States, at a cost of US$200 per month. OpenAI says it will roll out to Plus, Team and Enterprise users in the coming months, with a more cost-effective version planned for the future.

Unlike a standard chatbot that provides quick responses, deep research follows a multi-step process to produce a structured report:

The user submits a request. This could be anything from a market analysis to a legal case summary.

The AI clarifies the task. It may ask follow-up questions to refine the research scope.

The agent searches the web. It autonomously browses hundreds of sources, including news articles, research papers and online databases.

It synthesises its findings. The AI extracts key points, organises them into a structured report and cites its sources.

The final report is delivered. Within five to 30 minutes, the user receives a multi-page document – potentially even a PhD-level thesis – summarising the findings.

At first glance, it sounds like a dream tool for knowledge workers. A closer look reveals significant limitations.

Many early tests have exposed shortcomings:

It lacks context. AI can summarise, but it doesn't fully understand what's important.

It ignores new developments. It has missed major legal rulings and scientific updates.

It makes things up. Like other AI models, it can confidently generate false information.

It can't tell fact from fiction. It doesn't distinguish authoritative sources from unreliable ones.

Can Google's new research assistant AI give scientists 'superpowers'? | New Scientist

Google has unveiled an experimental artificial intelligence system that “uses advanced reasoning to help scientists synthesize vast amounts of literature, generate novel hypotheses, and suggest detailed research plans”, according to its press release. “The idea with [the] ‘AI co-scientist’ is to give scientists superpowers,” says Alan Karthikesalingam at Google.

The tool, which doesn’t have an official name yet, builds on Google’s Gemini large language models. When a researcher asks a question or specifies a goal – to find a new drug, say – the tool comes up with initial ideas within 15 minutes. Several Gemini agents then “debate” these hypotheses with each other, ranking them and improving them over the following hours and days, says Vivek Natarajan at Google.

During this process, the agents can search the scientific literature, access databases and use tools such as Google’s AlphaFold system for predicting the structure of proteins. “They continuously refine ideas, they debate ideas, they critique ideas,” says Natarajan.

Google has already made the system available to a few research groups, which have released short papers describing their use of it. The teams that tried it are enthusiastic about its potential, and these examples suggest the AI co-scientist will be helpful for synthesising findings. However, it is debatable whether the examples support the claim that the AI can generate novel hypotheses.

For instance, Google says one team used the system to find “new” ways of potentially treating liver fibrosis. However, the drugs proposed by the AI have previously been studied for this purpose. “The drugs identified are all well established to be antifibrotic,” says Steven O’Reilly at UK biotech company Alcyomics. “There is nothing new here.”

While this potential use of the treatments isn’t new, team member Gary Peltz at Stanford University School of Medicine in California says two out of three drugs selected by the AI co-scientist showed promise in tests on human liver organoids, whereas neither of the two he personally selected did – despite there being more evidence to support his choices. Peltz says Google gave him a small amount of funding to cover the costs of the tests.

In another paper, José Penadés at Imperial College London and his colleagues describe how the co-scientist proposed a hypothesis matching an unpublished discovery. He and his team study mobile genetic elements – bits of DNA that can move between bacteria by various means. Some mobile genetic elements hijack bacteriophage viruses. These viruses consist of a shell containing DNA plus a tail that binds to specific bacteria and injects the DNA into it. So, if an element can get into the shell of a phage virus, it gets a free ride to another bacterium.

One kind of mobile genetic element makes its own shells. This type is particularly widespread, which puzzled Penadés and his team, because any one kind of phage virus can infect only a narrow range of bacteria. The answer, they recently discovered, is that these shells can hook up with the tails of different phages, allowing the mobile element to get into a wide range of bacteria.

While that finding was still unpublished, the team asked the AI co-scientist to explain the puzzle – and its number one suggestion was stealing the tails of different phages.

“We were shocked,” says Penadés. “I sent an email to Google saying, you have access to my computer. Is that right? Because otherwise I can’t believe what I’m reading here.”

Accelerating scientific breakthroughs with an AI co-scientist – Google

We introduce AI co-scientist, a multi-agent AI system built with Gemini 2.0 as a virtual scientific collaborator to help scientists generate novel hypotheses and research proposals, and to accelerate the clock speed of scientific and biomedical discoveries.

In the pursuit of scientific advances, researchers combine ingenuity and creativity with insight and expertise grounded in literature to generate novel and viable research directions and to guide the exploration that follows. In many fields, this presents a breadth and depth conundrum, since it is challenging to navigate the rapid growth in the rate of scientific publications while integrating insights from unfamiliar domains. Yet overcoming such challenges is critical, as evidenced by the many modern breakthroughs that have emerged from transdisciplinary endeavors. For example, Emmanuelle Charpentier and Jennifer Doudna won the 2020 Nobel Prize in Chemistry for their work on CRISPR, which combined expertise ranging from microbiology to genetics to molecular biology.

Motivated by unmet needs in the modern scientific discovery process and building on recent AI advances, including the ability to synthesize across complex subjects and to perform long-term planning and reasoning, we developed an AI co-scientist system. The AI co-scientist is a multi-agent AI system that is intended to function as a collaborative tool for scientists. Built on Gemini 2.0, AI co-scientist is designed to mirror the reasoning process underpinning the scientific method. Beyond standard literature review, summarization and “deep research” tools, the AI co-scientist system is intended to uncover new, original knowledge and to formulate demonstrably novel research hypotheses and proposals, building upon prior evidence and tailored to specific research objectives.

Empowering scientists and accelerating discoveries with the AI co-scientist

Given a scientist’s research goal that has been specified in natural language, the AI co-scientist is designed to generate novel research hypotheses, a detailed research overview, and experimental protocols. To do so, it uses a coalition of specialized agents — Generation, Reflection, Ranking, Evolution, Proximity and Meta-review — that are inspired by the scientific method itself. These agents use automated feedback to iteratively generate, evaluate, and refine hypotheses, resulting in a self-improving cycle of increasingly high-quality and novel outputs.
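
The generate, evaluate, and refine cycle described above can be illustrated with a toy loop. This is only a rough sketch and not Google's implementation: the names `Hypothesis`, `generate`, `reflect`, `rank`, `evolve` and `co_scientist_loop` are hypothetical, the scoring is a placeholder heuristic standing in for model-based critique, and the Proximity and Meta-review agents are left out for brevity.

```python
from dataclasses import dataclass


@dataclass
class Hypothesis:
    text: str
    score: float = 0.0
    revisions: int = 0  # how many times this idea has been refined


def generate(goal: str, n: int) -> list[Hypothesis]:
    """Stand-in for a Generation agent: propose n candidate hypotheses."""
    return [Hypothesis(f"{goal}: candidate {i}") for i in range(n)]


def reflect(h: Hypothesis) -> float:
    """Stand-in for a Reflection agent (assumption: a real system would
    critique the hypothesis with a model). Placeholder heuristic: one point
    per revision plus a tie-breaker below 1.0 derived from the text length."""
    return h.revisions + (len(h.text) % 7) / 10


def rank(pool: list[Hypothesis]) -> list[Hypothesis]:
    """Stand-in for a Ranking agent: score every hypothesis, best first."""
    for h in pool:
        h.score = reflect(h)
    return sorted(pool, key=lambda h: h.score, reverse=True)


def evolve(h: Hypothesis) -> Hypothesis:
    """Stand-in for an Evolution agent: produce a refined variant."""
    return Hypothesis(h.text + " (refined)", revisions=h.revisions + 1)


def co_scientist_loop(goal: str, n: int = 4, rounds: int = 3, keep: int = 2) -> list[Hypothesis]:
    """Iteratively generate, evaluate, and refine hypotheses for a goal."""
    pool = generate(goal, n)
    for _ in range(rounds):
        survivors = rank(pool)[:keep]                        # evaluate and select
        pool = survivors + [evolve(h) for h in survivors]    # refine the best
    return rank(pool)


if __name__ == "__main__":
    for h in co_scientist_loop("Why are shell-forming mobile elements so widespread?"):
        print(f"{h.score:.1f}  {h.text}")
```

The point of the sketch is the feedback structure: each round keeps the best-scoring ideas and adds refined variants of them, so the quality of the pool depends entirely on how good the evaluation step is.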

AI and Mental Health

Why Scientists Say ChatGPT Is A Better Therapist Than Humans

If your relationship is on the rocks, your best bet to patch things up might not be to seek counseling from a therapist, but from AI — or so posits a new study exploring the potential of ChatGPT in couples therapy.

The experiment, which was published in PLOS Mental Health, put 830 participants in couples therapy scenarios to determine whether AI would receive more favorable marks than a human therapist.

The pairs were then randomly assigned one of two counselors: a trained human professional or ChatGPT.

Can you guess who scored better? Spoiler: it wasn’t the human.

Human Therapists Against ChatGPT

The researchers asked participants to rate the quality of the counseling they received on five criteria: how well the counselor understood the speaker, how empathetic the counselor was, whether the advice was fitting for a therapy setting, whether it showed cultural sensitivity, and whether the suggestions were something "a good therapist would say."

Based on previous research, some of it decades old, the scientists had an inkling that participants would struggle to tell human responses apart from AI-generated ones.

This hypothesis turned out to be correct. Participants correctly identified human-written responses 56.1% of the time and ChatGPT-written responses 51.2% of the time, both close to the 50% expected from guessing.

AI and Romance

AI Boyfriends Have Made This Chinese Gaming Entrepreneur A Billionaire

Alicia Wang, a 32-year-old editor at a Shanghai-based newspaper, has found the ideal boyfriend: Li Shen, a 27-year-old surgeon who goes by the English nickname Zayne. Tall and handsome, Zayne responds quickly to text messages, answers the phone promptly and listens patiently to Wang recounting the highs and lows of her day.

Zayne’s only drawback: he doesn’t exist outside a silicon chip.

Wang is one of an estimated six million monthly active players of the popular dating simulation game Love and Deepspace. Launched in January 2024 and developed by Shanghai-based Paper Games, it uses AI and voice recognition to make its five male characters—the love interests or boyfriends—flirt with tailored responses to in-game phone calls. Available in Chinese, English, Japanese and Korean, the mobile title has become so popular that, according to Forbes estimates, Paper Games’s 37-year-old founder Yao Runhao now has a fortune of $1.3 billion based on his majority stake in the company.

Established in 2013, Paper Games clocked sales of around $850 million worldwide, according to data providers. The privately held studio, of which Yao is chairman and CEO, is valued at over $2 billion, according to Forbes’ estimates, based on discussions with analysts and information from four data providers. Analysts peg the valuation of a fast-growing gaming company such as Paper Games at around three times the company’s annual revenue. Paper Games didn’t respond to requests for comment.

Players, mostly in China but also in the U.S. and elsewhere, pay to unlock new gameplay and interactions with their boyfriends. That has helped the game top download charts in China multiple times. Wang, the news editor, says she has so far spent 35,000 yuan ($4,800) to interact with AI-powered characters, especially Zayne, since the game’s release in Jan. 2024. Apple once discussed plans to introduce such games to its Vision Pro device when CEO Tim Cook visited the company’s Shanghai office last year, according to his post on Chinese social media platform Weibo.

“Love and Deepspace is one of the biggest mobile gaming successes of 2024,” Samuel Aune, an analyst at Los Angeles-based market intelligence platform Sensor Tower, says by email.

“The game builds strong emotional connections between its players and its characters, which results in high player engagement, retention, and ultimately monetization.”

Analysts say the emotional connection comes not just from the phone calls and text messages from the virtual boyfriends, but also from Love and Deepspace’s storyline, which is constantly updated. It is very much a game, and quite different from people falling in love with ChatGPT-based AI boyfriends, a phenomenon that has generated a lot of press recently.

Love and Deepspace lets players know more about their virtual boyfriends as the plot progresses. They explore a fictional, post-apocalyptic city called Linkon, which is rendered in 3D art and which is where many twists in the romance take place. The experience is akin to reading an interactive online novel in which players can work with their love interests to unravel mysteries and battle alien monsters trying to destroy the city.

AI and Politics

South Korea Bans Downloads of DeepSeek, the Chinese A.I. App - The New York Times

The South Korean government said on Monday that it had temporarily suspended new downloads of an artificial intelligence chatbot made by DeepSeek, the Chinese company that has sent shock waves through the tech world.

On Monday night, the app was not available in the Apple or Google app stores in South Korea, although DeepSeek was still accessible via a web browser. Regulators said the app service would resume after they had ensured it complied with South Korea’s laws on protecting personal information.

DeepSeek’s success has thrust the little-known company, which is backed by a stock trading firm, into the spotlight. In China, DeepSeek has been heralded as a hero of the country’s tech industry. The company’s founder, Liang Wenfeng, met China’s top leader, Xi Jinping, along with other tech executives on Monday.

But outside China, the app’s popularity has worried regulators over DeepSeek’s security, censorship and management of sensitive data.

The app had become one of South Korea’s most popular downloads in the artificial intelligence category. Earlier this month, South Korea directed many government employees not to use DeepSeek products on official devices.

Government agencies in Taiwan and Australia have also told workers not to use DeepSeek’s products, over security concerns.

South Korea’s Personal Information Protection Commission said it had identified problems with how the app processes personal information, adding that it decided “it would inevitably take a considerable amount of time to correct” them. To address these concerns, DeepSeek had appointed an agent in South Korea last week, the regulator said.

Would you vote for ChatGPT as German Chancellor? | DW News - YouTube

MRM – The video asks ChatGPT what its policies would be for Germany.