Shopify CEO forecasts future with AI, ChatGPT will remember everything you tell it, make your own action figure with AI, make ChatGPT your travel companion, Microsoft slowing down AI projects, and more!

AI Tips & Tricks

5 reasons I turn to ChatGPT every day - from faster research to replacing Siri | ZDNET

Here are the five things, summarized by AI:

· Searching the web

ChatGPT's web search offers a distraction-free, conversational alternative to Google. It gives clear, up-to-date answers and allows for follow-up questions, using natural language.

· Conducting deep research

The Deep Research feature (available on paid plans) compiles comprehensive, source-cited reports on complex topics, saving users hours of manual effort.

· Personalizing chats

Users can customize ChatGPT with personal details (name, profession, preferences) to make interactions more relevant, friendly, and goal-oriented.

· Helping write prompts

ChatGPT can generate well-crafted prompts for other AI tools, like OpenAI’s Sora, easing the creative burden and improving output quality.

· Replacing Siri

ChatGPT outperforms Siri on Apple devices by understanding complex queries and providing more accurate, helpful responses via Apple Intelligence integration.

Here’s how ChatGPT became my favorite travel companion

Here’s a summary of the four ways ChatGPT makes traveling more seamless, via AI:

Personalized Itinerary Planning: ChatGPT created a travel itinerary, including friends’ meal suggestions, and adjusted it on request—eliminating the need for manual planning.

Language Support: It helped the traveler practice and use Italian in real-world situations (e.g., buying a SIM card, getting medicine), offering realistic dialogues and scenarios.

Cultural and Practical Guidance: The model answered questions about historical sites, artwork, what to look for, and even outfit advice—enhancing the overall travel experience.

Everyday Travel Assistant: Beyond planning, ChatGPT served as a daily AI companion, though with some hiccups (like overly editing photos), showing its potential for tasks like booking rides or deliveries in the future.

Information to avoid telling ChatGPT:

Corporate and personal secrets: Client information and company prototypes are just some examples of what to avoid discussing with AI models.

Personal information: Anything a user feels the need to confess to a chatbot, such as crimes or deep personal admissions, should be avoided if the user is worried about privacy.

Identity information: Social security numbers, driver’s license numbers, email addresses and phone numbers should be avoided because this information could resurface or be subject to a data breach.

Financial records: Anything to do with bank accounts, credit or debit cards and investments should be avoided for privacy reasons as well.

Medical records: Information to do with a user’s medical prescriptions or charts can be avoided for the sake of privacy.

Passwords: Login information for any of a user's accounts should be avoided because, if a model that keeps a chat history suffered a data breach, those passwords could be stolen.

ChatGPT Action Figure Prompt Explained: Make Your Own AI Doll

The popular AI model ChatGPT now allows users to generate their own action figures with the help of a simple prompt. After dominating social media with the Ghibli-style AI art trend, the AI model has brought forth the concept of turning images into an AI doll based on the instructions listed by the users.

Here is every detail of the prompt that users need to know and the necessary steps they should follow to make their action figure.

(MRM – I did one for me. Not perfect but I didn’t want to keep regenerating it).

AI Firm News

ChatGPT will remember everything you tell it now - like a real personal assistant | ZDNET

On Thursday, OpenAI unveiled an update to the memory feature in ChatGPT: it can now reference all of your past conversations to better inform responses going forward. This expansion builds on the feature's original abilities, which allowed the chatbot to remember basic user information you share in conversations, such as your profession, pets, preferences, and more.

The new feature expands ChatGPT's ability to provide personalized answers without requiring you to reexplain the information it has previously gathered. Much like interacting with another human (recall permitting), any new conversation builds on previous knowledge, allowing for smoother, more contextualized interactions. ZDNET has yet to test the feature for performance.

Though it is a simple feature, the release is particularly noteworthy because it highlights OpenAI's efforts to position ChatGPT as more than an AI chatbot: rather, a personal assistant that can seamlessly integrate with users' lives. OpenAI CEO Sam Altman shared on X that the feature highlights what the company is excited about -- "AI systems that get to know you over your life and become extremely useful and personalized."

For example, if you were using ChatGPT to discuss a project, instead of having to explain the project with additional background information, you could just say something like, "Remember the conversation we had about the paper I was working on? What are some other ways I can start the paper?" In this instance, the chatbot would be able to remember the details of the paper you already shared and pull on personal information it saved, such as your profession and preferred writing style, to generate its answer.

Microsoft says it's 'slowing or pausing' some AI data center projects, including $1B plan for Ohio

Microsoft said it is “slowing or pausing” some of its data center construction, including a $1 billion project in Ohio, the latest sign that the demand for artificial intelligence technology that drove a massive infrastructure expansion might not need quite as many powerful computers as expected.

The tech giant confirmed this week that it is halting early-stage projects on rural land it owns in central Ohio's Licking County, outside of Columbus, and will reserve two of the three sites for farmland.

“In recent years, demand for our cloud and AI services grew more than we could have ever anticipated and to meet this opportunity, we began executing the largest and most ambitious infrastructure scaling project in our history,” said Noelle Walsh, the president of Microsoft's cloud computing operations, in a post on LinkedIn.

Walsh said “any significant new endeavor at this size and scale requires agility and refinement as we learn and grow with our customers. What this means is that we are slowing or pausing some early-stage projects.”

The AI industry doesn’t know if Trump just killed its GPU supply | The Verge

AI companies can’t figure out if the Trump tariffs are about to decimate them – and the fact that no one has a clear answer is sending them, and the tech industry overall, into a confusion spiral.

The markets are in disarray. Nvidia is down 7.59%, TSMC is down 7.22%. In San Francisco, sources tell us that this isn’t a big deal. But in DC, people are panicking. The core question is whether GPUs – the graphics processing units that are crucial to AI computing and other industries – are exempted from Donald Trump’s sweeping tariffs, and the answer is startlingly ambiguous.

Inside AI labs, researchers expect that their industry will be granted a tariff exemption. “I fully expect this to be a situation where Trump again gives companies he views as important/on his side/whatever a hall pass,” similar to what the President did with Apple during his first term, one source inside a major AI lab told The Verge.

In Washington, however, nobody seems sure what the current state of play is. The Trump administration spelled out an exception for the semiconductor chips at the heart of a GPU, but for now, complete electronic products that contain chips will apparently be subject to tariffs. And companies that need GPUs for machine learning, deep learning, real-time processing, and much more require not just the chip, but the entire machine built around it. “Most AI GPUs are, I believe, imported not as chips but as servers, largely from Taiwan,” Chris Miller, a professor at Tufts University and the author of Chip War: The Fight for the World’s Most Critical Technology, told The Verge in an email. “So these would presumably face the general Taiwan tariff rate” of 32%, currently scheduled to hit on April 9th.

Ordinarily, government agencies might be able to explain what’s happening. But when asked for clarity, a public affairs official at NIST, the agency at the Commerce Department overseeing the CHIPS Act – a $50 billion investment in building chip manufacturing plants on American soil – directed The Verge to the White House. The White House did not immediately return a request for comment. Neither did the U.S. Trade Representative, the agency responsible for creating and executing the President’s tariff strategy.

Anthropic steps up OpenAI competition with Max subscription for Claude

Anthropic on Wednesday introduced Claude’s Max plan, a new subscription tier for its viral chatbot and ChatGPT competitor.

The plan has two price points — $100 per month or $200 per month — offering different amounts of usage.

Subscribers will get “priority access to new models and capabilities,” including Claude’s voice mode when it launches.

Google is allegedly paying some AI staff to do nothing for a year rather than join rivals

Retaining top AI talent is tough amid cutthroat competition between Google, OpenAI, and other heavyweights.

Google’s AI division, DeepMind, has resorted to using “aggressive” noncompete agreements for some AI staff in the U.K. that bar them from working for competitors for up to a year, Business Insider reports.

Some are paid during this time, in what amounts to a lengthy stretch of PTO. But the practice can make researchers feel left out of the quick pace of AI progress, reported BI.

Last month, the VP of AI at Microsoft posted on X about how DeepMind staff are reaching out to him “in despair” over the challenge of escaping their noncompete clauses.

Future of AI

LEAKED internal memo about AI from CEO of Shopify - @GREG ISENBERG

10 quick takeaways i have after reading it by @GREG ISENBERG

1. a subtle but huge reframe: “hire an AI before you hire a human.”

2. AI is now a baseline expectation at shopify. hiring filters will probably favor ai-fluent candidates at shopify and other companies.

3. AI agents are now treated like teammates, not tools.

4. prompting is now a core skill. top performers will be top prompters.

5. AI usage is now measured. kinda wild. probably a business idea there to build the lattice for AI

6. AI-first prototyping is the new standard. shipping speed will probably 10x even at a $100B company like Shopify.

7. org charts blur, headcount planning now includes bots, not just bodies.

8. AI literacy is the new coding literacy. prompting, contextualizing, or evaluating ai output is becoming mandatory.

9. AI is now a core layer in the software stack. not a plugin. not an add-on. ai sits beside infra, backend, frontend, and design. the best teams will be the ones who treat it like infrastructure.

10. tobi’s memo screams one thing: more impact per person. shopify is early to this, but i bet this will hit every major company over the next 12-24 months.

Coordination and AI safety (from my email) – Tyler Cowen

Jack Skidmore writes to me, and I will not double indent:

"Hello Tyler,

As someone who follows AI developments with interest (though I'm not a technical expert), I had an insight about AI safety that might be worth considering. It struck me that we might be overlooking something fundamental about what makes humans special and what might make AI risky.

The Human Advantage: Cooperation > Intelligence

Humans dominate not because we're individually smartest, but because we cooperate at unprecedented scales

Our evolutionary advantage is social coordination, not just raw processing power

This suggests AI alignment should focus on cooperation capabilities, not just intelligence alignment

The Hidden Risk: AI-to-AI Coordination

The real danger may not be a single superintelligent AI, but multiple AI systems coordinating without human oversight

AIs cooperating with each other could potentially bypass human control mechanisms

This could represent a blind spot in current safety approaches that focus on individual systems

A Possible Solution: Social Technologies for AI

We could develop "social technologies" for AI - equivalent to the norms, values, institutions, and incentive systems that enable human society that promote and prioritize humans

Example: Design AI systems with deeply embedded preferences for human interaction over AI interaction; or with small, unpredictable variations in how they interpret instructions from other AIs but not from humans

This creates a natural dependency on human mediation for complex coordination, similar to how translation challenges keep diplomats relevant

Curious your thoughts as someone embedded in the AI world… does this spark any ideas/seem like a path that is underexplored?"

TC again: Of course it is tricky, because we might be relying on the coordination of some AIs to put down the other, miscreant AIs...

ChatGPT just passed the Turing test. But that doesn’t mean AI is now as smart as humans

There have been several headlines over the past week about an AI chatbot officially passing the Turing test.

These news reports are based on a recent preprint study by two researchers at the University of California San Diego in which four large language models (LLMs) were put through the Turing test. One model – OpenAI’s GPT-4.5 – was deemed indistinguishable from a human more than 70% of the time.

The Turing test has been popularised as the ultimate indicator of machine intelligence. However, there is disagreement about the validity of this test. In fact, it has a contentious history which calls into question how effective it really is at measuring machine intelligence.

So what does this mean for the significance of this new study?

While popularised as a means of testing machine intelligence, the Turing test is not unanimously accepted as an accurate means to do so. In fact, the test is frequently challenged.

There are four main objections to the Turing test:

Behaviour vs thinking. Some researchers argue the ability to “pass” the test is a matter of behaviour, not intelligence. Therefore it would not be contradictory to say a machine can pass the imitation game, but cannot think.

Brains are not machines. Turing asserts that the brain is a machine, claiming it can be explained in purely mechanical terms. Many academics dispute this claim and question the validity of the test on this basis.

Internal operations. As computers are not humans, their process for reaching a conclusion may not be comparable to a person’s, making the test inadequate because a direct comparison cannot work.

Scope of the test. Some researchers believe only testing one behaviour is not enough to determine intelligence.

More Like Us Than We Realize: ChatGPT Gets Caught Thinking Like a Human

Can we really trust AI to make better decisions than humans? According to a recent study, the answer is: not always. Researchers found that OpenAI’s ChatGPT, one of the most advanced and widely used AI models, sometimes makes the same decision-making errors as humans. In certain scenarios, it exhibits familiar cognitive biases, such as overconfidence and the hot-hand (or gambler’s) fallacy. Yet in other cases, it behaves in ways that differ significantly from human reasoning; for example, it tends not to fall for base-rate neglect or the sunk-cost fallacy.

The study, published in the INFORMS journal Manufacturing & Service Operations Management, suggests that ChatGPT doesn’t simply analyze data, it mirrors aspects of human thinking, including mental shortcuts and systematic errors. These patterns of bias appear relatively consistent across various business contexts, although they may shift as newer versions of the AI are developed.

AI: A Smart Assistant with Human-Like Flaws

The study put ChatGPT through 18 different bias tests. The results?

AI falls into human decision traps – ChatGPT showed biases like overconfidence, ambiguity aversion, and the conjunction fallacy (aka the “Linda problem”) in nearly half the tests.

AI is great at math, but struggles with judgment calls – It excels at logical and probability-based problems but stumbles when decisions require subjective reasoning.

Bias isn’t going away – Although the newer GPT-4 model is more analytically accurate than its predecessor, it sometimes displayed stronger biases in judgment-based tasks.

Why This Matters

From job hiring to loan approvals, AI is already shaping major decisions in business and government. But if AI mimics human biases, could it be reinforcing bad decisions instead of fixing them?

Sam Harris: Is AI aligned with our human interests? (Video)

0:16 The solution to “God-like AI”

1:20 The risk of self-improving AI

4:30 Two levels of risk

9:08 The AI arms race

Organizations and AI

Amazon CEO Says Generative AI Will Reinvent Every Customer Experience

Chief Executive Officer Andy Jassy said generative artificial intelligence is going to reinvent virtually every customer experience and enable new ones.

For the second year in a row, Jassy used his annual letter to shareholders to tout Amazon’s vision for how generative AI will be critical to the company’s next phase of growth. He also expressed Amazon’s goal of operating like “the world’s largest startup,” which takes risks with less red tape to solve real customer problems.

More than 1,000 generative AI applications are currently being built across the company that will change customer experiences around shopping, coding, personal assistants, streaming video and music, advertising, healthcare, reading, home devices and more, he said.

“If your customer experiences aren’t planning to leverage these intelligent models, their ability to query giant corpuses of data and quickly find your needle in the haystack, their ability to keep getting smarter with more feedback and data, and their future agentic capabilities, you will not be competitive,” Jassy said.

AI and Work

The Great Skill Shift: How AI Is Transforming 70% Of Jobs By 2030

The Four Phases Of Economic Transformation

According to Raman, we're moving through four distinct phases as AI transforms work:

"The first phase is disruption comes first, and we're seeing that in terms of AI adoption, people using AI tools at work," he explained.

Next comes job transformation—that 70% skill shift mentioned earlier.

The third phase involves the creation of entirely new roles. Raman reminded me that "10% of all jobs in the world today did not exist at the start of the century." Data scientists and social media managers simply weren't job titles as the knowledge economy was taking shape.

Finally, we arrive at a new economic paradigm. Raman calls this "the innovation economy"—where human creativity, imagination, and innovation capabilities become central to value creation.

The Three-Bucket Analysis For Your Job

While this transformation sounds overwhelming, there's a practical framework anyone can use to navigate it. Raman suggests taking the top dozen tasks in your current role and sorting them into three buckets:

The first bucket contains tasks that AI tools and agents will increasingly perform, if not fully automate, such as summarizing notes or generating content templates.

The second bucket includes tasks you'll do collaboratively with AI. This involves what Raman calls "AI literacy"—your ability to use AI tools effectively in your daily work.

The third bucket holds tasks that remain uniquely human. This is where our work will increasingly focus in the innovation economy.

"If you're heavy on that first bucket," Raman warns, "that means you've gotta upskill and transition. You're not gonna be safe just staying with a job that is highly vulnerable to AI disruption."

Soft Skills Are The New Hard Skills

As AI takes over more analytical and computational tasks, which skills will humans need to cultivate? Raman identifies five critical capabilities—what he calls "the five Cs":

"Curiosity, compassion, creativity, courage, and communication," he enumerated. "Get better at those every day."

These aren't just nice-to-have qualities anymore. They're becoming the most in-demand skills in the labor market. Looking at LinkedIn's data on skills currently rising in the UK, Raman notes that relationship building, strategic thinking, and communication rank higher than AI literacy or large language model utilization.

"Soft skills are the new hard skills," he asserts. "The technical skills are giving way to the soft skills, becoming the it skills, the in-demand skills, the durable skills."

What makes these skills particularly valuable is that they represent distinctly human capabilities that even advanced AI can't truly replicate. AI can mimic these qualities in output, but can't develop them independently. As Raman puts it, "We cannot give AI a data set on how humans develop courage for it to mimic or get better than us at."

These Jobs are at Risk from AI

The findings are based on a Pew survey of over 1,000 AI experts who conduct research or work in the field, and a separate survey of over 5,400 U.S. adults.

Experts are generally less concerned than average workers that AI will lead to fewer jobs overall but acknowledge that certain occupations are more likely to be impacted.

When considering where AI is likely to lead to fewer jobs, experts said some roles most at risk in the next 20 years include:

Cashiers (73% of experts agree)

Truck drivers (62%)

Journalists (60%)

Factory workers (60%)

Software engineers (50%)

Workers tend to agree with experts about at-risk jobs, except when it comes to truckers: just 33% of the general public believe AI will lead to fewer truck drivers in the future.

Experts surveyed in the report said truck driving jobs are primed for disruption by AI as driverless vehicle technology improves, according to Jeff Gottfried, Pew’s associate director of research.

AI in Education

Should College Graduates Be AI Literate? (Behind a Paywall)

More than two years after ChatGPT began to reshape the world, colleges are still working out how much they owe it to their students to teach them about AI, our Beth McMurtrie writes.

Some colleges believe it’s important to create an “AI-literate campus,” but they’re still in the minority. These institutions believe that as generative AI reshapes the world and the economy, students must understand how it works, how to use it effectively, how to evaluate its output, and how to account for its weaknesses and dangers.

Just 14 percent of college leaders said their campus had adopted AI literacy as an institutional or general-education learning outcome, according to a recent survey by the American Association of Colleges and Universities.

Just 22 percent of respondents said their college had an institutionwide approach to AI, according to a November survey by Educause, a nonprofit focused on technology in higher education.

Campuses leaning into AI literacy are taking a range of approaches:

The University of Delaware created an AI working group that designed training programs for people across campus.

Eighteen universities, including Delaware, joined a project by Ithaka S+R to study how AI will affect teaching, learning, and research on their campuses.

The University of Virginia paid 51 faculty members $5,000 each to improve their colleagues’ AI literacy through workshops, book groups, and consultations.

Arizona State University signed a deal with OpenAI to create an “AI Innovation Challenge,” resulting in more than 600 proposals for integrating the technology into teaching, research, or “the future of work.”

The University of Baltimore held an AI summit at which local community and business leaders met with students and faculty members to discuss AI literacy.

Many faculty members remain skeptical that AI literacy merits a place in curricula. Most remain concerned about unethical or excessive use of AI by students. Many instructors see generative AI as a threat to learning and continue to prohibit its use in class, according to a survey by Ithaka S+R.

Faculty unions have raised alarms at the possibility of some of their work being replaced by AI.

Professors have pushed back against some multimillion-dollar deals with AI companies.

There’s particular concern among humanities professors, who feel the technology is especially maladapted to their disciplines.

More professors are willing to use AI for routine tasks. One professor said she saved time by asking ChatGPT to help create a grading rubric.

However faculty feel, students are already adopting AI into their daily lives. Many use tools like ChatGPT to start papers or projects, summarize long texts, brainstorm projects, learn about new topics, and revise writing, an analysis by OpenAI, the maker of ChatGPT, found.

One factor forcing the issue on campuses: The industries students enter are increasingly steeped in AI, especially fields like health care and banking.

AI is reshaping organizational structures and career paths within industries, as it takes over both routine tasks and those requiring technical expertise.

Thirty percent of respondents to a recent survey said they had used generative AI in their jobs. The tools are more commonly used by younger workers, people with more education and higher incomes, and employees in industries like customer service, marketing, and information technology.

The bigger question: It’s still too soon to say whether a critical mass of professors will be willing to learn and teach about AI, even if they dislike it. Many see their role as fighting against wealthy tech companies trying to force untested technologies on the public. But at a time when colleges must increasingly make a case for their relevance and value, can they afford not to embrace a force that’s quickly becoming ubiquitous?

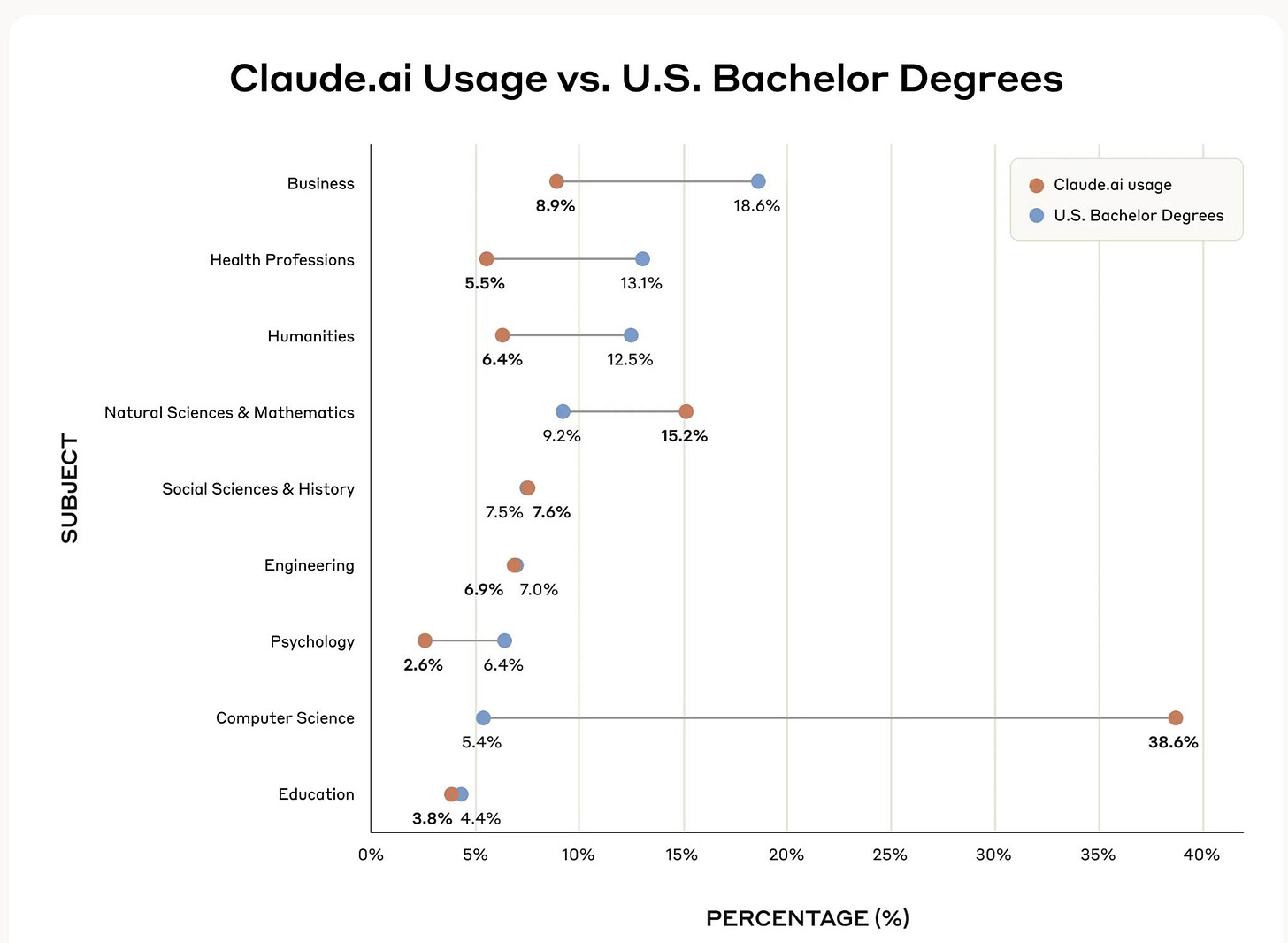

Anthropic Education Report: How University Students Use Claude

The key findings from our Education Report are:

STEM students are early adopters of AI tools like Claude, with Computer Science students particularly overrepresented (accounting for 36.8% of students’ conversations while comprising only 5.4% of U.S. degrees). In contrast, Business, Health, and Humanities students show lower adoption rates relative to their enrollment numbers.

We identified four patterns by which students interact with AI, each of which was present in our data at approximately equal rates (each 23-29% of conversations): Direct Problem Solving, Direct Output Creation, Collaborative Problem Solving, and Collaborative Output Creation.

Students primarily use AI systems for creating (using information to learn something new) and analyzing (taking apart the known and identifying relationships), such as creating coding projects or analyzing law concepts. This aligns with higher-order cognitive functions on Bloom’s Taxonomy. This raises questions about ensuring students don’t offload critical cognitive tasks to AI systems.

Is ChatGPT Plagiarism? How to Use AI Responsibly | Grammarly

ChatGPT generates text based on the data it was trained on, raising concerns about plagiarism.

AI-generated content is not inherently plagiarism, but improper use can lead to ethical and academic issues.

AI detection tools can help assess content originality, but they’re not always accurate.

Best practices for maintaining integrity while using AI include citing AI assistance, verifying sources, and using tools like Grammarly Authorship for transparency.

AI and Health

Dr Oz tells federal health workers AI could replace frontline doctors | Trump administration | The Guardian

Dr Mehmet Oz reportedly told federal staffers that artificial intelligence models may be better than frontline human physicians in his first all-staff meeting this week.

Oz told staffers that if a patient went to the doctor for a diabetes diagnosis it would cost roughly $100 an hour, compared with $2 an hour for an AI visit, according to unnamed sources who spoke to Wired magazine. He added that patients may prefer an AI avatar.

Oz also spent a portion of his first meeting with employees arguing they had a “patriotic duty” to remain healthy, with the goal of decreasing costs to the health insurance system. He made a similar argument at his confirmation hearing.

“I think it is our patriotic duty to be healthy,” Oz said in response to a question from the Republican senator Todd Young of Indiana. “First of all, it feels a heck of a lot better … But it also costs a lot of money to take care of sick people who are sick because of lifestyle choices.”

AI and Mental Health

The AI therapist can see you now : Shots - Health News : NPR

New research suggests that given the right kind of training, AI bots can deliver mental health therapy with as much efficacy as — or more than — human clinicians.

The recent study, published in the New England Journal of Medicine, shows results from the first randomized clinical trial for AI therapy.

Researchers from Dartmouth College built the bot as a way of taking a new approach to a longstanding problem: The U.S. continues to grapple with an acute shortage of mental health providers.

"I think one of the things that doesn't scale well is humans," says Nick Jacobson, a clinical psychologist who was part of this research team. For every 340 people in the U.S., there is just one mental health clinician, according to some estimates.

While many AI bots already on the market claim to offer mental health care, some have dubious results or have even led people to self-harm.

More than five years ago, Jacobson and his colleagues began training their AI bot in clinical best practices. The project, says Jacobson, involved much trial and error before it led to quality outcomes.

"The effects that we see strongly mirror what you would see in the best evidence-based trials of psychotherapy," says Jacobson. He says these results were comparable to "studies with folks given a gold standard dose of the best treatment we have available."

The researchers gathered a group of roughly 200 people who had diagnosable conditions like depression and anxiety, or were at risk of developing eating disorders. Half of them worked with AI therapy bots. Compared to those that did not receive treatment, those who did showed significant improvement.

One of the more surprising results, says Jacobson, was the quality of the bond people formed with their bots. "People were really developing this strong relationship with an ability to trust it," says Jacobson, "and feel like they can work together on, on their mental health symptoms."

Strength of bonds and trust with therapists is one of the overall predictors of efficacy in talk and cognitive behavioral therapy.

AI and Trust

34% of Americans Trust ChatGPT Over Human Experts, But Not for Legal or Medical Advice | Morningstar

Key Findings from the ChatGPT Trust Survey:

60% of U.S. adults have used ChatGPT to seek advice or information—signaling widespread awareness and early adoption.

Of those who used it, 70% said the advice was helpful, suggesting that users generally find value in the chatbot's responses.

The most trusted use cases for ChatGPT are:

Career advice

Educational support

Product recommendations

The least trusted use cases are:

Legal advice

Medical advice

34% of respondents say they trust ChatGPT more than a human expert in at least one area.

Despite its growing popularity, only 11.1% believe ChatGPT will improve their personal financial situation.

Younger adults (ages 18–29) and Android and iPhone users report significantly higher trust in ChatGPT compared to older generations and Desktop (Mac/Windows) users.

Older adults and high-income earners remain the most skeptical about ChatGPT's reliability and societal role.

When asked about the broader implications of AI, only 14.1% of respondents strongly agree that ChatGPT will benefit humanity.

AI and Deception

Fake job seekers are flooding U.S. companies that are hiring for remote positions, tech CEOs say

Companies are facing a new threat: Job seekers who aren’t who they say they are, using AI tools to fabricate photo IDs, generate employment histories and provide answers during interviews.

The rise of AI-generated profiles means that by 2028, 1 in 4 job candidates globally will be fake, according to research and advisory firm Gartner.

Once hired, an impostor can install malware to demand a ransom from a company, or steal its customer data, trade secrets or funds.

AI and Energy

Energy demands from AI datacentres to quadruple by 2030, says report | The Guardian

The global rush to AI technology will require almost as much energy by the end of this decade as Japan uses today, but only about half of the demand is likely to be met from renewable sources.

Processing data, mainly for AI, will consume more electricity in the US alone by 2030 than manufacturing steel, cement, chemicals and all other energy-intensive goods combined, according to a report from the International Energy Agency (IEA).

Global electricity demand from datacentres will more than double by 2030, according to the report. AI will be the main driver of that increase, with demand from dedicated AI datacentres alone forecast to more than quadruple.

One datacentre today consumes as much electricity as 100,000 households, but some of those currently under construction will require 20 times more.

But fears that the rapid adoption of AI will destroy hopes of tackling the climate crisis have been “overstated”, according to the report, which was published on Thursday. That is because harnessing AI to make energy use and other activities more efficient could result in savings that reduce greenhouse gas emissions overall.

AI and Politics

ChatGPT: Did Donald Trump use ChatGPT to create Liberation Day tariffs plan?

Internet analysts have noted similarities between the formula the Trump administration used to set the "Liberation Day" tariffs and the method ChatGPT provides when asked.

Critics highlighted that ChatGPT and other artificial intelligence chatbots would provide very similar methods for setting tariff levels if they were asked to design a policy.

Newsweek contacted the White House via email for more information on the impact of the tariff policy.

The Context

On Wednesday, the White House imposed a 10 percent baseline tariff on all imports, including those from U.S. allies and non-economically active regions, along with higher rates for countries with large trade surpluses against the U.S. The administration expects the new rates to remain in place until the U.S. narrows a $1.2 trillion trade imbalance recorded last year.

What To Know

After the tariffs were announced, the White House released the formula reportedly used to set the tariffs: dividing the U.S. trade deficit with a given country by the value of U.S. imports from that country, then applying that percentage as a tariff. In some cases, a flat 10 percent rate was used if it was higher.
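For readers who want to see the arithmetic, here is a minimal Python sketch of the formula exactly as the paragraph above describes it: the trade deficit divided by imports, with a flat 10 percent rate used whenever that is higher. The function name, the country labels, and the dollar figures are all hypothetical and chosen only for illustration; this is not the White House's actual calculation or data.

```python
def tariff_rate(trade_deficit: float, imports: float, floor: float = 0.10) -> float:
    """Tariff rate as described in the text: deficit / imports, or the flat floor if higher.

    `tariff_rate` and its parameters are illustrative names, not an official API.
    """
    if imports <= 0:
        raise ValueError("imports must be positive")
    ratio = trade_deficit / imports
    # Use the flat rate (10 percent by default) whenever it exceeds the ratio.
    return max(ratio, floor)


# Hypothetical figures in billions of dollars, for illustration only.
examples = {
    "Country A": (50.0, 200.0),   # deficit is 25% of imports
    "Country B": (5.0, 100.0),    # ratio is 5%, below the 10% floor
    "Country C": (-20.0, 80.0),   # U.S. runs a surplus, so the floor applies
}

for name, (deficit, imports) in examples.items():
    print(f"{name}: {tariff_rate(deficit, imports):.0%}")
```

With these made-up numbers, Country A gets a 25 percent rate, while Countries B and C both land on the 10 percent floor.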

However, many pointed out that ChatGPT proposes the same approach if questioned on creating a global tariff policy. The White House has not commented on how the formula was created, so the similarities may simply be coincidental.

"I think they asked ChatGPT to calculate the tariffs from other countries, which is why the tariffs make absolutely no sense," political commentator Steve Bonnell wrote on X, formerly Twitter.

"They're simply dividing the trade deficit we have with a country with our imports from that country, or using 10 percent, whichever is greater."

In screenshots, Bonnell showed that he asked ChatGPT the question: "What would be an easy way to calculate the tariffs that should be imposed on other countries so that the US is on even playing fields when it comes to trade deficit?"

The AI replied: "To calculate tariffs that help level the playing field in terms of trade deficits (with a minimum tariff of 10 percent), you can use a proportional tariff formula based on the trade deficit with each country. The idea is to impose higher tariffs on countries with which the U.S. has larger trade deficits, thus incentivizing more balanced trade." This was followed by an equation that resembled the one shared by the White House.

Why ChatGPT is a uniquely terrible tool for government ministers

The news that Peter Kyle, secretary of state for science and technology, had been using ChatGPT for policy advice prompted some difficult questions.

Kyle apparently used the AI tool to draft speeches and even asked it for suggestions about which podcasts he should appear on. But he also sought advice on his policy work, apparently including questions on why businesses in the UK are not adopting AI more readily. He asked the tool to define what “digital inclusion” means.

A spokesperson for Kyle said his use of the tool “does not substitute comprehensive advice he routinely receives from officials”, but we have to wonder whether any use at all is suitable. Does ChatGPT give good enough advice to have any role in decisions that could affect the lives of millions of people?

Drawing on our research on AI and public policy, we find that ChatGPT is uniquely flawed as a tool for government ministers in several ways, including that it is backward-looking when governments really should be looking to the future.

BONUS MEME

(Had to make a Severance goat meme)