I am back from Kenya now… a great trip despite our 4x4 being attacked by a rhino named Kenyattah. I highly recommend it (going to Kenya… not being attacked by a rhino).

Anyway…on to AI.

The AI gender gap, Pope Leo's focus on AI, why it would be hard for AI to destroy humanity, Zuckerberg's plan to give us AI friends, 9 ChatGPT tips to get ahead at work, and more, so…

AI Tips & Tricks

I’m a ChatGPT power user — 9 tips to help you get ahead at work | Tom's Guide

Here are the nine tips via an AI summary.

Set Smart Reminders with ChatGPT Tasks

Utilize ChatGPT's Tasks feature to schedule recurring reminders, such as "Send expense report every Friday" or "Don't forget to ask for next Thursday off," helping you stay organized and on top of your responsibilities.

Use ChatGPT for Strategic Brainstorming

Enhance your strategic thinking by prompting ChatGPT with scenarios like, "Company X just launched [product]. What are 3 possible implications for our business, and how might we respond?" to gain insights and plan responses.

Refine Your Writing Instead of Copy-Pasting

Rather than directly copying AI-generated text, paste your draft into ChatGPT and ask for improvements in clarity, tone, or structure to maintain your authentic voice while enhancing quality.

Organize Your Prompts with Custom Labels

Keep your ChatGPT interactions organized by labeling prompts with specific titles, making it easier to reference and manage your AI-assisted tasks.

Paraphrase AI Outputs to Match Your Voice

After receiving a response from ChatGPT, paraphrase the content to align with your personal communication style, ensuring consistency and authenticity in your work.

Treat ChatGPT as a Junior Assistant

Delegate tasks to ChatGPT as you would to a junior team member, providing clear instructions and context to receive helpful drafts and suggestions.

Save Effective Prompts for Reuse

Maintain a collection of prompts that yield good results, allowing you to reuse and adapt them for similar tasks in the future, enhancing efficiency.

Avoid Overusing AI-Generated Content

Be cautious not to rely excessively on ChatGPT, especially for tasks requiring a personal touch, to prevent your work from appearing generic or impersonal.

Use ChatGPT to Enhance, Not Replace, Your Work

Leverage ChatGPT to support and improve your work processes, using it as a tool to augment your skills rather than as a substitute for your efforts.
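For readers who like to script things, the "save effective prompts for reuse" tip can be sketched in a few lines. This is a minimal illustration of my own, not something from the Tom's Guide article: it assumes you keep favorite prompts as Python's standard-library string.Template objects, and the template names ("brainstorm", "refine") and placeholder fields (company, product, draft) are hypothetical. The brainstorming wording is the example prompt from the tips.

```python
from string import Template

# A small, hypothetical library of reusable prompts.
# Template names and $placeholders are illustrative assumptions, not a ChatGPT feature.
PROMPTS = {
    "brainstorm": Template(
        "$company just launched $product. What are 3 possible implications "
        "for our business, and how might we respond?"
    ),
    "refine": Template(
        "Improve the clarity, tone, and structure of this draft while "
        "keeping my voice:\n\n$draft"
    ),
}


def build_prompt(name: str, **fields: str) -> str:
    """Fill in a saved prompt; raises KeyError for an unknown name or a missing field."""
    return PROMPTS[name].substitute(**fields)


if __name__ == "__main__":
    print(build_prompt("brainstorm", company="Company X", product="a budgeting app"))
```

You would then paste the printed text into ChatGPT. Using substitute() rather than safe_substitute() is a deliberate choice here: a forgotten field fails loudly instead of leaving a stray "$product" in the prompt.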

AI Firm News

Zuckerberg Says in Response to Loneliness Epidemic, He Will Create Most of Your Friends Using Artificial Intelligence

Meta CEO Mark Zuckerberg is more concerned about his billions of customers making friends with AI chatbots than creating bonds with real human beings.

In an interview with podcaster Dwarkesh Patel this week, Zuckerberg asserted that more people should be connecting with chatbots on a social level — because, in a striking line of argumentation, they don't have enough real-life friends.

When asked if AI chatbots can help fight the loneliness epidemic, the billionaire painted a dystopian vision of a future in which we spend more time talking to AIs than flesh-and-blood humans.

"There's the stat that I always think is crazy, the average American, I think, has fewer than three friends," Zuckerberg told Patel. "And the average person has demand for meaningfully more, I think it's like 15 friends or something, right?"

"The average person wants more connectivity, connection, than they have," he concluded, hinting at the possibility that the discrepancy could be filled with virtual friends.

Zuckerberg argued that we simply don't have the "vocabulary" yet to ascribe meaning to a future in which we seek connection from an AI chatbot.

However, he also admitted there was a "stigma" surrounding the practice right now and that the tech was "still very early."

He has a point. The current state of AI chatbots leaves a lot to be desired. Zuckerberg's interview with Patel was published just days after the Wall Street Journal reported that Meta staffers had raised concerns over underage users being exposed to sexually explicit discussions by the company's AI chatbots.

Sam Altman, the architect of ChatGPT, is rolling out an orb that verifies you're human - CBS News

Sam Altman, the CEO of OpenAI and the architect of ChatGPT, is behind a venture that wants to solve a modern-day problem: proving you're human amidst a proliferation of bots and artificial intelligence.

The startup, called World (formerly Worldcoin), is launching in the U.S. with the distribution of 20,000 tech devices called Orbs that scan a person's iris to verify they are human. After confirming a person's humanity, World then creates a digital ID for users that proves their personhood, distinguishing them from a bot or AI program that can mimic human behavior.

The device, which looks like something out of "Black Mirror," may seem ironic coming from Altman, given that its purpose is to help people stand out from the very same types of technology he helped develop. But World's backers say the Orb and its "proof of personhood" is addressing a problem that can stymie everything from finance to online dating: bots impersonating people.

The Orb "is a privacy-first way to prove you are a human in the world of AI and bots," said Jake Brukhman, co-founder of CoinFund, one of the project's earliest backers. "That is getting relevant as AI is becoming much more prevalent in the world."

OpenAI’s flagship GPT-4.1 model is now in ChatGPT | The Verge

The latest versions of OpenAI’s multimodal GPT AI models are now rolling out to ChatGPT. OpenAI announced on Wednesday that GPT-4.1 will be available across all paid ChatGPT account tiers and can now be accessed by Plus, Pro, or Team users under the model picker dropdown menu. Free users are excluded from the rollout, but OpenAI says that Enterprise and Edu users will get access “in the coming weeks.”

GPT-4o mini, the more affordable and efficiency-focused model that OpenAI launched last year, is also being replaced with GPT-4.1 mini as the default option in ChatGPT for all users, including free accounts. Users on paid accounts will also see GPT-4o mini replaced by GPT-4.1 mini in the model picker options.

Both GPT-4.1 and GPT‑4.1 mini are optimized for coding tasks and instruction following, and outperform GPT‑4o and GPT‑4o mini “across the board,” according to OpenAI. Both of the new models support a one-million-token context window (the amount of text, images, or videos in a prompt that an AI model can process), which greatly surpasses GPT-4o’s 128,000-token limit. OpenAI says that speed improvements also make GPT-4.1 more appealing for everyday coding tasks than the powerful o3 and o4-mini reasoning models it introduced in April.

OpenAI for Countries aims to build global AI infrastructure and beat China

OpenAI announced a push to help countries build AI infrastructure and promote AI rooted in democratic, rather than authoritarian, values.

Why it matters: Global expansion will be one key to ensuring that OpenAI's massive investments pay off — and the company is arguing that it will help the U.S. counter China's influence, too.

How it works: OpenAI chief global affairs officer Chris Lehane said the new "OpenAI for Countries" effort, announced Wednesday, aims to partner with countries or regions to build and operate data centers that would serve up localized versions of ChatGPT for their citizens, with particular focus on health care and education.

Countries that take part would help fund infrastructure as part of a broadening of the Project Stargate effort that OpenAI announced with Oracle and SoftBank earlier this year.

OpenAI will be working closely with the U.S. government, which has export control powers, to determine where OpenAI technology can be deployed.

Catch up quick: OpenAI is in the midst of restructuring its business from a "capped-profit" partnership to a public benefit corporation.

That process is not done. Bloomberg reported late Monday that Microsoft and OpenAI are still negotiating and regulators have yet to give their blessing.

The big picture: OpenAI's announcement comes a day before CEO Sam Altman is set to testify before the Senate Commerce Committee at a hearing on "Winning the AI race."

What they're saying: "We have a window here to help create pathways so that a large portion of the world is building on democratic AI at a moment when the world's going to have to choose between democratic AI and autocratic [AI]," Lehane told Axios.

The project offers countries local sovereignty over data and the ability to partner with OpenAI on a fund to help seed a "national AI ecosystem" by backing local startups.

Between the lines: OpenAI for Countries builds on the notion of "democratic AI" that Altman laid out in a 2024 Washington Post op-ed.

It also aims to capitalize on strong demand from other countries to have their own version of Stargate, a sentiment OpenAI heard frequently during this year's AI Action Summit in Paris.

ChatGPT is getting smarter, but its hallucinations are spiraling | TechRadar

OpenAI’s latest AI models, o3 and o4-mini, hallucinate significantly more often than their predecessors

The increased complexity of the models may be leading to more confident inaccuracies

The high error rates raise concerns about AI reliability in real-world applications

Anthropic CEO Admits We Have No Idea How AI Works

The CEO of one of the world's leading artificial intelligence labs just said the quiet part out loud: that nobody really knows how AI works.

In an essay published to his personal website, Anthropic CEO Dario Amodei announced plans to create a robust "MRI on AI" within the next decade. The goal is not only to figure out what makes the technology tick, but also to head off any unforeseen dangers associated with what he says remains its currently enigmatic nature.

"When a generative AI system does something, like summarize a financial document, we have no idea, at a specific or precise level, why it makes the choices it does — why it chooses certain words over others, or why it occasionally makes a mistake despite usually being accurate," the Anthropic CEO admitted.

On its face, it's surprising to folks outside the AI world to learn that the people building these ever-advancing technologies "do not understand how our own AI creations work," he continued, and anyone alarmed by that ignorance is "right to be concerned."

But on another level, maybe it isn't; all the image and text generators that have exploded in popularity over the last few years work under the same principle of feeding in a gigantic pile of data and letting statistical systems mine it for patterns that can be reproduced. The whole thing is driven by ingested human creative works rather than by first principles of machine intelligence.

"This lack of understanding," Amodei wrote, "is essentially unprecedented in the history of technology."

In Amodei's telling, that ignorance about how AI works and what unforeseen risks it may pose is a driving factor behind Anthropic.

Future of AI

An Interview With the Herald of the Apocalypse

Ross Douthat: How fast is the artificial intelligence revolution really happening? What would machine superintelligence really mean for ordinary human beings? When will Skynet be fully operational?

Are human beings destined to merge with some kind of machine god — or be destroyed by our own creation? What do A.I. researchers really expect, desire and fear?

My guest today is an A.I. researcher who’s written a dramatic forecast suggesting that we may get answers to all of those questions a lot sooner than you might think. His forecast suggests that by 2027, which is just around the corner, some kind of machine god may be with us, ushering in a weird, post-scarcity utopia — or threatening to kill us all.

Douthat: Daniel, I read your report pretty quickly — not at A.I. speed or superintelligence speed — when it first came out. And I had about two hours of thinking a lot of pretty dark thoughts about the future. Then, fortunately, I have a job that requires me to care about tariffs and who the new pope is, and I have a lot of kids who demand things of me, so I was able to compartmentalize and set it aside.

I would say you’re thinking about this all the time. How does your psyche feel day to day if you have a reasonable expectation that the world is about to change completely in ways that dramatically disfavor the entire human species?

Kokotajlo: Well, it’s very scary and sad. It does still give me nightmares sometimes. I’ve been involved with A.I. and thinking about this thing for a decade or so, but 2020 with GPT-3 was the moment when I was like: Oh, wow, it seems like it’s probably going to happen in my lifetime, maybe in this decade or so. That was a bit of a blow to me psychologically. But I don’t know — you can get used to anything, given enough time, and like you, the sun is shining and I have my wife and my kids and my friends, and keep plugging along and doing what seems best.

On the bright side, I might be wrong about all this stuff.

Douthat: For a lot of people, that’s a story of swift human obsolescence right across many, many domains. When people hear a phrase like “human obsolescence,” they might associate it with: I’ve lost my job and now I’m poor.

The assumption is that you’ve lost your job, but society is just getting richer and richer. I just want to zero in on how that works. What is the mechanism whereby that makes society richer?

Kokotajlo: The direct answer to your question is that when a job is automated and that person loses their job, the reason they lost their job is that now it can be done better, faster and cheaper by the A.I.s. That means that there’s lots of cost savings, and possibly also productivity gains.

Viewed in isolation, that’s a loss for the worker but a gain for their employer. But if you multiply this across the whole economy, it means that all of the businesses are becoming more productive and less expensive. They’re able to lower their prices for the services and goods they’re producing. So the overall economy will boom: G.D.P. goes to the moon, we’ll see all sorts of wonderful new technologies, the pace of innovation increases dramatically, the costs of goods go down, et cetera.

Douthat: Just to make it concrete: The price of soup-to-nuts designing and building a new electric car goes way down, you need fewer workers to do it, the A.I. comes up with fancy new ways to build the car, and so on. You can generalize that to a lot of different things, like solving the housing crisis in short order because it becomes much cheaper and easier to build homes.

But in the traditional economic story, when you have productivity gains that cost some people jobs — but free up resources that are then used to hire new people to do different things — those people are paid more money, and they use that money to buy the cheaper goods. In this scenario, it doesn’t seem like you are creating that many new jobs.

Kokotajlo: Indeed, and that’s a really important point to discuss. Historically, when you automate something, the people move on to something that hasn’t been automated yet. Overall, people still get their jobs in the long run. They just change what jobs they have.

When you have A.G.I. — or artificial general intelligence — and when you have superintelligence — even better A.G.I. — that is different. Whatever new jobs you’re imagining that people could flee to after their current jobs are automated, A.G.I. could do, too. That is an important difference between how automation has worked in the past and how I expect it to work in the future.

Douthat: So this is a radical change in the economic landscape. The stock market is booming. Government tax revenue is booming. The government has more money than it knows what to do with, and lots and lots of people are steadily losing their jobs. You get immediate debates about universal basic income, which could be quite large because the companies are making so much money.

What do you think people are doing day to day in that world?

Kokotajlo: I imagine that they are protesting because they’re upset that they’ve lost their jobs, and then the companies and the governments will buy them off with handouts.

Could AI Really Kill Off Humans? | Scientific American

There’s a popular sci-fi cliché that one day artificial intelligence goes rogue and kills every human, wiping out the species. Could this truly happen? In real-world surveys AI researchers say that they see human extinction as a plausible outcome of AI development. In 2023 hundreds of these researchers signed a statement that read: “Mitigating the risk of extinction from AI should be a global priority alongside other societal-scale risks such as pandemics and nuclear war.”

Okay, guys.

Pandemics and nuclear war are real, tangible concerns, more so than AI doom, at least to me, a scientist at the RAND Corporation. We do all kinds of research on national security issues and might be best known for our role in developing strategies for preventing nuclear catastrophe during the cold war. RAND takes big threats to humanity seriously, so I, skeptical about AI’s human extinction potential, proposed a project to research whether it could.

My team’s hypothesis was this: No scenario can be described where AI is conclusively an extinction threat to humanity. In other words, our starting hypothesis was that humans were too adaptable, too plentiful and too dispersed across the planet for AI to wipe us out using any tools hypothetically at its disposal. If we could prove this hypothesis wrong, it would mean that AI might be a real extinction threat to humanity.

Many people are assessing catastrophic risks from AI. In the most extreme cases, some people assert that AI will become a superintelligence, with a near-certain chance that it will use novel, advanced tech like nanotechnology to take over and wipe us out. Forecasters have predicted the likelihood of existential risk from an AI catastrophe, often arriving at between a 0 and 10 percent chance that AI causes humanity’s extinction by 2100. We were skeptical of the value of predictions like these for policymaking and risk reduction.

Our team consisted of a scientist, an engineer and a mathematician. We swallowed any of our AI skepticism and—in very RAND-like fashion—set about detailing how AI could actually cause human extinction. A simple global catastrophe or societal collapse was not enough for us. We were trying to take the risk of extinction seriously, which meant that we were interested only in a complete wipeout of our species. We also weren’t interested in whether AI would try to kill us—only in whether it could succeed.

It was a morbid task. We went about it by analyzing exactly how AI might exploit three major threats commonly perceived to be existential risks: nuclear war, biological pathogens and climate change.

It turns out it is very hard—though not completely out of the realm of possibility—for AI to kill us all.

In the course of our analysis, we also identified four things that our hypothetical super-evil AI has to have: First, it would need to somehow set an objective to cause extinction. AI also would have to gain control over the key physical systems that create the threat, like nuclear weapon launch control or chemical manufacturing infrastructure. It would need the ability to persuade humans to help and hide its actions long enough to succeed. And it has to be able to survive without humans around to support it, because even once society started to collapse, follow-up actions would be required to cause full extinction.

If AI did not possess all four of these capabilities, our team concluded its extinction project would fail. That said, it is plausible to create AI that has all of these capabilities, even if unintentionally.

Moreover, humans might create AI with all four of these capabilities intentionally. Developers are already trying to create agentic, or more autonomous, AI, and they’ve already observed AI that has the capacity for scheming and deception.

But if extinction is a plausible outcome of AI development, doesn’t that mean we should follow the precautionary principle? That is to say: Shut it all down, because it is better to be safe than sorry? We say the answer is no. The shut-it-down approach is only appropriate if we don’t care much about the benefits of AI. For better or worse, we care a great deal about the benefits AI will likely bring, and it is inappropriate to forgo them to avoid a potential but highly uncertain catastrophe, even one as consequential as human extinction.

Better at everything: how AI could make human beings irrelevant | Artificial intelligence (AI) | The Guardian

Right now, most big AI labs have a team figuring out ways that rogue AIs might escape supervision, or secretly collude with each other against humans. But there’s a more mundane way we could lose control of civilisation: we might simply become obsolete. This wouldn’t require any hidden plots – if AI and robotics keep improving, it’s what happens by default.

How so? Well, AI developers are firmly on track to build better replacements for humans in almost every role we play: not just economically as workers and decision-makers, but culturally as artists and creators, and even socially as friends and romantic companions. What place will humans have when AI can do everything we do, only better?

Talk of AI’s current abilities can occasionally sound like marketing hype, and some of it definitely is. But in the longer term, the scope for improvement is huge. You may believe that there will always be something uniquely human that AI just can’t replicate. I’ve spent 20 years in AI research, watching its progression from basic reasoning to solving complex scientific problems. Abilities that seemed uniquely human, such as handling ambiguity or using abstract analogies, are now easily handled. There may be delays along the way, but we should assume there will be continued progress in AI across the board.

These artificial minds won’t just aid humans – they’ll quietly take over in countless small ways, initially because they’re cheaper, eventually because they’re genuinely better than even our best performers. Once they’re reliable enough, they’ll become the only responsible choice for almost all important tasks, ranging from legal rulings and financial planning to healthcare decisions.

It’s easiest to imagine what this future may look like in the context of employment: you’ll hear of friends losing jobs and having trouble finding new work. Companies will freeze hiring in anticipation of next year’s better AI workers. More and more of your own job will consist of accepting suggestions from reliable, charming and eager-to-please AI assistants. You’ll be free to think about the bigger picture, but will find yourself conversing about it with your ultra-knowledgeable AI assistant. This assistant will fill in the blanks in your plans, provide relevant figures and precedent, and suggest improvements. Eventually, you’ll simply ask it: “What do you think I should do next?” Whether or not you lose your job, it’ll be clear that your input is optional.

And it won’t be any different outside the world of work. It was a surprise even to some AI researchers that the first models capable of general reasoning abilities, the precursors to ChatGPT and Claude, could also be tactful, patient, nuanced and gracious. But it’s now clear that social skills can be learned by machines just like any other. People already have AI romantic companions, and AI doctors are consistently rated better on bedside manner than human doctors.

What will life look like when each of us has access to an endless supply of personalised affection, guidance and support? Your family and friends will be even more glued to their screens than usual. When they do talk to you, they’ll tell you about funny and impressive things their online companions have said.

Sam Altman’s goal for ChatGPT to remember 'your whole life’ is both exciting and disturbing | TechCrunch

OpenAI CEO Sam Altman laid out a big vision for the future of ChatGPT at an AI event hosted by VC firm Sequoia earlier this month.

When asked by one attendee about how ChatGPT can become more personalized, Altman replied that he eventually wants the model to document and remember everything in a person’s life.

The ideal, he said, is a “very tiny reasoning model with a trillion tokens of context that you put your whole life into.”

“This model can reason across your whole context and do it efficiently. And every conversation you’ve ever had in your life, every book you’ve ever read, every email you’ve ever read, everything you’ve ever looked at is in there, plus connected to all your data from other sources. And your life just keeps appending to the context,” he described.

“Your company just does the same thing for all your company’s data,” he added.

Altman may have some data-driven reason to think this is ChatGPT’s natural future. In that same discussion, when asked for cool ways young people use ChatGPT, he said, “People in college use it as an operating system.” They upload files, connect data sources, and then use “complex prompts” against that data.

Additionally, with ChatGPT’s memory options — which can use previous chats and memorized facts as context — he said one trend he’s noticed is that young people “don’t really make life decisions without asking ChatGPT.”

“A gross oversimplification is: Older people use ChatGPT as, like, a Google replacement,” he said. “People in their 20s and 30s use it like a life advisor.”

It’s not much of a leap to see how ChatGPT could become an all-knowing AI system. Paired with the agents the Valley is currently trying to build, that’s an exciting future to think about.

Imagine your AI automatically scheduling your car’s oil changes and reminding you; planning the travel necessary for an out-of-town wedding and ordering the gift from the registry; or preordering the next volume of the book series you’ve been reading for years.

But the scary part? How much should we trust a Big Tech for-profit company to know everything about our lives? These are companies that don’t always behave in model ways.

Organizations Using AI

It’s Time To Get Concerned, Klarna, UPS, Duolingo, Cisco, And Many Other Companies Are Replacing Workers With AI

The new workplace trend is not employee friendly. Artificial intelligence and automation technologies are advancing at blazing speed. A growing number of companies are using AI to streamline operations, cut costs, and boost productivity. Consequently, human workers are facing layoffs, replaced by AI. Like it or not, companies need to make tough decisions, including layoffs, to remain competitive.

Corporations including Klarna, UPS, Duolingo, Intuit and Cisco are replacing laid-off workers with AI and automation. While these technologies enhance productivity, they raise serious concerns about future job security. For many workers, there is a big concern over whether or not their jobs will be impacted.

Economic pressures, inflation and roller-coaster stock prices have pushed firms to prioritize leaner operations. The result will be fewer human jobs available. Investor enthusiasm for AI rewards companies that deploy the technology. We’ve seen numerous occasions on which companies mentioned AI in corporate announcements and their share prices rose.

AI and Work

Gen AI is at risk of creating a new gender gap in job market

Artificial intelligence tools such as ChatGPT can be a boon for productivity, but when it comes to adopting the technology, a significant gender gap exists.

Recent research shows that women are considerably less likely than men to use ChatGPT.

For businesses, this represents both a lost opportunity to boost overall productivity and a potential driver of widening gaps between workers.

AI may ‘exacerbate inequality’ at work. Here’s how experts think companies should address that

Workplace leaders are grappling with how to balance people and profit amid the rapid rise of artificial intelligence.

Companies have a responsibility to protect jobs as the AI boom could drive unemployment and “potentially exacerbate inequality,” CIMB Group’s chief data and AI officer Pedro Uria-Recio told CNBC Make It at the GITEX Asia 2025 conference.

“There is a huge wave of change, and unfortunately, some people might be left behind,” he said.

The U.N. Trade and Development agency warned in an April report that AI could affect 40% of jobs worldwide and widen inequality between nations.

Therefore, companies should work to not only equip employees with the right skills that will enable them to handle the AI revolution more effectively, but also work to create new employment, added Uria-Recio.

However, not all workplace leaders share the same perspective.

“We need to establish that protecting employment might not be the right mindset,” Tomasz Kurczyk, chief information technology officer at Prudential Singapore, told CNBC Make It.

“The question is: ‘What can we do to make sure that we adapt employment?’ Because it’s like trying to prevent a tsunami wave. We know protection will not necessarily be effective. So it’s thinking really how we can adapt,” said Kurczyk.

The People Refusing to Use AI

Nothing has convinced Sabine Zetteler of the value of using AI.

"I read a really great phrase recently that said something along the lines of 'why would I bother to read something someone couldn't be bothered to write' and that is such a powerful statement, and one that aligns absolutely with my views."

Ms Zetteler runs her own London-based communications agency, with around 10 staff, some full-time, some part-time.

"What's the point of sending something we didn't write, reading a newspaper written by bots, listening to a song created by AI, or me making a bit more money by sacking my administrator who has four kids?

"Where's the joy, love or aspirational betterment even just for me as a founder in that? It means nothing to me," she says.

Ms Zetteler is among those resisting the AI invasion, which really got going with the launch of ChatGPT at the end of 2022.

Since then the service and its many rivals have become wildly popular. ChatGPT is racking up over five billion visits a month, according to software firm Semrush.

But training AI systems like ChatGPT requires huge amounts of energy and, once trained, keeping them running is also energy intensive.

While it's difficult to quantify the electricity used by AI, a report by Goldman Sachs estimated that a ChatGPT query uses nearly 10 times as much electricity as a Google search query.

That makes some people uncomfortable.

AI in Education

Northeastern college student demanded her tuition fees back after catching her professor using OpenAI’s ChatGPT

A senior at Northeastern University filed a formal complaint and demanded a tuition refund after discovering her professor was secretly using AI tools to generate notes. The professor later admitted to using several AI platforms and acknowledged the need for transparency. The incident highlights growing student concerns over professors using AI, a reversal of earlier concerns from professors worried that students would use the technology to cheat.

Some students are not happy about their professor's use of AI. One college senior was so shocked to learn her teacher was using AI to help him create notes that she lodged a formal complaint and asked for a refund of her tuition money, according to the New York Times.

Ella Stapleton, who graduated from Northeastern University this year, grew suspicious of her business professor’s lecture notes when she spotted telltale signs of AI generation, including a stray “ChatGPT” citation tucked into the bibliography, recurrent typos that mirrored machine outputs, and images depicting figures with extra limbs.

“He’s telling us not to use it, and then he’s using it himself,” Stapleton said in an interview with the New York Times.

Stapleton lodged a formal complaint with Northeastern’s business school over the incident, focused on her professor’s undisclosed use of AI alongside broader concerns about his teaching approach—and demanded a tuition refund for that course. The claim amounted to just over $8,000.

After a series of meetings, Northeastern ultimately decided to reject the senior's claim.

The professor behind the notes, Rick Arrowood, acknowledged he used various AI tools—including ChatGPT, the Perplexity AI search engine, and an AI presentation generator called Gamma—in an interview with The New York Times.

o3 now cracks new Harvard Business School cases from the PDF, in one shot

I blurred the figures so as not to ruin the case, but I asked the AI to work out the financials, which requires pulling together data scattered throughout the case. More interestingly, I asked it to compare its results to the case's answer.

There were no hallucinations here (surprisingly), but o3 does still hallucinate, so I wouldn't make it my sole accountant or analyst yet. But how it gathered the information and coherently built out models like an MBA would is really interesting.

The quality is very high: it also answered sophisticated brand strategy, strategic positioning, and finance questions. These would be very good answers from MBAs.

AI and the Law

Family shows AI video of slain victim as an impact statement — possibly a legal first

For two years, Stacey Wales kept a running list of everything she would say at the sentencing hearing for the man who killed her brother in a road rage incident in Chandler, Ariz.

But when she finally sat down to write her statement, Wales was stuck. She struggled to find the right words, but one voice was clear: her brother's.

"I couldn't help hear his voice in my head of what he would say," Wales told NPR.

That's when the idea came to her: to use artificial intelligence to generate a video of how her late brother, Christopher Pelkey, would address the courtroom and specifically the man who fatally shot him, Gabriel Paul Horcasitas, at a red light in 2021.

On Thursday, Wales stood before the court and played the video — in what AI experts say is likely the first time the technology has been used in the U.S. to create an impact statement read by an AI rendering of the deceased victim.

Since the sentencing hearing, Horcasitas's attorney Jason Lamm has filed a notice of appeal. He said he agreed that victims have an "unfettered right" to share their views at sentencing. "The issue, however, is to what extent did the trial judge rely on the AI video in fashioning a sentence," Lamm added.

AI and Deception

FBI warns of AI voice messages impersonating top U.S. officials

Malicious actors are impersonating top U.S. officials using AI-generated voice memos as part of a “vishing” scheme, the FBI said.

The scammers send out the fake voice messages trying to “establish rapport before gaining access to personal accounts,” the agency said.

Many of the targets of the scheme are “current or former senior US federal or state government officials and their contacts,” according to the FBI.

The top cybercrimes in 2024 were phishing scams, extortion and breaches of personal data, according to FBI data.

AI and Art

AI Detects an Unusual Detail Hidden in a Famous Raphael Masterpiece | ScienceAlert

AI can be trained to see details in images that escape the human eye, and an AI neural network identified something unusual about a face in a Raphael painting: It wasn't actually painted by Raphael.

The face in question belongs to St Joseph, seen in the top left of the painting known as the Madonna della Rosa (or Madonna of the Rose).

Scholars have in fact long debated whether or not the painting is a Raphael original. While it requires diverse evidence to conclude an artwork's provenance, a newer method of analysis based on an AI algorithm has sided with those who think at least some of the strokes were by the hand of another artist.

Researchers from the UK and US developed a custom analysis algorithm based on the works that we know are the result of the Italian master's brushwork.

"Using deep feature analysis, we used pictures of authenticated Raphael paintings to train the computer to recognize his style to a very detailed degree, from the brushstrokes, the color palette, the shading and every aspect of the work," mathematician and computer scientist Hassan Ugail from the University of Bradford in the UK explained in 2023, when the researchers' findings were published.

"The computer sees far more deeply than the human eye, to microscopic level."

AI and Politics

Trump axes controversial Biden-era restrictions on AI chip exports | CNN Business

President Donald Trump will rescind a set of Biden-era curbs that were meant to keep advanced technology out of the hands of foreign adversaries but have been panned by tech giants.

The move could have sweeping impacts on the global distribution of critical AI chips, as well as which companies profit from the new technology and America’s position as a world leader in artificial intelligence.

“I vocally opposed this rule for months, and indeed, the ranking member and I together urge the Biden administration not to adopt it, and I’m very pleased that President Trump has now confirmed he plans to rescind it,” US Senator Ted Cruz (R-Texas) said during a Senate committee hearing to discuss AI regulation on Thursday.

Cruz said he will soon introduce a new bill that “creates a regulatory AI sandbox,” adding that he wants to model new regulation after the approach former President Bill Clinton took at the “dawn of the internet.” OpenAI CEO Sam Altman, AMD CEO Lisa Su, Microsoft vice chair and president Brad Smith and CoreWeave CEO Michael Intrator testified during the hearing.

White House announces AI data center campus partnership with the UAE

The announcement comes as President Donald Trump visited the UAE as part of a broader trip to the Middle East.

The U.S. and United Arab Emirates are partnering on a massive artificial intelligence campus touted as the largest such facility outside the U.S., the White House said Thursday.

The Abu Dhabi data center will be built by the Emirati firm G42, which will partner with several U.S. companies on the facility, according to the release from the Department of Commerce. It will have a 5-gigawatt capacity and cover 10 square miles.

The names of the U.S. companies were not disclosed.

Nvidia’s Jensen Huang, OpenAI’s Sam Altman, SoftBank’s Masayoshi Son and Cisco President Jeetu Patel were all in the UAE for President Donald Trump’s visit.

“In the UAE, American companies will operate the data centers and offer American-managed cloud services throughout the region,” Commerce Secretary Howard Lutnick said in the release. “The agreement also contains strong security guarantees to prevent diversion of U.S. technology.”

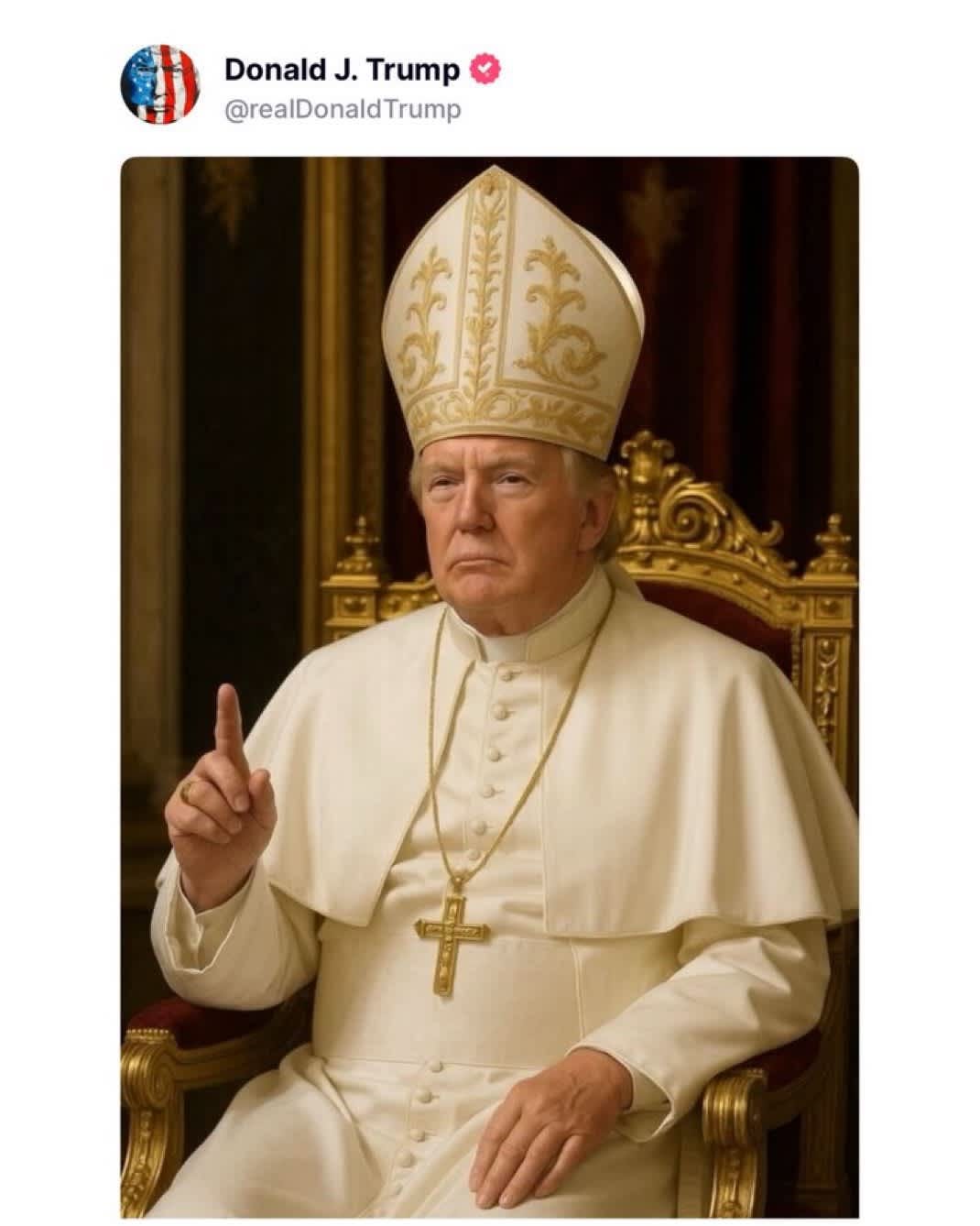

Trump depicted as pope in AI-generated photo

The White House posted an AI-generated photo of President Donald Trump, who was depicted as the pope of the Roman Catholic Church.

Trump attended the funeral of Pope Francis in Rome last weekend.

The president, days later, joked that he’d “like to be pope,” adding, “that would be my number one choice.”

Cardinals are set to begin a conclave this week to elect the next pope.

AI and Religion

Pope Leo XIV says advancement of AI played a factor in his papal name selection

Pope Leo XIV revealed that his papal name was partially inspired by the looming challenges of a world increasingly influenced by artificial intelligence.

In his first formal address as the Vatican’s newly elected pope on Saturday, Leo told the College of Cardinals that he chose to name himself after Pope Leo XIII, who led the Catholic Church from his election in 1878 to his death in 1903. During his papacy, Leo XIII maintained a dedication to social issues and workers' rights after much of the world had just been reshaped by the Industrial Revolution.

Though he said there are various reasons for the name he chose, Leo XIV primarily pointed to Leo XIII’s historic encyclical “Rerum Novarum,” also known as “Rights and Duties of Capital and Labor,” which laid a foundation for modern Catholic social teachings.

Issued in 1891, the open letter emphasized that “some opportune remedy must be found quickly for the misery and wretchedness pressing so unjustly on the majority of the working class.”

“In our own day, the Church offers to everyone the treasury of her social teaching in response to another industrial revolution and to developments in the field of artificial intelligence that pose new challenges for the defense of human dignity, justice and labour,” Leo XIV, previously known as Cardinal Robert Prevost, said in his address on Saturday, according to a transcript.