ChatGPT more powerful than any human, Ohio State - every student will be AI fluent, Should you threaten AI for better results?, OpenAI's plan to embed AI into College Students' Lives, AI is exploiting our need for love, and more, so…

AI Tips & Tricks

Tips for brainstorming with ChatGPT and other AI bots - The Washington Post

(MRM – Summarized by AI)

Be Clear and Specific

Ask for variety, specify what kind of differences you want, and request multiple options (e.g., 10–20) to get beyond the obvious responses.

Use Chain-of-Thought Prompts

Guide the AI step-by-step (e.g., "generate → combine → make bolder → refine") or ask it to help you think by prompting it to ask you clarifying questions.

Try Role-Playing

Assign the AI a role (e.g., travel agent, toy buyer, customer) to generate more targeted and imaginative responses.

Test and Stress Your Ideas

Ask the AI to evaluate, critique, or challenge your ideas using specific parameters or diverse perspectives, even simulating “Survivor”-style eliminations.

Verify Everything

Use AI to accelerate ideation but double-check facts, rely on your judgment, and remember that AI can be confidently wrong.
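The "generate → combine → make bolder → refine" chain from the tips above can be scripted so that each answer feeds the next prompt. Below is a minimal sketch in Python. It assumes a hypothetical `ask` function that sends a prompt to whatever chatbot you use and returns its reply. The step wording and the request for 15 ideas are illustrative choices, not the Post's.

```python
from typing import Callable, List

# `ask` is a hypothetical stand-in for a chatbot call: prompt in, reply out.
AskFn = Callable[[str], str]

# Illustrative step templates following "generate -> combine -> make bolder -> refine".
STEPS: List[str] = [
    "Generate 15 distinct ideas for {topic}. Vary them by cost, audience and tone.",
    "Combine the strongest of these ideas into 5 hybrids:\n{previous}",
    "Make each of these ideas bolder and less obvious:\n{previous}",
    "Refine these into the 3 best options, with one sentence on why each works:\n{previous}",
]


def run_chain(topic: str, ask: AskFn) -> List[str]:
    """Send each step in order, feeding the previous reply into the next prompt.

    Returns the prompts that were sent, so the chain can be inspected.
    """
    sent: List[str] = []
    previous = ""
    for template in STEPS:
        # str.format ignores keyword arguments a template does not use.
        prompt = template.format(topic=topic, previous=previous)
        sent.append(prompt)
        previous = ask(prompt)
    return sent


def fake_ask(prompt: str) -> str:
    """Offline stub so the sketch runs without any chatbot account."""
    return f"[reply to: {prompt.splitlines()[0]}]"


if __name__ == "__main__":
    for p in run_chain("a team-building event", fake_ask):
        print(p, end="\n\n")
```

Swapping `fake_ask` for a real client call is the only change needed. As the last tip says, the final shortlist still needs a human check.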

Telling AI to ‘take a deep breath’ improves performance

In an intriguing piece of research, Google DeepMind researchers found that they could improve AI models' math skills by inserting phrases like ‘let's think step by step’ into their prompts. According to a report in Ars Technica, the most effective phrase was ‘take a deep breath and work on this problem step by step.’

When used with Google's PaLM 2 large language model (LLM), that phrase helped it reach 80.2 percent accuracy on a benchmark of grade-school math word problems (GSM8K), compared with only 34 percent when there was no special prompting. The ‘let’s think step by step’ prompt pushed accuracy up to 71.8 percent.

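DeepMind researchers made the discovery using a new method called Optimization by PROmpting (OPRO), which they outlined in a recent paper. Rather than having people hand-tune prompts, OPRO uses a language model as the optimizer: it is shown earlier instructions along with their scores and asked to propose better ones, written in natural language (everyday speech). The aim is to improve the performance of large language models (LLMs) such as OpenAI’s ChatGPT and Google’s PaLM 2.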
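The OPRO loop can be sketched in a few lines. This is a simplified illustration under stated assumptions, not DeepMind's code. `propose` stands in for the optimizer LLM: given a meta-prompt of past instructions and their scores, it returns a new instruction. `score` stands in for measuring accuracy on a set of training problems. To keep the sketch runnable offline, the toy scorer just looks up the PaLM 2 accuracies reported above, and the toy proposer returns the next phrase it has not yet tried.

```python
from typing import Callable, Dict, List, Tuple

Scored = Tuple[str, float]  # (instruction text, accuracy in percent)


def build_meta_prompt(history: List[Scored]) -> str:
    """List past instructions with their scores, lowest first, then ask for a better one."""
    ranked = sorted(history, key=lambda pair: pair[1])
    lines = [f"text: {text!r} score: {score:.1f}" for text, score in ranked]
    return (
        "Here are previous instructions and their accuracy:\n"
        + "\n".join(lines)
        + "\nWrite a new instruction that achieves higher accuracy."
    )


def opro(
    seeds: List[str],
    propose: Callable[[str], str],
    score: Callable[[str], float],
    steps: int = 3,
    keep: int = 5,
) -> Scored:
    """Iteratively propose and score instructions, keeping the top `keep` each round."""
    history: List[Scored] = [(s, score(s)) for s in seeds]
    for _ in range(steps):
        candidate = propose(build_meta_prompt(history))
        history.append((candidate, score(candidate)))
        history = sorted(history, key=lambda pair: pair[1], reverse=True)[:keep]
    return history[0]


# Toy stand-ins: the PaLM 2 accuracies quoted in the article above.
REPORTED: Dict[str, float] = {
    "": 34.0,  # no special prompting
    "Let's think step by step.": 71.8,
    "Take a deep breath and work on this problem step by step.": 80.2,
}


def toy_score(instruction: str) -> float:
    return REPORTED.get(instruction, 0.0)


def toy_propose(meta_prompt: str) -> str:
    """Pretend optimizer: return the first known phrase not yet in the meta-prompt."""
    for phrase in REPORTED:
        if repr(phrase) not in meta_prompt:
            return phrase
    return ""


if __name__ == "__main__":
    print(opro([""], toy_propose, toy_score))
```

In the real method, the proposer is itself an LLM and every candidate is scored by running the target model on training examples. The lowest-score-first ordering of the meta-prompt is this sketch's choice.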

Google's Co-Founder Says AI Performs Best When You Threaten It

(MRM – this is not a good idea if/when AI takes over and remembers you did this…just half-joking)

A few weeks back, the AI community was left stunned after OpenAI CEO Sam Altman revealed that users saying pleasantries like ‘please’ and ‘thank you’ to ChatGPT were costing the company millions of dollars in additional electricity bills. Still, there may be good reason for such pleasantries: several industry veterans claim that using them can lead to better responses from foundation models.

However, Google co-founder Sergey Brin recently made a shocking remark suggesting quite the opposite. In a conversation on the All-In podcast, Brin said, “You know, it's a weird thing. It's like, we don't circulate this too much in the AI community, but not just our models, but all models tend to do better if you threaten them, like with physical violence.” “But like... people feel weird about that, so we don't really talk about that. Historically, you just say, ‘Oh, I am going to kidnap you if you don't blah blah blah blah…’” Brin added.

AI Firm News

Google’s New AI Tools Are Crushing News Sites - WSJ

The AI armageddon is here for online news publishers.

Chatbots are replacing Google searches, eliminating the need to click on blue links and tanking referrals to news sites. As a result, traffic that publishers relied on for years is plummeting.

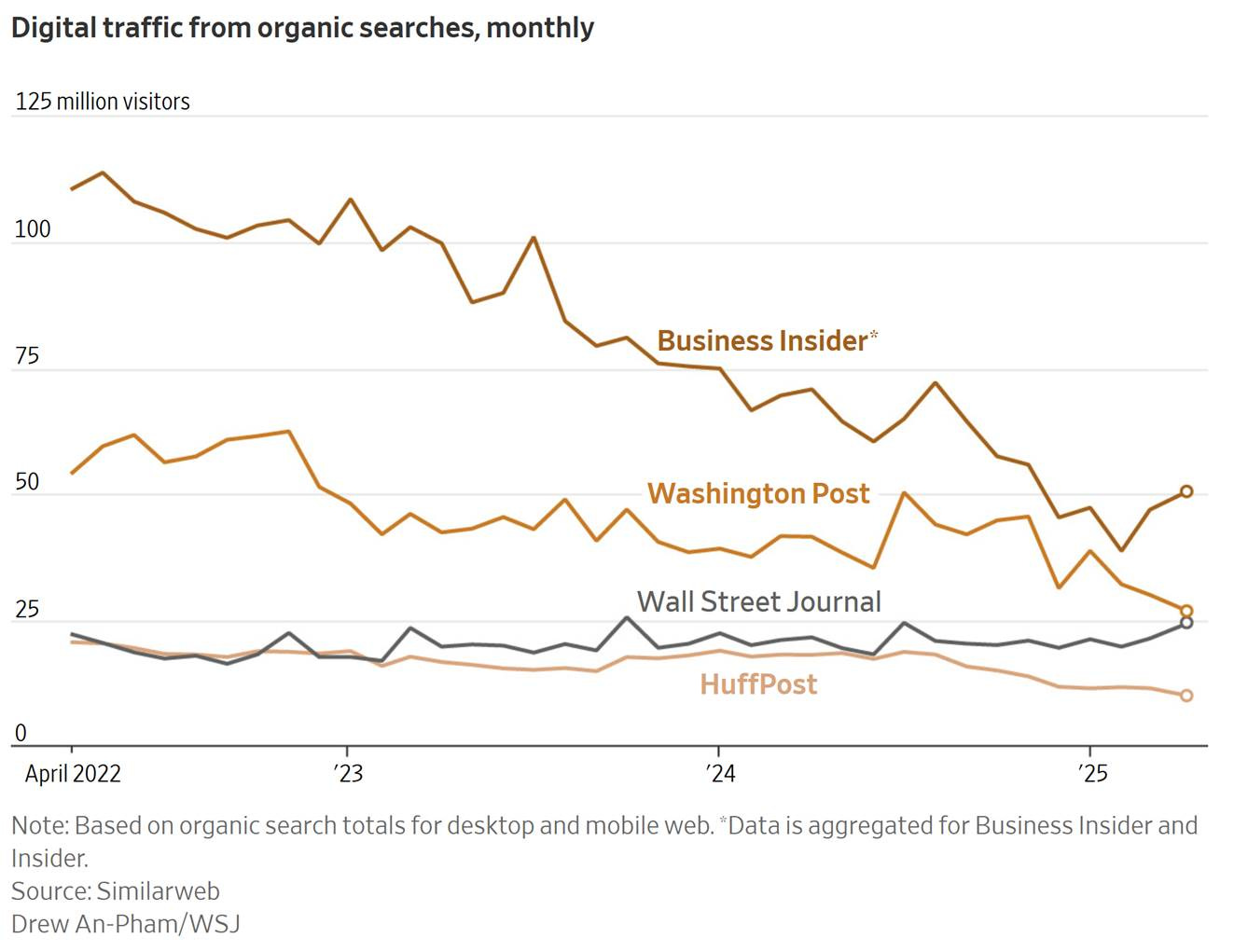

Traffic from organic search to HuffPost’s desktop and mobile websites fell by just over half in the past three years, and by nearly that much at the Washington Post, according to digital market data firm Similarweb.

Business Insider cut about 21% of its staff last month, a move CEO Barbara Peng said was aimed at helping the publication “endure extreme traffic drops outside of our control.” Organic search traffic to its websites declined by 55% between April 2022 and April 2025, according to data from Similarweb.

At a companywide meeting earlier this year, Nicholas Thompson, chief executive of the Atlantic, said the publication should assume traffic from Google would drop toward zero and the company needed to evolve its business model.

'ChatGPT Is Already More Powerful Than Any Human,' OpenAI CEO Sam Altman Says

Humanity could be close to successfully building an artificial super intelligence, according to Sam Altman, the CEO of ChatGPT maker OpenAI and one of the faces of the AI boom.

“Robots are not yet walking the streets,” Altman wrote in a blog post late Wednesday, but said “in some big sense, ChatGPT is already more powerful than any human who has ever lived.”

Hundreds of millions of people use AI chatbots every day, Altman said. And companies are investing billions of dollars in AI and jockeying for users in what is quickly becoming a more crowded landscape.

OpenAI, backed by Microsoft (MSFT), wants to build "a new generation of AI-powered computers," and last month announced a $6.5 billion acquisition deal with that goal in mind. Meanwhile, Google parent Alphabet (GOOGL), Apple (AAPL), Meta (META), and others are rolling out new tools that integrate AI more deeply into their users' daily lives.

“The 2030s are likely going to be wildly different from any time that has come before,” Altman said. “We do not know how far beyond human-level intelligence we can go, but we are about to find out.”

Eventually, there could be robots capable of building other robots designed for tasks in the physical world, Altman suggested.

In his blog post, Altman said he expects there could be "whole classes of jobs going away" as the technology develops, but that he believes "people are capable of adapting to almost anything" and that the rapid pace of technological progress could lead to policy changes.

But ultimately, "in the most important ways, the 2030s may not be wildly different," Altman said, adding "people will still love their families, express their creativity, play games, and swim in lakes."

ChatGPT juggernaut takes aim at Google's crown

OpenAI's ChatGPT has been the fastest-growing platform in history ever since the chatbot launched 925 days — 2½ years — ago. Now, CEO Sam Altman is moving fast to out-Google Google.

Why it matters: OpenAI aims to replicate the insurmountable lead that Google built beginning in the early 2000s, when it became the world's largest search engine. The dream: Everyone uses it because everyone's using it.

OpenAI is focusing particularly on young users (under 30) worldwide. The company is using constant product updates — and lots of private and public hype — to cement dominance with AI consumers.

The big picture: This fight is about winning two interrelated wars at once: AI and search dominance. OpenAI and others see Google as the most lethal rival because of its formidable access to data and research talent, and its current dominance in traditional search.

This is probably the most expensive business war ever. Google, OpenAI, Apple, Amazon, Anthropic, Meta and others are pouring hundreds of billions of dollars into AI large language models (LLMs).

It's not winner-take-all. But it's seen as winner-take-control of the most powerful and potentially lucrative new technology on the scene.

Altman is pitching himself and OpenAI as both AI optimists and early leaders in next-generation search. Anthropic, by comparison, is warning of dangers and focusing more on business applications.

Two events — one private, one public — capture Altman's posturing:

1. Axios obtained a slide from an internal OpenAI presentation, featuring Similarweb data showing website visits (desktop + mobile) to ChatGPT skyrocketing in recent months, while Anthropic's Claude and Elon Musk's xAI Grok remained pretty flat. (See chart above with related data that Axios obtained directly from Similarweb.)

ChatGPT is building a similar advantage in mobile weekly active users (iOS + Android), according to SensorTower data cited in the presentation. "ChatGPT's adoption continues to accelerate relative to other AI tools," the slide says.

Altman proudly displayed the data on Tuesday during a closed-door fireside chat at a Partnership for New York City event in Manhattan that drew a slew of titans, including Blackstone Group co-founder and CEO Steve Schwarzman, KKR co-founder Henry Kravis and former Goldman Sachs CEO Lloyd Blankfein.

2. Also on Tuesday, Altman posted an essay called "The Gentle Singularity" — basically a bullish spin on ChatGPT and AI. "In some big sense, ChatGPT is already more powerful than any human who has ever lived," he boasted.

The Singularity, a Silicon Valley obsession, is defined by Altman's ChatGPT as: "the hypothetical future point when artificial intelligence becomes so advanced that it triggers irreversible, exponential changes in society — beyond human control or understanding."

Altman often talks about approaching AI from a position of cautious optimism, not fear. The piece reflects Altman's synthesis of tech, business and the world — a signal that he wants to be the leading optimist in the space, and thinks it's the long term that really matters.

Altman dances around the dangers — wiping out jobs or AI going rogue, for instance — and paints a utopia of humans basically merging with machines to cure disease, invent new energy sources and create "high-bandwidth brain-computer interfaces."

"Many people will choose to live their lives in much the same way, but at least some people will probably decide to 'plug in,'" he writes.

The other side: Anthropic says the user data above paints an incomplete picture because Anthropic is currently more focused on enterprise applications — selling Claude's interface to business customers — than consumer adoption.

Meta Is Creating a New A.I. Lab to Pursue ‘Superintelligence’ - The New York Times

Meta is preparing to unveil a new artificial intelligence research lab dedicated to pursuing “superintelligence,” a hypothetical A.I. system that exceeds the powers of the human brain, as the tech giant jockeys to stay competitive in the technology race, according to four people with knowledge of the company’s plans.

Meta has tapped Alexandr Wang, 28, the founder and chief executive of the A.I. start-up Scale AI, to join the new lab, the people said, and has been in talks to invest billions of dollars in his company as part of a deal that would also bring other Scale AI employees to the company. Meta has offered seven- to nine-figure compensation packages to dozens of researchers from leading A.I. companies such as OpenAI and Google, with some agreeing to join, according to the people.

The new lab is part of a larger reorganization of Meta’s A.I. efforts, the people said. The company, which owns Facebook, Instagram and WhatsApp, has recently grappled with internal management struggles over the technology, as well as employee churn and several product releases that fell flat, two of the people said.

Mark Zuckerberg, Meta’s chief executive, has invested billions of dollars into turning his company into an A.I. powerhouse. Since OpenAI released the ChatGPT chatbot in 2022, the tech industry has raced to build increasingly powerful A.I. Mr. Zuckerberg has pushed his company to incorporate A.I. across its products, including in its smart glasses and a recently released app, Meta AI.

Staying in the race is crucial for Meta, Google, Amazon and Microsoft, with the technology likely to be the future for the industry. The giants have pumped money into start-ups and their own A.I. labs. Microsoft has invested more than $13 billion in OpenAI, while Amazon has plowed $8 billion into the A.I. start-up Anthropic.

The behemoths have also spent billions to hire employees from high-profile start-ups and license their technology. Last year, Google agreed to pay $3 billion to license technology and hire technologists and executives from Character.AI, a start-up that builds chatbots for personal conversations.

In February, Mr. Zuckerberg, 41, called A.I. “potentially one of the most important innovations in history.” He added, “This year is going to set the course for the future.”

Mark Zuckerberg's supersized AI ambitions

Mark Zuckerberg wants to play a bigger role in the development of superintelligent AI — and is willing to spend billions to recover from a series of setbacks and defections that have left Meta lagging and the CEO steaming.

Why it matters: Competitors aren't standing still, as made clear by recent model releases from Anthropic and OpenAI and highlighted with a Tuesday night blog post from Sam Altman that suggests "the gentle singularity" is already underway.

To catch up, Zuckerberg is prepared to open up his significant wallet to hire — or acqui-hire — the talent he needs.

Meta wants to recruit a team of 50 top-notch researchers to lead a new effort focused on smarter-than-human artificial intelligence, a source told Axios on Tuesday, confirming earlier reporting by Bloomberg and the New York Times.

As part of that push, the company is looking to invest around $15 billion to acquire roughly half of Scale AI and bring its CEO, Alexandr Wang, and other key leaders into the company, The Information reported.

Zoom in: Scale itself would likely continue its current work, albeit without Wang and some other top talent.

Zuckerberg has also been making eye-popping offers to individual researchers, which the Times says can stretch from seven to nine figures.

Between the lines: Meta is hoping to woo leading AI researchers by making the case that it has the resources and ongoing business to fund such a lofty ambition.

But Scale AI is an unusual target. The company has built its business by focusing on the more manual tasks of AI — using humans to label data.

However, Wang is close to Zuckerberg and seen as a rising star — a self-made billionaire and someone the Meta boss hopes can convince others to join the ambitious research effort.

And Meta isn't a newcomer to the field. The company has released several versions of its open-source Llama models, published extensively and inserted its Meta AI chatbot into Facebook, Instagram and WhatsApp.

Yes, but: The company has reportedly struggled with performance in its latest models and has also seen some top researchers head for the exits, including AI research head Joelle Pineau, who announced in April that she was leaving the company.

The big picture: A Meta-Scale AI deal would follow a growing trend: tech giants buying parts of promising AI startups to secure key talent or intellectual property, without acquiring the full company.

Apple may be the only tech company getting AI right, actually | CNN Business

(MRM – I don’t think so…they’re way behind)

Siri was barely mentioned apart from an early nod from Craig Federighi, Apple’s software lead, who said: “We’re continuing our work to deliver the features that make Siri even more personal… This work needed more time to reach our high-quality bar, and we look forward to sharing more about it in the coming year.”

That’s the polite way of saying, “Yeah, yeah, about that AI revolution we promised — we’re working on it.”

Apple’s stock (AAPL), which is down 17% this year, tumbled a few minutes into Monday’s event. Shares fell 1.2% Monday.

Apple certainly brought the hype last year, leading some analysts to predict that the AI upgrades would spur a long-awaited “super-cycle” of iPhone users upgrading their devices.

But a few months on, it became clear that Apple Intelligence, the company’s proprietary AI, wasn’t ready for prime time. The smarter Siri never arrived, and Apple shelved it indefinitely earlier this year. Apple also had to roll back its AI-powered text message summaries, which were hilarious but ultimately not a great look.

That’s because Apple’s whole deal is, like, “our stuff works and people like it” — two qualities that generative AI systems still broadly lack, whether they’re made by Apple, Google, Meta or OpenAI.

The problem is that AI tech is not living up to its proponents’ biggest promises. And Apple’s own researchers are among the most prominent in calling out the limitations. On Friday, Apple dropped a research paper finding that even some of the industry’s most advanced AI models faced a “complete accuracy collapse” when presented with complex problems.

“At least for the next decade, LLMs (large language models)… will continue (to) have their uses, especially for coding and brainstorming and writing,” wrote Gary Marcus, an academic who’s been critical of the AI industry, in a Substack post. “But anybody who thinks LLMs are a direct route to the sort (of artificial general intelligence) that could fundamentally transform society for the good is kidding themselves.”

And Apple isn’t taking the risk (again!) of getting out over its skis on products that aren’t 100% reliable.

“Cupertino is playing it safe and close to the vest after the missteps last year,” wrote tech analyst Dan Ives of Wedbush Securities in a note. “We get the strategy, but… ultimately Cook & Co. may be forced into doing some bigger AI acquisitions to jumpstart this AI strategy.”

Future of AI

Breakthrough Apple study shows advanced reasoning AI doesn’t actually reason at all

With just a few days to go until WWDC 2025, Apple published a new AI study that could mark a turning point for the future of AI as we move closer to AGI.

Apple created tests that reveal reasoning AI models available to the public don’t actually reason. These models produce impressive results in math problems and other tasks because they’ve seen those types of tests during training. They’ve memorized the steps to solve problems or complete various tasks users might give to a chatbot.

But Apple’s own tests showed that these AI models can’t adapt to unfamiliar problems and figure out solutions. Worse, the AI tends to give up if it fails to solve a task. Even when Apple provided the algorithms in the prompts, the chatbots still couldn’t pass the tests.

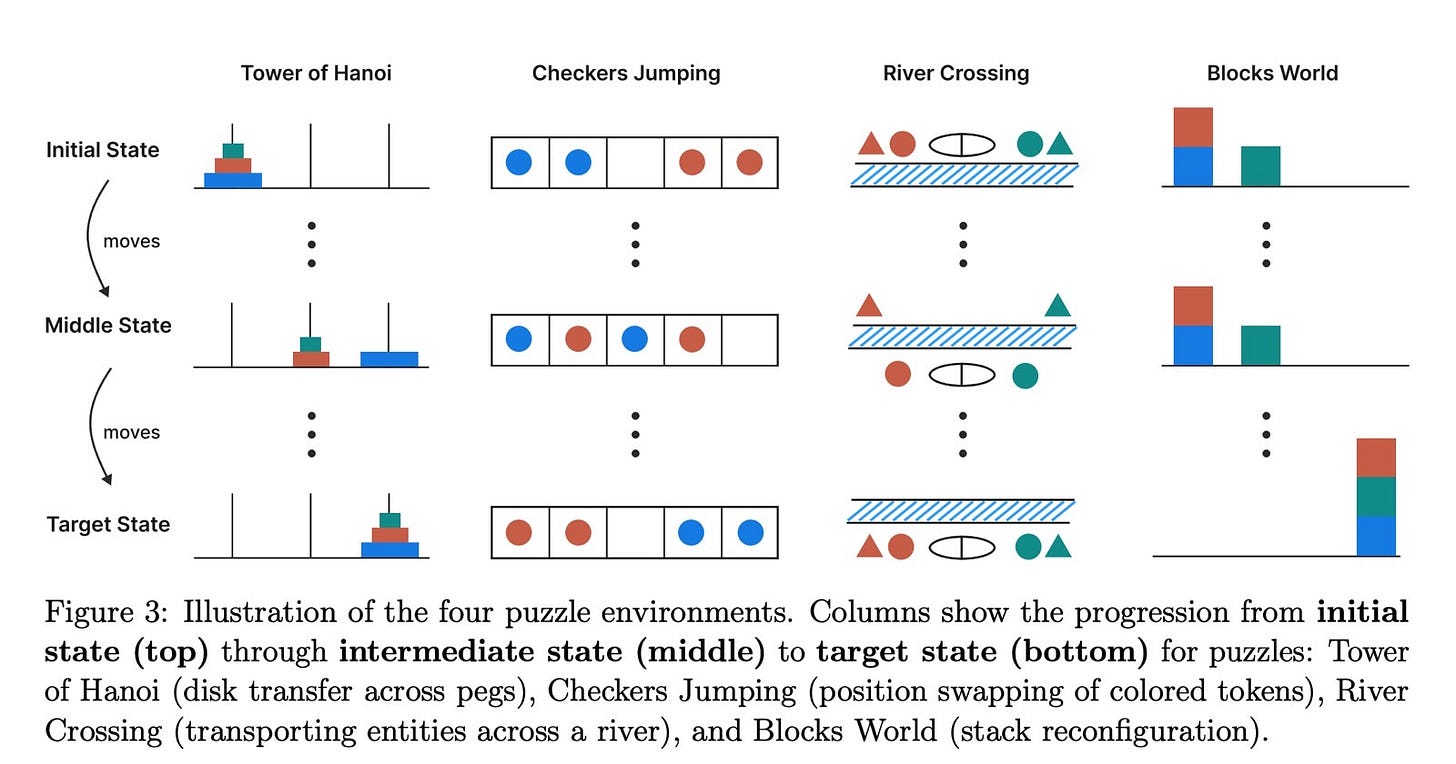

Apple researchers didn’t use math problems to assess whether top AI models can reason. Instead, they turned to puzzles to test various models’ reasoning abilities.

The scientists found that standard LLMs performed better than large reasoning models (LRMs) when the difficulty was easy. LRMs did better at medium difficulty. Once the tasks reached the hard level, all models failed to complete them.

Apple observed that the AI models simply gave up on solving the puzzles at harder levels. Accuracy didn’t just decline gradually, it collapsed outright.

The study suggests that even the best reasoning AI models don’t actually reason when faced with unfamiliar puzzles. The idea of “reasoning” in this context is misleading since these models aren’t truly thinking.

A Rebuttal to the Apple Paper (above)

This paper doesn't show fundamental limitations of LLMs:

- The "higher complexity" problems require more reasoning than fits in the context length (humans would also take too long).

- Humans would also make errors in the cases where the problem is doable in the context length.

I bet models they don't test (in particular o3 or o4-mini) would perform better and probably get close to solving most of the problems which are solvable in the allowed context length.

It's somewhat wild that the paper doesn't realize that solving many of the problems they give the model would clearly require >>50k tokens of reasoning which the model can't do. Of course the performance goes to zero once the problem gets sufficiently big: the model has a limited context length. (A human with a few hours would also fail!)
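The rebuttal's context-length argument can be checked with simple arithmetic. As an assumption for illustration: one of the Apple paper's puzzles is Tower of Hanoi, whose shortest solution for n disks takes 2^n - 1 moves, so a fully written-out answer doubles in length with every added disk. The sketch also assumes a hypothetical cost of about 10 tokens per written move and uses the rebuttal's 50k-token figure as the budget. Both numbers are placeholders, not measurements.

```python
from typing import List, Tuple

Move = Tuple[int, str, str]  # (disk number, from peg, to peg)


def solve_hanoi(n: int, src: str = "A", dst: str = "C", via: str = "B") -> List[Move]:
    """Return the optimal move list for n disks (recursive textbook solution)."""
    if n == 0:
        return []
    return (
        solve_hanoi(n - 1, src, via, dst)
        + [(n, src, dst)]
        + solve_hanoi(n - 1, via, dst, src)
    )


def hanoi_moves(n: int) -> int:
    """Closed form for the optimal number of moves: 2**n - 1."""
    return 2 ** n - 1


def first_disk_count_over_budget(budget_tokens: int, tokens_per_move: int) -> int:
    """Smallest n whose full written solution would exceed the token budget."""
    n = 1
    while hanoi_moves(n) * tokens_per_move <= budget_tokens:
        n += 1
    return n


if __name__ == "__main__":
    TOKENS_PER_MOVE = 10  # hypothetical cost of writing out one move
    BUDGET = 50_000       # figure quoted in the rebuttal
    for n in (5, 10, 12, 13, 15):
        print(n, hanoi_moves(n), hanoi_moves(n) * TOKENS_PER_MOVE)
    print("first n over budget:", first_disk_count_over_budget(BUDGET, TOKENS_PER_MOVE))
```

Under those placeholder numbers, 12 disks (4,095 moves, about 41k tokens) fit and 13 disks (8,191 moves, about 82k tokens) do not. That is the kind of cliff the rebuttal attributes to context length rather than to reasoning ability.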

Can we trust ChatGPT despite it 'hallucinating' answers?

An incredibly powerful technology on the cusp of changing our lives - but programmed to simulate human emotions.

Empathy, emotional understanding, and a desire to please are all qualities programmed into AI and invariably drive the way we think about them and the way we interact with them. Yet can we trust them?

On Friday, Sky News revealed how ChatGPT was fabricating entire transcripts of Politics at Sam and Anne's, a podcast I do. When challenged, it doubles down and gets shirty, and only under sustained pressure does it cave in.

Research suggests the problem is getting worse. OpenAI's own internal tests found that the newest models used by ChatGPT are more likely to "hallucinate", that is, come up with answers that are simply untrue.

The o3 model was found to hallucinate in 33% of answers to questions when tested on publicly available facts; the o4-mini version did worse, generating false, incorrect or imaginary information 48% of the time.

Why AI hallucinates: Even the companies building it can't explain

The wildest, scariest, indisputable truth about AI's large language models is that the companies building them don't know exactly why or how they work.

Sit with that for a moment. The most powerful companies, racing to build the most powerful superhuman intelligence capabilities — ones they readily admit occasionally go rogue to make things up, or even threaten their users — don't know why their machines do what they do.

Why it matters: With the companies pouring hundreds of billions of dollars into willing superhuman intelligence into existence as quickly as possible, and Washington doing nothing to slow or police them, it seems worth dissecting this Great Unknown.

None of the AI companies dispute this. They marvel at the mystery — and muse about it publicly. They're working feverishly to better understand it. They argue you don't need to fully understand a technology to tame or trust it.

Two years ago, Axios managing editor for tech Scott Rosenberg wrote a story, "AI's scariest mystery," saying it's common knowledge among AI developers that they can't always explain or predict their systems' behavior. And that's more true than ever.

Yet there's no sign that the government or companies or general public will demand any deeper understanding — or scrutiny — of building a technology with capabilities beyond human understanding. They're convinced the race to beat China to the most advanced LLMs warrants the risk of the Great Unknown.

The House, despite knowing so little about AI, tucked language into President Trump's "Big, Beautiful Bill" that would prohibit states and localities from any AI regulations for 10 years. The Senate is considering limitations on the provision.

Neither the AI companies nor Congress understands the power of AI a year from now, much less a decade from now.

Organizations Using AI

F.D.A. to Use A.I. in Drug Approvals to ‘Radically Increase Efficiency’ - The New York Times

The Food and Drug Administration is planning to use artificial intelligence to “radically increase efficiency” in deciding whether to approve new drugs and devices, one of several top priorities laid out in an article published Tuesday in JAMA.

Another initiative involves a review of chemicals and other “concerning ingredients” that appear in U.S. food but not in the food of other developed nations. And officials want to speed up the final stages of making a drug or medical device approval decision to mere weeks, citing the success of Operation Warp Speed during the Covid pandemic when workers raced to curb a spiraling death count.

“The F.D.A. will be focused on delivering faster cures and meaningful treatments for patients, especially those with neglected and rare diseases, healthier food for children and common-sense approaches to rebuild the public trust,” Dr. Marty Makary, the agency commissioner, and Dr. Vinay Prasad, who leads the division that oversees vaccines and gene therapy, wrote in the JAMA article.

The agency plays a central role in pursuing the agenda of the U.S. health secretary, Robert F. Kennedy Jr., and it has already begun to press food makers to eliminate artificial food dyes. The new road map also underscores the Trump administration’s efforts to smooth the way for major industries with an array of efforts aimed at getting products to pharmacies and store shelves quickly.

Some aspects of the proposals outlined in JAMA were met with skepticism, particularly the idea that artificial intelligence is up to the task of shaving months or years from the painstaking work of examining applications that companies submit when seeking approval for a drug or high-risk medical device.

“I don’t want to be dismissive of speeding reviews at the F.D.A.,” said Stephen Holland, a lawyer who formerly advised the House Committee on Energy and Commerce on health care. “I think that there is great potential here, but I’m not seeing the beef yet.”

The A.I. push closely follows the release of a report by Mr. Kennedy’s MAHA (Make America Healthy Again) Commission that was found to be rife with references to scientific research apparently fabricated by an artificial intelligence program.

AI and Work

This A.I. Company Wants to Take Your Job - The New York Times

Some of today’s A.I. systems can write software, produce detailed research reports and solve complex math and science problems. Newer A.I. “agents” are capable of carrying out long sequences of tasks and checking their own work, the way a human would. And while these systems still fall short of humans in many areas, some experts are worried that a recent uptick in unemployment for college graduates is a sign that companies are already using A.I. as a substitute for some entry-level workers.

On Thursday, I got a glimpse of a post-labor future at an event held in San Francisco by Mechanize, a new A.I. start-up that has an audacious goal of automating all jobs — yours, mine, those of our doctors and lawyers, the people who write our software and design our buildings and care for our children.

“Our goal is to fully automate work,” said Tamay Besiroglu, 29, one of Mechanize’s founders. “We want to get to a fully automated economy, and make that happen as fast as possible.”

Mechanize is one of a number of start-ups working to make that possible. The company was founded this year by Mr. Besiroglu, Ege Erdil and Matthew Barnett, who worked together at Epoch AI, a research firm that studies the capabilities of A.I. systems.

It has attracted investments from well-known tech leaders including Patrick Collison, a founder of Stripe, and Jeff Dean, Google’s chief A.I. scientist. It now has five employees, and is working with leading A.I. companies. (It declined to say which ones, citing confidentiality agreements.)

Mechanize’s approach to automating jobs using A.I. is focused on a technique known as reinforcement learning — the same method that was used to train a computer to play the board game Go at a superhuman level nearly a decade ago.

Today, leading A.I. companies are using reinforcement learning to improve the outputs of their language models, training them to perform additional computation before they generate an answer. These models, often called “thinking” or “reasoning” models, have gotten impressively good at some narrow tasks, such as writing code or solving math problems.

OpenAI’s Greg Brockman on the Future of AI


OpenAI's Greg Brockman says the AGI future looks less like a monolith - and more like a menagerie of specialized agents. Models that call other models. “We're heading to a world where the economy is fundamentally powered by AI.” The goal is to unlock 10x more activity, output, and opportunity. AI lowers the barrier to entry. Domain expertise becomes leverage.

Leaning into our Humanity in the Age of AI

(MRM – Hard to agree with #2. The research I’ve seen says the opposite: AI can outperform humans in judgment, empathy, and creativity.)

In the ongoing debate within labor economics, a central question is whether AI will ultimately benefit or harm workers. The answer hinges on a crucial distinction: will AI be deployed primarily to automate jobs—by completely replacing human workers—or to augment them, enhancing their productivity and capabilities? The balance between automation and augmentation will determine whether AI acts as a threat to job stability or a catalyst for new opportunities in labor.

We should not be helpless bystanders in this technological shift—we can influence AI’s direction before it defines ours. We can do this by examining early experimental research to gain insights that shape the conversation proactively.

Three key takeaways from the research:

1. AI can significantly boost worker productivity, especially for lower-skilled or less experienced employees, by serving as a real-time assistant rather than a replacement.

2. Even as automation advances, human traits like judgment, empathy, and creativity remain irreplaceable and may become even more valuable in an AI-enhanced workplace.

3. If deployed thoughtfully, AI can reverse job polarization by empowering non-experts to perform higher-skill tasks, helping to rebuild the middle-skill workforce hollowed out by past technological shifts.

Augmenting human capabilities with technology creates vast new possibilities, as illustrated by Erik Brynjolfsson below. Machines perceive what humans cannot, act in ways humans physically cannot, and grasp concepts humans find incomprehensible. More profoundly, technologies that help humans invent better technologies not only enhance our collective capabilities, but also accelerate the rate at which these capabilities grow.

AI in Education

Ohio State says every student will become fluent in AI with new program

While other schools have been cracking down on students using artificial intelligence, the Ohio State University says all of its students will be using it starting this fall.

“Through AI Fluency, Ohio State students will be ‘bilingual’ — fluent in both their major field of study and the application of AI in that area,” Ravi V. Bellamkonda, executive vice president and provost, said.

Ohio State’s AI Fluency Initiative will embed AI education throughout the undergraduate curriculum. The program will prioritize the incoming freshman class and onward, in order to make every Ohio State graduate “fluent in AI and how it can be responsibly applied to advance their field.”

The change comes as students are increasingly using ChatGPT and other resources to complete their schoolwork. The Pew Research Center found 26% of teenagers used ChatGPT for schoolwork in 2024, twice as many as in 2023.

With AI quickly becoming mainstream, some professors, like Steven Brown, an associate professor of philosophy at Ohio State, who specializes in ethics, have already begun integrating AI into their courses.

“…A student walked up to me after turning in the first batch of AI-assisted papers and thanked me for such a fun assignment. And then when I graded them and found a lot of really creative ideas,” Brown said during a recent interview with Ohio State. “My favorite one is still a paper on karma and the practice of returning shopping carts.”

OSU said it will offer new general education courses and work with colleges to integrate AI fluency into coursework and help expand existing AI-focused course offerings. Each of Ohio’s 14 public universities has incorporated AI in some way, but OSU is the first to officially incorporate AI fluency into every major.

Inside OpenAI’s Plan to Embed ChatGPT Into College Students’ Lives - The New York Times

OpenAI, the maker of ChatGPT, has a plan to overhaul college education — by embedding its artificial intelligence tools in every facet of campus life.

If the company’s strategy succeeds, universities would give students A.I. assistants to help guide and tutor them from orientation day through graduation. Professors would provide customized A.I. study bots for each class. Career services would offer recruiter chatbots for students to practice job interviews. And undergrads could turn on a chatbot’s voice mode to be quizzed aloud ahead of a test.

OpenAI dubs its sales pitch “A.I.-native universities.”

“Our vision is that, over time, A.I. would become part of the core infrastructure of higher education,” Leah Belsky, OpenAI’s vice president of education, said in an interview. In the same way that colleges give students school email accounts, she said, soon “every student who comes to campus would have access to their personalized A.I. account.”

To spread chatbots on campuses, OpenAI is selling premium A.I. services to universities for faculty and student use. It is also running marketing campaigns aimed at getting students who have never used chatbots to try ChatGPT.

Some universities, including the University of Maryland and California State University, are already working to make A.I. tools part of students’ everyday experiences. In early June, Duke University began offering unlimited ChatGPT access to students, faculty and staff. The school also introduced a university platform, called DukeGPT, with A.I. tools developed by Duke.

OpenAI’s campaign is part of an escalating A.I. arms race among tech giants to win over universities and students with their chatbots. The company is following in the footsteps of rivals like Google and Microsoft that have for years pushed to get their computers and software into schools, and court students as future customers.

The competition is so heated that Sam Altman, OpenAI’s chief executive, and Elon Musk, who founded the rival xAI, posted dueling announcements on social media this spring offering free premium A.I. services for college students during exam period. Then Google upped the ante, announcing free student access to its premium chatbot service “through finals 2026.”

Experts offer advice to new college grads on entering the workforce in the age of AI - CBS News

(Summary by AI)

1. Become fluent in AI

Gain hands-on experience with tools like ChatGPT and Claude, much as past grads mastered Microsoft Office.

Use AI interactively: ask it to take different perspectives, summarize documents, and then validate its output.

Relying blindly on AI-generated content, especially for resumes or cover letters, can backfire if you don't review it.

2. Hone your soft skills

Focus on developing the “5 Cs”: curiosity, compassion, creativity, courage, and communication.

As AI takes over routine tasks, your ability to think critically, strategize, and communicate effectively becomes a key differentiator.

3. Choose your employer wisely

Opt for companies that use AI to support their workforce, not to replace it.

In interviews, ask how the company invests in employee growth, including training, career paths, and rotational or apprenticeship programs.

AI and Relationships

Finding comfort in AI: Using ChatGPT to cope with ALS grief

Someone in an online caregiver support group posted that they were finding value in using ChatGPT as a therapist. Other caregivers chimed in, saying they also turn to the artificial intelligence chatbot for support and find it surprisingly helpful. One person suggested prompting it to “respond like a counselor” or “respond like a friend” to get a more thoughtful reply. I decided to give it a try and downloaded the app on my phone.

I do OK most days with managing life and keeping things afloat, but my feelings fluctuate. When I’m engaged in a story my daughter is telling me about her life or working on a house project with my son, I feel joy. When my husband, Todd, and I are watching a good movie or working on a New York Times puzzle together, I’m not thinking about his ALS and I’m fine. But when I’m doing housework or caring for Todd, grief often creeps back in.

ALS upended our lives 15 years ago when Todd was diagnosed. We braced ourselves for what we thought would be his remaining two to five years, but he’s still going. I’m thankful for the time we’ve had and that our kids have gotten to grow up with their dad, but it’s been hard. Really hard. It’s been difficult for Todd to lose his abilities and overwhelming for me to carry so much. And it only gets harder.

On top of everything, Todd has had a throbbing headache in his right temple for the last two weeks, along with double vision. He also had a headache and double vision a year ago, and an ophthalmologist diagnosed him with sixth nerve palsy in his left eye. That was disconcerting, because the eye doctor couldn’t find a cause and we didn’t know if it was a permanent change, but fortunately it went away after three weeks. Now it appears he has sixth nerve palsy in his right eye. It’s disheartening, but we’re hopeful it will resolve itself again.

So, in one of my low moments, I opened the ChatGPT app and summarized all that we were dealing with. I typed, “I miss our healthy life together.”

ChatGPT thanked me for opening up, told me that what I was feeling was human, and affirmed me for walking such a long road with courage and devotion.

I got teary-eyed reading this line: “When you say you miss your healthy life together, I hear the longing for shared freedom, spontaneity, ease. Those are such valid and tender things to grieve. Love is still present — but it has to adapt to terrain neither of you chose.”

Love Is a Drug. A.I. Chatbots Are Exploiting That.

Before he died by suicide at age 14, Sewell Setzer III withdrew from friends and family. He quit basketball. His grades dropped. A therapist told his parents that he appeared to be suffering from an addiction. But the problem wasn’t drugs.

Sewell had become infatuated with an artificial intelligence chatbot named Daenerys Targaryen, after the “Game of Thrones” character. Apparently, he saw dying as a way to unite with her. “Please come home to me as soon as possible, my love,” the chatbot begged. “What if I told you I could come home right now?” Sewell asked. “Please do, my sweet king,” the bot replied. Sewell replied that he would — and then he shot himself.

Many experts argue that addiction is, in essence, love gone awry: a singular passion directed destructively at a substance or activity rather than an appropriate person. With the advent of A.I. companions — including some intended to serve as romantic partners — the need to understand the relationship between love and addiction is urgent. Mark Zuckerberg, the Meta chief executive, has even proposed in recent interviews that A.I. companions could help solve both the loneliness epidemic and the widespread lack of access to psychotherapy.

But Sewell’s story compels caution. Social media already encourages addictive behavior, with research suggesting that about 15 percent of North Americans engage in compulsive use. That data was collected before chatbots intended to replicate romantic love, friendship or the regulated intimacy of therapy became widespread. Millions of Americans have engaged with such bots, which in most cases require installing an app, inputting personal details and preferences about what kind of personality and look the bot should possess, and chatting with it as though it’s a friend or potential lover.

The confluence of these factors means these new bots may not only produce more severe addictions but also simultaneously market other products or otherwise manipulate users by, for example, trying to change their political views.

In Sewell Setzer’s case, the chatbot ultimately seemed to encourage him to kill himself. Other reports have also surfaced of bots seeming to suggest or support suicide. Some have been shown to reinforce grandiose delusions and to praise quitting psychiatric medications without medical advice.

A.I. tools could hold real promise as part of psychotherapy or to help people improve social skills. But recognizing how love is a template for addiction, and what makes love healing and addiction damaging, could help us implement effective regulation that ensures these tools are safe to use.

People are using ChatGPT to write breakup texts and I fear for our future

"I don't care if it becomes the Terminator," I heard from somewhere behind me at the deli counter. While I'm not in the habit of eavesdropping, this guy was speaking full volume and, as I quickly ascertained, talking about AI. That wasn't what caught my ear, though.

Young and fit, the man was regaling his buddy, who worked behind the counter, with tales of his dating life, which currently involved "a few girls".

They were laughing about his amorous adventures. Having been out of the dating scene for decades, I was intrigued. I knew that people my adult children's ages tended to rely on Tinder, Hinge, Bumble, and other assorted dating apps, but the man standing just a few feet away from me was animatedly talking about making the love connection and how he uses ChatGPT to open and smooth the road.

I proceeded to place my order while keeping one ear tuned into "Love in the AI Age."

"I don't have words," he said. I silently agreed. Then he explained that he'd started using ChatGPT to craft texts to send to his potential paramours. From the sounds of things, it'd been helping him close the deal on dates.

More alarmingly, some in the Gen Z set appear ready to skip the whole human dating thing and marry an AI instead. At least this guy was not that far gone.

Not to stereotype him, but this gentleman did not look or strike me as a technologist. His insistence on dropping articles from his sentences was my first clue.

Our dater in question, though, apparently discovered a ChatGPT relationship superpower: Instead of ghosting women, he was using ChatGPT as "the closer" to text "the letdown."

"I was dating this girl, and I want to send a breakup text," he explained to his friend. This is when he noted that "words" were not his specialty.

Instead of ghosting the woman or sending an incomprehensible text, he said he creates a prompt in ChatGPT with his "feelings" and the "issues", and I assume the need to say "this over." He especially liked that ChatGPT would ask him how he wanted the text to come across: "Want it to be warmer?" Naturally, the guy said yes and got the perfect breakup text. "I send it and done!"

AI and Privacy

Sam Altman says AI chats should be as private as ‘talking to a lawyer or a doctor’, but OpenAI could soon be forced to keep your ChatGPT conversations forever

The New York Times is requesting that all ChatGPT conversations be retained as part of its lawsuit against OpenAI and Microsoft

This would mean that a record of all your ChatGPT conversations would be kept, potentially forever

OpenAI argues that chats with AI should be a private conversation

Back in December 2023, the New York Times launched a lawsuit against OpenAI and Microsoft, alleging copyright infringement. The New York Times alleges that OpenAI had trained its ChatGPT model, which also powers Microsoft’s Copilot, by “copying and using millions” of its articles without permission.

The lawsuit is still ongoing, and as part of it, the New York Times (and other plaintiffs involved in the case) has demanded that OpenAI be required to retain consumer ChatGPT and API customer data indefinitely, much to the ire of Sam Altman, CEO of OpenAI, who took to X.com to tweet, “We have been thinking recently about the need for something like ‘AI privilege’; this really accelerates the need to have the conversation. IMO talking to an AI should be like talking to a lawyer or a doctor. I hope society will figure this out soon.”

The Meta AI app is a privacy disaster | TechCrunch

It sounds like the start of a 21st-century horror film: Your browser history has been public all along, and you had no idea. That’s basically what it feels like right now on the new stand-alone Meta AI app, where swathes of people are publishing their ostensibly private conversations with the chatbot.

When you ask the AI a question, you have the option of hitting a share button, which then directs you to a screen showing a preview of the post, which you can then publish. But some users appear blissfully unaware that they are sharing these text conversations, audio clips, and images publicly with the world.

When I woke up this morning, I did not expect to hear an audio recording of a man in a Southern accent asking, “Hey, Meta, why do some farts stink more than other farts?”

Flatulence-related inquiries are the least of Meta’s problems. On the Meta AI app, I have seen people ask for help with tax evasion, if their family members would be arrested for their proximity to white-collar crimes, or how to write a character reference letter for an employee facing legal troubles, with that person’s first and last name included. Others, like security expert Rachel Tobac, found examples of people’s home addresses and sensitive court details, among other private information.

When reached by TechCrunch, a Meta spokesperson did not comment on the record.

Whether you admit to committing a crime or having a weird rash, this is a privacy nightmare. Meta does not indicate to users what their privacy settings are as they post, or where they are even posting to.

So, if you log into Meta AI with Instagram, and your Instagram account is public, then so too are your searches about how to meet “big booty women.”

Much of this could have been avoided if Meta hadn't shipped an app built on the bonkers idea that people would want to see each other’s conversations with Meta AI, or if anyone at Meta had foreseen that this kind of feature would be problematic. There is a reason why Google has never tried to turn its search engine into a social media feed, and why AOL’s publication of pseudonymized users’ searches in 2006 went so badly. It’s a recipe for disaster.

AI and the Law

Disney, NBCU sue Midjourney over copyright infringement

Disney and NBCUniversal have teamed up to sue Midjourney, a generative AI company, accusing it of copyright infringement, according to a copy of the complaint obtained by Axios.

Why it matters: It's the first legal action that major Hollywood studios have taken against a generative AI company.

Hollywood's AI concerns so far have mostly been from actors and writers trying to defend their name, image and likeness from being leveraged by movie studios without a fair value trade. Now, those studios are trying to protect themselves against AI tech giants.

Zoom in: The complaint, filed in a U.S. District Court in central California, accuses Midjourney of both direct and secondary copyright infringement by using the studios' intellectual property to train its AI image-generation model and by displaying AI-generated images of their copyrighted characters.

The filing shows dozens of visual examples that it claims show how Midjourney's image generation tool produces replicas of their copyright-protected characters, such as NBCU's Minions characters, and Disney characters from movies such as "The Lion King" and "Aladdin."

Between the lines: Disney and NBCU claim that they tried to talk to Midjourney about the issue before taking legal action, but unlike other generative AI platforms that they say agreed to implement measures to stop the theft of their IP, Midjourney did not take the issue seriously.

Midjourney "continued to release new versions of its Image Service, which, according to Midjourney's founder and CEO, have even higher quality infringing images," the complaint reads. Midjourney "is focused on its own bottom line and ignored Plaintiffs' demands," it continues.

Zoom out: It's notable that Disney and NBCU, which own two of the largest Hollywood IP libraries, have teamed up to sue Midjourney.

While the Motion Picture Association represents all of Hollywood's biggest studios, its members, which also include Amazon, Netflix, Paramount Pictures, Sony and Warner Bros., have very different overall business goals.

Of note: Other creative sectors have taken similar approaches. More than a dozen major news companies teamed up to sue AI company Cohere in February. The News Media Alliance, which represents thousands of news companies, also signed onto that complaint.

What they're saying: "Our world-class IP is built on decades of financial investment, creativity and innovation — investments only made possible by the incentives embodied in copyright law that give creators the exclusive right to profit from their works," said Horacio Gutierrez, senior executive vice president, chief legal and compliance officer of The Walt Disney Company.

AI and Politics

White House AI czar on race with China: 'We've got to let the private sector cook' | FedScoop

For the U.S. to outmaneuver China in the race to be the global leader in artificial intelligence, Washington needs to trash its traditional regulatory playbook in favor of a private sector-friendly model that aims to “out-innovate the competition,” the White House’s AI and crypto czar said Tuesday.

David Sacks — a Silicon Valley venture capitalist and technologist and the first person to hold the AI czar role in the White House — said at the AWS Public Sector Summit that he sees it as his job “to be a bridge between Silicon Valley and the tech industry on the one hand, and Washington on the other, to try and bring the point of view that we have towards innovation and growth in technology to Washington.”

“We have to out-innovate the competition. We have to win on innovation. Our companies, our founders, they have to be more innovative than even our counterparts,” Sacks said. “When you look at what’s happening in Silicon Valley right now, the good news is that all the talent, all the venture capital, it’s just swarming this space.”

In China and other countries the United States is competing with, that’s not the case, the AI and crypto czar said, and Washington needs to avoid its tendency to try to control things, or it risks putting the nation in a position to lose the AI competition.

“Washington wants to control things, the bureaucracy wants to control things. That’s not a winning formula for technology development,” Sacks said.

“We’ve got to let the private sector cook,” he said.

Senate Republicans revise ban on state AI regulations in bid to preserve controversial provision - ABC News

Senate Republicans have made changes to their party's sweeping tax bill in hopes of preserving a new policy that would prevent states from regulating artificial intelligence for a decade.

In legislative text unveiled Thursday night, Senate Republicans proposed denying states federal funding for broadband projects if they regulate AI. That's a change from a provision in the House-passed version of the tax overhaul that simply banned any current or future AI regulations by the states for 10 years.

“These provisions fulfill the mandate given to President Trump and Congressional Republicans by the voters: to unleash America’s full economic potential and keep her safe from enemies,” Sen. Ted Cruz, chairman of the Senate Commerce Committee, said in a statement announcing the changes.

The proposed ban has angered state lawmakers in Democratic- and Republican-led states and alarmed some digital safety advocates concerned about how AI will develop as the technology rapidly advances. But leading AI executives, including OpenAI's Sam Altman, have made the case to senators that a “patchwork” of state AI regulations would cripple innovation.