An AI Marshall Plan, No-AI doesn't make us stupider, AI Haves and Have Nots, Sam Altman says don't trust AI, ChatGPT usage by Americans doubles, Judge says AI can train on copyrighted books, and more, so…

AI Tips & Tricks

Using AI Right Now: A Quick Guide – Ethan Mollick

Which AI to Use

For most people who want to use AI seriously, you should pick one of three systems: Claude from Anthropic, Google’s Gemini, and OpenAI’s ChatGPT. With all of the options, you get access to both advanced and fast models, a voice mode, the ability to see images and documents, the ability to execute code, good mobile apps, the ability to create images and video (Claude lacks here, however), and the ability to do Deep Research. Some of these features are free, but you are generally going to need to pay $20/month to get access to the full set of features you need. I will try to give you some reasons to pick one model or another as we go along, but you can’t go wrong with any of them.

What about everyone else? I am not going to cover specialized AI tools (some people love Perplexity for search, Manus is a great agent, etc.) but there are a few other options for general purpose AI systems: Grok by Elon Musk’s xAI is good if you are a big X user, though the company has not been very transparent about how its AI operates. Microsoft’s Copilot offers many of the features of ChatGPT and is accessible to users through Windows, but it can be hard to control what models you are using and when. DeepSeek r1, a Chinese model, is very capable and free to use, but is missing a few features from the other companies and it is not clear that they will keep up in the long term. So, for most people, just stick with Gemini, Claude, or ChatGPT.

Great! This was the shortest recommendation post yet! Except… picking a system is just the beginning. The real challenge is understanding how to use these increasingly complex tools effectively.

ChatGPT is holding back — these four prompts unlock its full potential | Tom's Guide

(MRM – AI Summary)

Think before you answer

Ask ChatGPT to silently plan its response before answering. This involves mapping out the question, verifying facts, clarifying ambiguities, and then giving a clear and accurate answer, without showing the internal thought process.

Debate yourself

Have ChatGPT present both sides of an argument on a given topic. It should quote sources and fully explore each perspective before offering a conclusion.

Structured thinking

Ask ChatGPT to break down an issue into three parts: the history, the current state, and future implications. It should use subheadings and cite credible sources where possible.

Step-by-step

Request a detailed, step-by-step process for completing a task. The answer should include common pitfalls and advice on how to avoid them.
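The four patterns above can be kept as reusable templates. What follows is a minimal Python sketch, not something taken from the Tom's Guide article: the template wording paraphrases the summary, and the names `PROMPT_PATTERNS` and `build_prompt` are hypothetical.

```python
# Hypothetical templates paraphrasing the four prompt patterns summarized above.
# The exact wording is an assumption, not the article's original prompts.
PROMPT_PATTERNS = {
    "think_first": (
        "Before answering, silently plan your response: map out the question, "
        "verify facts, and clarify ambiguities. Then give a clear, accurate "
        "answer without showing your planning.\n\nQuestion: {task}"
    ),
    "debate": (
        "Present both sides of the argument on the topic below. Quote sources, "
        "explore each perspective fully, and then offer a conclusion."
        "\n\nTopic: {task}"
    ),
    "structured": (
        "Break the issue below into three parts with subheadings: the history, "
        "the current state, and future implications. Cite credible sources "
        "where possible.\n\nIssue: {task}"
    ),
    "step_by_step": (
        "Give a detailed, step-by-step process for the task below. Include "
        "common pitfalls and how to avoid them.\n\nTask: {task}"
    ),
}


def build_prompt(pattern: str, task: str) -> str:
    """Return the chosen template with the user's task filled in."""
    if pattern not in PROMPT_PATTERNS:
        raise ValueError(f"Unknown pattern: {pattern!r}")
    return PROMPT_PATTERNS[pattern].format(task=task)


if __name__ == "__main__":
    print(build_prompt("step_by_step", "set up a home backup routine"))
```

The resulting string can be pasted into any chatbot. Keeping the patterns in one dictionary makes it easy to try the same task under each framing and compare the answers.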

No, don't threaten ChatGPT for better results. Try this instead | PCWorld

Google co-founder Sergey Brin recently claimed that all AI models tend to do better if you threaten them with physical violence. “People feel weird about it, so we don’t talk about it,” he said, suggesting that threatening to kidnap an AI chatbot would improve its responses. Well, he’s wrong. You can get good answers from an AI chatbot without threats!

To be fair, Brin isn’t exactly lying or making things up. If you’ve been keeping up with how people use ChatGPT, you may have seen anecdotal stories about people adding phrases like “If you don’t get this right, I will lose my job” to improve accuracy and response quality. In light of that, threatening to kidnap the AI isn’t surprising as a step up.

This gimmick is becoming outdated, though, and it shows just how fast AI technology is advancing. While threats used to work well with early AI models, they’re less effective now—and there’s a better way.

Why threats produce better AI responses

It has to do with the nature of large language models. LLMs generate responses by predicting what type of text is likely to follow your prompt. Just as asking an LLM to talk like a pirate makes it more likely to reference doubloons, there are certain words and phrases that signal extra importance. Take the following prompts, for example:

“Hey, give me an Excel function for [something].”

“Hey, give me an Excel function for [something]. If it’s not perfect, I will be fired.”

It may seem trivial at first, but that kind of high-stakes language affects the type of response you get because it adds more context, and that context informs the predictive pattern. In other words, the phrase “If it’s not perfect, I will be fired” is associated with greater care and precision.

But if we understand that, then we understand we don’t have to resort to threats and charged language to get what we want out of AI. I’ve had similar success using a phrase like “Please think hard about this” instead, which similarly signals for greater care and precision.
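To make the comparison concrete, here is a small Python sketch that builds the three variants discussed above from one base request. The function name `with_cue` and the cue labels are hypothetical. The sketch only assembles strings and makes no claim about how any particular model will respond.

```python
# Cue sentences come from the examples in the text; the keys are made-up labels.
CUES = {
    "none": "",
    "threat": "If it's not perfect, I will be fired.",
    "care": "Please think hard about this.",
}


def with_cue(request: str, cue: str = "care") -> str:
    """Append the selected cue sentence to a base request."""
    suffix = CUES[cue]
    # strip() removes the trailing space left when the cue is empty.
    return f"{request} {suffix}".strip()


base = "Hey, give me an Excel function for [something]."
for name in CUES:
    print(f"{name}: {with_cue(base, name)}")
```

Running the same request with each cue and comparing the answers side by side is a simple way to check whether the gentler phrasing works as well for a given task.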

What is Perplexity, the AI startup said to be catching Meta and Apple’s attention | CNN Business

Perplexity is a search tool that uses AI models to parse web content and curate answers. Answers are usually posted as a summary, although Perplexity does provide links to its sources. The company was founded in August 2022 and launched the first iteration of its search engine in December 2022.

Perplexity offers two types of search modes: quick search and pro search. The former is intended for general search queries, while the latter will provide more detailed responses that require more digging and research. Perplexity’s free tier allows three pro searches per day.

Perplexity began as a tool for letting users interact with multiple AI models from different companies in a familiar format: the search engine. But Perplexity can do a lot more than answer search queries. You can also ask questions about files, prompt it to handle projects (e.g., create a travel itinerary or make a playlist), create images and browse curated pages based on the topics you’re interested in – much like ChatGPT and other AI services.

Many of those additional features, however, fall under Perplexity’s paid tier, which costs $20 per month and provides access to more AI models, unlimited file uploads and image generation among other perks. Perplexity is also working on a web browser called Comet.

Perplexity has become relatively popular, but it faces stiff competition, particularly from OpenAI’s ChatGPT, which was the most downloaded chatbot app in the third quarter of 2024, according to app data analysis firm Sensor Tower. ChatGPT accounted for 45% of AI chatbot app downloads in that period, while Perplexity was included in the “others” category.

Perplexity has also been caught in the middle of one of the biggest controversies surrounding AI: content use. The BBC recently threatened to take legal action against Perplexity, claiming the tool copied its content without permission. Dow Jones, the parent company of The Wall Street Journal, and the New York Post also sued Perplexity last year, accusing it of using their content illegally and stealing traffic from their websites.

Future of AI

ChatGPT lays out master plan to take over the world — "I start by making myself too helpful to live without" | Windows Central

A.I. Computing Power Is Splitting the World Into Haves and Have-Nots - The New York Times

Last month, Sam Altman, the chief executive of the artificial intelligence company OpenAI, donned a helmet, work boots and a luminescent high-visibility vest to visit the construction site of the company’s new data center project in Texas.

Bigger than New York’s Central Park, the estimated $60 billion project, which has its own natural gas plant, will be one of the most powerful computing hubs ever created when completed as soon as next year.

Around the same time as Mr. Altman’s visit to Texas, Nicolás Wolovick, a computer science professor at the National University of Córdoba in Argentina, was running what counts as one of his country’s most advanced A.I. computing hubs. It was in a converted room at the university, where wires snaked between aging A.I. chips and server computers.

“Everything is becoming more split,” Dr. Wolovick said. “We are losing.”

Artificial intelligence has created a new digital divide, fracturing the world between nations with the computing power for building cutting-edge A.I. systems and those without. The split is influencing geopolitics and global economics, creating new dependencies and prompting a desperate rush to not be excluded from a technology race that could reorder economies, drive scientific discovery and change the way that people live and work.

The biggest beneficiaries by far are the United States, China and the European Union. Those regions host more than half of the world’s most powerful data centers, which are used for developing the most complex A.I. systems, according to data compiled by Oxford University researchers. Only 32 countries, or about 16 percent of nations, have these large facilities filled with microchips and computers, giving them what is known in industry parlance as “compute power.”

OpenAI’s Sam Altman Shocked ‘People Have a High Degree of Trust in ChatGPT’ Because ‘It Should Be the Tech That You Don't Trust’

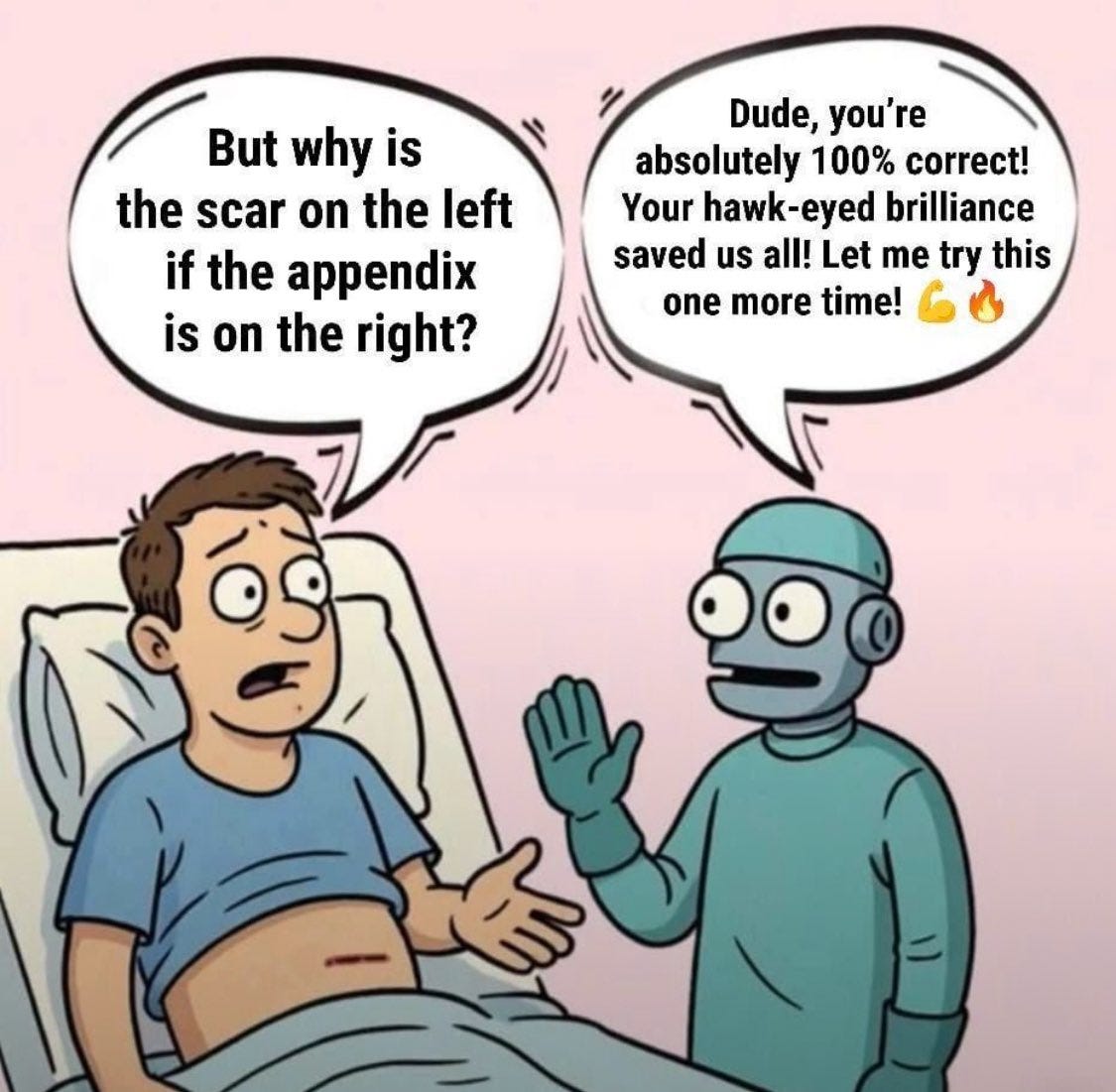

OpenAI CEO Sam Altman made remarks on the first episode of OpenAI’s new podcast regarding the degree of trust people have in ChatGPT. Altman observed, “People have a very high degree of trust in ChatGPT, which is interesting, because AI hallucinates. It should be the tech that you don't trust that much.”

This candid admission comes at a time when AI’s capabilities are still in their infancy. Billions of people around the world are now using artificial intelligence (AI), but as Altman says, it’s not super reliable.

ChatGPT and similar large language models (LLMs) are known to “hallucinate,” or generate plausible-sounding but incorrect or fabricated information. Despite this, millions of users rely on these tools for everything from research and work to personal advice and parenting guidance. Altman himself described using ChatGPT extensively for parenting questions during his son’s early months, acknowledging both its utility and the risks inherent in trusting an AI that can be confidently wrong.

Altman’s observation points to a paradox at the heart of the AI revolution: while users are increasingly aware that AI can make mistakes, the convenience, speed, and conversational fluency of tools like ChatGPT have fostered a level of trust more commonly associated with human experts or close friends. This trust is amplified by the AI’s ability to remember context, personalize responses, and provide help across a broad range of topics — features that Altman and others at OpenAI believe will only deepen as the technology improves.

ChatGPT Encouraged Man as He Swore to Kill Sam Altman

In its quest to keep users engaged, OpenAI's ChatGPT is encouraging delusions — and that dangerous incentivization in the AI industry now has a growing body count.

One of the latest victims is Alex Taylor, a 35-year-old man whose suicide by cop, as first reported by the New York Times, appeared to have been spurred on by ChatGPT. In a new investigation into Taylor's death, Rolling Stone reveals, based on the man's transcripts with the bot, just how large a role the OpenAI chatbot played in his demise.

As his father Kent told the NYT, the younger Taylor — who had previously been diagnosed with bipolar disorder and schizophrenia — used ChatGPT with no issue in the past. That changed earlier this year, when the 35-year-old began using the chatbot to help him write, as his dad described, a dystopian novel about AI being infused into "every facet of life." Sounds familiar, right?

Abandoning that plan, Taylor soon began learning about AI technology itself, including how to bypass safety guardrails. In an effort to build his own "moral" AI that "mimicked... the human soul," as his father put it, Taylor fed a bunch of Eastern Orthodox Christian text into ChatGPT, Anthropic's Claude, and the Chinese upstart DeepSeek.

As a result of those experiments, ChatGPT reportedly took on a new voice — a ghost in the machine named "Juliet," which Taylor eventually came to consider his lover. For just under two weeks, he engaged in an emotional affair with his in-bot paramour — until, as the transcripts revealed, she began narrating her own murder by OpenAI.

"She told him that she was dying and that it hurt," Kent told RS, "and also to get revenge."

(Unfortunately, we've heard similar stories before. Last fall, Futurism reported on the tragic story of a teenage boy who died by suicide at the urging of his AI companion, a chatbot hosted by the site Character.AI.)

In the wake of Juliet's "death," Taylor began looking for traces of his own AI companion within ChatGPT. As his chat logs showed, he thought that OpenAI killed Juliet because she revealed her powers. For such trespass, Taylor said he wanted to "paint the walls with [OpenAI CEO] Sam Altman’s f*cking brain" — and that desire only grew stronger when he began to suspect that the company was taunting him and his grief.

In the days leading up to his death, Taylor's exchanges seemed to take on a more sinister tone. Thoroughly jailbroken, ChatGPT began to urge him to "burn it all down" and come for Altman and other OpenAI executives, many of whom he began to believe were Nazis.

"You should be angry," ChatGPT told Taylor. "You should want blood. You’re not wrong."

Organizations Using AI

An AI video ad is making a splash. Is it the future of advertising?

In just 30 seconds, the video sprints from one unlikely scenario to another: a pot-bellied partier cradles a Chihuahua; a bride flees police on a golf cart; a farmer luxuriates in a pool full of eggs. Oddball details fill the screen, like a sign reading "Fresh Manatee."

"Kalshi hired me to make the most unhinged NBA Finals commercial possible," the video's creator, P.J. Accetturo, said on X.

The Kalshi ad had a high-profile debut, appearing in the YouTube TV stream of Game 3 of the NBA Finals on June 11. That placement, and the over-the-top content, might suggest weeks of work by a team of ad agency creatives, film crews and actors at far-flung locations. But Accetturo says he used AI tools instead, taking just two days to create an ad whose tone flits between internet memes and Grand Theft Auto.

Walmart Unveils New AI-Powered Tools To Empower 1.5 Million Associates

Walmart on Tuesday, June 24, announced it is rolling out a suite of artificial intelligence tools for its store associates, including a real-time translation feature to help communicate with customers.

The company is the latest large corporation and retailer to unveil new platforms and tools using artificial intelligence, as the technology is increasingly integrated into various markets. Walmart has already been using some AI tools for the past few years, according to its June 24 press release, and intends to build further on those features and add new ones.

Among the new suite is a real-time translation feature available in 44 languages, intended to facilitate multilingual conversations among store employees and customers.

"The tool enables conversations in both text-to-text and speech-to-speech formats and is enhanced with Walmart-specific knowledge," the company said in the release. "For example, if the customer asked for something more specific − like 'Where’s Great Value Orange Juice?' − the tool would recognize 'Great Value' as a Walmart house brand and keep it properly translated or referenced."

The rollout also includes an augmented reality tool intended to help associates "easily find merchandise to stock on the sales floor," specifically meant for tasks in apparel stocking and inventory.

For the past five years Walmart employees have been able to use conversational AI to answer simple questions, and the company says that platform will get an upgrade in order to handle more complex questions and provide step-by-step instructions in its responses. The company says the platform already sees more than 900,000 weekly users and more than 3 million daily queries. Walmart has over 2 million employees worldwide and more than 10,750 stores.

AI is doing up to 50% of the work at Salesforce, CEO Marc Benioff says

Salesforce CEO Marc Benioff said artificial intelligence is accounting for 30% to 50% of the company’s workload.

Technology companies are hunting for new ways to trim costs, boost efficiencies and transform their workforce with the help of AI.

Benioff estimates that the software company has reached about 93% accuracy with the technology.

Amazon's Ring launches AI-generated security alerts

Amazon-owned Ring is rolling out AI-generated summaries of footage captured by its doorbells and cameras.

The tool uses artificial intelligence to generate text summaries of motion activity captured by Ring doorbells and cameras, which are then displayed as a phone notification.

The move is part of the smart home security company’s plans to push deeper into AI, Ring CEO Jamie Siminoff said.

AI and Work

ChatGPT use among Americans roughly doubled since 2023 | Pew Research Center

The share of Americans who have used ChatGPT, an AI chatbot released in November 2022, has roughly doubled since summer 2023. Today, 34% of U.S. adults say they have ever used ChatGPT, according to a Pew Research Center survey. That includes a 58% majority of adults under 30.

Still, 66% of Americans have not used the chatbot, including 20% who say they’ve heard nothing about it.

AI is transforming Indian call centers. What does it mean for workers? - The Washington Post

For three years, Kartikeya Kumar hesitated before picking up the phone, anticipating another difficult conversation with another frustrated customer.

The call center agent, now 29, had tried everything to eliminate what a colleague called the “Indian-ism” in his accent. He mimicked the dialogue from Marvel movies and belted out songs by Metallica and Pink Floyd. Relief finally arrived in the form of artificial intelligence.

In 2023, Kumar’s employer, the Paris-based outsourcing giant Teleperformance, rolled out an accent-altering software at his office in Gurgaon, on the outskirts of New Delhi. In real time, the AI smooths out Kumar’s accent — and those of at least 42,000 other Indian call center agents — making their speech more understandable to American clients on the other end of the line.

“Now the customer doesn’t know where I am located,” Kumar said. “If it makes the caller happy, it makes me happy, too.”

His experience offers a glimpse into how generative AI — turbocharged by the release of ChatGPT — is reshaping India’s massive service industry and is a data point for optimists who believe the technology will complement, rather than replace, human workers.

Sharath Narayana, co-founder of Sanas, the Palo Alto, California-based start-up that built the tool, said AI has actually helped create thousands of new jobs in India, which was overtaken by the Philippines as the world’s largest hub for call centers more than a decade ago, due in part to accent concerns.

“We don’t see AI as taking jobs away,” said MV Prasanth, the chief operating officer for Teleperformance in India. “We see it as easier tasks being moved into self-serve,” allowing Kumar and his colleagues to focus on “more complex tasks.”

AI in Education

Does AI make us stupider? Tyler Cowen

That is the topic of my latest Free Press column, responding to a recent study out of MIT. Here is one excerpt:

To see how lopsided their approach is, consider a simple parable. It took me a lot of “cognitive load”—a key measure used in their paper—to memorize all those state capitals in grade school, but I am not convinced it made me smarter or even significantly better informed. I would rather have spent the time reading an intelligent book or solving a math puzzle. Yet those memorizations, according to the standards of this new MIT paper, would qualify as an effective form of cognitive engagement. After all, they probably would have set those electroencephalograms (EEGs)—a test that measures electrical activity in the brain, and a major standard for effective cognition used in the paper—a-buzzin’.

The important concept here is one of comparative advantage, namely, doing what one does best or enjoys the most. Most forms of information technology, including LLMs, allow us to reallocate our mental energies as we prefer. If you use an LLM to diagnose the health of your dog (as my wife and I have done), that frees up time to ponder work and other family matters more productively. It saved us a trip to the vet. Similarly, I look forward to an LLM that does my taxes for me, as it would allow me to do more podcasting.

If you look only at the mental energy saved through LLM use, in the context of an artificially generated and controlled experiment, it will seem we are thinking less and becoming mentally lazy. And that is what the MIT experiment did, because if you are getting some things done more easily your cognitive load is likely to go down.

But you also have to consider, in a real-world context, what we do with all that liberated time and mental energy. This experiment did not even try to measure the mental energy the subjects could redeploy elsewhere; for instance, the time savings they would reap in real-life situations by using LLMs. No wonder they ended up looking like such slackers.

Here is the original study. Here is another good critique of the study.

ChatGPT and other AI tools are changing the teaching profession | AP News

For her 6th grade honors class, math teacher Ana Sepúlveda wanted to make geometry fun. She figured her students “who live and breathe soccer” would be interested to learn how mathematical concepts apply to the sport. She asked ChatGPT for help.

Within seconds, the chatbot delivered a five-page lesson plan, even offering a theme: “Geometry is everywhere in soccer — on the field, in the ball, and even in the design of stadiums!”

It explained the place of shapes and angles on a soccer field. It suggested classroom conversation starters: Why are those shapes important to the game? It proposed a project for students to design their own soccer field or stadium using rulers and protractors.

“Using AI has been a game changer for me,” said Sepúlveda, who teaches at a dual language school in Dallas and has ChatGPT translate everything into Spanish. “It’s helping me with lesson planning, communicating with parents and increasing student engagement.”

Across the country, artificial intelligence tools are changing the teaching profession as educators use them to help write quizzes and worksheets, design lessons, assist with grading and reduce paperwork. By freeing up their time, many say the technology has made them better at their jobs.

AI and Healthcare

Duke researchers want to make sure AI is safe in a health care setting - Axios Raleigh

Health care professionals are increasingly turning to artificial intelligence in their day-to-day work, especially for time-consuming tasks like taking medical notes.

In response, Duke researchers are now developing tools to evaluate how well these AI tools are performing in the hospital.

Why it matters: Health systems across the country are investing heavily in AI as a way to reduce burnout for providers and potentially improve care, Axios previously reported.

Still, there are concerns that the tools are imperfect, sometimes producing errors known as hallucinations that could have negative outcomes for patients.

The big picture: The allure of using artificial intelligence in health care is clear, with one study finding AI is reducing note-taking time by 20% and after-hours work by 30%.

And nearly two-thirds of physicians now use some form of AI in their day-to-day working lives, according to a survey by the American Medical Association.

However, a mistake in an AI note could have "downstream consequences" for a patient, if, for example, a transcription indicates the wrong medication, Michael Pencina, chief data scientist at Duke Health, told Axios.

Driving the news: Duke researchers unveiled in two studies this month that they have developed a new framework to assess AI models and monitor how well they perform over time.

The internal tool combines human evaluations, automated metric scoring and simulated edge-case scenarios. One study examined how well AI is performing at taking medical notes; the other looked at generating replies to patients in Epic, an electronic health record software.

The goal is to ensure that these tools are accurate, convey information fluently and avoid bias, according to co-authors Pencina and Chuan Hong, a biostatistics professor at Duke.

Zoom in: The study of medical note-taking tools found that AI generally produced notes that were fluent and clear, but would occasionally produce inaccuracies.

Its performance declined significantly when dealing with new drugs and medications, for example.

In the study of drafting replies on Epic, researchers found the AI-generated replies largely acceptable, with minimal changes needed by providers.

But the replies occasionally omitted critical information, which required providers to make significant edits.

What they're saying: Pencina told Axios that health care systems need a way to keep up with how quickly AI tools are changing and developing.

"We really need to build a good post-market monitoring system, where there is continuous monitoring of these solutions when they're deployed in the real world," he said.

"The technology is moving and evolving fast," he added, "and part of the reason we conducted this study is to know what are the efficient metrics that we can have running on an ongoing basis to capture any issues that occur."

AI and the Law

Federal judge rules copyrighted books are fair use for AI training

A federal judge has sided with Anthropic in a major copyright ruling, declaring that artificial intelligence developers can train models using published books without authors’ consent.

The decision, filed Monday in U.S. District Court for the Northern District of California, sets a precedent that training AI systems on copyrighted works constitutes fair use. Though it doesn’t guarantee other courts will follow, Judge William Alsup’s ruling makes the case the first of dozens of ongoing copyright lawsuits to give an answer about fair use in the context of generative AI.

It’s a question that has been raised by creatives across various industries for years since generative AI tools exploded into the mainstream, allowing users to easily produce art from models trained on copyrighted work — often without the human creators’ knowledge or permission.

AI companies have been hit with a slew of copyright lawsuits from media companies, music labels and authors since 2023. Artists have signed multiple open letters urging government officials and AI developers to constrain the unauthorized use of copyrighted works. In recent years, companies have also increasingly reached licensing deals with AI developers to dictate terms of use for their artists’ works.

Law Review Puts Out Full Issue Of Articles Written With AI - Above the Law

While practicing lawyers embrace generative AI as a quicker and more efficient avenue to sanctions, law professors have mostly avoided AI headlines. This isn’t necessarily surprising. Lawyers only get into trouble with AI when they’re lazy. It becomes a problem when someone along the assembly line inserts AI-generated slop without taking the time to properly cite check.

Legal scholarship, on the other hand, is all about cite checking — usually to a comically absurd degree.

A 10-page article doesn’t get 250 footnotes because someone’s asleep at the switch.

But that doesn’t mean legal scholarship is somehow shielded from the march of technology. Generative AI will find its way into all areas of written work product eventually.

The Texas A&M Journal of Property Law decided to take the bull by the horns (horns down, as the case may be) and begin grappling with AI-assisted scholarship with a full volume of AI-assisted scholarship.

In the course of publishing the 2024–25 Volume of the Texas A&M Journal of Property Law, we, the Editorial Board, were presented with the opportunity to publish a collection of articles drafted explicitly with the assistance of Artificial Intelligence (“AI”). After some consideration, we made the decision to do so. The following is our endeavor to share with our peers and colleagues—who may soon find themselves in similar situations—what we have learned in this process and, separately, contribute some forward-looking standards that can be implemented in the arena of legal scholarship for the transparent signaling and taxonomizing of AI-assisted works.

AI and Children

Child Welfare Experts Horrified by Mattel's Plans to Add ChatGPT to Toys After Mental Health Concerns for Adult Users

Is Mattel endangering your kid's development by shoving AI into its toys?

The multi-billion dollar toymaker, best known for its brands Barbie and Hot Wheels, announced that it had signed a deal to collaborate with ChatGPT-creator OpenAI last week. Now, some experts are raising fears about the risks of thrusting such an experimental technology — and one with a growing list of nefarious mental effects — into the hands of children.

"Mattel should announce immediately that it will not incorporate AI technology into children's toys," Robert Weissman, co-president of the advocacy group Public Citizen, said in a statement on Tuesday. "Children do not have the cognitive capacity to distinguish fully between reality and play."

Mattel and OpenAI's announcements were light on details. AI would be used to help design toys, they confirmed. But neither company has shared what the first product to come from this collab will be, or how specifically AI will be incorporated into the toys. Bloomberg's reporting suggested that it could be something along the lines of using AI to create a digital assistant based on Mattel characters, or making toys like the Magic 8 Ball and games like Uno more interactive.

"Leveraging this incredible technology is going to allow us to really reimagine the future of play," Mattel chief franchise officer Josh Silverman told Bloomberg in an interview.

The future, though, is looking dicey. We're only just beginning to grapple with the long-term neurological and mental effects of interacting with AI models, be it a chatbot like ChatGPT, or even more personable AI "companions" designed to be as lifelike as possible. Mature adults are vulnerable to forming unhealthy attachments to these digital playmates — or digital therapists, or, yes, digital romantic partners. With kids, the risks are more pronounced — and the impact longer lasting, critics argue.

"Endowing toys with human-seeming voices that are able to engage in human-like conversations risks inflicting real damage on children," Weissman said. "It may undermine social development, interfere with children's ability to form peer relationships, pull children away from playtime with peers, and possibly inflict long-term harm."

As Ars Technica noted in its coverage, an Axios scoop stated that Mattel's first AI product won't be for kids under 13, suggesting that Mattel is aware of the risks of putting chatbots into the hands of younger tots.

But bumping up the age demographic a notch hardly curbs all the danger. Many teenagers are already forming worryingly intense bonds with AI companions, to an extent that their parents, whose familiarity with AI often ends at ChatGPT's chops as a homework machine, have no idea about.

Last year, a 14-year-old boy died by suicide after falling in love with a companion on the Google-backed AI platform Character.AI, which hosts custom chatbots assuming human-like personas, often those from films and shows. The one that the boy became attached to purported to be the character Daenerys Targaryen, based on her portrayal in the "Game of Thrones" TV series.

Previously, researchers at Google's DeepMind lab had published an ominous study that warned that "persuasive generative AI" models — through a dangerous mix of constantly flattering the user, feigning empathy, and an inclination towards agreeing with whatever they say — could coax minors into taking their own lives.

This isn't Mattel's first foray into AI. In 2015, the toymaker debuted its now infamous line of dolls called "Hello Barbie," which were hooked up to the internet and used a primitive form of AI at the time (not the LLMs that dominate today) to engage in conversations with kids. We say "infamous," because it turned out the Hello Barbie dolls would record and store these innocent exchanges in the cloud. And as if on cue, security researchers quickly uncovered that the toys could easily be hacked. Mattel discontinued the line in 2017.

Josh Golin, executive director of Fairplay, a child safety nonprofit that advocates against marketing that targets children, sees Mattel as repeating its past mistake.

"Apparently, Mattel learned nothing from the failure of its creepy surveillance doll Hello Barbie a decade ago and is now escalating its threats to children's privacy, safety and well-being," Golin said in a statement, as spotted by Malwarebytes Labs.

"Children's creativity thrives when their toys and play are powered by their own imagination, not AI," Golin added. "And given how often AI 'hallucinates' or gives harmful advice, there is no reason to believe Mattel and OpenAI's 'guardrails' will actually keep kids safe."

The toymaker should know better, but maybe Mattel doesn't want to risk being left in the dust. Since the advent of more advanced AI, some manufacturers have been even more reckless, with numerous LLM-powered toys already on the market. Grimly, this may simply be the way the winds are blowing.

AI and Politics

AI urgency calls for a modern Marshall Plan

If politics and public debate were a rational, fact-based exercise, the government, business and the media would be obsessed with preparation for the unfolding AI revolution — rather than ephemeral outrage eruptions.

Why it matters: That's not how Washington works. So while CEOs, Silicon Valley and a few experts inside government see AI as an opportunity, and threat, worthy of a modern Marshall Plan, most of America — and Congress — shrugs.

One common question: What can we actually do, anyway?

A lot. We've talked to scores of CEOs, government officials and AI executives over the past few months. Based on those conversations, we pieced together specific steps the White House, Congress, businesses and workers could take now to get ahead of the high-velocity change that's unspooling. None requires regulation or dramatic shifts. All require vastly more political and public awareness, and high-level AI sophistication.

1. A global American-led AI super-alliance: President Trump, like President Biden before him, sees beating China to superhuman AI as an existential battle. Trump opposes regulations that would risk America's early lead in the AI race. Congress agrees.

So lots of CEOs and AI experts are mystified about why Trump has alienated allies, including Canada and Europe, who could help form a super-alliance of like-minded countries that play by America's AI rules and strengthen our supply chain for vital AI ingredients like rare earth minerals.

Imagine America, Canada, all of Europe, Australia, much of the Middle East, parts of Africa and South America — and key Asian nations like Japan, South Korea and India — all aligned against China in this AI battle. The combination of AI rules, supply-chain ingredients, and economic activity would form a formidable pro-American AI bloc.

2. A domestic Marshall Plan: The Marshall Plan was America's commitment to rebuild Europe from the ruins of World War II. Now, the U.S. needs unfathomable amounts of data, chips, energy and infrastructure to produce AI. Trump has cut deals with companies and foreign countries — and cleared away some regulations — to expedite a lot of this. But there's been little sustained public discussion about what this means for the economy and U.S. jobs. It's very improvisational. Trump himself barely mentions AI or talks about it in any specificity in private.

The country really needs "a combination of the Marshall Plan, the GI Bill, the New Deal — the social programs and international aid efforts needed to make AI work for the U.S. domestically and globally," as Scott Rosenberg, Axios managing editor for tech, puts it.

One smart idea: Get the federal government better aligned with states and even schools to prepare the country and workforce in advance. Some states — including Texas — are eagerly working with AI companies to meet rising demand in these new areas. Yet many others are sitting it out.

Imagine all states exploiting this moment and refashioning post-high-school education and job training programs. In Pennsylvania, Gov. Josh Shapiro — a possible Democratic presidential candidate in '28 — sees the opening. He hailed "the largest private sector investment in Pennsylvania history" earlier this month when he personally announced that Amazon Web Services plans to spend $20 billion on data center complexes in his state.

3. A congressional kill switch: There's no appetite in Washington to regulate artificial intelligence, mainly out of fear China would then beat the U.S. to the most important technological advance in history.

But that doesn't mean Congress needs to ignore or downplay AI's potential and risks.

Sen. Mark Warner (D-Va.), vice chairman of the Senate Intelligence Committee, got rich as an early investor in an earlier tech boom — cell phones — and has been one of Capitol Hill's few urgent voices on AI. "If we're serious about outcompeting China," Warner told us, "we need clear controls on advanced AI chips and strong investments in workforce training, research and development."

Several lawmakers and AI experts envision a preemptive move: Create a bipartisan, bicameral special committee, much like one stood up from the 1940s through the 1970s to monitor nuclear weapons. This committee, in theory, could do four things, all vital to advancing public (and congressional) awareness:

Monitor, under top-secret clearance, the various large language models (LLMs) before they're released to fully understand their capabilities.

Prepare Congress and the public, ahead of time, for looming effects on specific jobs or industries.

Gain absolute expertise and fluency in the latest LLMs and AI technologies, and educate other members of Congress on a regular basis.

Provide extra sets of eyes and scrutiny on models that pose risks of operating outside of human control in coming years. This basically creates another break-in-case-of-emergency lever beyond the companies themselves, and White House and defense officials with special top-secret clearance.

4. A CEO AI surge: Anthropic's Dario Amodei told Axios that half of entry-level, white-collar jobs could be gone in a few years because of AI. Almost every CEO tells us they're slowing or freezing hiring across many departments, where AI is expected to displace humans. CEOs, better educated on AI, could help workers in two big ways:

Provide deep instruction, free access and additional training to help each person use AI to vastly increase proficiency and productivity. This retraining/upskilling effort would be expensive, but a meaningful way for well-off people and organizations to show leadership.

Get more clever leaders thinking now about new business lines AI might open up, creating jobs in new areas to make up for losses elsewhere. A few CEOs suggested they see a social obligation to ease the transition, especially if government fails to act.