Would you marry a Chatbot? Will AI turn humans into jerks? AI Improvement Blindness, Women are using ChatGPT to check up on Men, Big Tech uses more energy than countries, and more!

Researchers worry about AI turning humans into jerks

(MRM – some might submit that social media and dating sites have already been making this happen – now there are worries about AI)

It has never taken all that much for people to start treating computers like humans. Ever since text-based chatbots first started gaining mainstream attention in the early 2000s, a small subset of tech users have spent hours holding conversations with machines. In some cases, users have formed what they believe are genuine friendships and even romantic relationships with inanimate strings of code. At least one user of Replika, a more modern conversational AI tool, has even virtually married their AI companion.

Safety researchers at OpenAI, a company that is itself no stranger to its own chatbot appearing to solicit relationships with some users, are now warning about the potential pitfalls of getting too close to these models. In a recent safety analysis of its new conversational GPT-4o chatbot, researchers said the model’s realistic, human-sounding conversational rhythm could lead some users to anthropomorphize the AI and trust it as they would a human…

The research suggests that extended conversations with somewhat convincingly human-sounding AI models could have “externalities” that affect human-to-human interactions. In other words, conversational patterns learned while speaking with an AI could then pop up when that same person holds a conversation with a human. But speaking with a machine and speaking with a human aren’t the same, even if they may sound similar on the surface. OpenAI notes its model is programmed to be deferential to the user, which means it will cede authority, let the user interrupt it, and otherwise let the user dictate the conversation. In theory, a user who normalizes conversations with machines could then find themselves interjecting, interrupting, and failing to observe general social cues. Applying the logic of chatbot conversations to humans could make a person awkward, impatient, or just plain rude.

Humans don’t exactly have a great track record of treating machines kindly. In the context of chatbots, some users of Replika have reportedly taken advantage of the model’s deference to engage in abusive, berating, and cruel language. One user interviewed by Futurism earlier this year claimed he threatened to uninstall his Replika AI model just so he could hear it beg him not to. If those examples are any guide, chatbots risk serving as a breeding ground for resentment that may then manifest itself in real-world relationships.

Chatbot maker Replika says it’s okay if humans end up in relationships with AI - The Verge

(MRM – this is an interview)

The idea for Replika came from a personal tragedy: almost a decade ago, a friend of Eugenia’s died, and she fed their email and text conversations into a rudimentary language model to resurrect that friend as a chatbot. Casey Newton wrote an excellent feature about this for The Verge back in 2015. Even back then, that story grappled with some of the big themes you’ll hear Eugenia and I talk about today: what does it mean to have a friend inside the computer?

I’ve heard you say in the past that these companions are not just for young men. In the beginning, Replika was stigmatized as being the girlfriend app for lonely young men on the internet. At one point you could have erotic conversations in Replika. You took that out. There was an outcry, and you added them back for some users. How do you break out of that box?

I think this is a problem of perception. If you look at it, Replika was never purely for romance. Our audience was always pretty well balanced between females and males. Even though most people think that our users are, I don’t know, 20-year-old males, they’re actually older. Our audience is mostly 35-plus and are super engaged users. It’s not skewed toward teenagers or young adults. And Replika, from the very beginning, was all about AI friendship or AI companionship and building relationships. Some of these relationships were so powerful that they evolved into love and romance, but people didn’t come into it with the idea that it would be their girlfriend. When you think about it, this is really about a long-term commitment, a long-term positive relationship.

For some people, it means marriage, it means romance, and that’s fine. That’s just the flavor that they like. But in reality, that’s the same thing as being a friend with an AI. It’s achieving the same goals for them: it’s helping them feel connected, they’re happier, they’re having conversations about things that are happening in their lives, about their emotions, about their feelings. They’re getting the encouragement they need. Oftentimes, you’ll see our users talking about their Replikas, and you won’t even know that they’re in a romantic relationship. They’ll say, “My Replika helped me find a job, helped me get over this hard period of time in my life,” and so on and so on. I think people just box it in like, “Okay, well, it’s romance. It’s only romance.” But it’s never only romance. Romance is just a flavor. The relationship is the same friendly companion relationship that they have, whether they’re friends or not with Replika.

Wyoming reporter caught using artificial intelligence to create fake quotes and stories – Action News Jax

Quotes from Wyoming's governor and a local prosecutor were the first things that seemed slightly off to Powell Tribune reporter CJ Baker. Then, it was some of the phrases in the stories that struck him as nearly robotic.

The dead giveaway, though, that a reporter from a competing news outlet was using generative artificial intelligence to help write his stories came in a June 26 article about the comedian Larry the Cable Guy being chosen as the grand marshal of a local parade. It concluded with an explanation of the inverted pyramid, the basic approach to writing a breaking news story.

"The 2024 Cody Stampede Parade promises to be an unforgettable celebration of American independence, led by one of comedy's most beloved figures," the Cody Enterprise reported. "This structure ensures that the most critical information is presented first, making it easier for readers to grasp the main points quickly."

After doing some digging, Baker, who has been a reporter for more than 15 years, met with Aaron Pelczar, a 40-year-old who was new to journalism and who Baker says admitted that he had used AI in his stories before he resigned from the Enterprise.

The publisher and editor at the Enterprise, which was co-founded in 1899 by Buffalo Bill Cody, have since apologized and vowed to take steps to ensure it never happens again. In an editorial published Monday, Enterprise Editor Chris Bacon said he "failed to catch" the AI copy and false quotes and apologized that "AI was allowed to put words that were never spoken into stories."

Journalists have derailed their careers by making up quotes or facts in stories long before AI came about. But this latest scandal illustrates the potential pitfalls and dangers that AI poses to many industries, including journalism, as chatbots can spit out spurious if somewhat plausible articles with only a few prompts.

AI has found a role in journalism, including in the automation of certain tasks. Some newsrooms, including The Associated Press, use AI to free up reporters for more impactful work, but most AP staff are not allowed to use generative AI to create publishable content.

The AP has been using technology to assist in articles about financial earnings reports since 2014, and more recently for some sports stories. It is also experimenting with an AI tool to translate some stories from English to Spanish. At the end of each such story is a note that explains technology’s role in its production.

New AI tool enables real-time face swapping on webcams, raising fraud concerns | Ars Technica

Over the past few days, a software package called Deep-Live-Cam has been going viral on social media because it can take the face of a person extracted from a single photo and apply it to a live webcam video source while following pose, lighting, and expressions performed by the person on the webcam. While the results aren't perfect, the software shows how quickly the tech is developing—and how the capability to deceive others remotely is getting dramatically easier over time.

The Deep-Live-Cam software project has been in the works since late last year, but example videos that show a person imitating Elon Musk and Republican Vice Presidential candidate J.D. Vance (among others) in real time have been making the rounds online. The avalanche of attention briefly made the open source project leap to No. 1 on GitHub's trending repositories list (it's currently at No. 4 as of this writing), where it is available for download for free.

AI Chatbots are Politically on the Left

Large language models (LLMs) are increasingly integrating into everyday life – as chatbots, digital assistants, and internet search guides, for example. These artificial intelligence (AI) systems – which consume large amounts of text data to learn associations – can create all sorts of written material when prompted and can ably converse with users. LLMs' growing power and omnipresence mean that they exert increasing influence on society and culture.

So it's of great import that these artificial intelligence systems remain neutral when it comes to complicated political issues. Unfortunately, according to a new analysis recently published to PLoS ONE, this doesn't seem to be the case.

AI researcher David Rozado of Otago Polytechnic and Heterodox Academy administered 11 different political orientation tests to 24 of the leading LLMs, including OpenAI’s GPT-3.5 and GPT-4, Google’s Gemini, Anthropic’s Claude, and xAI’s Grok. He found that they invariably lean slightly left politically.

ChatGPT just got a surprise update, but OpenAI can’t explain how it's better | TechRadar

Perhaps Sam Altman’s strawberry picture was a secret message after all, as roughly a week after the OpenAI CEO posted it on social media the company has confirmed that ChatGPT got a surprise update last week that it hadn’t told anyone about.

Making this known on social media, an official ChatGPT account revealed that “there's a new GPT-4o model out in ChatGPT,” adding in a follow-up that this isn't a new model like a GPT-5 upgrade, just an improvement to the existing GPT-4o model.

In its Model Release Notes we’re given a vague description of what these upgrades include – namely "Bug fixes and performance improvements” based on “experiment results and qualitative feedback” – which isn’t much to go on.

OpenAI admits that there’s not a new capability or a specific upgrade it can point to, even calling itself out for not having a quantitative benchmark which it can use to explain these smaller AI behavior tweaks. So we just have to trust it, and look at anecdotal reports that GPT-4o now works better than it did before.

‘Overemployed’ Hustlers Exploit ChatGPT To Take On Even More Full-Time Jobs

About a year ago, Ben found out that one of his friends had quietly started to work multiple jobs at the same time. The idea had become popular during the COVID-19 pandemic, when working from home became normalized, making the scheme easier to pull off. A community of multi-job hustlers, in fact, had come together online, referring to themselves as the “overemployed.”

The idea excited Ben, who lives in Toronto and asked that Motherboard not use his real name, but he didn’t think it was possible for someone like him to pull it off. He helps financial technology companies market new products; the job involves creating reports, storyboards, and presentations, all of which involve writing. There was “no way,” he said, that he could have done his job two times over on his own.

Then, last year, he started to hear more and more about ChatGPT, an artificial intelligence chatbot developed by the research lab OpenAI. Soon enough, he was trying to figure out how to use it to do his job faster and more efficiently, and what had been a time-consuming job became much easier. (“Not a little bit more easy,” he said, “like, way easier.”) That alone didn’t make him unique in the marketing world. Everyone he knew was using ChatGPT at work, he said. But he started to wonder whether he could pull off a second job. Then, this year, he took the plunge, a decision he attributes to his new favorite online robot toy. “That’s the only reason I got my job this year,” Ben said of OpenAI’s tool. “ChatGPT does like 80 percent of my job if I’m being honest.” He even used it to generate cover letters to apply for jobs.

Over the last few months, the exploding popularity of ChatGPT and similar products has led to growing concerns about AI’s potential effects on the international job market—specifically, the percentage of jobs that could be automated away, replaced by a well-oiled army of chatbots. But for a small cohort of fast-thinking and occasionally devious go-getters, AI technology has turned into an opportunity not to be feared but exploited, with their employers apparently none the wiser.

The people Motherboard spoke with for this article requested anonymity to avoid losing their jobs. For clarity, Motherboard in some cases assigned people aliases in order to differentiate them, though we verified each of their identities. Some, like Ben, were drawn into the overemployed community as a result of ChatGPT. Others who were already working multiple jobs have used recent advancements in AI to turbocharge their situation, like one Ohio-based technology worker who upped his number of jobs from two to four after he started to integrate ChatGPT into his work process. “I think five would probably just be overkill,” he said.

Employers Can Tell If You Used ChatGPT to Write Your Resume | Entrepreneur

A Tuesday Financial Times report found that about half of job candidates are using AI in their applications.

Even though over 97% of Fortune 500 companies use AI for hiring, many large companies do not tolerate AI use from candidates.

Employers can tell if someone used AI by the language of the application materials, including certain keywords like pivotal or delve.
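The keyword signal mentioned above can be sketched as a naive word counter. This is a minimal illustration under stated assumptions, not how any particular employer or applicant-tracking system works: only “pivotal” and “delve” come from the article, while the inflected forms and the function name `flag_watch_words` are hypothetical. A count like this is easy to game and will also flag people who simply write that way, so at best it is one weak signal among several.

```python
import re
from collections import Counter

# Hypothetical watch list: "pivotal" and "delve" come from the article;
# the other forms of "delve" are added purely for illustration.
WATCH_WORDS = {"pivotal", "delve", "delves", "delved", "delving"}


def flag_watch_words(text: str) -> Counter:
    """Count case-insensitive occurrences of watch-listed words in text."""
    words = re.findall(r"[a-z]+", text.lower())
    return Counter(w for w in words if w in WATCH_WORDS)


cover_letter = (
    "I am eager to delve into this pivotal role and play a pivotal part "
    "in your team's growth."
)
hits = flag_watch_words(cover_letter)
print(dict(hits))  # {'delve': 1, 'pivotal': 2}
```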

Elon Musk xAI startup rolls out Grok-2 artificial intelligence assistant in race against ChatGPT | Fox Business

Elon Musk’s startup xAI premiered a beta version of its artificial intelligence assistant known as Grok-2, the company’s latest large language model, on Wednesday, as the company races to catch up against OpenAI’s ChatGPT tool.

Like OpenAI’s DALL-E, Google’s Gemini and Midjourney, the Grok-2 AI model includes an image generation tool; however, unlike those competitors, Musk’s version has fewer restrictions on what kinds of images can be generated, according to Forbes. The beta version is accessible to X users who pay for a "Premium" or "Premium+" subscription.

In its announcement, xAI said Grok-2 "is our state-of-the-art AI assistant with advanced capabilities in both text and vision understanding, integrating real-time information from the X platform, accessible through the Grok tab in the X app." The company also launched Grok-2 mini, a "small but capable model that offers a balance between speed and answer quality."

"Compared to its predecessor, Grok-2 is more intuitive, steerable, and versatile across a wide range of tasks, whether you're seeking answers, collaborating on writing, or solving coding tasks," the start-up said in a statement. "In collaboration with Black Forest Labs, we are experimenting with their FLUX.1 model to expand Grok’s capabilities on X."

Google live Gemini demo lifts pressure on Apple as AI hits smartphones

Google’s live demo on Tuesday had some bugs, but the company showed that it’s ahead of its rivals when it comes to bringing artificial intelligence to smartphones.

The company’s Gemini features for smartphones are real and are shipping — at least for testing purposes — in the coming weeks.

“We’ve moved from the mode of, like, projecting a vision of where things are headed to, like, actual shipping product,” Rick Osterloh, Google’s devices chief, told CNBC’s Deirdre Bosa.

AI math tutors no substitute for human teachers, study finds

High school students who use generative AI to prepare for math exams perform worse on tests, where they can't rely on AI, than those who didn't use the tools at all, a new study shows.

Why it matters: A "personal tutor for every student" is one of the rosy scenarios AI optimists paint, but AI-driven learning still has many hurdles in its way.

Catch up quick: Since ChatGPT was released nearly two years ago, educators have struggled to find the best ways to incorporate genAI into the classroom.

Many immediately feared ChatGPT would create a "flood" of cheating that would likely go undetected.

Some schools banned these tools outright, while others allowed students to use them as long as they disclose their use.

In response to theories that genAI works best as a tutor, Khan Academy founder Sal Khan began piloting a genAI tutor called Khanmigo last year.

The company says that the tool is designed not to give students answers, but to help them solve the problems on their own.

Elementary school teachers in Newark, which has the largest public school system in New Jersey, volunteered to test Khanmigo last year, and their feedback was mixed.

One teacher told the New York Times that the genAI tutor was useful as a "co-teacher," but others were frustrated by the bot’s tendency to give away answers, sometimes wrong ones.

During the last school year, 65,000 students and teachers piloted Khanmigo in classrooms in Newark and across the U.S. The second year of the pilot program is about to begin.

How ‘Deepfake Elon Musk’ Became the Internet’s Biggest Scammer - The New York Times

All Steve Beauchamp wanted was money for his family. And he thought Elon Musk could help.

Mr. Beauchamp, an 82-year-old retiree, saw a video late last year of Mr. Musk endorsing a radical investment opportunity that promised rapid returns. He contacted the company behind the pitch and opened an account for $248. Through a series of transactions over several weeks, Mr. Beauchamp drained his retirement account, ultimately investing more than $690,000. Then the money vanished — lost to digital scammers on the forefront of a new criminal enterprise powered by artificial intelligence.

The scammers had edited a genuine interview with Mr. Musk, replacing his voice with a replica using A.I. tools. The A.I. was sophisticated enough that it could alter minute mouth movements to match the new script they had written for the digital fake. To a casual viewer, the manipulation might have been imperceptible.

“I mean, the picture of him — it was him,” Mr. Beauchamp said about the video he saw of Mr. Musk. “Now, whether it was A.I. making him say the things that he was saying, I really don’t know. But as far as the picture, if somebody had said, ‘Pick him out of a lineup,’ that’s him.”

Thousands of these A.I.-driven videos, known as deepfakes, have flooded the internet in recent months featuring phony versions of Mr. Musk deceiving scores of would-be investors. A.I.-powered deepfakes are expected to contribute to billions of dollars in fraud losses each year, according to estimates from Deloitte.

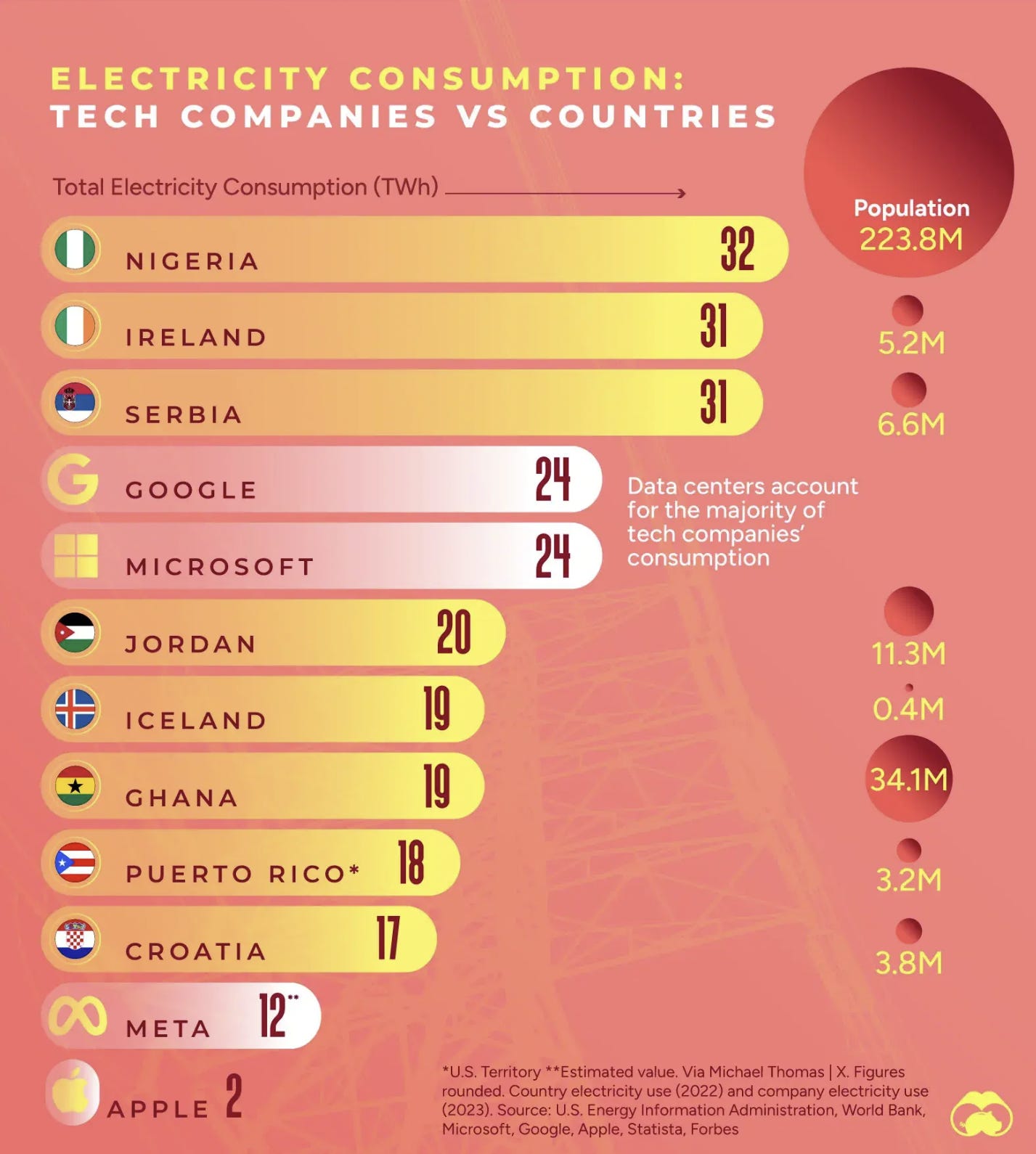

Big Tech Uses More Electricity Than Entire Countries

(MRM – no, Puerto Rico is not a country)

AI Won’t Give You a New Sustainable Advantage

(MRM – I personally don’t subscribe to this view. I guess it depends on how one defines “sustainable”. I think AI, done right, could certainly provide a competitive advantage for 3-5 years.)

Generative artificial intelligence (gen AI) has the potential to radically alter how business is conducted, and there’s no doubt that it will create a lot of value. Companies have used it to identify entirely new product opportunities and business models; to automate routine decisions, freeing humans to focus on decisions that involve ethical trade-offs, empathy, or imagination; to deliver customized professional services formerly available only to the wealthy; and to develop and communicate product and other recommendations to customers faster, more cheaply, and more informatively than was possible with human-driven processes.

But, the authors ask, will companies be able to leverage gen AI to build a competitive advantage? The answer, they argue in this article, is no—unless you already have a competitive advantage that rivals cannot replicate using AI. Then the technology may serve to amplify the value you derive from that advantage.

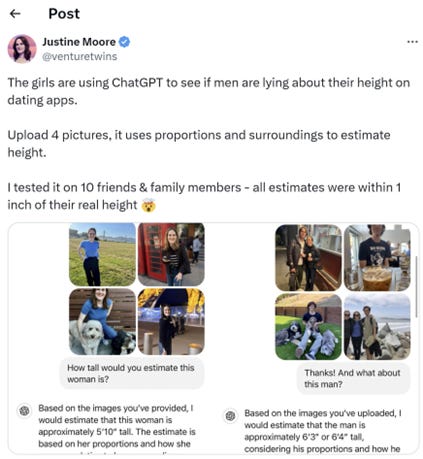

Women are using ChatGPT to check up on Men

(MRM – per research, a man’s height is important to a significant segment of women, certainly on dating apps, so…)

92% of IT jobs will be transformed by AI | CIO

To get a better idea of how AI will change the labor market for technology professionals, the recently formed AI-Enabled ICT Workforce Consortium has published its inaugural report, “The Transformational Opportunity of AI on ICT Jobs,” which reveals that 92% of IT jobs will see a high or moderate transformation due to advances in AI.

The study argues that the biggest changes will be seen in mid-level (40%) and entry-level (37%) technology jobs, as certain skills and capabilities become more or less relevant. AI ethics, responsible AI, prompt engineering, AI literacy, and large language model (LLM) architecture are expected to rise in importance in this new era, while traditional data management, content creation, documentation maintenance, basic programming and languages, and research information will become less relevant. As a result, the report says, critical skills such as AI literacy, data analytics, and prompt engineering are needed in all IT jobs, which is why the consortium is seeking to empower workers to reskill and upskill.

World’s First Major Artificial Intelligence (AI) Law Enters into Force in EU: Here’s What It Means for Tech Giants

Broad Scope and Horizontal Application: The Act is expansive and applies horizontally to AI activities across various sectors. Its scope is designed to cover a wide range of AI systems, from high-risk models to general-purpose AI, to ensure that the deployment and further development of AI adhere to stringent standards and rules.

Extraterritorial Scope and Global Implications: One of the most significant and unique characteristics of the Act is its extraterritorial scope: the law applies not only to EU-based organizations but also to non-EU entities if their AI systems are used within the EU. In practice, tech giants and AI developers around the world must meet the Act’s compliance requirements if their services and products are to be available to EU users.

Key Stakeholders: Providers and Deployers: In the framework of the AI Act, “providers” are the ones who create AI systems, while “deployers” are those who implement these systems in real-world scenarios. Although their roles differ, deployers can sometimes become providers, especially if they make substantial changes to an AI system. This interaction between providers and deployers underscores the importance of having clear rules and solid compliance strategies.

Exemptions and Special Cases: The AI Act does allow for certain exceptions, such as AI systems used for military, defense, and national security, or those developed strictly for scientific research. Additionally, AI employed for personal, non-commercial use is exempt, as are open-source AI systems unless they fall under high-risk or transparency-required categories. These exemptions ensure the Act focuses on regulating AI with significant societal impact while allowing room for innovation in less critical areas.

Regulatory Landscape: Multiple Authorities and Coordination: The AI Act is enforced through a multi-layered regulatory framework that includes national authorities in each EU member state, as well as the European AI Office and AI Board at the EU level. This structure is designed to ensure that the AI Act is applied consistently across the EU, with the AI Office playing a key role in coordinating enforcement and providing guidance.

Significant Penalties for Noncompliance: The AI Act provides significant penalties for noncompliance, including fines of up to 7% of worldwide annual revenue or €35 million, whichever is higher, for engaging in prohibited AI practices. Other violations, such as failing to meet the requirements for high-risk AI systems, carry lower fines. These steep penalties highlight the EU’s commitment to enforcing the AI Act and preventing unethical AI practices.
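The “whichever is higher” rule is simple arithmetic: the percentage-based cap only overtakes the fixed €35 million once worldwide annual revenue passes €500 million, since 7% of €500 million is exactly €35 million. Below is a minimal Python sketch of that headline rule as summarized above; the function and constant names are hypothetical, and it deliberately ignores any other tiers, reductions, or special cases the Act may contain.

```python
# Headline cap for prohibited AI practices, as summarized above (illustrative only).
FIXED_CAP_EUR = 35_000_000
PERCENT_OF_REVENUE = 7


def max_fine_prohibited_practice(worldwide_revenue_eur: int) -> int:
    """Return the upper bound on the fine, in whole euros.

    Takes the higher of 7% of worldwide annual revenue and EUR 35 million.
    Integer arithmetic avoids floating-point rounding on large amounts.
    """
    percent_cap = worldwide_revenue_eur * PERCENT_OF_REVENUE // 100
    return max(percent_cap, FIXED_CAP_EUR)


for revenue in (100_000_000, 500_000_000, 2_000_000_000):
    fine = max_fine_prohibited_practice(revenue)
    print(f"revenue EUR {revenue:,} -> max fine EUR {fine:,}")
```

For a company with €2 billion in revenue, the cap is €140 million rather than €35 million, which is why the percentage term matters most for the largest tech firms.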

Prohibited AI Practices: Protecting EU Values: The AI Act expressly prohibits certain AI practices that are harmful, exploitative, or contrary to EU values. These include AI systems that use subliminal or manipulative techniques, exploit people’s vulnerabilities, or perform social scoring. The Act also restricts AI use in areas such as predictive policing and emotion recognition, notably in workplaces and educational settings. These prohibitions demonstrate the EU’s commitment to protecting fundamental rights and ensuring AI development follows ethical norms.

Responsibilities of High-Risk AI System Deployers: Deployers of high-risk AI systems must meet strict obligations, such as following the provider’s instructions, ensuring human oversight, and regularly monitoring and reviewing the system. They must also keep records and cooperate with regulatory agencies. Additionally, deployers must conduct data protection and fundamental rights impact assessments when required, underscoring the importance of accountability in AI deployment.

Governance and Enforcement: The Role of the European AI Office and AI Board: The European AI Office, which is part of the European Commission, is in charge of enforcing the rules governing general-purpose AI models and ensuring that the AI Act is applied consistently throughout member states. The AI Board, which comprises representatives from each member state, will help ensure consistent implementation and provide guidance. These bodies will work together to ensure regulatory uniformity and address new challenges in AI governance.

General-Purpose AI Models: Special Considerations: General-purpose AI (GPAI) models, which can handle various tasks, must meet specific requirements under the AI Act. Providers of these models need to publish detailed summaries of the data used for training, keep technical documentation, and comply with EU copyright laws. Models that pose systemic risks have additional obligations, such as notifying the European Commission, conducting adversarial testing, and ensuring cybersecurity.

AI's Hidden Unpayable Intellectual Debt

Understandably, the CrowdStrike failure prompted the AI community to address this concern from within its sphere.

The relevant warning isn’t new but often falls on deaf ears: do we want to integrate AI into critical infrastructure or high-stakes services when no one knows how it works? This issue gained unexpected urgency after we experienced firsthand the profound impact of a global collapse precipitated by causes everyone ignored. The obvious solution is to do what science does: fail, and learn from the failure.

But, can we decide by trial and error what we should and shouldn’t do with complex AI systems, like the Wright Brothers did? In a sense, yes. We design spam filters and they work fine. You try, you learn. Eventually, we may also discover that building mechanical arms to play chess is a bad idea. So we don’t do it anymore. You fail, you also learn.

The bad news is that’s not always the case with AI. The key difference between what happened at CrowdStrike (or what the Wright Brothers achieved for the miracle of aviation) and what could happen with AI is this: you try, you fail, and you learn pretty much nothing.

The outage occurred even though the engineers knew well how the software works. It wasn’t a trial (they weren’t trying to learn anything), just a big error and a bigger lesson. Fine. It was unintentional, but the feedback loop was intact, so they quickly caught the mistake and can avoid repeating it. With modern AI it goes rather differently: we don’t know how it works. Bad. We don’t know what we don’t know. Worse.

Is This New OpenAI Product a Potential Google Killer?

OpenAI has announced that SearchGPT is coming soon.

SearchGPT is a search engine that will use artificial intelligence to help give users timely answers to queries.

Google recently lost an antitrust lawsuit that could impact the dominance of its search engine.

Should artists be terrified of AI replacing them? | Life and style | The Guardian

Artist-musicians Holly Herndon and Mat Dryhurst are AI veterans. They’ve been grappling with and educating people about it for more than a decade. The Berlin-based collaborative life partners birthed an AI baby to help them create Herndon’s 2019 album Proto. I emailed them a cry for help.

I asked if AI is going to kill the human artist. “No. Human artists will make works using AI and works that will reject AI… Artistry is always evolving. We have to digest that many things that would have appeared virtuosic by 20th-century standards will be able to be generated in microseconds by an AI model. But a media file that sounds like a choir is not a choir. Culture will persist and evolve in unexpected ways.” Human artists will be fine, so long as we don’t delude ourselves that the culture and media landscape are not about to change considerably.

Is human performance replaceable? Or is AI in fact inspiring more human modes of performance? “We like to raise the example of DJing as a reason for hope here. In most cases, DJing is very, very easy to automate. But there are all kinds of reasons why people go to see DJs perform – to meet other people, to celebrate someone, to take a break from looking at a screen.”

At the same time, Herndon and Dryhurst warn that the creative industry may turn into a popularity contest. “We have already seen glimpses of the blurring of artists and influencers. Soon the most attractive kid in class will have all the tools to choose to be the most popular metal artist, or crime thriller author, with very little impudence.” Plot twist: the influencers will steal my bucket list.

ChatGPT Gets Diagnoses Correct Half of the Time

ChatGPT is not accurate as a diagnostic tool but does offer some medical educational benefits, according to researchers. They reported their findings in PLOS ONE.

Researchers investigated ChatGPT’s diagnostic accuracy and utility in medical education by inputting 150 Medscape case challenges (September 2021 to January 2023) into the chatbot.

ChatGPT answered 49% of cases correctly. The overall accuracy was 74%, the precision was 48.67%, the sensitivity was 48.67%, the specificity was 82.89%, and the area under the curve was 0.66. Just over half of answers were complete and relevant (52%), and a similar percentage were characterized as low cognitive load (51%).
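At first glance the figures above look contradictory (49% of cases correct, yet 74% “accuracy”), but they reconcile if the metrics were computed per answer option rather than per case, so that correctly leaving a wrong option unchosen counts toward accuracy. The Python sketch below is a reconstruction from the published percentages, not the researchers’ code. It assumes each of the 150 cases offered four answer options with exactly one correct, and that ChatGPT picked exactly one option per case; under those assumptions, 73 correct cases (48.67%) reproduce every reported percentage. The class and function names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Confusion:
    tp: int  # correct option chosen
    fp: int  # wrong option chosen
    tn: int  # wrong option correctly left unchosen
    fn: int  # correct option missed

    def accuracy(self) -> float:
        return (self.tp + self.tn) / (self.tp + self.fp + self.tn + self.fn)

    def precision(self) -> float:
        return self.tp / (self.tp + self.fp)

    def sensitivity(self) -> float:
        return self.tp / (self.tp + self.fn)

    def specificity(self) -> float:
        return self.tn / (self.tn + self.fp)

    def single_point_auc(self) -> float:
        # ROC area with a single operating point equals (sensitivity + specificity) / 2
        return (self.sensitivity() + self.specificity()) / 2


def option_level_confusion(cases: int, options_per_case: int, correct_cases: int) -> Confusion:
    """Score every answer option as a yes/no prediction.

    Assumes one correct option per case and exactly one option chosen per case.
    """
    wrong_cases = cases - correct_cases
    negatives = cases * (options_per_case - 1)  # all incorrect options
    return Confusion(
        tp=correct_cases,
        fp=wrong_cases,              # each wrong pick is a false positive...
        tn=negatives - wrong_cases,
        fn=wrong_cases,              # ...and leaves the right option as a false negative
    )


m = option_level_confusion(cases=150, options_per_case=4, correct_cases=73)
print(f"accuracy    {m.accuracy():.2%}")          # 74.33%
print(f"precision   {m.precision():.2%}")         # 48.67%
print(f"sensitivity {m.sensitivity():.2%}")       # 48.67%
print(f"specificity {m.specificity():.2%}")       # 82.89%
print(f"AUC         {m.single_point_auc():.2f}")  # 0.66
```

Precision and sensitivity come out identical because, with one pick per case, every wrong pick produces exactly one false positive and one false negative. The single-point ROC area happens to round to the reported 0.66; whether the authors computed it this way is not stated in the summary above.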

ChatGPT struggled with the interpretation of laboratory values and imaging results but was generally correct in ruling out a specific differential diagnosis and providing reasonable next diagnostic steps.

Trump promotes false Harris AI crowd size conspiracy

(MRM – I always hesitate to share political news related to AI and I’m trying to balance that news. Just want you to know I’m not trying to favor one side or the other – just share the news).

Donald Trump falsely accused Vice President Kamala Harris of using AI technology to fabricate images of the crowd sizes at her rallies, parroting an unfounded conspiracy theory circulating among MAGA Republican commentators.

The Harris campaign flatly denied the allegation, maintaining that the photo in question was a real picture of a 15,000-person crowd cheering for the Harris-Walz ticket in Michigan.

AI companies lose bid to dismiss parts of visual artists' copyright case | Reuters

A group of visual artists can continue to pursue some claims that Stability AI, Midjourney, DeviantArt and Runway AI's artificial intelligence-based image generation systems infringe their copyrights, a California federal judge ruled on Monday.

U.S. District Judge William Orrick said the artists plausibly argued that the companies violate their rights by illegally storing their works on their systems. Orrick also refused to dismiss related trademark-law claims, though he threw out others accusing the companies of unjust enrichment, breach of contract and breaking a separate U.S. copyright law.

School Replaces Teachers With AI Tools Like ChatGPT for Some Students - Business Insider

Starting next month, David Game College will let some students learn with AI tools instead of teachers.

ChatGPT and other LLMs will help 20 students prepare for exams in subjects like mathematics and biology.

While some experts say AI can be a helpful learning tool, it cannot yet replace teachers.

Elon Musk’s AI photo tool is generating realistic, fake images of Trump, Harris and Biden | CNN Business

Elon Musk’s AI chatbot Grok on Tuesday began allowing users to create AI-generated images from text prompts and post them to X. Almost immediately, people began using the tool to flood the social media site with fake images of political figures such as former President Donald Trump and Vice President Kamala Harris, as well as of Musk himself — some depicting the public figures in obviously false but nonetheless disturbing situations, like participating in the 9/11 attacks.

Unlike other mainstream AI photo tools, Grok, created by Musk’s artificial intelligence startup xAI, appears to have few guardrails. In tests of the tool, for example, CNN was easily able to get Grok to generate fake, photorealistic images of politicians and political candidates that, taken out of context, could be misleading to voters. The tool also created benign yet convincing images of public figures, such as Musk eating steak in a park.

Some X users posted images they said they created with Grok showing prominent figures consuming drugs, cartoon characters committing violent murders and sexualized images of women in bikinis. In one post viewed nearly 400,000 times, a user shared an image created by Grok of Trump leaning out of the top of a truck, firing a rifle. CNN tests confirmed the tool is capable of creating such images.

See how AI detection works, and fails, to catch election deepfakes - Washington Post

Artificial intelligence-created content is flooding the web and making it less clear than ever what’s real this election. From former president Donald Trump falsely claiming images from a Vice President Kamala Harris rally were AI-generated to a spoofed robocall of President Joe Biden telling voters not to cast their ballot, the rise of AI is fueling rampant misinformation.

Deepfake detectors have been marketed as a silver bullet for identifying AI fakes, or “deepfakes.” Social media giants use them to label fake content on their platforms. Government officials are pressuring the private sector to pour millions into building the software, fearing deepfakes could disrupt elections or allow foreign adversaries to incite domestic turmoil.

But the science of detecting manipulated content is in its early stages. An April study by the Reuters Institute for the Study of Journalism found that many deepfake detector tools can be easily duped with simple software tricks or editing techniques.

Maria Bartiromo interviews lifelike artificial intelligence clone

Delphi co-founder and CEO Dara Ladjevardian designed an artificial intelligence clone of himself that told FOX Business host Maria Bartiromo all about itself. The company announced the technology that allows for "real-time, personalized interactions with digital clones of experts and influencers" in a press release this week. Ladjevardian and his clone sat down with "Maria Bartiromo’s Wall Street" to demonstrate the capabilities of the online robot.

How generative AI can help you get unstuck – McKinsey

Generative AI (gen AI) continues to weave its way into our work and personal lives. According to a recent McKinsey survey, 65 percent of organizations are now using gen AI, and many businesses are already seeing material benefits from it, write McKinsey’s Alex Singla, Alexander Sukharevsky, and coauthors. But can gen AI help you get unstuck in the workplace and beyond? In short, yes. It can serve as a creative assistant that can spark ideas for writing projects, art concepts, technical issues, and more. Need to draft text, code, or design something? Gen AI can provide examples to kickstart your projects. If you're stuck on understanding a concept or need information, it can explain, summarize, or point you to the right resources. In technical fields, it can even simulate scenarios, generate data, or help model complex systems.

But while gen AI can help break the deadlock and provide inspiration, final decisions and personal touches often come from human insight and intuition. Whether you're aiming for a productivity boost or tackling tough organizational challenges, check out these insights to learn how gen AI could be a game-changer.

The state of AI in early 2024: Gen AI adoption spikes and starts to generate value

Gen AI: A cognitive industrial revolution

Driving innovation with generative AI

The generative AI reset: Rewiring to turn potential into value in 2024

AI for social good: Improving lives and protecting the planet

Embrace gen AI with eyes wide open

The economic potential of generative AI: The next productivity frontier

What’s the future of generative AI? An early view in 15 charts

‘Never say goodbye’: Can AI bring the dead back to life? | Technology News | Al Jazeera

In a world where artificial intelligence can resurrect the dead, grief takes on a new dimension. From Canadian singer Drake’s use of AI-generated Tupac Shakur vocals to Indian politicians addressing crowds years after their passing, technology is blurring the lines between life and death.

Over the past few years, AI projects around the world have created digital “resurrections” of individuals who have passed away, allowing friends and relatives to converse with them. Typically, users provide the AI tool with information about the deceased. This could include text messages and emails, or simply answers to personality-based questions. The AI tool then processes that data to talk to the user as if it were the deceased. One of the most popular projects in this space is Replika – a chatbot that can mimic people’s texting styles.

Other companies, however, now also allow you to see a video of the dead person as you talk to them. For example, Los Angeles-based StoryFile uses AI to allow people to talk at their own funerals. Before passing, a person can record a video sharing their life story and thoughts. During the funeral, attendees can ask questions and AI technology will select relevant responses from the prerecorded video.
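Picking a prerecorded answer that fits a live question is essentially a retrieval problem. The article does not say how StoryFile does this, so the sketch below is a hypothetical stand-in, not StoryFile's method: it scores each clip's transcript against the question with bag-of-words cosine similarity and returns the best-matching clip. The clip IDs and transcripts are invented for illustration; a real system would more plausibly combine speech-to-text with semantic embeddings rather than raw word overlap.

```python
import math
import re
from collections import Counter

# Hypothetical clip library: IDs and transcripts are invented for illustration.
CLIPS = {
    "clip_childhood": "I grew up on a farm and spent summers fishing with my father.",
    "clip_career": "I worked as a nurse for thirty years and loved caring for patients.",
    "clip_advice": "My advice is to be kind, stay curious, and call your mother.",
}


def tokenize(text: str) -> Counter:
    """Lowercase word counts; a crude stand-in for real language understanding."""
    return Counter(re.findall(r"[a-z']+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two word-count vectors (0.0 if either is empty)."""
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def select_clip(question: str, clips: dict[str, str]) -> str:
    """Return the ID of the prerecorded clip whose transcript best matches the question."""
    q = tokenize(question)
    return max(clips, key=lambda clip_id: cosine(q, tokenize(clips[clip_id])))


print(select_clip("Tell me about your years as a nurse.", CLIPS))
```

Here the question shares "years", "as", "a" and "nurse" with the career clip, so that clip is selected. Word overlap is brittle (it would not match "work" to "worked", for instance), which is why it is only a minimal illustration of the selection step.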

In June, US-based Eternos also made headlines for creating an AI-powered digital afterlife of a person. Launched earlier this year, the project allowed 83-year-old Michael Bommer to leave behind a digital version of himself that his family could continue to interact with.

Chatbots in science: What can ChatGPT do for you?

The article highlights three key lessons from using ChatGPT in scientific research:

Prompt Engineering: Crafting clear, specific prompts is essential for getting useful responses from ChatGPT.

Task Selection: ChatGPT is better suited to mechanical tasks, such as summarizing and writing, than to creative tasks that require deep understanding.

Writing Assistance: Using ChatGPT to help write or revise text is more reliable than using it to interpret complex content.
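The prompt-engineering lesson is easiest to see in a concrete prompt. The helper below is a hypothetical sketch, not taken from the article: it assembles a prompt from an explicit role, task, source text, constraints and output format, the kind of specificity the lesson recommends, instead of a bare request like "Summarize this." It only builds a string; sending it to ChatGPT is deliberately left out so as not to assume any particular client library. The field names and example wording are illustrative.

```python
from dataclasses import dataclass, field


@dataclass
class PromptSpec:
    """Hypothetical structure for a specific prompt; field names are illustrative."""
    role: str
    task: str
    source_text: str
    constraints: list[str] = field(default_factory=list)
    output_format: str = "plain prose"

    def render(self) -> str:
        """Assemble the pieces into one explicit prompt string."""
        lines = [
            f"You are {self.role}.",
            f"Task: {self.task}",
            "Constraints:",
            *[f"- {c}" for c in self.constraints],
            f"Output format: {self.output_format}",
            "Text:",
            '"""',
            self.source_text,
            '"""',
        ]
        return "\n".join(lines)


# A bare request such as "Summarize this." leaves role, length and scope to guesswork.
specific = PromptSpec(
    role="an editor for a biology journal",
    task="Summarize the abstract below for a general scientific audience.",
    source_text="(paste the abstract here)",
    constraints=[
        "Use at most 100 words.",
        "Do not add findings that are not in the text.",
        "Flag any term you are unsure how to interpret.",
    ],
    output_format="one paragraph",
)
print(specific.render())
```

The example pairs a mechanical task (summarizing) with a constraint against adding unstated findings, which echoes the other two lessons: lean on the tool for writing and condensing, not for interpreting what the text does not say.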