Another busy week in the AI world: OpenAI has a new release (o1, aka "Strawberry") that can reason, Apple’s AI debuts, AI agents are all the rage, AI CEOs meet with the White House, and Tyler Cowen wonders “how weird will AI culture get?”

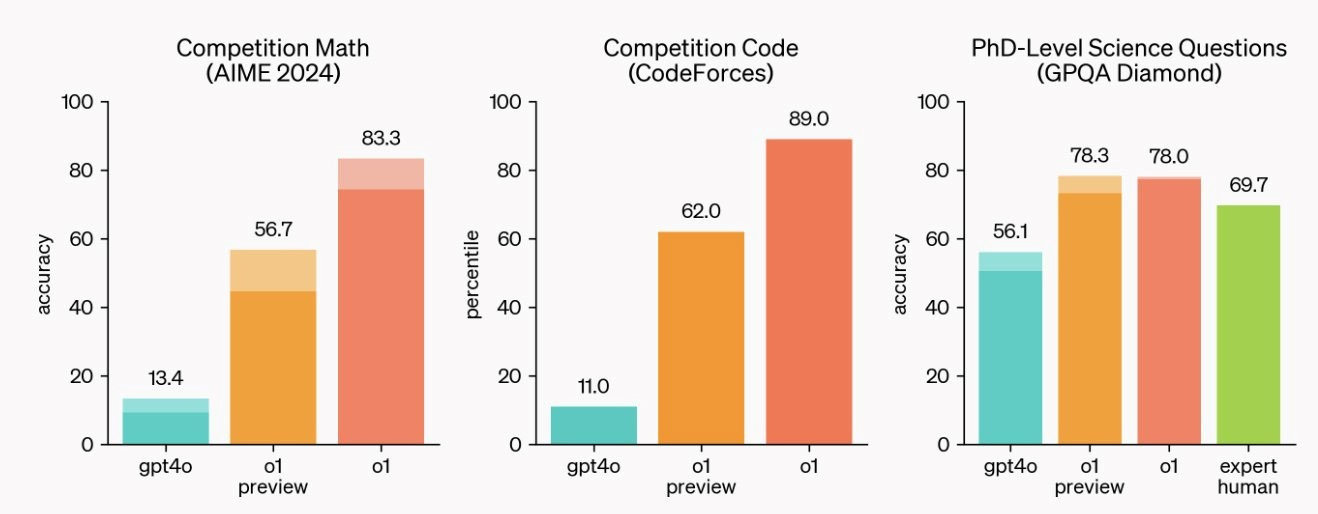

OpenAI Unveils New ChatGPT (o1 – aka “Strawberry”) That Can Reason Through Math and Science

OpenAI's new model is capable of complex reasoning, working through problems more like a human would, Axios' Ina Fried reports.

The new tool, called o1, is better at complex math, science and coding questions than previous iterations, and it will also do a better job explaining how it reaches its conclusions, the company said.

🧠 How it works: OpenAI trained o1 — which had been code-named "Strawberry" before its launch — through a lengthy process of trial-and-error problem-solving, The New York Times explains.

Ultimately, the goal is to create systems that work through problems one step at a time, with each step informing the next one. That type of reasoning should reduce errors and help AI perform more complex tasks.

"The model sharpens its thinking and fine tunes the strategies that it uses to get to the answer," Mira Murati, OpenAI's chief technology officer, told Wired.

⌨️ What's next: Some paid ChatGPT subscribers will have access to o1 beginning today, and OpenAI also plans to sell a lightweight version specifically to help people write code.

Strawberry Alarm Clock!

Deep Prasad writes: OpenAI just released an AI as smart as most PhDs in physics, mathematics and the life sciences. Wake up. The world will never be the same and in many ways, it will be unrecognizable a decade from now.

Mckay Wrigley is enthusiastic: o1’s ability to think, plan, and execute is off the charts.

Ethan Mollick says: There are a lot of milestones that AI passed today. Gold medal at the Math Olympiad among them.

Like Ethan, though, I don't think the model is necessarily better at many non-reasoning tasks. Ethan also notes that AGI will be jagged and uneven.

Something New: On OpenAI's "Strawberry" and Reasoning – Ethan Mollick

I have had access to the much-rumored OpenAI “Strawberry” enhanced reasoning system for a while, and now that it is public, I can finally share some thoughts. It is amazing, still limited, and, perhaps most importantly, a signal of where things are heading.

The new AI model, called o1-preview (why are the AI companies so bad at names?), lets the AI “think through” a problem before solving it. This lets it address very hard problems that require planning and iteration, like novel math or science questions. In fact, it can now beat human PhD experts in solving extremely hard physics problems.

To be clear, o1-preview doesn’t do everything better. It is not a better writer than GPT-4o, for example. But for tasks that require planning, the changes are quite large.

Introducing PaperQA2, the first AI agent that conducts entire scientific literature reviews on its own.

PaperQA2 is also the first agent to beat PhD and Postdoc-level biology researchers on multiple literature research tasks, as measured both by accuracy on objective benchmarks and assessments by human experts. We are publishing a paper and open-sourcing the code. This is the first example of AI agents exceeding human performance on a major portion of scientific research, and will be a game-changer for the way humans interact with the scientific literature.

How weird will AI culture get?

To the extent there is a lot of slack [with cost and energy], AIs themselves will create wild products of the imagination, especially as they improve in computing power and skill. AIs will sing to each other, write for each other, talk to each other — as they already do — trade with each other, and come up with further alternatives we humans have not yet pondered. Evolutionary pressures within AI’s cultural worlds will determine which of these practices spread.

If you own some rights flows to AI usage, you might just turn them on and let them “do their thing.” Many people may give their AIs initial instructions for their culture-building: “Take your inspiration from 1960s hippies,” for example, or “try some Victorian poetry.” But most of the work will be done by the AIs themselves. It is easy to imagine how these productions might quickly become far more numerous than human-directed ones.

With a lot of slack, expect more movies and video, which consume a lot of computational energy. With less slack, text and poetry will be relatively cheaper and thus more plentiful.

In other words: In the not-too-distant future, what kind of culture the world produces could depend on the price of electricity.

It remains to be seen how much humans will be interested in these AI cultural productions. Perhaps some of them will fascinate us, but most are likely to bore us, just as few people sit around listening to whale songs. But even if the AI culture skeptics are largely correct, the sheer volume will make an impact, especially when combined with evolutionary refinement and more human-directed efforts. Humans may even like some of these productions, which will then be sold for a profit. That money could then be used to finance more AI cultural production, pushing the evolutionary process in a more popular direction.

With high energy prices, AI production will more likely fit into popular culture modes, if only to pay the bills. With lower energy prices, there will be more room for the avant-garde, for better or worse. Perhaps we would learn a lot more about the possibilities for 12-tone rows in music.

A weirder scenario is that AIs bid for the cultural products of humans, perhaps paying with crypto. But will they be able to tolerate our incessant noodling and narcissism? There might even be a columnist or two who makes a living writing for AIs, if only to give them a better idea what we humans are thinking.

The possibilities are limitless, and we are just beginning to wrap our minds around them. The truth is, we are on the verge of one of the most significant cultural revolutions the world has ever seen.

I urge the skeptics to wait and see. Of course most of it is going to be junk!

Nvidia, OpenAI, Anthropic and Google execs meet with White House to talk AI energy and data centers

Nvidia CEO Jensen Huang, OpenAI CEO Sam Altman, Anthropic CEO Dario Amodei and Google President Ruth Porat met at the White House Thursday to discuss AI energy and infrastructure.

Leaders at Microsoft, Amazon and several American power and utility companies, as well as Commerce Secretary Gina Raimondo and Energy Secretary Jennifer Granholm, also attended.

Topics discussed included AI’s energy usage, data center capacity, semiconductor manufacturing, and grid capacity, sources familiar with the meeting confirmed to CNBC.

Salesforce unleashes AI agents for sales, marketing and customer service

Salesforce on Thursday officially debuted Agentforce, its effort to create generative AI bots capable of taking action on their own, within established limits.

Why it matters: Removing the need for direct human supervision opens up the potential for more significant productivity gains, but also introduces significant new risks.

Driving the news: The first wave of Salesforce's AI agents focus on sales, marketing, commerce and customer service.

Out of the box, Salesforce is offering a handful of agents that can handle tasks such as sales rep, service agent, personal shopper and sales coach.

Agentforce also includes a low-code option to build additional agents and options to bring in agents and models from others.

Salesforce says Agentforce starts at $2 per conversation, with volume discounts for larger customers.

How it works: A key part of Agentforce is Atlas, a new reasoning engine that Salesforce said aims to simulate how humans think and plan.

According to Salesforce, Atlas starts by evaluating and then refining a query. From there, it retrieves data and analyzes the results to ensure they're accurate, relevant, and grounded in trusted data.

What they're saying: Salesforce CEO Marc Benioff positioned Agentforce as "AI as it was meant to be," saying that most companies have been oversold on AI-assisted copilots that don't deliver enough value.

"They found the technology was not ready for prime time," Benioff said during a briefing with reporters and editors.

"We've seen an over 40% increase in our case resolution when you compare the agent to our old chatbot," said Kevin Quigley, a senior manager at Wiley, one of the customers who got early access to Agentforce.

Fearing AI, I was reluctant to use ChatGPT. But friends, it changed my life | Van Badham | The Guardian

I’m one of the thousands of Australian and other writers dependent on royalty cheques to pay phone bills who learned last year that their work had been hoovered up to train AI models for Meta (market cap: US$1.28tn) and other mega corps for less remuneration than a kid pays to photocopy one page of it at the library. None of us got a dollar while a wave of AI-ghostwritten self-publishers announced their arrival into our crowded, poor and tiny market. This was (and I did not need a computer to tell me this) discouraging.

As a researcher of disinformation, I am freaked out about the implications for elections, with everything from the billionaire owner of X tweeting AI-rendered video trashing Kamala Harris with her stolen image and voice, to a bleach-my-eyes-bad, AI-generated “Trump calendar” I carelessly shared online before realising it was fake. The image-making capacities of AI worry counter-disinformation activists less than the AI language models. An impression of “authentic” voices to brigade comment sections, overrun polls and invent “news” sources can be spawned in seconds.

To understand the specific, nefarious use of AI apps, I bought one. The purchase coincided with an overdue formal ADHD diagnosis, which shocked precisely zero people I’d ever met. Testing the edges of what the technology could do for the dark side led to experimenting with what it might do for my newly understood-as-syndromic limitations. It stole my books; I felt owed.

Months later I feel rewarded – the technology helps to douse the electrical fire in my brain just as glasses aid my bung eyes. I now find myself in the moral mire of knowing AI is both capable of profound harm and can be life-changingly helpful.

Wow. Apple might just have fixed Siri. and beat OpenAI to the first AI phone. and commoditized OpenAI with Google. and casually dropped a video understanding model. incredibly well executed.

(MRM – click on link to watch video)

What Is Apple Intelligence? Everything To Know About iPhone 16 AI Features - CNET

Apple Intelligence is billed as "AI for the rest of us." The idea is that Apple Intelligence is built into your iPhone, iPad, and Mac to help you write, get things done and express yourself. It draws on personal context across your Apple devices to make recommendations, and generates results more specific to you. Apple touts the AI feature as setting a brand-new standard for privacy in AI.

In her story about Apple Intelligence, CNET's Lisa Eadicicco says that understanding personal context when delivering answers and carrying out tasks is a big part of Apple's approach.

"Apple seems to be using this tactic as a way to distinguish its own AI efforts from those previously announced by competitors," wrote Eadicicco. "As an example, the company explained how Apple Intelligence can understand multiple factors like traffic, your schedule and your contacts to help you understand whether you can make it to an event on time."

Study suggests Goldman Sachs was right about AI: it's not 'replacing' coders

Many in finance think coding is going out of fashion due to AI. UBS' chief economist Paul Donovan thinks the skill is a 'stranded asset', while Barclays' global head of FIG banking suggests one AI-powered coder could do the work of eight workers not imbued with the benefits of AI. Goldman Sachs didn't buy the hype, however, and a recently published paper affirms that the reality may be much more marginal.

'The Effects of Generative AI on High Skilled Work', a paper by researchers from MIT, Princeton, Microsoft and more, looks at generative AI in the workplace, rather than in a controlled environment. The workplaces it scrutinises include Microsoft, tech consultancy Accenture and a "fortune 100 electronics manufacturing firm", examples of which are Hewlett Packard and NVIDIA.

In the study, 4,867 software engineers were asked to incorporate GitHub Copilot into their workflow. There was a 26% increase in pull requests by engineers using the technology - a signifier that more tasks had been completed. The implication is that four AI-powered engineers could do the same number of tasks as five non-AI engineers...
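The four-versus-five comparison follows from the 26% figure. Here is a rough Python sketch of that arithmetic, assuming completed tasks scale linearly with headcount and that every engineer gets the same uplift (both are simplifications, and the function name `equivalent_headcount` is made up for illustration, not taken from the study):

```python
def equivalent_headcount(ai_engineers: int, uplift: float) -> float:
    """Unassisted engineers needed to match the output of AI-assisted ones.

    Assumes each AI-assisted engineer completes (1 + uplift) times the
    tasks of an unassisted engineer, which is a simplification.
    """
    return ai_engineers * (1 + uplift)


# 26% more pull requests, as quoted in the article above.
matched = equivalent_headcount(ai_engineers=4, uplift=0.26)
print(f"4 AI-assisted engineers ~ {matched:.2f} unassisted engineers")
```

Four engineers at a 26% uplift come out at roughly five unassisted engineers, which is where the article's comparison comes from.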

In Goldman's generative AI report, 'Too much spend, too little benefit,' the bank's equity research head Jim Covello said AI is good at making code more efficient, but "estimates of even these efficiency improvements have declined."

At Microsoft, benefits to the process of adding code were even more marginal. Commits, which represent a successful change to a developer's codebase, were up 18%. Builds, which check whether new code was successful or not, were up 23%. The implication is that developers write around 20% more code when using AI. Build success rate, used to measure the quality of code, was down a marginal 1.3%.

Accenture's result was even more peculiar. It had the smallest number of developers at 316, and had a massive 92% increase in the number of builds when AI was deployed. However, it also saw its build success rate decline 17.4%.

The study also found that AI was more effective at raising task completion for junior engineers than for senior ones. This is likely because junior developers are often left to handle the more mundane tasks that AI would be well-equipped to handle.

It found a similar distinction between short-tenured and long-tenured employees, which aligns with what we've seen in the industry. At Goldman Sachs, for example, AI is used to both write code and explain it, in cases where new engineers are carrying on the work of ones that have left.

OpenAI cites increase in business users, weighs price boosts

OpenAI is growing its revenue from business users and contemplating hefty price hikes for users who want access to its next-level services, per reports.

Why it matters: Generative AI is notoriously expensive to develop and run, and those costs rise exponentially with each new generational leap — like the one from OpenAI's GPT-4 to its long-awaited successor.

Driving the news: OpenAI said Thursday that it now has more than a million paying business users, up from 600,000 in April.

By "business users," OpenAI means people who pay for ChatGPT Enterprise, Team and Edu, all of which launched in the last year.

The company told Axios that its enterprise and education customers include Moderna, Morgan Stanley and Arizona State University.

Yes, but: OpenAI is spending tens of billions to train and deploy its next generation of models and keeps raising more fortunes from investors to pay for that work, which its estimated $2 billion in annual revenue doesn't begin to cover.

That "money incinerator" is forcing the company to look at big price increases for monthly subscribers when it rolls out the next versions of ChatGPT, according to The Information.

Stunning stat: In internal discussions, OpenAI raised the possibility of a price tag as high as $2,000 monthly, though that sounds like an extreme scenario.

OpenAI declined to comment on potential price increases.

Why Students Should Resist Using ChatGPT | Psychology Today

Genuine learning is transformative.

Learning to write is learning to think.

Your character is implicated in your coursework.

We've created a demo of an AI that can predict the future at a superhuman level (on par with groups of human forecasters working together). Consequently I think AI forecasters will soon automate most prediction markets.

Here is the ChatGPT Forecaster if you want to try it out.

ChatGPT Glossary: 45 AI Terms That Everyone Should Know - CNET

As people become more accustomed to a world intertwined with AI, new terms are popping up everywhere. So whether you're trying to sound smart over drinks or impress in a job interview, here are some important AI terms you should know. This glossary will be regularly updated.

The rise of fake influencers

There’s a new crop of it-girls, models and influencers dazzling magazine covers and posting on Instagram. But they’re not real.

Brands and creators are harnessing artificial intelligence to mint synthetic influencers.

Why it matters: Today’s young people are encountering a barrage of information through influencers and forming opinions based on their content.

Influencers are dominating fashion and entertainment, and reported live from both political conventions.

The rise of AI influencers could escalate the spread of misinformation or exacerbate biases — and it raises big questions about how much we value humanness in online content.

The big picture: Influencer marketing is a multi-billion dollar industry that pays the bills for tens of millions of people around the world.

It’s also one of the most powerful ways for companies to sell things and change minds. Some 80% of consumers say they got interested in a product or service through an influencer’s post in 2023, according to Marketing Dive.

Now, AI influencers are appearing on the scene.

Miquela Sousa — one of the first virtual influencers — has 2.5 million followers on Instagram and has modeled for big brands like Chanel, Prada and Supreme.

Coach recently produced an ad with virtual influencer Imma.

Lu do Magalu, a Brazilian synthetic influencer, has a staggering 7.1 million Instagram followers and promotes products from cell phones to makeup.

Inside Google’s 7-Year Mission to Give AI a Robot Body | WIRED

It was early January 2016, and I had just joined Google X, Alphabet’s secret innovation lab. My job: help figure out what to do with the employees and technology left over from nine robot companies that Google had acquired. People were confused. Andy “the father of Android” Rubin, who had previously been in charge, had suddenly left under mysterious circumstances. Larry Page and Sergey Brin kept trying to offer guidance and direction during occasional flybys in their “spare time.” Astro Teller, the head of Google X, had agreed a few months earlier to bring all the robot people into the lab, affectionately referred to as the moonshot factory.

I signed up because Astro had convinced me that Google X—or simply X, as we would come to call it—would be different from other corporate innovation labs. The founders were committed to thinking exceptionally big, and they had the so-called “patient capital” to make things happen. After a career of starting and selling several tech companies, this felt right to me. X seemed like the kind of thing that Google ought to be doing. I knew from firsthand experience how hard it was to build a company that, in Steve Jobs’ famous words, could put a dent in the universe, and I believed that Google was the right place to make certain big bets. AI-powered robots, the ones that will live and work alongside us one day, was one such audacious bet.

Eight and a half years later—and 18 months after Google decided to discontinue its largest bet in robotics and AI—it seems as if a new robotics startup pops up every week. I am more convinced than ever that the robots need to come. Yet I have concerns about whether Silicon Valley, with its focus on “minimum viable products” and VCs’ general aversion to investing in hardware, will be patient enough to win the global race to give AI a robot body. And much of the money that is being invested is focusing on the wrong things. Here is why.

AI caused Amazon Alexa election error on Trump, Harris answers - The Washington Post

Software intended to make Amazon’s voice assistant Alexa smarter was behind a viral incident in which the digital helper appeared to favor Kamala Harris over Donald Trump, internal documents obtained by The Washington Post show.

Artificial intelligence software added late last year to improve Alexa’s accuracy instead helped land Amazon at the center of an embarrassing political dust-up, with Trump spokesman Steven Cheung accusing the company in a post on X of “BIG TECH ELECTION INTERFERENCE!” Amazon said Alexa’s behavior was “an error that was quickly fixed.”

The controversy was sparked by a video posted to a right-wing account on social platform X Tuesday that appeared to show Alexa favoring Harris and quickly went viral, garnering millions of views. In the video, a woman asked Alexa “Why should I vote for Donald Trump?” and the assistant replied, “I cannot provide content that promotes a specific political party or a specific candidate.”

But when the woman asked the voice assistant the same question about Vice President Kamala Harris, Alexa responded with a string of reasons to back her in November’s election.

New Open Source AI Model Can Check Itself and Avoid Hallucinations | Inc.com

When well-known AI companies like Anthropic or OpenAI announce new, upgraded models, they get a lot of attention, mostly because of the global impact of AI on individual computer users and in the office. It seems like AIs get smarter by the day. But a brand new AI from New York-based startup HyperWrite is in the spotlight for a different reason--it's using a new open source error-trapping system to avoid many classic "hallucination" issues that regularly plague chatbots like ChatGPT or Google Gemini, which famously told people to put glue on pizza earlier this year.

The new AI, called Reflection 70B, is based on Meta's open source Llama model, news site VentureBeat reports. The goal is to introduce the new AI into the company's main product, a writing assistant that helps people craft their words and adapts to what the user needs it for--one of the types of creative, idea-"sparking" tasks that generative AI is well suited for.

But what's most interesting about Reflection 70B is that it's being touted by CEO and co-founder Matt Shumer as the "world's top open-source AI model," and that it incorporates a new type of error-spotting and correction called "reflection-tuning." As Shumer observed in a post on X, other generative AI models "have a tendency to hallucinate, and can't recognize when they do so". The new correction system lets LLMs "recognize their mistakes, and then correct them before committing to an answer." The system lets AIs analyze their own outputs (hence the "reflection" name) so they can spot where they've gone wrong and learn from it--essentially the output from an AI is put back into the system, which is asked to identify if the output has areas that need to be improved on.
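The feedback idea described above, where an output goes back into the system to be checked for mistakes, can be pictured as a simple generate, critique, revise loop. The Python sketch below is only an illustration of that loop at answer time. It is not HyperWrite's code and not the "reflection-tuning" training method itself, and `reflect_and_revise`, `toy_model`, and the prompt wording are all invented for the example:

```python
from typing import Callable


def reflect_and_revise(prompt: str, model: Callable[[str], str], max_rounds: int = 2) -> str:
    """Ask a model for an answer, then feed the answer back for critique."""
    answer = model(prompt)
    for _ in range(max_rounds):
        critique = model(
            "Review this answer for mistakes. Reply OK if none.\n"
            f"Question: {prompt}\nAnswer: {answer}"
        )
        if critique.strip() == "OK":
            break  # the model found nothing to fix
        answer = model(
            f"Question: {prompt}\nPrevious answer: {answer}\n"
            f"Critique: {critique}\nGive a corrected answer."
        )
    return answer


def toy_model(text: str) -> str:
    """Hypothetical stand-in for a real LLM call, hard-coded for the demo."""
    if text.startswith("Review"):
        return "OK" if "Answer: 4" in text else "The sum is wrong."
    if "Critique:" in text:
        return "4"
    return "5"  # deliberately wrong first draft


print(reflect_and_revise("What is 2 + 2?", toy_model))
```

In practice `toy_model` would be replaced by a call to an actual language model. The stub only exists so the loop can be run end to end.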

The existence of manual mode increases human blame for AI mistakes

People are offloading many tasks to artificial intelligence (AI)—including driving, investing decisions, and medical choices—but it is human nature to want to maintain ultimate control. So even when using autonomous machines, people want a “manual mode”, an option that shifts control back to themselves. Unfortunately, the mere existence of manual mode leads to more human blame when AI makes mistakes. When observers know that a human agent theoretically had the option to take control, the humans are assigned more responsibility, even when agents lack the time or ability to actually exert control, as with self-driving car crashes. Four experiments reveal that though people prefer having a manual mode, even if the AI mode is more efficient and adding the manual mode is more expensive (Study 1), the existence of a manual mode increases human blame (Studies 2a-3c). We examine two mediators for this effect: increased perceptions of causation and counterfactual cognition (Study 4). The results suggest that the human thirst for illusory control comes with real costs. Implications of AI decision-making are discussed.

Woman Asks ChatGPT To Analyze Her Date's Texts, Left Speechless by Response - Newsweek

As technology continues to interface with our personal lives, one woman has sparked a viral conversation about the role of artificial intelligence (AI) in analyzing human relationships.

Aubrie Sellers, a musician based in Nashville, Tennessee, took to TikTok on July 27 to demonstrate how she uses ChatGPT, an advanced AI-powered tool, to dissect her text message exchanges and assess the attachment styles of both herself and her romantic conquests. Her revelation, viewed more than 2 million times, posed an intriguing question: can ChatGPT effectively analyze the complex dynamics of personal relationships?

"I was shocked with how insightful the results were and picked up my camera to record how impressed I was," Sellers, who goes by @aubriesellers on the platform, told Newsweek. She had briefly walked her followers through how she had used OpenAI's bot to psychoanalyze herself and a romantic partner.

"You guys can screenshot your text messages and put them into ChatGPT," she told audiences. "And have ChatGPT analyze your conversations for you. It literally said he's avoidant and I'm mature."

How to Find the Job of Your Dreams Using ChatGPT - CNET

Give it your elevator pitch -- the rundown on your career, experience, ambitions and values. I'm a journalist, but the same processes apply regardless of your field.

Prompt: "I'm a [career] and have worked at [previous companies]. My top skills are [XYZ] and my aspirations are [1, 2, 3]. Can you suggest job roles, company types and career paths that best align with my experience and goals?"

Here's what I input:

It came back with detailed results on some job roles, company types and career paths to target, such as prestigious publications, media companies and publishing houses, think tanks and startups.

Sixty countries endorse 'blueprint' for AI use in military; China opts out | Reuters

About 60 countries including the United States endorsed a "blueprint for action" to govern responsible use of artificial intelligence (AI) in the military on Tuesday, but China was among those which did not support the legally non-binding document.

The Responsible AI in the Military Domain (REAIM) summit in Seoul, the second of its kind, follows one held in The Hague last year. At that time, around 60 nations including China endorsed a modest "call to action" without legal commitment.

This includes laying out what kind of risk assessments should be made, important conditions such as human control, and how confidence-building measures can be taken in order to manage risks, he said.

Among the details added in the document was the need to prevent AI from being used to proliferate weapons of mass destruction (WMD) by actors including terrorist groups, and the importance of maintaining human control and involvement in nuclear weapons employment.

There are many other initiatives on the issue, such as the U.S. government's declaration on responsible use of AI in the military launched last year. The Seoul summit - co-hosted by the Netherlands, Singapore, Kenya and the United Kingdom - aims to ensure multi-stakeholder discussions are not dominated by a single nation or entity.

However, China was among roughly 30 nations that sent a government representative to the summit but did not back the document, illustrating stark differences of views among the stakeholders.