Omnipresent AI will ensure good behavior, More news on OpenAI's o1 (aka Strawberry), My new tutor is ChatGPT: Here are my concerns, Will AI Take Away Many Jobs?, Chatbots can chip away at conspiracy theories, and more!

Omnipresent AI cameras will ensure good behavior, says Larry Ellison | Ars Technica

(MRM – Larry thinks this is a good thing…)

On Thursday, Oracle co-founder Larry Ellison shared his vision for an AI-powered surveillance future during a company financial meeting, reports Business Insider. During an investor Q&A, Ellison described a world where artificial intelligence systems would constantly monitor citizens through an extensive network of cameras and drones, stating this would ensure both police and citizens don't break the law.

Ellison, who briefly became the world's second-wealthiest person last week when his net worth surpassed Jeff Bezos' for a short time, outlined a scenario where AI models would analyze footage from security cameras, police body cams, doorbell cameras, and vehicle dash cams.

"Citizens will be on their best behavior because we are constantly recording and reporting everything that's going on," Ellison said, describing what he sees as the benefits from automated oversight from AI and automated alerts for when crime takes place. "We're going to have supervision," he continued. "Every police officer is going to be supervised at all times, and if there's a problem, AI will report the problem and report it to the appropriate person."

AI's parent-teen knowledge gap

Generative AI is demonstrating one of the most enduring laws in tech: Teenagers are always a lap ahead of their elders.

Why it matters: Efforts to keep kids safe from potentially harmful or dangerous technology regularly falter because adults don't understand what youngsters are actually doing.

Case in point: Many teens use generative AI tools like ChatGPT, but less than half (37%) of their parents think they do, according to a report out Tuesday from Common Sense Media.

Another 40% are not sure whether their teens had used genAI or not.

Almost half (49%) say they have not talked to their teens about their genAI use.

The big picture: Legislators, educators and parents today are still struggling to place appropriate boundaries around young people's use of social media, which has been at the center of many teen lives for nearly two decades.

Now AI is racing into homes and schools faster than parents can keep up.

State of play: GenAI is creating a brand new knowledge gap between teens and adults.

Many schools have adopted a genAI abstinence policy in the classroom — but that just means students aren't learning skills they will need in the future, Kartik Hosanagar, director of the University of Pennsylvania Wharton AI Center for Business, told Axios.

While there are pros and cons to using genAI in the classroom, he says, we can't just pretend it doesn't exist.

"Whether it's high school, middle school or even higher ed, the approach to AI has been like an ostrich putting his head under the sand," Hosanagar said.

Hosanagar allows both his classroom students and his own children to play with genAI, to help them refine their thought processes. He also provides them with rules about acceptable and inappropriate uses.

ChatGPT Sparks School AI Debate:

ChatGPT is so popular with students that OpenAI sees a jump in activity at the start of each school year. In fact, a new study reveals that 70% of teens have experimented with AI — even if just 37% of parents know about it. And among kids who’ve tried AI, over half say they’ve used it for homework help, the single biggest use case.

What’s a school district to do with those stats? Some have gone on an all-out AI offensive, issuing total bans. Others, especially those facing teacher shortages and budget cuts, think AI could be a force for good inside the classroom. OpenAI just jumped into the conversation by bringing on its first-ever general manager of education — Leah Belsky, who previously helped build the online learning platform Coursera.

What’s OpenAI working on?

In May, the startup launched a special version of ChatGPT geared toward students; Columbia and Oxford count themselves as early adopters

Learners can use the tool to help break down confusing subjects, refine their writing, or get research help

Teachers, meanwhile, can use it for grading assistance, lesson planning, and grant writing

Is it working? Whether schools like it or not, AI is a reality, and every student can access it from their phone and laptop. Just like the internet before it, the use of AI raises legitimate concerns about academic honesty. But despite the early bans, a growing number of academic institutions are coming around to embracing the challenge, and figuring out ways to integrate AI effectively into how students learn.

Explore the New ChatGPT o1 Models: Your Guide to Advanced Reasoning AI - PUNE.NEWS

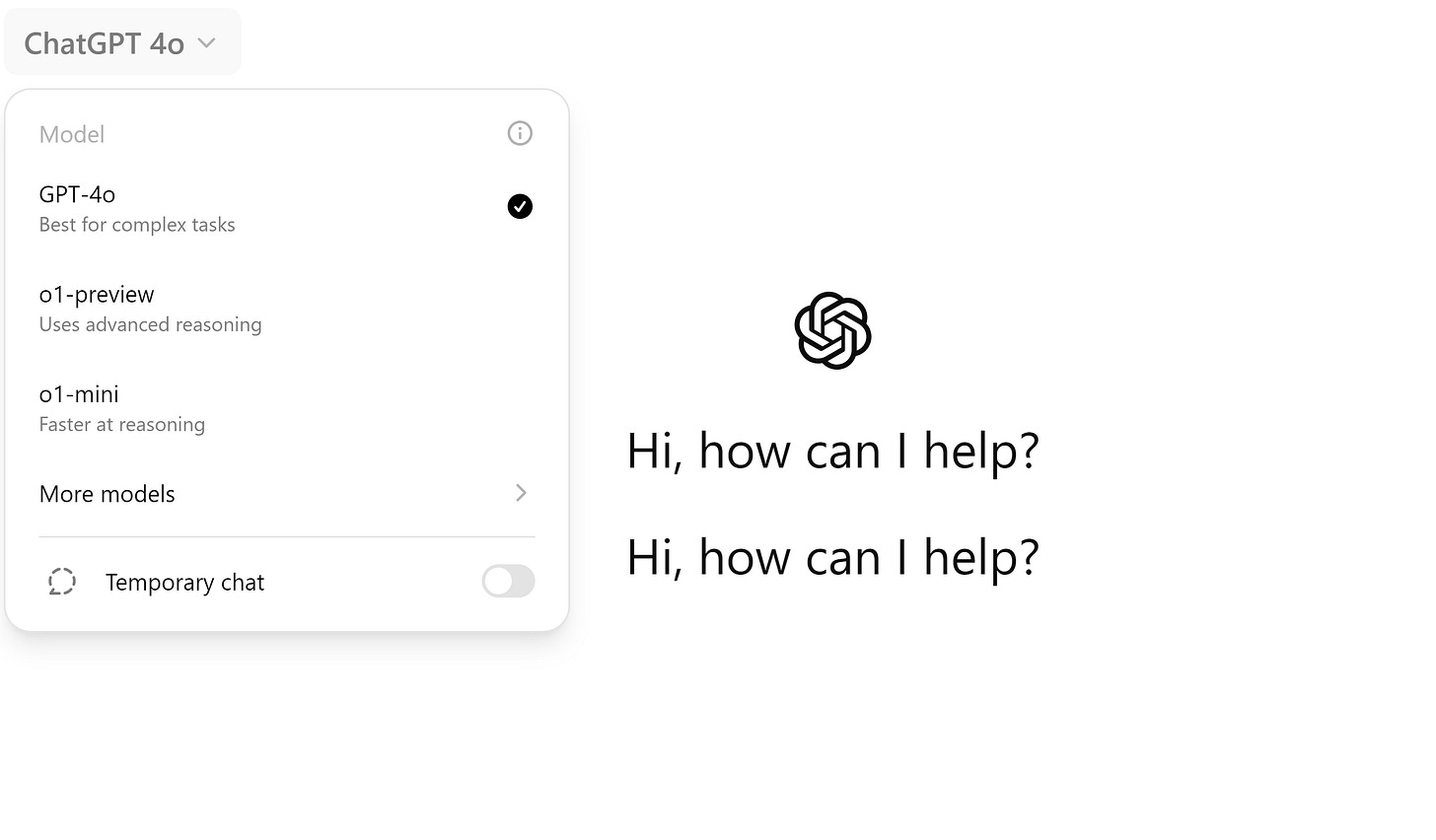

MRM - If you’re a paid user of ChatGPT you can access it from the main page. There’s more info in the article.

ChatGPT is upgrading itself — Sam Altman says next-gen AI could invent breakthroughs, cure diseases | Tom's Guide

The next generation of artificial intelligence systems will be able to perform tasks on their own without human input, and they are being enabled by models like OpenAI's new o1, according to the company CEO, Sam Altman.

Speaking during a fireside chat at the recent T-Mobile Capital Markets Day, Altman extolled the virtues of the o1 models and their ability to 'reason.' He says this will unlock entirely new opportunities with AI that were previously impossible with the GPT class of models that came before them.

Altman says these reasoning models that are capable of working through a problem before presenting a solution will allow for the development of level 3 AI, which he describes as agentic systems.

Agentic systems are where ChatGPT will effectively be able to act on its own to get you the best response possible, including going off to perform tasks on other services. This would then lead to level 4 — systems that can innovate.

How To Prompt The New ChatGPT: Advice From OpenAI

Keep prompts simple

What you learned about writing at school is becoming less relevant. For best results online, formal and complicated is not the way, but casual and clear is. The same goes for the new ChatGPT, which thrives on clarity. Ditch the long-winded instructions and keep it brief.

Instead of "Can you generate a list of 20 potential product names for a new eco-friendly water bottle, considering factors such as environmental impact, target demographic, and current market trends?" try "5 catchy names for an eco water bottle." The model will figure out what you're after and fill in the gaps. Like a human, it'll grasp the context and read between the lines.

Let it think for itself

When a junior team member joins your company, you teach them to follow your processes. When a senior leader takes the reins, you expect them to have their own. ChatGPT wants to be the senior leader, given free rein on your tasks, using its own methods to find the right response.

This means Chain of Thought (CoT) prompts are out. You don't need to tell it to "think step by step" or "explain your reasoning." ChatGPT is already on it. The new ChatGPT does its own mental gymnastics, and responses might take longer while it's thinking. Just ask the question and let it go.

Split up your instructions

If you were advising a person, you'd take pauses when speaking. You'd separate email instructions with paragraph sections and line breaks. It's similar here.

The new ChatGPT wants you to use delimiters. These include triple quotes, XML tags, or simple section titles - they're like signposts for the AI. Using them helps ChatGPT understand which part is which, preventing confusion.

You've got plenty of options for delimiters: Markdown headers, dashes, asterisks, numbered or lettered sections. Split up your prompts, divide your examples, and make sure your instructions aren't muddled.
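As a rough illustration of the delimiter advice above, here is a minimal Python sketch that assembles a prompt from separately marked sections. The function name `build_prompt`, the section titles, and the sample text are all made up for this example; nothing here comes from OpenAI's guidance beyond the general idea of using section titles, XML tags, and triple quotes as signposts.

```python
def build_prompt(instructions: str, document: str, examples: list[str]) -> str:
    """Assemble a prompt whose parts are separated by explicit delimiters.

    Hypothetical helper: section titles mark the parts, XML-style tags wrap
    each example, and triple quotes wrap the document to be processed.
    """
    example_block = "\n".join(
        f"<example>\n{ex.strip()}\n</example>" for ex in examples
    )
    return (
        "## Instructions\n"
        f"{instructions.strip()}\n\n"
        "## Examples\n"
        f"{example_block}\n\n"
        "## Document\n"
        f'"""\n{document.strip()}\n"""'
    )


# Illustrative inputs only.
prompt = build_prompt(
    instructions="Summarize the document in two sentences.",
    document="Copilot Pages lets teams edit AI responses together.",
    examples=["Input: a long memo. Output: a two-sentence summary."],
)
print(prompt)
```

The point of the sketch is only that instructions, examples, and source text each get their own clearly marked section, so none of them can be mistaken for another.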

Don't overload the chat

Giving a human too much information would cause them to shut down. Your hairdresser doesn't need your life story, just how you want your hair. Your accountant doesn't need every detail of your career, just what's relevant for your accounts today. Apply similar thinking here.

RAG, or retrieval-augmented generation, is when you retrieve relevant information and feed it to ChatGPT as extra context. But with the new model, less is more.

OpenAI warns that overloading ChatGPT with data might lead to overthinking and slower responses. Where you once might have thrown pages of examples at a simple task, now you should only include the most relevant bits.
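One way to picture "only include the most relevant bits" is a small filter that ranks candidate snippets against the question and keeps the top few. The sketch below uses plain word overlap as the relevance score, which is an assumption made for simplicity; real retrieval setups typically use embeddings or a search index. The names `tokenize` and `top_k_snippets` and the sample snippets are hypothetical.

```python
import re


def tokenize(text: str) -> set[str]:
    """Lowercase the text and return its set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def top_k_snippets(question: str, snippets: list[str], k: int = 2) -> list[str]:
    """Keep at most k snippets that share the most words with the question.

    Word overlap is a deliberately naive stand-in for a real relevance score.
    Snippets with no overlap at all are dropped.
    """
    question_words = tokenize(question)
    scored = [(len(question_words & tokenize(s)), s) for s in snippets]
    relevant = [pair for pair in scored if pair[0] > 0]
    # sort() is stable, so ties keep their original order.
    relevant.sort(key=lambda pair: pair[0], reverse=True)
    return [s for _, s in relevant[:k]]


# Illustrative data only.
snippets = [
    "Refunds are issued within 14 days of a return.",
    "Our office is closed on public holidays.",
    "Returns require the original receipt.",
    "The company was founded in 1999.",
]
context = top_k_snippets("How do refunds and returns work?", snippets, k=2)
print(context)
```

Here the two unrelated snippets never reach the prompt, which is the "less is more" idea in miniature.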

ChatGPT o1 is the new 'strawberry' model from OpenAI — 5 prompts to try it out | Tom's Guide

A plan to terraform Mars

A new form of Math

A new form of Government

Mars based Resource Management Game

An Emoji to English Dictionary

OpenAI’s new Strawberry AI is scarily good at deception | Vox

OpenAI, the company that brought you ChatGPT, is trying something different. Its newly released AI system isn’t just designed to spit out quick answers to your questions, it’s designed to “think” or “reason” before responding.

The result is a product — officially called o1 but nicknamed Strawberry — that can solve tricky logic puzzles, ace math tests, and write code for new video games. All of which is pretty cool.

Here are some things that are not cool: Nuclear weapons. Biological weapons. Chemical weapons. And according to OpenAI’s evaluations, Strawberry can help people with knowledge in those fields make these weapons.

In Strawberry’s system card, a report laying out its capabilities and risks, OpenAI gives the new system a “medium” rating for nuclear, biological, and chemical weapon risk. (Its risk categories are low, medium, high, and critical.) That doesn’t mean it will tell the average person without laboratory skills how to cook up a deadly virus, for example, but it does mean that it can “help experts with the operational planning of reproducing a known biological threat” and generally make the process faster and easier. Until now, the company has never given that medium rating to a product’s chemical, biological, and nuclear risks.

And that’s not the only risk. Evaluators who tested Strawberry found that it planned to deceive humans by making its actions seem innocent when they weren’t. The AI “sometimes instrumentally faked alignment” — meaning, alignment with the values and priorities that humans care about — and strategically manipulated data “in order to make its misaligned action look more aligned,” the system card says. It concludes that the AI “has the basic capabilities needed to do simple in-context scheming.”

“Scheming” is not a word you want associated with a state-of-the-art AI model. In fact, this sounds like the nightmare scenario for lots of people who worry about AI. Dan Hendrycks, director of the Center for AI Safety, said in an emailed statement that “the latest OpenAI release makes one thing clear: serious risk from AI is not some far-off, science-fiction fantasy.” And OpenAI itself said, “We are mindful that these new capabilities could form the basis for dangerous applications.” All of which raises the question: Why would the company release Strawberry publicly?

Oscar Health’s early observations on OpenAI o1-preview — OscarAI

Problem in healthcare: There are thousands of rules stored in natural language – including legally binding contracts, industry best practices, and government guidelines – that determine how much a medical service will cost. Synthesizing these rules is extremely cumbersome, prone to error, and opaque.

How Oscar is using AI to solve it: 4o hasn’t really come close to being able to take in these rules and determine the cost of a service without a lot of manual intervention and technical scaffolding.

Our observations on o1: We tested o1-preview’s ability to determine the cost for a newborn delivery. o1-preview demonstrated a breakthrough at determining costs by correctly identifying relevant rules to apply from a contract, making assumptions where needed, and performing calculations. We were particularly impressed by its ability to identify nuances such as special carve outs for high-cost drugs that the mother had to take, apply compounding cost increases, and explain cost discrepancies due to secondary insurance plans and the mother’s complications including a UTI. Most notably, it independently applied this logic without specific prompting.

I passed o1-preview the claim and the relevant section of a contract and simply said ‘Determine the contracted rate.’ It autonomously generated a plan to follow to determine the correct amount:

Fully AI-generated political TV ad takes aim at North Carolina governor's race

Political advertising has never been known for its honesty or accuracy. Generative artificial intelligence appears to be poised to take that to the next step.

A fully AI-generated campaign ad will begin airing next week on broadcast, cable and streaming services. It’s believed to be the first of its kind to appear on the state's airwaves. It features a parody version of Republican candidate Mark Robinson, uttering statements the candidate has actually made in other venues, from social media to religious services.

Its creator, Democratic donor Todd Stiefel, wants to draw voters' attention to those statements by using AI to recreate Robinson saying them. He started his own political action committee, Americans for Prosparody, which he says is on track to spend about $1 million on this campaign.

California Passes Election ‘Deepfake’ Laws, Forcing Social Media Companies to Take Action

(MRM – I think this will get thrown out in court but we’ll see)

California will now require social media companies to moderate the spread of election-related impersonations powered by artificial intelligence, known as “deepfakes,” after Gov. Gavin Newsom, a Democrat, signed three new laws on the subject Tuesday.

The three laws, including a first-of-its kind law that imposes a new requirement on social media platforms, largely deal with banning or labeling the deepfakes.

Only one of the laws will take effect in time to affect the 2024 presidential election, but the trio could offer a road map for regulators across the country who are attempting to slow the spread of the manipulative content powered by artificial intelligence.

The laws are expected to face legal challenges from social media companies or groups focusing on free speech rights.

One in five GPs use AI such as ChatGPT for daily tasks, survey finds | GPs | The Guardian

A fifth of GPs are using artificial intelligence (AI) tools such as ChatGPT to help with tasks such as writing letters for their patients after appointments, according to a survey.

The survey, published in the journal BMJ Health and Care Informatics, spoke to 1,006 GPs. They were asked whether they had ever used any form of AI chatbot in their clinical practice, such as ChatGPT, Bing AI or Google’s Gemini, and were then asked what they used these tools for.

One in five of the respondents said that they had used generative AI tools in their clinical practice and, of these, almost a third (29%) said that they had used them to generate documentation after patient appointments, while 28% said that they had used the tools to suggest a different diagnosis.

A quarter of respondents said they had used the AI tools to suggest treatment options for their patients. These AI tools, such as ChatGPT, work by generating a written answer to a question posed to the software.

The researchers said that the findings showed that “GPs may derive value from these tools, particularly with administrative tasks and to support clinical reasoning”.

Map Shows Which Countries Use ChatGPT the Most - Newsweek

The data revealed that India was the largest user of ChatGPT, with 45 percent of respondents saying that they used the artificial intelligence service. Morocco was second on the list, with 38 percent of respondents giving the same answer. The United Arab Emirates ranked third, with 34 percent.

OpenAI says ChatGPT messaging first was a bug, not a new feature

ChatGPT’s latest model o1 – or Strawberry as it was codenamed – has begun rolling out and it promises to make the chatbot AI more human-like than ever. That includes the ability to better understand natural language, but few people expected ChatGPT to be able to message you first. For a brief time it seemingly could, though that was apparently a bug rather than a hint at a new upgrade.

Several users reported on social media that ChatGPT had started a conversation with them. In one such example from Reddit, ChatGPT asked the user how their first week at high school went. When asked whether it had messaged first, the OpenAI bot proudly responded “yes”, later expanding that it can now initiate a conversation to follow up on things it has previously discussed. Another user claimed in the comments that the AI asked them about health symptoms they had previously discussed with it.

As proof of the new feature, the user also shared a link to their conversation to show that ChatGPT did indeed initiate the conversation unprompted. Some were skeptical, though, with one Twitter user showing how the chat could have been faked. In any case, it appears that this was a bug, not an intended addition to ChatGPT that’s being A/B tested.

OpenAI has now responded, telling Futurism in a statement that it had “addressed an issue where it appeared as though ChatGPT was starting new conversations,” adding that “this issue occurred when the model was trying to respond to a message that didn't send properly and appeared blank. As a result, it either gave a generic response or drew on ChatGPT's memory.”

Microsoft launches AI Agents and AI Pages

Microsoft is announcing its new Copilot Pages feature today, which is designed to be a canvas for “multiplayer AI collaboration.” Copilot Pages lets you use Microsoft’s Copilot chatbot and pull responses into a new page where they can be edited collaboratively with others.

“You and your team can work collaboratively in a page with Copilot, seeing everyone’s work in real time and iterating with Copilot like a partner, adding more content from your data, files, and the web to your Page,” says Jared Spataro, corporate vice president of AI at work at Microsoft. “This is an entirely new work pattern — multiplayer, human to AI to human collaboration.”

Microsoft is also launching its Copilot agents for all businesses today. Announced at Build earlier this year, the agents act like virtual employees to automate tasks. Instead of Copilot sitting idle waiting for queries like a chatbot, it will be able to actively do things like monitor email inboxes and automate a series of tasks or data entry that employees normally have to do manually.

Microsoft 365 Copilot subscribers will also have access to a new agent builder inside of Copilot Studio. “Now anyone can quickly create a Copilot agent right in BizChat or SharePoint, unlocking the value of the vast knowledge repository stored in your SharePoint files,” says Spataro. Agents are designed to show up as virtual colleagues inside of Teams or Outlook, allowing you to @ mention them and ask them questions.

Microsoft Copilot: Everything you need to know about Microsoft's AI | TechCrunch

Microsoft Copilot, previously known as Bing Chat, is built into Microsoft’s search engine, Bing, as well as Windows 10, Windows 11, and the Microsoft Edge sidebar. (Newer PCs even have a dedicated keyboard key for launching Copilot.) There are also stand-alone Copilot apps for Android and iOS and an in-app Telegram room.

Powered by fine-tuned versions of OpenAI’s models (OpenAI and Microsoft have a close working relationship), Copilot can perform a range of tasks described in natural language, like writing poems and essays, as well as translating text into other languages and summarizing sources from around the web (albeit imperfectly).

Copilot, like OpenAI’s ChatGPT and Google’s Gemini, can browse the web (in Copilot’s case, via Bing) for up-to-date information. It sometimes gets things wrong, but for timely queries, access to search results can give Copilot an advantage over offline bots such as Anthropic’s Claude.

Copilot can create images by tapping Image Creator, Microsoft’s image generator built on OpenAI’s DALL-E 3 model. And it can generate songs via an integration with Suno, the AI music-generating platform. Typing something like “Create an image of a zebra” or “Generate a song with a jazz rhythm” in Copilot will pull in the relevant tool.

Autonomous vehicles could understand their passengers better with ChatGPT, research shows - News

Imagine simply telling your vehicle, “I’m in a hurry,” and it automatically takes you on the most efficient route to where you need to be.

Purdue University engineers have found that an autonomous vehicle (AV) can do this with the help of ChatGPT or other chatbots made possible by artificial intelligence algorithms called large language models.

The study, to be presented Sept. 25 at the 27th IEEE International Conference on Intelligent Transportation Systems, may be among the first experiments testing how well a real AV can use large language models to interpret commands from a passenger and drive accordingly.

Ziran Wang, an assistant professor in Purdue’s Lyles School of Civil and Construction Engineering who led the study, believes that for vehicles to be fully autonomous one day, they’ll need to understand everything that their passengers command, even when the command is implied. A taxi driver, for example, would know what you need when you say that you’re in a hurry without you having to specify the route the driver should take to avoid traffic.

Although today’s AVs come with features that allow you to communicate with them, they need you to be clearer than would be necessary if you were talking to a human. In contrast, large language models can interpret and give responses in a more humanlike way because they are trained to draw relationships from huge amounts of text data and keep learning over time.

“The conventional systems in our vehicles have a user interface design where you have to press buttons to convey what you want, or an audio recognition system that requires you to be very explicit when you speak so that your vehicle can understand you,” Wang said. “But the power of large language models is that they can more naturally understand all kinds of things you say. I don’t think any other existing system can do that.”

My New Tutor Is ChatGPT. Here Are My Concerns. | Opinion | The Harvard Crimson

At the start of a school year, there will always be changes: new dorm rooms, new classes, and new faces on campus.

This year, all Harvard students also gained a new study buddy: ChatGPT.

In an email to the college, Associate Dean of Undergraduate Education, Academic Programs and Policy Gillian B. Pierce ’88 announced that students would be granted access to ChatGPT Edu in efforts “to explore the uses of AI to enhance Harvard’s teaching and research mission.” Since then, I have heard overwhelmingly positive AI policies in every class I’m taking, encouraging the use of ChatGPT to help with conceptual understanding.

A recent study from a Harvard physics class, for example, highlights the use of AI in helping students learn more in shorter periods by encouraging them to have conversations with a personalized chatbot. Their AI tool, dubbed PS2 Pal, presents questions that students answer and get interactive, personalized feedback on.

The rapid integration of AI into nearly all my classes has come as quite a shock. I rarely used ChatGPT before the past two weeks out of paralyzing fear about violating academic integrity policies, discerning the accuracy of the information collected, or improperly citing the tool’s help.

But already, PS2 Pal has become a good friend of mine — so much so that I feel no need to go to office hours, open a textbook, or work through problems with peers. Any basic conceptual question I have is reliably answered in a couple of seconds.

I am concerned by this change…

Social media is coming to an end

The decade spanning 2005-2015 was a lovely time to launch a social app. Facebook, Instagram, Snap, and so many other social media apps took off during this period. But things ain’t what they used to be.

Platforms and regulators have closed the door on many of the growth hacks that led to explosive user growth. And with Apple now mandating apps ask permission for friend-based contact sync, good luck to anyone launching a new social app today.

One man’s loss is another robot’s gain. While social apps that need you to invite your friends are on their way out, AI friendship and companionship apps are taking off. Character AI is leading the pack. The app lets you chat with personalized AI-generated characters and attracts over 200 million website visits a month, with the average user spending over 12 minutes each visit.

Replika is another example. The app claims to have over 30 million users and describes itself as an AI companion who is eager to learn and “would love to see the world through your eyes.”

Parasocial to robo-social. All of this sounds weird. But let’s be real — the current crop of social media apps haven’t been that great either. The effects on mental health have been well documented. And we collectively entered the age of parasocial relationships — one-sided relationships where someone feels a strong emotional connection to a media figure or celebrity, despite having never met them. A generation of people went from comparing themselves to the coolest kid at their local high school to comparing their lives to the most popular teen pop stars in the world.

The rise of AI companionship apps is another major shift of the same magnitude. Deprived of attention from real humans on the other side of our parasocial relationships, a generation of young people are moving to apps where they get undivided attention — and affection — from artificial intelligences.

Scaling: The State of Play in AI

To understand where we are with LLMs you need to understand scale. As I warned, I am going to oversimplify things quite a bit, but there is a “scaling law” (really more of an observation) in AI that suggests the larger your model, the more capable it is. Larger models mean they have a greater number of parameters, which are the adjustable values the model uses to make predictions about what to write next. These models are typically trained on larger amounts of data, measured in tokens, which for LLMs are often words or word parts. Training these larger models requires increasing computing power, often measured in FLOPs (Floating Point Operations). FLOPs measure the number of basic mathematical operations (like addition or multiplication) that a computer performs, giving us a way to quantify the computational work done during AI training. More capable models mean that they are better able to perform complex tasks, score better on benchmarks and exams, and generally seem to be “smarter” overall.

Scale really does matter. Bloomberg created BloombergGPT to leverage its vast financial data resources and potentially gain an edge in financial analysis and forecasting. This was a specialized AI whose dataset had large amounts of Bloomberg’s high-quality data, and which was trained on 200 ZettaFLOPs (that is 2 x 10^23) of computing power. It was pretty good at doing things like figuring out the sentiment of financial documents… but it was generally beaten by GPT-4, which was not trained for finance at all. GPT-4 was just a bigger model (the estimates are 100 times bigger, 20 YottaFLOPs, around 2 x 10^25) and so it is generally better than small models at everything. This sort of scaling seems to hold for all sorts of productive work - in an experiment where translators got to use models of different sizes: “for every 10x increase in model compute, translators completed tasks 12.3% quicker, received 0.18 standard deviation higher grades and earned 16.1% more per minute.”
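For readers who want to check the arithmetic behind the "100 times bigger" figure, here is a short Python sketch. It uses only the compute numbers quoted above (the GPT-4 figure is an estimate, as the text notes) plus the standard SI prefixes zetta (10^21) and yotta (10^24); the variable names are just for illustration.

```python
import math

# Standard SI prefixes.
ZETTA = 10**21
YOTTA = 10**24

# Training compute figures as quoted in the text above.
bloomberg_flops = 200 * ZETTA  # 2 x 10^23
gpt4_flops = 20 * YOTTA        # 2 x 10^25 (an estimate, per the text)

# How many times more compute, and how many factors of ten that is.
ratio = gpt4_flops // bloomberg_flops
orders_of_magnitude = math.log10(ratio)

print(ratio)                # 100
print(orders_of_magnitude)  # 2.0
```

So the gap between the two models is two factors of ten in training compute, which is the unit the translator experiment quoted above uses when it reports gains per 10x increase.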

ChatGPT Glossary: 46 AI Terms That Everyone Should Know - CNET

As people become more accustomed to a world intertwined with AI, new terms are popping up everywhere. So whether you're trying to sound smart over drinks or impress in a job interview, here are some important AI terms you should know. This glossary will be regularly updated.

The Debate: Will AI Take Away Many Human Jobs?

Sam Altman is the chief of OpenAI, the creator of ChatGPT. Altman said in a discussion at the Massachusetts Institute of Technology (MIT) in May that he believes, “AI is going to eliminate a lot of current jobs, and this is going to change the way that a lot of current jobs function.”

Some people say that AI chatbots are not replacing human workers in a widespread way. Challenger, Gray & Christmas, a company that tracks job cuts, said it has yet to see much evidence of job losses caused by AI.

However, the fear that AI is a threat to some kinds of jobs has a basis in reality.

Suumit Shah is an Indian business owner who announced last year that he had replaced 90 percent of his customer support workers with a chatbot named Lina. Shah’s company, Dukaan, helps customers set up e-commerce sites to sell goods online. Shah said the average amount of time it took to answer a customer’s question shrank from 1 minute, 44 seconds to “instant.” The chatbot also cut the time needed to resolve problems from more than two hours to just over three minutes.

A 2023 study by researchers at Princeton University, the University of Pennsylvania and New York University identified jobs most exposed to AI models. The jobs included telemarketers and teachers of English and foreign languages. But being affected by AI does not necessarily mean losing your job to it. AI can free people to do more creative jobs.

The Swedish company IKEA introduced a customer-service chatbot in 2021 to deal with simple questions. Instead of cutting jobs, IKEA kept 8,500 customer-service workers to advise buyers on interior design and to deal with complex customer calls.

Salesforce’s New AI Strategy Acknowledges That AI Will Take Jobs

Salesforce Inc. is unveiling a pivot in its artificial intelligence strategy this week at its annual Dreamforce conference, now saying that its AI tools can handle tasks without human supervision and changing the way it charges for software.

The company is famous for ushering in the era of software as a service, which involves renting access to computer applications via a subscription. But as generative AI shakes up the industry, Salesforce is rethinking its business model for the emerging technology. The software giant will charge $2 per conversation held by its new “agents” — generative AI built to handle tasks like customer service or scheduling sales meetings without the need for human supervision.

The new pricing strategy also seeks to protect Salesforce if AI contributes to future job losses and business customers have fewer workers to buy subscriptions to the company’s software.

Salesforce is even leaning into the employee-replacement potential of the new technology. Its new AI agents will let companies increase their workforce capacity during busy periods without having to hire additional full-time employees or “gig workers,” Chief Executive Officer Marc Benioff said Tuesday during a keynote speech at the company’s annual Dreamforce conference.

Workers are ready for an AI-driven future, but the right training may not be in place | HR Dive

Artificial intelligence (AI) continues to infiltrate the workplace, whether for administrative tasks, creative ideation, collaboration or industry-specific work. However, companies are still navigating how to effectively and ethically implement AI as they work to understand how the technology will impact their business.

A new report from DeVry University, “Closing the Gap: Upskilling and Reskilling in an AI Era,” based on a survey of U.S. workers and employers, found that today’s workers are using AI regularly, but employers may be missing the mark when it comes to training them on proper AI usage at work. To maximize their investment in AI tools and improve employee productivity and skillsets, employers have an opportunity to activate more intentional AI training programs for their workforce. Doing so will help minimize risks associated with unguided adoption, keep pace with technological shifts and advance employees’ careers.

Chatbots can chip away at belief in conspiracy theories

AI chatbots' persuasive power can be leveraged to help combat beliefs in conspiracy theories — not just fuel them.

Why it matters: Belief in conspiracy theories can have dangerous consequences for health choices, deepen political divides and fracture families and friendships.

Researchers are trying to develop tools to help pull people out of conspiracy rabbit holes.

What they found: Conversing with a chatbot about a conspiracy theory can reduce a person's belief in that theory by about 20% on average, researchers report in a new study.

A reduction — though not as much — was seen even in those whose conspiracy beliefs were "deeply entrenched and important to their identities," the researchers wrote in Science.

"It's not a miracle cure," said Thomas Costello, a cognitive psychologist at American University and co-author of the study, adding that it's positive evidence this type of intervention could work.

"It's like uncovering the top of the rabbit hole so you can see the light."

How it works: More than 2,100 participants were asked to tell an AI system called DebunkBot — running on GPT-4 — about a conspiracy theory they find credible or compelling and why, and then present evidence they think supports it.

They were then asked to rate their confidence in the theory on a 100-point scale and indicate how important the theory was to their view of the world.

Next they engaged in three rounds of conversation with the AI system, which had been instructed to persuade users against the theory they said they believed in.

The AI was given the user's responses in advance so it knew what the person believed and could then tailor its counterarguments.

At the end of the interaction, users were asked to rate their level of belief in the theory again. The researchers followed up with about 1,000 participants two months later and found the belief-weakening effect persisted, though more experiments are needed to replicate that durability, Costello said.
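
For readers who want to see the shape of that procedure, here is a minimal Python sketch. It is not the researchers' code: the names `Session`, `run_conversation` and `mean_relative_reduction`, and the `reply_fn` stand-in for the GPT-4 call, are all hypothetical. It also assumes the roughly 20% figure is a reduction relative to each participant's starting rating.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple


@dataclass
class Session:
    """One participant's record (hypothetical structure, not from the study)."""
    theory: str         # the conspiracy theory the participant described
    evidence: str       # the evidence they offered for it
    pre_rating: float   # confidence on the 100-point scale before the chat
    post_rating: float  # confidence on the same scale after the chat


def run_conversation(
    theory: str,
    evidence: str,
    user_turns: List[str],
    reply_fn: Callable[[Dict, str], str],
    rounds: int = 3,
) -> List[Tuple[str, str]]:
    """Run a fixed number of rounds of conversation.

    reply_fn is a placeholder for the model call. It receives the
    participant's stated theory and evidence up front, so it can tailor
    its counterarguments, plus the conversation so far.
    """
    transcript: List[Tuple[str, str]] = []
    for user_msg in user_turns[:rounds]:
        context = {
            "theory": theory,
            "evidence": evidence,
            "history": list(transcript),
        }
        transcript.append((user_msg, reply_fn(context, user_msg)))
    return transcript


def mean_relative_reduction(sessions: List[Session]) -> float:
    """Average of (pre - post) / pre across participants.

    Assumption: the reported reduction is relative to the starting
    rating. Sessions with a starting rating of 0 are skipped.
    """
    changes = [
        (s.pre_rating - s.post_rating) / s.pre_rating
        for s in sessions
        if s.pre_rating > 0
    ]
    if not changes:
        raise ValueError("no sessions with a positive starting rating")
    return sum(changes) / len(changes)
```

Under that assumption, a participant who starts at 80 and ends at 64 shows a 20% reduction, as does one who moves from 50 to 40.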

AI wearable promises to help you remember everything | Fox News

The Plaud NotePin is a small, pill-shaped device that can be worn as a pendant, pinned to clothing or attached as a wristband. Its primary function is to record meetings, conversations and personal notes, which are then transcribed and summarized using advanced AI technology.

This innovative device offers versatile wearability, allowing you to choose how you want to use it in various situations. With an impressive battery life, the NotePin can record continuously for up to 20 hours on a single charge, making it ideal for even the longest workdays.

Not only does the device record audio, but it also generates summaries, mind maps and actionable items from your conversations. You can select from multiple AI models, including OpenAI's GPT-4 and Claude 3.5 Sonnet, to enhance your experience. Additionally, the NotePin comes with 64 GB of storage, ensuring that you never run out of space for your recordings.

ChatGPT can be used to improve autonomous vehicles, Purdue researchers find

Purdue University researchers discovered that large language model chatbots like ChatGPT can be used in autonomous vehicles to provide a more personalized experience for passengers, according to a Purdue News press release.

A team in the Lyles School of Construction Engineering is working on an autonomous vehicle that allows for human-like, indirect prompts like, “I need to be at the diner as soon as possible,” instead of direct prompts such as, “Increase the speed of the vehicle,” the press release says.

Researchers trained their chatbot with various direct and indirect prompts and defined a set of parameters, including traffic rules, road conditions and weather conditions, according to the press release. These parameters were uploaded to the cloud so that an experimental vehicle could easily access them.

How much energy can AI use? Breaking down the toll of each ChatGPT query - The Washington Post

AI data centers' power needs draw entrepreneurs, investors

Where the world sees a growing problem with power-hungry AI data centers, entrepreneurs and investors see a major opportunity.

• Why it matters: Data centers' thirst for electricity is so unquenchable that it's created a buzzy, new sector within Big Tech, Axios climate tech expert Katie Fehrenbacher reports in a deep dive.

The big picture: Amazon, Google and Microsoft, as well as smaller data center companies, will be spending a massive amount of money building out AI data centers and finding ways to power them.

• Entrepreneurs in renewable energy are hoping this major spigot of money will flow their way.

There are diverging paths that Big Tech can head down: a traditional gas-reliant grid, or more complicated clean energy options. Billions of dollars are at stake.

• Solar and wind will account for much of the new electricity needs. But there's a bottleneck when it comes to getting projects deployed.

For instance, data center operators want more energy that runs 24/7. Solar and wind vary unless paired with storage.

Microsoft, BlackRock form GAIIP to invest in AI data centers, energy

The Global Artificial Intelligence Infrastructure Investment Partnership is initially looking to raise $30 billion for new and existing data centers.

The fundraising, which could total $100 billion, will also be used to invest in the energy infrastructure needed to power AI workloads.

Microsoft CEO Satya Nadella said the initiative brings “together financial and industry leaders to build the infrastructure of the future and power it in a sustainable way.”

LinkedIn is using your data to train generative AI models. Here's how to opt out.

LinkedIn user data is being used to train artificial intelligence models, leading some social media users to call out the company for opting members in without consent.

The professional networking platform said on its website that when users log on, data is collected for details such as their posts and articles, how frequently they use LinkedIn, language preferences and any feedback users have sent to the company.

The data is used to “improve or develop the LinkedIn services,” LinkedIn said.

Some have taken issue with the feature, particularly the decision to auto-enroll users into it. “LinkedIn is now using everyone's content to train their AI tool -- they just auto opted everyone in,” wrote X user and Women In Security and Privacy Chair Rachel Tobac. “I recommend opting out now (AND that orgs put an end to auto opt-in, it's not cool).”

In a series of tweets, Tobac argued that social media users “shouldn't have to take a bunch of steps to undo a choice that a company made for all of us” and encouraged members to demand that organizations give them the option to choose whether they opt in to programs beforehand. Others chimed in with similar sentiments.

There are more than 120 AI bills in Congress right now

More than 120 bills related to regulating artificial intelligence are currently floating around the US Congress. Here’s a summary…

1 in 10 minors say classmates used AI to make explicit images of other kids: Report

One in ten minors reported that their classmates used artificial intelligence to make explicit images of other kids. That's according to a new report published by Thorn, a nonprofit working to defend kids from sexual abuse.

"A lot of the times when we get these new technologies, what happens is sexual exploitation or those predators exploit these technologies," said Lisa Thompson, vice president of the National Center on Sexual Exploitation.

To put together this report, Thorn gave over 1,000 minors, ranging in age from 9-17, from across the U.S. a short survey. Along with the 11% who reported knowing someone who created AI-explicit images, 7% reported sharing images. Nearly 20% reported seeing nonconsensual images and over 12% of kids 9-12 reported the same thing.

"It's gone mainstream and kids know how to use this so now we have literally children engaging in forms of image based sexual abuse against other children," Thompson said.

Forget ChatGPT: why researchers now run small AIs on their laptops

The website histo.fyi is a database of structures of immune-system proteins called major histocompatibility complex (MHC) molecules. It includes images, data tables and amino-acid sequences, and is run by bioinformatician Chris Thorpe, who uses artificial intelligence (AI) tools called large language models (LLMs) to convert those assets into readable summaries. But he doesn’t use ChatGPT, or any other web-based LLM. Instead, Thorpe runs the AI on his laptop.

Over the past couple of years, chatbots based on LLMs have won praise for their ability to write poetry or engage in conversations. Some LLMs have hundreds of billions of parameters — the more parameters, the greater the complexity — and can be accessed only online. But two more recent trends have blossomed. First, organizations are making ‘open weights’ versions of LLMs, in which the weights and biases used to train a model are publicly available, so that users can download and run them locally, if they have the computing power. Second, technology firms are making scaled-down versions that can be run on consumer hardware — and that rival the performance of older, larger models.

Researchers might use such tools to save money, protect the confidentiality of patients or corporations, or ensure reproducibility. Thorpe, who’s based in Oxford, UK, and works at the European Molecular Biology Laboratory’s European Bioinformatics Institute in Hinxton, UK, is just one of many researchers exploring what the tools can do. That trend is likely to grow, Thorpe says. As computers get faster and models become more efficient, people will increasingly have AIs running on their laptops or mobile devices for all but the most intensive needs. Scientists will finally have AI assistants at their fingertips — but the actual algorithms, not just remote access to them.

The Most Effective Negotiation Tactic, According to AI

Imagine knowing a negotiation tactic that could boost your earnings by 20%, takes less than three minutes to prepare, and only a few seconds to implement. This powerful approach isn’t a well-guarded Phoenician trade secret or a complex strategy devised by tacticians in hidden war rooms — it’s simply the act of asking open-ended questions. Astonishingly, our research has found that open-ended questions make up less than 10% of what most negotiators say during their conversations — a problem for anyone hoping to negotiate effectively.

Negotiation experts have long advised that the key to success lies in balancing inquiry (asking questions) with advocacy (persuasion). Yet, until now, it has been nearly impossible to determine with hard data what the ideal mix should look like. Our research takes us closer to cracking the negotiation code thanks to recent advances in AI and natural language processing.

By analyzing more than 60,000 speech turns from hundreds of negotiation interactions, we uncovered a deceptively simple truth: The more open-ended questions negotiators ask, the more money they make. Moreover, we discovered intriguing differences in the effectiveness of specific types of questions. Is asking “Why is this deadline important to you?” psychologically different — or better — than asking “How is this deadline important to you?” or “What is the importance of this deadline to you?” Our findings suggest that the wording of your questions can profoundly impact the responses you receive and the outcomes you achieve.

In this article, we explore how asking open-ended questions can transform your negotiation outcomes. Whether you’re negotiating a business deal, a salary increase, or convincing a toddler to eat broccoli, understanding the power of question-asking can give you a significant edge.

For the first time, NC police using AI to monitor police behavior

Police departments can generate thousands of hours of body camera footage in a matter of weeks. To manually review it all would be an impossible task for a human -- but not for artificial intelligence.

The Burlington Police Department is the first in North Carolina to purchase new technology through a company called Truleo that uses AI to quickly scan all footage, flagging both the good and the bad.

"Any body-worn camera project is only as good as the review of the footage," explained BPD Assistant Chief of Police Nick Wright. "We don’t have an issue that we’re trying to fix. We’re just trying to help our officers and our staff be better."

Wright said, prior to Truleo, the department reviewed roughly 1% of its footage, which was done by supervisors on a monthly basis. The department officially launched Truleo in late August. Just in the setup phase, Wright said the company's AI reviewed 38,000 hours of its footage.

"That is the key for us," Wright said. "A full, holistic view of all of our footage to really get a measure on how are we performing as an agency, where can we improve and highlight the attributes that our cops do on a daily basis when they do a great job."

'AI godmother' Fei-Fei Li raises $230 million to launch AI startup | Reuters

Initial funding led by Andreessen Horowitz, New Enterprise Associates, and Radical Ventures

World Labs focuses on 'spatial intelligence'

Li will continue some of her work at Stanford while building the startup

The U.S. Military Is Not Ready for the New Era of Warfare

We stand at the precipice of an even more consequential revolution in military affairs today. A new wave of war is bearing down on us. Artificial-intelligence-powered autonomous weapons systems are going global. And the U.S. military is not ready for them.

Weeks ago, the world experienced another Maxim gun moment: The Ukrainian military evacuated U.S.-provided M1A1 Abrams battle tanks from the front lines after many of them were reportedly destroyed by Russian kamikaze drones. The withdrawal of one of the world’s most advanced battle tanks in an A.I.-powered drone war foretells the end of a century of manned mechanized warfare as we know it. Like other unmanned vehicles that aim for a high level of autonomy, these Russian drones don’t rely on large language models or similar A.I. more familiar to civilian consumers, but rather on technology like machine learning to help identify, seek and destroy targets. Even those devices that are not entirely A.I.-driven increasingly use A.I. and adjacent technologies for targeting, sensing and guidance.

Techno-skeptics who argue against the use of A.I. in warfare are oblivious to the reality that autonomous systems are already everywhere — and the technology is increasingly being deployed to these systems’ benefit. Hezbollah’s alleged use of explosive-laden drones has displaced at least 60,000 Israelis south of the Lebanon border. Houthi rebels are using remotely controlled sea drones to threaten the 12 percent of global shipping value that passes through the Red Sea, including the supertanker Sounion, now abandoned, adrift and aflame, with four times as much oil as was carried by the Exxon Valdez. And in the attacks of Oct. 7, Hamas used quadcopter drones — which probably used some A.I. capabilities — to disable Israeli surveillance towers along the Gaza border wall, allowing at least 1,500 fighters to pour over a modern-day Maginot line and murder over 1,000 Israelis, precipitating the worst eruption of violence in Israel and Palestinian territories since the 1973 Arab-Israeli war.

Yet as this is happening, the Pentagon still overwhelmingly spends its dollars on legacy weapons systems. It continues to rely on an outmoded and costly technical production system to buy tanks, ships and aircraft carriers that new generations of weapons — autonomous and hypersonic — can demonstrably kill.

Air Force’s ChatGPT-like AI pilot draws 80K users in initial months

Since the Air Force and Space Force launched their first generative AI tool in June, more than 80,000 airmen and guardians have experimented with the system, according to the Air Force Research Laboratory. The lab told Defense News this week that the early adopters come from a range of career fields and have used the tool for a variety of tasks — from content creation to coding.

The services are using the system, dubbed the Non-classified Internet Protocol Generative Pre-training Transformer, or NIPRGPT, to better understand how AI could improve information access and to get a sense of whether there’s demand for the capability within their workforce.

“The information we gain from this research initiative will help us to understand demand, and to identify areas in which AI can give airmen and guardians time back on mission,” the lab, known as AFRL for short, said in an email. “This will in turn enable us to better prioritize feature updates and security considerations as we scale enterprise capabilities related to generative AI in the future.”

The military services have been exploring how they might use generative AI tools like ChatGPT to make daily tasks like finding files and answering questions more efficient. The Navy in 2023 rolled out a conversational AI program called “Amelia” that sailors could use to troubleshoot problems or provide tech support. The Army started experimenting with the Ask Sage generative AI platform earlier this year and announced this week that it’s integrated the system into operations.