AI Firms Are Running Large-Scale Experiments on Humans

In many fields, any human experimentation must go through rigorous external review. Not so with the introduction of AI into society at large.

A few years ago, my coauthors and I fielded a survey on students’ perceptions of free speech and self-censorship at UNC and other North Carolina universities. This was considered human subjects research and, as such, had to go through our IRB (Institutional Review Board). IRBs are federally mandated committees that ensure research involving human subjects does not harm the people taking part. This could be drug research, behavioral research or even plain surveys. IRBs were created because some researchers in the past had exploited vulnerable populations such as children, the poor, the incarcerated and minorities. IRBs exist to ensure participants know their rights, are treated ethically and have their welfare protected.

Questions IRBs ask the researcher include the following:

Have the participants given informed consent?

After the research begins, will there be ongoing communication with the participants about adverse events or any other results?

Will the participants’ privacy be protected?

Is participation in the participants’ best interests?

I’m not going to lie. IRB review is a pain: it takes a lot of time, the board can focus on minutiae, and the whole process is bureaucratic. However, I understand its intent, and it can catch ethical problems and potential harms in research. It keeps researchers honest.

For pharmaceuticals, the process goes far beyond the IRB. Several phases of testing are involved, so bringing a drug to market takes 10 to 15 years on average.

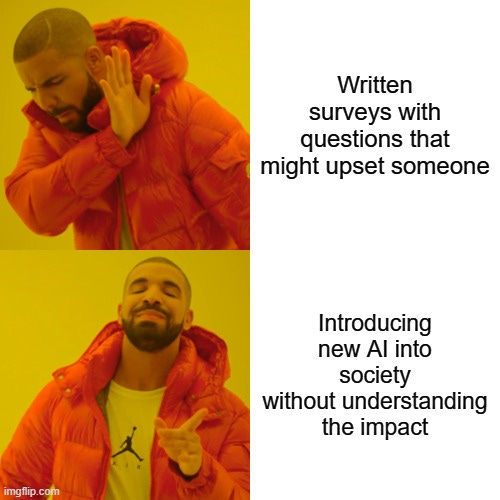

These thoughts occurred to me when I read an article about OpenAI exploring how to “ethically” make AI erotica. I found it an interesting contrast: researchers must go through a rigorous review process for something as simple as a survey, and drugs take 10 to 15 years to come to market, yet firms can introduce powerful new technologies into the world without anyone knowing the consequences and without any external review.

Don’t get me wrong. I’m definitely not a fan of bureaucracy and government intervention, and I am amazed daily at how AI makes me more knowledgeable, productive and creative. But you have to admit it’s more than a little mind-blowing that so much care is taken for work with little potential for harm (simple survey questions asked of a very small number of people) and so little thought is given to introducing a new technology that will fundamentally alter the lives of everyone on the planet in ways unknown.

Now, I admit it’s a little weird for me of all people to be raising this issue. I spent 30 years in high tech, am generally a fan of capitalism and am now a professor at a top business school. I teach a course on AI start-ups, am the co-chair of my university’s generative AI committee and speak and write widely about AI. Lastly, I’m working hard to help my university be a leader in AI. So yes, it’s a little odd.

But in my defense, as I’ve studied AI and its promise and perils, it has become obvious that it has far-reaching implications. I think it’s safe to predict that its impact will easily eclipse that of the cell phone, and we know how much mobile phones have changed our lives.

Kranzberg's First Law of Technology:

Technology is neither good nor bad; nor is it neutral.

We know new technologies will change our world; the printing press, steam engine, airplane and computer fundamentally altered our lives in ways both positive and negative. Yes, the cotton gin boosted cotton production and generated economic growth in the U.S. and U.K. However, it also expanded slavery and helped lead to the Civil War. When it was introduced, no one could foresee these outcomes. In sum, every new invention has produced winners, losers and unintended consequences. With AI, the impact on humanity will be by far the greatest, and the unknowns far less discernible.

We know it can make us more productive, creative and smarter. We know it can make organizations more efficient and effective, create new jobs and industries and potentially even make our lives more interesting and enjoyable. But we also know it will lead to job losses in certain fields, is biased, enables disinformation, hallucinates and, oh by the way, could lead to the end of humanity. Even if AI doesn’t kill us, it will change how we live, think and love as humans, as I’ve written in “AI won’t kill us, it will seduce us” and “Was ‘learn to code’ bad advice given AI?”

Now, to be fair, none of the technologies introduced in the past went through a rigorous IRB process, and all of them were questioned as to their usefulness versus their downsides. This questioning goes all the way back to Socrates and the invention of writing.

In Plato’s Phaedrus, Socrates warns of the perils of adopting writing. He recounts a conversation between the Egyptian god Theuth and King Thamus of Egypt. Theuth created many inventions and arts, such as numbers, astronomy and games like dice, and in the discussion he is trying to convince Thamus of the usefulness of his technologies.

Below is Socrates speaking.

The story goes that Thamus said much to Theuth, both for and against each art, which it would take too long to repeat. But when they came to writing, Theuth said: “O King, here is something that, once learned, will make the Egyptians wiser and will improve their memory; I have discovered a potion for memory and for wisdom.”

Thamus, however, replied: “O most expert Theuth, one man can give birth to the elements of an art, but only another can judge how they can benefit or harm those who will use them. And now, since you are the father of writing, your affection for it has made you describe its effects as the opposite of what they really are. In fact, it will introduce forgetfulness into the soul of those who learn it: they will not practice using their memory because they will put their trust in writing, which is external and depends on signs that belong to others, instead of trying to remember from the inside, completely on their own.

You have not discovered a potion for remembering, but for reminding; you provide your students with the appearance of wisdom, not with its reality. Your invention will enable them to hear many things without being properly taught, and they will imagine that they have come to know much while for the most part they will know nothing. And they will be difficult to get along with, since they will merely appear to be wise instead of really being so.”

Obviously, writing was a huge gain for humanity; otherwise, we would not have Plato’s account of this anecdote, not to mention the many practical benefits writing provides. But at the same time, it’s clear some skills were lost with the introduction of this new technology. How we lived and thought changed.

Back to IRBs.

What if AI firms had to consider the effects of their products on the wider world by answering IRB questions? Here are some of those questions, along with what I feel are the honest answers.

Q) Have the users of AI been given informed consent?

A) Me - We have no clue as to the long-term impacts of AI, so how can we as users give informed consent?

Q) As the AI is rolled out, will there be ongoing communication with the users of AI about adverse events or any other results?

A) Me - A lot of articles are being written about the current and potential adverse impacts of AI, and AI firms do try to respond to the criticism with product improvements. At the same time, however, they keep rolling out new products and capabilities before we have had time to absorb and understand the impact of the previous ones.

Q) Will AI users’ privacy be protected?

A) Me - Many AI firms will do their best to protect users’ privacy, but there will inevitably be data breaches, and some firms will use users’ data unethically.

Q) Is the use of AI in the users’ best interests?

A) Me - We are in no way remotely capable of knowing the answer to this question. And again, AI firms are releasing product after product so quickly that predicting future impacts is impossible.

How, then, do we proceed? Whose responsibility is it to understand the implications of artificial intelligence? Of course, the firms building it should be doing this research, and some, like OpenAI, are. But their motivations keep them from being disinterested observers. Government is another option, and it must play a role. But governments still haven’t figured out how to deal with the smartphone revolution, much less AI. Many thought leaders and journalists debate these issues, and they play a very valuable role. To these groups I would add universities.

Universities study the very disciplines that will be affected by AI: law, journalism, business, political science and more. There are scholars in each area who, with some research on AI, could project scenarios for an AI-influenced future. Scholars could work in cross-disciplinary teams to study specific areas such as bias, deepfakes and AI companions.

I plan to write soon about the role universities must play to help society sort out the implications of AI. Stay tuned.
