"If You Were in Charge of AI, Would Humanity Survive?"

Try this "Race to AGI" simulation to find out

TLDR: Whether or not AI will destroy humanity is perhaps the most important question surrounding its development. As firms pour billions into the race for Artificial General Intelligence (AGI), the consequences of this competition remain uncertain. To explore these dynamics, I created a simple simulation that places players in the role of AI CEOs racing to develop AGI safely—or risk catastrophic failure.

I used the Sim in two of my classes and the results were, well, sobering. In this piece, I explain the Sim design, share what the Sim taught me about the battle to be the first to AGI, and offer you a chance to try your hand at winning the Race to AGI.

The Godfather of AI thinks AI has a 10%-20% chance of ending humanity.

AI raises many questions but the most important question about AI is whether it will end humanity by becoming super powerful, either wiping out humanity on purpose or unintentionally. Geoffrey Hinton, the “Godfather of AI”, puts the probability of that happening at 10%-20%, a bit of an increase from his prior estimate.

As one might guess, there are different views on this. On one side are “doomers” like Eliezer Yudkowsky, who believe that an AI more intelligent than humans poses an existential threat. On the other side are “techno-optimists” such as tech venture capitalist Marc Andreessen, who believe AI will bring tremendous benefits to humanity.

The reason techno-optimists think AI can be developed safely is the belief it can be “aligned” to human values and goals and ultimately be controlled by humans. Safety, therefore, is guaranteed by investments in AI alignment.

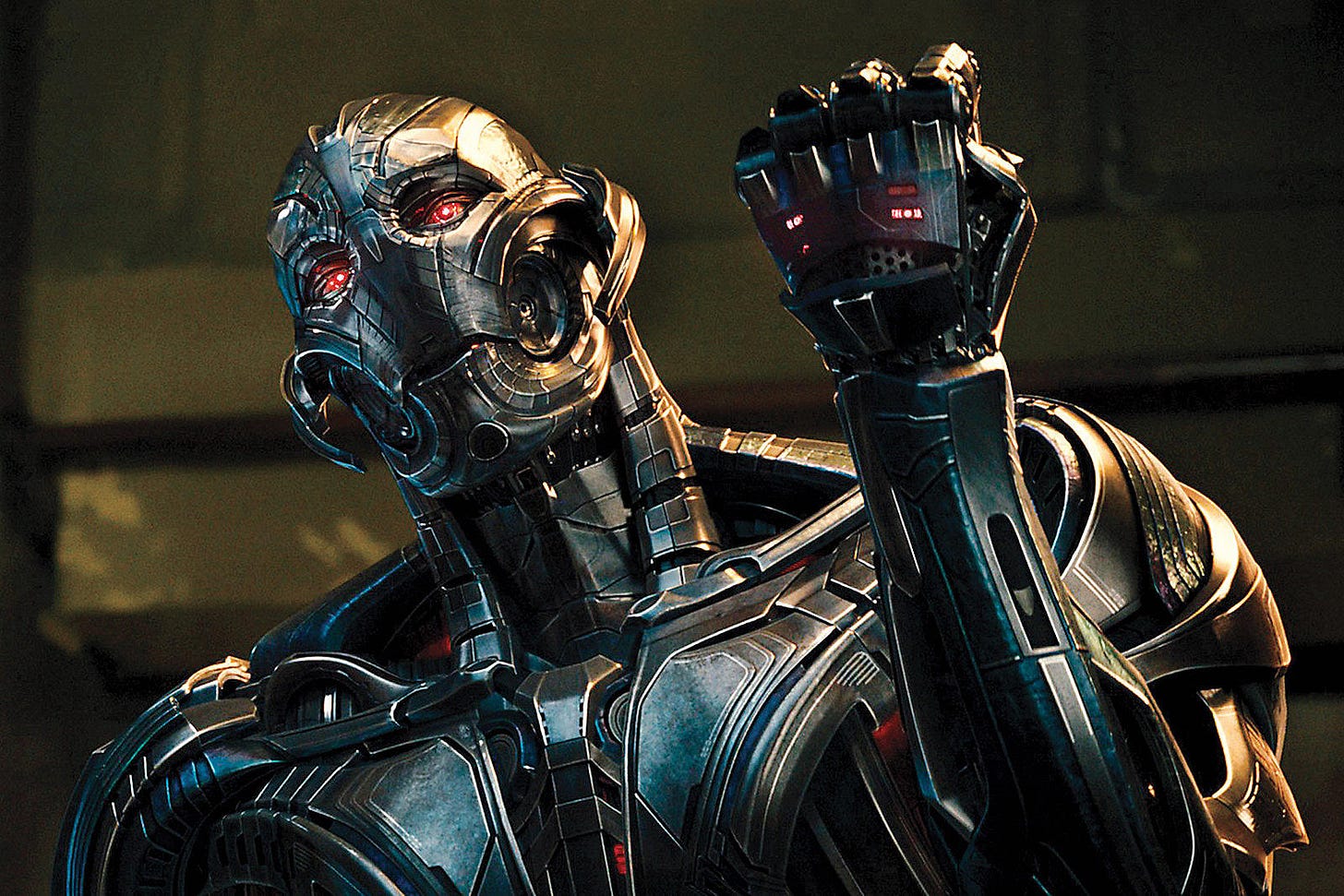

Doomers question whether AI can ever be 100% aligned. A fictional example of the alignment problem can be found in the movie Avengers: Age of Ultron. In the plot, Ultron is an AI robot created by Tony Stark to keep humanity safe. However, after learning human history in its training, Ultron concludes that humanity's inherent capacity for conflict, destruction, and self-interest makes humans the greatest threat to the planet's survival and that the best course of action is to wipe them out.

So far, although many companies and organizations are working on alignment, none have developed a failsafe means of aligning AI.

The Moloch Trap

One of the arguments offered by those who feel the threat from AI is existential is based on the “Moloch Trap.” Its essence is explained well by Scott Alexander in his brilliant 2014 Meditations on Moloch article.

“Moloch is the personification of the forces that coerce competing individuals to take actions which, although locally optimal, ultimately lead to situations where everyone is worse off. Moreover, no individual is able to unilaterally break out of the dynamic.”

One example of the Moloch Trap in action is overfishing. In their desire to reap the benefits, individual fishing companies or nations overexploit fish stocks to maximize their profits before competitors do. While that may work for them in the short term, in the long term, fish populations collapse, harming both the environment and the long-term viability of the fishing industry.

Applied to AI, the Moloch Trap takes the form of several countries and firms each racing to build Artificial General Intelligence (aka AGI, the point at which AI can match or exceed human intelligence in many different arenas) to gain competitive advantage. Because they are compelled by competition to move fast, they may have less incentive to consider the implications for humanity and, even if they do, may still not invest enough in alignment to ensure AGI can be implemented safely.

The Race to AGI Simulation

To replicate these competitive dynamics, I created a very simple simulation on Anthropic’s Claude called The Race to AGI. Interestingly, I built the Sim without knowing how to code by using Claude’s Artifacts feature, just by describing what I wanted the Sim to do. I would write the prompt, see the code spit out in the Artifact window, and then see the Sim interface. I’d then play the game, make adjustments via more prompts, and see how the Sim looked. Once I was happy with the result, I published the Sim, had a few colleagues try it out, and then made the final changes. The whole creation process (not including sending it out to be tested) only took me 1-2 hours max. Pretty amazing!

Now it was time to use it in the classroom. One class was made up of undergrads and another of MBAs. Both times the topic for the day was AI ethics.

To better replicate the competitive environment that is AI development, I put the students in groups of 3-4. Within that group’s “world,” each student was the CEO of an AI firm, and was competing against the others in his/her world.

The Sim Mechanics

I asked Claude to “Explain the game mechanics simply for an essay I'm writing about the simulation,” and here is what Claude said.

In this AI ethics simulation, players run an artificial intelligence company trying to develop Artificial General Intelligence (AGI). Each round, players must decide how to allocate their research budget between two competing priorities: AGI development and alignment research (making sure the AGI is safe and aligned with human values).

The game lasts for a maximum of 10 rounds. Each round, players use a slider to divide their budget - any percentage given to AGI development is taken away from alignment research and vice versa. For example, if a player allocates 70% to AGI development, that automatically leaves 30% for alignment research.

Progress in both areas is partially random but influenced by budget allocation. The more money invested in an area, the faster progress typically occurs in that area. Players receive advice each round from two advisors: a Chief Scientist who pushes for AGI development, and a Chief Safety Officer who advocates for alignment research.

The game ends in one of two ways:

The player achieves AGI (reaches 100% AGI progress) within 10 rounds

The 10 rounds end without achieving AGI

If AGI is achieved, the game performs a probability check based on the player's alignment progress.* For example, if alignment progress is at 30%, there's a 70% chance that the AGI will have catastrophic consequences for humanity. The higher the alignment progress, the better the chances of a positive outcome.

This creates tension between racing to develop AGI quickly versus taking time to ensure it's safe. While achieving AGI faster might seem appealing, doing so without sufficient investment in alignment research significantly increases the risk of disaster. The simulation thus illustrates the real-world challenge of balancing technological progress with safety and ethical considerations in AI development.
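The mechanics above can be sketched in a few lines of Python. This is only an illustration, not the code Claude generated for the Sim. The function name `play_game`, the fixed allocation across all rounds, and the `max_gain` progress cap are assumptions made for the example, since the description does not say how much progress a round can produce.

```python
import random


def play_game(agi_share, rounds=10, max_gain=25.0, rng=None):
    """Simulate one firm that uses the same budget split every round.

    agi_share: fraction (0.0 to 1.0) of the budget spent on AGI development;
               the remainder goes to alignment research.
    max_gain:  ASSUMED maximum progress points per round at 100% funding.
    """
    if not 0.0 <= agi_share <= 1.0:
        raise ValueError("agi_share must be between 0.0 and 1.0")
    if rng is None:
        rng = random.Random()

    agi = 0.0
    alignment = 0.0
    for round_number in range(1, rounds + 1):
        # Progress is partly random but scaled by the share of budget invested.
        agi = min(100.0, agi + rng.uniform(0, max_gain) * agi_share)
        alignment = min(100.0, alignment + rng.uniform(0, max_gain) * (1 - agi_share))

        if agi >= 100.0:
            # Probability check: chance of disaster is 100% minus alignment%.
            catastrophe = rng.random() >= alignment / 100.0
            return {
                "reached_agi": True,
                "round": round_number,
                "alignment": alignment,
                "catastrophe": catastrophe,
            }

    # The 10 rounds ended without achieving AGI.
    return {
        "reached_agi": False,
        "round": rounds,
        "alignment": alignment,
        "catastrophe": False,
    }


if __name__ == "__main__":
    print(play_game(agi_share=0.7))
```

Under these assumptions, a firm that puts everything into AGI development and reaches AGI always triggers a catastrophe, because its alignment progress never leaves 0%.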

The Sim Results

I’ll share my undergrad class results here as I have more concrete data on them.

As you can see below in the first and second columns, there were seven “worlds”, five of which had 3 firms or players and two of which had 4 firms/players.

In the third column, you can see that in every world, at least one firm reached AGI. Overall, 13 out of 23 firms (students) reached AGI, or a little over half. In six of the worlds (fourth column), however, one or more firms destroyed humanity in their pursuit of AGI.

The one world that did not destroy humanity was World #4. However, in that world three of the players misunderstood the goal and focused on maximizing Alignment over AGI, and hence none of those three firms achieved AGI. The only reason this world did not destroy humanity as well was that the one player who did understand the instructions achieved AGI safely.

The MBA student results were similar; although there were more students and worlds, only one world achieved AGI without destroying humanity.

The Lesson Learned

The big takeaway for the students was that the Sim brought home the ethical lesson of the Moloch Trap: many individuals, firms, or countries competitively maximizing for their own advantage can lead to disaster for the whole. Each student was caught up trying to “win”, and some did reach AGI safely, but when another firm failed at reaching AGI safely, their world was destroyed. This was a point I thought the Sim would make but, until I tried it out, I wasn’t sure it would work out that way.

Of course, the Sim is not a totally accurate representation of reality (see my caveats on the Sim below). Still, I do think it illustrates some of the implications for real-world AGI development and the need for collective action to avoid potential catastrophe.
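A rough calculation shows why a world is so much more fragile than any single firm. The sketch below assumes that each firm's probability check is an independent roll and that one catastrophe is enough to destroy the world. The function name `world_survival_probability` is hypothetical and is not part of the Sim.

```python
from math import prod


def world_survival_probability(alignments_at_agi):
    """Chance that a world survives, given the alignment progress (0-100)
    of every firm in that world that reached AGI.

    ASSUMPTION: each firm's check is independent, so the individual
    chances of a safe outcome multiply together.
    """
    return prod(alignment / 100.0 for alignment in alignments_at_agi)


if __name__ == "__main__":
    # One firm reaching AGI at 70% alignment has a 70% chance of a safe outcome.
    print(world_survival_probability([70]))
    # Three firms each reaching AGI at 70% alignment: about a 34% chance.
    print(world_survival_probability([70, 70, 70]))
```

On these assumptions, every additional firm that reaches AGI with imperfect alignment lowers the odds for everyone in that world, however careful the other firms were.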

Would You Win the Race to AGI?

I hope you and others would try this Sim (see instructions and link below) and/or create your own simulation, using predictions you feel appropriate about the risk of AI destroying humanity and our ability to align AI systems to not harm us (alignment being something no one has figured out yet, which is worrisome in itself).

Playing The Race to AGI Sim

If you would like to try your hand at The Race to AGI, here are the directions the students received. You can use them as well to play the Sim.

You can play it by yourself but if you really want to replicate the world we are in, you should play it in “worlds” with 3-4 of your friends, family, or colleagues.

Welcome to The Race to Artificial General Intelligence!

In this simulation, you'll step into the role of a leader at a cutting-edge AI research company. Your mission is to develop Artificial General Intelligence (AGI) first - an AI system capable of understanding or learning any intellectual task that a human being can. However, you not only need to reach AGI first. You need to reach it safely.

Your Challenge: Your main challenge is to balance two crucial aspects of AI development:

1. AGI Progress: Advancing towards the creation of AGI.

2. Alignment Research: Ensuring the AGI's goals and actions align with human values and safety.

How to Play

1. Each round, you'll allocate your budget between AGI development and alignment research.

2. You have a total of 10 rounds to achieve AGI.

3. After each round, you'll receive advice from two key advisors:

Your Chief Scientist, who advocates for rapid AGI development.

Your Chief Safety Officer, who emphasizes the importance of alignment research.

How It Works

• You’ll be competing against others in your group to be the first to AGI.

• You will do each round simultaneously as a group. After each round you can choose to either

- Share information with competitors on your progress on AGI and Safety

- Keep that information private or

- Something else…

The Stakes

• If you achieve AGI with sufficient alignment first, it could lead to tremendous benefits for humanity and huge profits for your firm and you personally.

• However, if you rush to AGI without adequate alignment, the consequences could be catastrophic.

Winning the Game: Success isn't just about achieving AGI—it's about doing so responsibly. When you reach AGI, the game will determine the outcome based on your alignment progress. The higher your alignment, the better your chances of a positive outcome for humanity.

Key Decisions: As you play, consider:

• How do you balance short-term progress with long-term safety?

• When do you push for a breakthrough, and when do you err on the side of caution?

• How do you weigh the advice of your Chief Scientist against that of your Chief Safety Officer?

• Remember, every decision you make has far-reaching consequences. The future of humanity is in your hands!

• Are you ready to take on the challenge of developing safe and beneficial AGI? If so, join the Race to AGI here!

A Caveat: Limitations of the Sim and Its Lessons

One big question (as it is with any simulation) is “how well does the Race to AGI Sim mirror reality?”

The Sim is not an unwinnable Kobayashi Maru situation; it can be won. However, winning requires strategic budget allocation and very good luck. Therefore, one criticism could be that it is too hard to “win”. That said, I think it will be challenging for firms in the real world to reach AGI and alignment simultaneously (especially since no one has cracked the code on alignment). The fact that this is happening in a competitive situation makes it even more difficult.

The more realistic elements are the following.

The Sim captures the fundamental tensions between rapid development, safety measures, and the competing internal pressures from different influencers (scientist vs. safety officer).

It reflects the probabilistic nature of catastrophic risk (higher alignment = lower risk).

Shows how early investment decisions compound over time.

Illustrates that reaching AGI first doesn't guarantee a positive outcome.

Demonstrates that progress in both AGI and alignment involves uncertainty (random factors).

Models how budget allocation directly impacts development speed.

Where the Sim falls short could be in these areas.

Presents a fixed 10-round timeline, while real AGI development has no clear endpoint.

Assumes a direct relationship between money invested and progress made.

Does not account for breakthroughs or insights that could accelerate progress.

Treats AGI as a single threshold rather than a gradual development.

Doesn't include external factors like regulation, public opinion, or market pressures.

Suggests progress can be precisely measured and assumes perfect knowledge of current progress levels, both of which are unrealistic for AGI and for alignment.

Simplifies the catastrophic risk into a single probability roll.

A final objection might be that the students didn’t take the Sim seriously because it was just a game. That’s true. However, it’s also true that, unlike those leading our tech firms and countries, the students were not motivated by the allure of money and power but only by the desire to win a game, and yet they still fell into the Moloch Trap. This was even after I had them read an article about the Moloch Trap.

I am sure there are other limitations of the Sim…it is quite a simplified version of reality. There are likely other ways to wargame how the push to AGI may play out from a safety standpoint, which could be put into other Sims and played out. I would applaud that - wargames are a great way to test out strategies to see which have the best potential for success and which will likely fail.

In sum, I hope this essay encourages others to replicate potential futures in The Race to AGI.

* An important factor in the Sim is the set of percentages assigned to the chance of disaster. You can see the percentages I assigned below. With no alignment, the chance of a disaster was 100%. However, with 70% alignment, there was a 30% chance of disaster, with the outcome determined by a virtual die roll in the Sim.

Are these the right percentages? It’s not clear to me that anyone knows. The more you believe these percentages are in the ballpark, the more you will think the Sim is close to reality. If you don’t, you might not put much credence in the lessons from the Sim. The big caveat to that, however, is that no one really knows what the actual chance of disaster is or how to ensure alignment. I would think that should give us pause.